Beyond Touch: Tomorrow’s Devices Will Use MEMS Ultrasound to Hear Your Gestures

Touch screens are on the way out; piezoelectric gesture control is on the way in

Today, we control our electronic world by touch—we tap, we swipe, we pinch and zoom. The touch interface went from being a novelty back in 2007, when Apple first brought it to the iPhone, to a ubiquitous feature in less than a decade. It is so commonplace that toddlers declare nontouch displays broken when the screen doesn’t respond to their fingers. But touch isn’t the end of the story. You can’t use it when you’re dripping in the shower, you can’t wear a touch screen on your eyeglasses, and you probably won’t explore virtual reality by swiping and pinching a handheld slab of glass.

Fast-forward to 2020, when, I predict, the touch interface will be used on a few phones and tablets, but not much else. You’ll start your morning run by sweeping your fingers near your fitness band. Tiny ultrasonic transceivers embedded in the band will sense the motion of your fingertips, identify the gesture, and turn on your favorite tunes. After your run, if your cellphone rings while you are showering, you’ll answer the call by sticking your arm out of the shower to pass your palm somewhere above the phone’s display. Later, in the car, navigation alerts and incoming text messages might threaten to distract you, but you’ll dismiss these with a flick of your hand.

This world of gesture magic is coming, thanks to recent breakthroughs in ultrasonic microelectromechanical systems, or MEMS.

The logic behind the gesture-based interface is simple: Humans evolved to use their hands to interact with their environment, so gestures come naturally to all of us. While speech recognition has improved dramatically in recent years, it isn’t always appropriate to issue verbal commands, and the need for key phrases (“Okay, Google”) makes it ill suited for simple controls. For example, in some cars, you can control the audio with your voice, but first you have to press a button to activate the voice control, and then you utter three separate voice commands in sequence: “radio,” “volume,” “up.” That’s not as easy as touching a volume button, and gesture control can be just as easy—or easier: You don’t have to find the button; just wave your hand in front of the radio.

Virtual reality and augmented reality offer clear examples of the limitations of touch interfaces and speech recognition. After all, it isn’t particularly immersive to manipulate virtual objects using a touch screen or voice commands: “Okay, Google, swing the sword.”

Cameras might seem an obvious way to implement gesture-based user interfaces because every laptop, tablet, and smartphone has a camera. But most people are uncomfortable about the idea of “always-on” cameras in their personal electronics. (A photograph of Facebook founder Mark Zuckerberg’s laptop that recently made the rounds on the Internet revealed that even he has tape blocking the camera.) Capturing video, as would be necessary for tracking gestures, is also pretty power intensive: Users of Google Glass reported that the battery ran down after just 30 minutes when shooting video.

And cameras have an even more fundamental problem. They capture only two-dimensional images, making it difficult to separate a user’s hand from a complex optical background. If you’ve ever misjudged the distance to the car behind you while parallel parking with only your rearview mirror to guide you, you’ll agree that it can be awfully hard to gauge distance by sight alone.

The computational cost of using images has to be considered, too. Even on Microsoft’s powerful Xbox One console, many developers embraced the option of disabling the software supporting the Kinect, which uses an infrared camera to track motion, so that they could access the 10 percent of the graphics processing unit reserved for the Kinect’s image processing.

In recent years, there have been a few efforts to implement gesture-sensing technologies that don’t involve cameras. The three main contenders are radar, optical infrared, and ultrasound.

Google has developed a miniature 60-gigahertz radar system aimed at gesture sensing, which it calls Project Soli. Soli’s latest prototypes, built into a smart watch, reportedly consume 54 milliwatts, which might seem paltry but is in fact quite a bit for a watch. For this radar to be useful as a primary interface, the company will have to reduce its power consumption by another order of magnitude. And the frequency band Google proposes to use for this interface, 60 GHz, is starting to get crowded with communications networks in the form of IEEE 802.11ad, or WiGig.

Optical sensors are another approach being used for gesture-based interfaces.

Infrared sensors based on low-cost IR LEDs are already used today as proximity sensors in smartphones—that’s how your smartphone knows to disable the touch screen when you’re holding your phone to your ear during a call. These proximity sensors measure the intensity of IR light reflected from nearby objects. Because the reflected intensity depends on the size and color of the object, it provides a poor measure of distance, although it’s good enough to tell if your phone is near your head or not.

Some newer infrared-based sensors rely on time-of-flight measurement instead of light intensity. These can be more accurate, but detecting the time of flight for light requires a wideband receiver and therefore comes at the cost of increased power consumption. The latest infrared time-of-flight sensor from STMicroelectronics, for example, consumes 20 mW at a measurement rate of 10 samples per second.

And all infrared sensors have to contend with the presence of other infrared sources, such as halogen lamps and daylight. One vendor’s infrared time-of-flight sensor has an impressive 2-meter range indoors, although that’s reduced to just 50 centimeters outdoors under overcast skies. Performance in full sun is not specified, presumably because the sensor doesn’t work at all in those conditions.

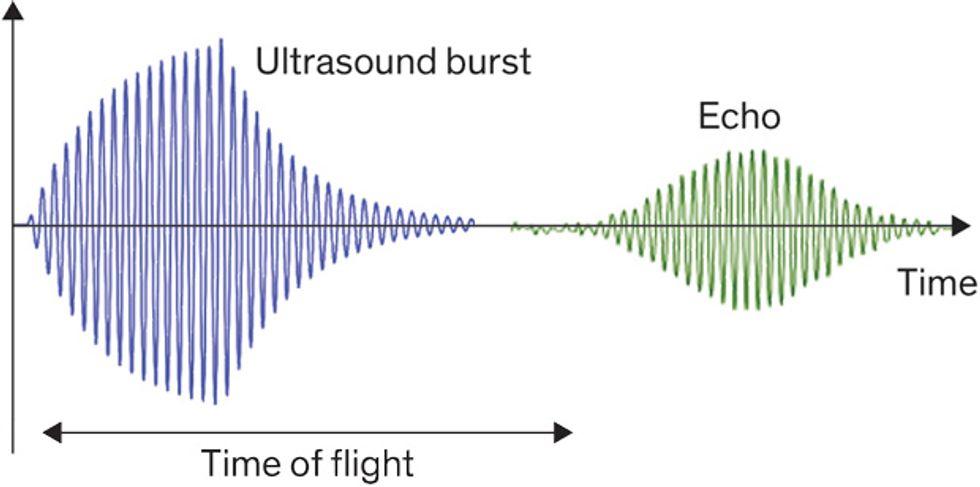

Now consider the case for ultrasound. As children, we learned that bats and dolphins use ultrasonic echolocation. Curiously, most bats and dolphins are not completely blind. Instead, they use ultrasound to complement their vision, enabling them to identify the size, range, position, and speed of prey. They make time-of-flight measurements by emitting a pulse of high-frequency sound and listening for returning echoes. In air, the echo from a target 2 meters away returns in around 12 milliseconds, a timescale short enough for ultrasound to track even fast-moving targets but long enough to separate multiple echoes without requiring a lot of processing bandwidth.
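The 12-millisecond figure is just the round-trip path (out to the target and back) divided by the speed of sound. A minimal sketch, assuming 343 m/s for dry air at about 20 °C; the function names are hypothetical. The last helper shows why the timescale matters: if a sensor waits for each echo before firing its next pulse, the measurement rate sets the farthest range it can measure without echoes overlapping.

```python
# Pulse-echo (time-of-flight) ranging in air: a back-of-the-envelope sketch.
# Assumption: speed of sound of 343 m/s (dry air at about 20 degrees C).
SPEED_OF_SOUND_AIR = 343.0  # m/s


def echo_delay_s(target_distance_m: float, c: float = SPEED_OF_SOUND_AIR) -> float:
    """Round-trip delay: the pulse travels out to the target and back."""
    return 2.0 * target_distance_m / c


def range_from_delay_m(delay_s: float, c: float = SPEED_OF_SOUND_AIR) -> float:
    """Invert a measured delay: the range is half the round-trip path length."""
    return c * delay_s / 2.0


def max_unambiguous_range_m(rate_hz: float, c: float = SPEED_OF_SOUND_AIR) -> float:
    """Farthest target whose echo returns before the next pulse is emitted."""
    return c / (2.0 * rate_hz)


if __name__ == "__main__":
    delay = echo_delay_s(2.0)
    print(f"2 m target: echo after {delay * 1e3:.1f} ms")  # about 11.7 ms
    print(f"5.83 ms delay -> {range_from_delay_m(5.83e-3):.2f} m")
    print(f"At 40 pulses/s, echoes from up to {max_unambiguous_range_m(40):.2f} m do not overlap")
```

In practice temperature shifts the speed of sound by roughly 0.6 m/s per degree, so a real sensor would likely compensate for it; that correction is left out here.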

Ultrasonic range-finding in the human world is just over 100 years old, and it still relies on piezoelectric transducers that aren’t so different from the quartz transducers first demonstrated by Paul Langevin in 1917 as part of France’s antisubmarine effort during World War I. Since then, ultrasound has been used extensively in marine applications (on everything from small pleasure boats to nuclear submarines), in medicine, for nondestructive testing, and for some automotive applications both glamorous (think Tesla’s Autopilot) and mundane (parking-distance sensors).

To date, however, ultrasound hasn’t been used much in consumer electronics. One reason for this omission is that while solid-state integrated circuit technology has had a huge impact on RF and IR transducers (the first IR LED was commercialized by Texas Instruments in 1962, shortly after TI’s Jack Kilby invented the integrated circuit), the materials and design of ultrasonic transducers haven’t changed very much over the years. Recent innovations in MEMS acoustic transducers, however, are making it possible to use ultrasound in the consumer world.

MEMS technology has already made a big splash in the area of miniature microphones. Acoustic MEMS components first broke into the cellphone market in 2003, when the popular Motorola Razr phone incorporated a MEMS microphone from Knowles Acoustics. Today, MEMS microphones—which are smaller, consume less power, and include more onboard signal processing than traditional electret condenser microphones—are in virtually all smartphones. Such microphones are currently being produced by Akustica, Cirrus Logic, Infineon, InvenSense, Knowles, STMicroelectronics, and others.

More recently, a few companies have begun to exploit the ability of MEMS microphones to receive near-audio-band ultrasound. While manufacturers’ data sheets specify performance in the audio band (up to approximately 20 kilohertz), the MEMS transducers inside these microphones are often capable of receiving signals at over twice this frequency.

The first MEMS-based ultrasound application to make it to the mass consumer market was Qualcomm’s Snapdragon digital pen, which is incorporated in the HP Slate line of tablets. Qualcomm picked up this technology when it acquired the Israeli ultrasound-technology company EPOS Development in 2012. This ultrasonic stylus can be tracked at a distance from the surface of the tablet, allowing the user to write on a paper pad adjacent to the tablet.

Another ultrasound innovation coming to consumer devices is Beauty, a product introduced this year by Elliptic Labs, of Norway. Beauty is a software-only ultrasound approach that uses a smartphone’s existing earpiece and MEMS microphone to replace the IR proximity sensor that detects when the phone is close to your ear, so that the phone can disable the touch screen and display.

These early applications of consumer ultrasound rely on conventional MEMS microphones, which work well as ultrasound receivers. These microphones, however, are based on capacitive transducers, which are ill suited to transmitting ultrasound in air.

A capacitive microphone transducer consists of two capacitor plates—the backplate and the membrane—separated by a small air gap (on the order of 1 micrometer wide). This type of transducer receives sound by detecting the change in capacitance that occurs when the membrane deflects under an incident sound wave. Normally, this is all that microphones do—receive sound. But they can be made to transmit sound by reversing this process, deflecting the membrane to launch a sound wave.

The problem here is that a good receiver requires a very small air gap between the membrane and backplate. That’s because the receiver’s acoustic sensitivity scales with the inverse square of the gap, so increasing the gap by, say, a factor of three reduces the sensitivity by a factor of nine. While small gaps are good for receiving sound, they pose a problem for transmitting sound because a small gap limits the membrane displacement and therefore the maximum sound pressure level (SPL) that can be transmitted. The SPL is proportional to the product of the acoustic impedance of the surrounding medium—air—and the frequency and amplitude of the membrane’s motion. Capacitive transducers, such as capacitive micromachined ultrasonic transducers (CMUTs), work well in medical applications where the surrounding medium is fluid, the ultrasound frequency is greater than a few megahertz, and high voltage is available to drive the transducer.
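The inverse-square scaling follows from an idealized parallel-plate model: the capacitance is C = ε0·A/g, so a small membrane deflection Δx changes it by roughly ε0·A·Δx/g². At a fixed bias voltage V, the output charge ΔQ = V·ΔC scales the same way. The sketch below is illustrative only: the membrane area and deflection are hypothetical, and it ignores fringing fields, the bent shape of a real membrane, and the possibility of raising the bias when the gap is larger.

```python
# Parallel-plate model of a capacitive (condenser) MEMS microphone.
# Dimensions below are hypothetical, chosen only to illustrate the scaling.
EPSILON_0 = 8.854e-12  # permittivity of free space, F/m


def capacitance_f(area_m2: float, gap_m: float) -> float:
    """Parallel-plate capacitance C = eps0 * A / g (fringing fields ignored)."""
    return EPSILON_0 * area_m2 / gap_m


def capacitance_change_f(area_m2: float, gap_m: float, deflection_m: float) -> float:
    """Exact change in C when the membrane moves `deflection_m` toward the backplate."""
    return capacitance_f(area_m2, gap_m - deflection_m) - capacitance_f(area_m2, gap_m)


def small_signal_sensitivity(area_m2: float, gap_m: float) -> float:
    """Linearized dC/dx = eps0 * A / g**2, in farads per meter of deflection."""
    return EPSILON_0 * area_m2 / gap_m**2


if __name__ == "__main__":
    area = 0.5e-3 * 0.5e-3  # hypothetical 0.5 mm x 0.5 mm membrane
    deflection = 1e-9       # 1 nm of membrane motion from an incident sound wave
    small_gap = capacitance_change_f(area, 1e-6, deflection)
    large_gap = capacitance_change_f(area, 3e-6, deflection)
    print(f"dC with 1 um gap: {small_gap * 1e15:.3f} fF")
    print(f"dC with 3 um gap: {large_gap * 1e15:.3f} fF")
    print(f"ratio: {small_gap / large_gap:.2f}")  # close to 9
```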

However, high-frequency ultrasound is quickly attenuated in air, with absorption loss increasing from about 1 decibel per meter at 40 kHz to 100 dB/m at 800 kHz. For this reason, air-coupled ultrasound transducers typically operate at frequencies from 40 to 200 kHz. At these frequencies, because air has a much lower acoustic impedance than fluid, an ultrasound transducer must vibrate more than 1 micrometer to transmit at a sound pressure level sufficient to allow echoes to be measured from objects more than a few centimeters from the transducer. CMUTs capable of this much vibration amplitude require large gaps and therefore high voltages (greater than 100 volts) to operate.
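The relationship stated earlier (pressure proportional to acoustic impedance times frequency times membrane amplitude) can be put into numbers with the plane-wave estimate p = Z·2πf·x. This is a rough sketch, not a transducer model: it assumes textbook impedances for air and water, treats 3 MHz as a representative medical frequency, and overstates the output of a membrane much smaller than the wavelength, which radiates less efficiently. The absorption helper uses only the two figures from the text and ignores geometric spreading loss.

```python
import math

# Characteristic acoustic impedances (rho * c), approximate room-temperature values (assumed).
Z_AIR = 413.0      # Pa*s/m (rayl), air at about 20 C
Z_WATER = 1.48e6   # Pa*s/m (rayl), water
P_REF_AIR = 20e-6  # Pa, standard reference pressure for SPL in air


def surface_pressure_pa(z: float, freq_hz: float, displacement_m: float) -> float:
    """Plane-wave estimate p = Z * v, with peak velocity v = 2*pi*f*x."""
    return z * 2.0 * math.pi * freq_hz * displacement_m


def spl_in_air_db(p_peak_pa: float) -> float:
    """Sound pressure level re 20 uPa, using the RMS value of a sinusoid."""
    return 20.0 * math.log10((p_peak_pa / math.sqrt(2.0)) / P_REF_AIR)


def displacement_for_pressure_m(z: float, freq_hz: float, p_peak_pa: float) -> float:
    """Invert the plane-wave relation: amplitude needed for a given peak pressure."""
    return p_peak_pa / (z * 2.0 * math.pi * freq_hz)


def round_trip_absorption_db(alpha_db_per_m: float, target_distance_m: float) -> float:
    """Absorption only (no spreading loss) over the path out to the target and back."""
    return alpha_db_per_m * 2.0 * target_distance_m


if __name__ == "__main__":
    p_air = surface_pressure_pa(Z_AIR, 40e3, 1e-6)
    print(f"1 um at 40 kHz in air: {p_air:.0f} Pa peak, {spl_in_air_db(p_air):.0f} dB SPL")
    x_water = displacement_for_pressure_m(Z_WATER, 3e6, p_air)
    print(f"Same pressure at 3 MHz in water needs only {x_water * 1e12:.1f} pm")
    for f_khz, alpha in ((40, 1.0), (800, 100.0)):
        print(f"{f_khz} kHz, 2 m target: {round_trip_absorption_db(alpha, 2.0):.0f} dB absorbed")
```

The comparison makes the design tension concrete: matching a given pressure in air at tens of kilohertz takes a membrane swing several orders of magnitude larger than in a fluid at megahertz frequencies, which is exactly what a narrow capacitive gap cannot provide.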

So producing a high-pressure sound wave in a low-voltage device that is also a sensitive receiver requires that the membrane displacement not be limited by a nearby backplate.

The answer is piezoelectricity. Piezoelectricity, first discovered by the Curie brothers in 1880, refers to the ability of certain materials to produce an electrical charge when they are mechanically deformed. In a piezoelectric micromachined ultrasonic transducer (PMUT), this deformation allows the transducer to convert an incident ultrasonic pressure wave into an electrical signal. A PMUT transmits ultrasound using the converse piezoelectric effect. That is, an electric field applied to the piezoelectric material results in mechanical deformation of the PMUT’s membrane, launching the ultrasound wave. Because a PMUT doesn’t have a backplate, there is no hard stop that limits the membrane’s motion.

Piezoelectric MEMS devices like PMUTs rely on thin-film piezoelectric materials, which are typically manufactured by depositing them from a chemical solution or from a vapor. Twenty years ago, the thin-film piezoelectric materials that could be deposited by either of these methods did not have the same properties as their bulk ceramic equivalents; film properties such as stress and piezoelectric coefficients could not be well controlled, and the deposition processes were not repeatable. But spurred by a few key applications like inkjet print heads and radio-frequency filters, researchers and equipment manufacturers have solved these problems for two piezoelectric materials: PZT (lead zirconate titanate) and AlN (aluminum nitride). Manufacturers today typically use PZT in conventional ultrasound transducers.

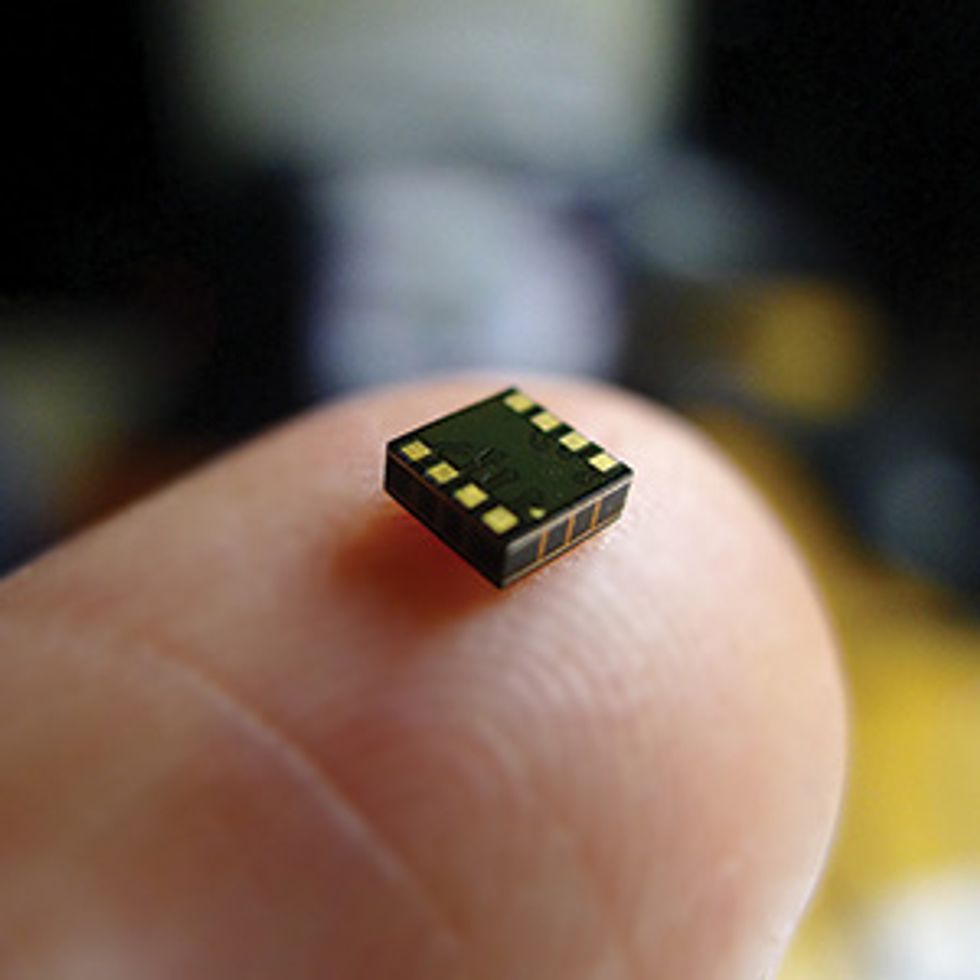

Using PMUT technology licensed from the University of California’s Berkeley Sensor and Actuator Center, the company I cofounded, Chirp Microsystems, is developing transceivers for use in ultrasound-based user interfaces.

On the outside, Chirp’s ultrasound transceivers look identical to MEMS microphones. On the inside, the transceivers include a PMUT chip copackaged with a custom ultralow-power, mixed-signal IC, which manages all the ultrasonic signal processing, allowing the time-of-flight sensor to operate without the oversight of an external processor. As a result, the sensor uses very little power: One time-of-flight measurement consumes approximately 4 microjoules, so at low sample rates the sensor draws only single-digit microamperes of current. That’s comparable to the power consumption of the always-on MEMS accelerometers used for step counting in popular fitness trackers.
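Energy per measurement converts directly into average power once a sample rate is chosen. The sketch below uses the 4-microjoule figure and, for comparison, the 20-mW-at-10-samples-per-second infrared time-of-flight figure quoted earlier. Assumptions: the 1.8-volt supply is hypothetical, standby current and the hub microcontroller are ignored, and the infrared number is simply divided by its sample rate, so any fixed overhead it includes is spread across measurements.

```python
# Back-of-the-envelope energy budget for a duty-cycled time-of-flight sensor.
ULTRASONIC_ENERGY_PER_MEASUREMENT_J = 4e-6  # about 4 uJ per measurement (from the text)
IR_TOF_POWER_W = 20e-3                      # 20 mW ... (from the text)
IR_TOF_RATE_HZ = 10.0                       # ... at 10 samples per second
SUPPLY_V = 1.8  # hypothetical supply voltage, used only to convert power to current


def average_power_w(energy_per_measurement: float, rate_hz: float) -> float:
    """Average power when each measurement costs a fixed amount of energy."""
    return energy_per_measurement * rate_hz


def energy_per_measurement_j(average_power: float, rate_hz: float) -> float:
    """Spread a sensor's average power evenly across its measurements."""
    return average_power / rate_hz


def average_current_a(power_w: float, supply_v: float = SUPPLY_V) -> float:
    """Current drawn from the (assumed) supply rail."""
    return power_w / supply_v


if __name__ == "__main__":
    for rate in (1.0, 10.0, 100.0):
        p = average_power_w(ULTRASONIC_ENERGY_PER_MEASUREMENT_J, rate)
        print(f"{rate:5.0f} Hz: {p * 1e6:6.1f} uW, {average_current_a(p) * 1e6:6.1f} uA at {SUPPLY_V} V")
    e_ir = energy_per_measurement_j(IR_TOF_POWER_W, IR_TOF_RATE_HZ)
    print(f"IR time-of-flight: {e_ir * 1e3:.1f} mJ per measurement, "
          f"{e_ir / ULTRASONIC_ENERGY_PER_MEASUREMENT_J:.0f}x the ultrasonic figure")
```

At one measurement per second the ultrasonic sensor averages about 4 microwatts, a couple of microamperes at the assumed supply voltage, which is consistent with the single-digit-microampere claim.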

The three-dimensional location of an object, such as a hand or a fingertip, can be determined by measuring the time of flight from at least three sensors and using an algorithm known as trilateration, similar to the way that a GPS receiver finds its location from a constellation of satellites. In Chirp’s system, a low-power microcontroller, operating as a sensor hub, coordinates the trilateration measurement. Because all the ultrasonic signal processing happens within the individual sensors, the hub needs to perform only minimal computation, reading the times of flight from the various sensors and computing the three-dimensional coordinates of the nearest target through trilateration.
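Trilateration from three ranges can be sketched with the textbook closed-form intersection of three spheres. This is not Chirp’s hub firmware, whose algorithm the article doesn’t describe. Assumptions: each sensor measures its own pulse-echo round trip, so its range is c·t/2; the sensor layout and fingertip position are hypothetical; and because three sensors on a flat device face yield two mirror-image solutions, the sketch keeps the one in front of the device (the +ez side of the sensor plane).

```python
import math
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]
SPEED_OF_SOUND_AIR = 343.0  # m/s, assumed (dry air at about 20 C)


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec3) -> Vec3:
    return _scale(a, 1.0 / math.sqrt(_dot(a, a)))


def ranges_from_round_trip(tofs_s: Iterable[float], c: float = SPEED_OF_SOUND_AIR) -> List[float]:
    """Pulse-echo: each sensor's range is half its round-trip path."""
    return [c * t / 2.0 for t in tofs_s]


def trilaterate(p1: Vec3, p2: Vec3, p3: Vec3, r1: float, r2: float, r3: float) -> Vec3:
    """Intersect three spheres; return the solution on the +ez side of the sensor plane."""
    # Local frame: ex along p1->p2, ey in the sensor plane, ez normal to it.
    p2_rel = _sub(p2, p1)
    d = math.sqrt(_dot(p2_rel, p2_rel))
    ex = _unit(p2_rel)
    p3_rel = _sub(p3, p1)
    i = _dot(ex, p3_rel)
    ey = _unit(_sub(p3_rel, _scale(ex, i)))
    ez = _cross(ex, ey)
    j = _dot(ey, p3_rel)
    # Closed-form sphere intersection in local coordinates.
    x = (r1**2 - r2**2 + d**2) / (2.0 * d)
    y = (r1**2 - r3**2 + i**2 + j**2) / (2.0 * j) - (i / j) * x
    z = math.sqrt(max(r1**2 - x**2 - y**2, 0.0))  # clamp tiny negatives caused by noise
    return _add(p1, _add(_scale(ex, x), _add(_scale(ey, y), _scale(ez, z))))


if __name__ == "__main__":
    # Hypothetical layout: three sensors 5 cm apart on a device face (z = 0).
    sensors = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (0.0, 0.05, 0.0)]
    fingertip = (0.02, 0.01, 0.15)
    # Simulate the round-trip times each sensor would report for this target.
    tofs = [2.0 * math.dist(s, fingertip) / SPEED_OF_SOUND_AIR for s in sensors]
    estimate = trilaterate(*sensors, *ranges_from_round_trip(tofs))
    print("estimated position (m):", tuple(round(v, 4) for v in estimate))
```

The arithmetic is a handful of multiplications and one square root per fix, which illustrates why a low-power microcontroller can act as the hub once the sensors themselves report times of flight. With noisy measurements, a real system would more likely use more sensors and a least-squares fit.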

Chirp held its first public demonstration of ultrasonic gesture sensing at the 2016 CES technology exhibition. The company is now working with a number of manufacturers to implement ultrasonic gesture sensing in wearable devices and ultrasonic controller tracking in virtual-reality and gaming consoles. We expect some of these products to go on sale late in 2017. Chirp is currently the only company commercializing PMUTs for air-coupled ultrasound; however, a few companies are investigating PMUTs for other purposes. For example, the startup eXo System is working on a portable medical ultrasound system using a large PMUT array, and inertial-sensor manufacturer InvenSense announced in late 2015 that it would introduce a PMUT-based ultrasonic fingerprint sensor in 2017 under the trade name UltraPrint.

Low-power, MEMS-based piezoelectric ultrasound will change the world of consumer devices. Simple devices such as watches and phones could use ultralow-power ultrasound to become context aware through always-on sensing, constantly probing their environment to enter a low-power mode when in a purse or pocket or covered by a sleeve and waking only when needed. Rooms and vehicles could sense our presence, responding to user preferences for entertainment, lighting, and information, all without the use of intrusive cameras. Tablets, entertainment systems, even light switches could all incorporate natural interfaces based on gestures, providing intuitive control through simple motions. If the Internet of Things results in even a fraction of the tens of billions of connected smart devices that are predicted for 2020, we’re going to need a better way to communicate with this connected world than through voice and touch alone.

Ironically, when this kind of intuitive-gesture user interface makes its way into our everyday lives, we’ll all quickly forget that it’s there. We’ll unconsciously flick a hand to silence a phone, brush a finger across a wrist to send a text, or wave to change an app. And somewhere across the room, on the table, or worn somewhere on your body, a tiny ultrasound transducer will be hard at work, pushing around lazy air molecules to squeeze useful information out of the unused ultrasonic spectrum that surrounds us.

This article appears in the December 2016 print issue as “Wave Hello to the Next Interface.”

About the Author

David Horsley is a professor of mechanical and aerospace engineering at the University of California, Davis, and cofounder and chief technical officer at Chirp Microsystems, which makes low-power ultrasonic 3D sensors.