Picking things up is such a fundamental skill for robots, and robots have been picking up things for such a long time, that it’s sometimes difficult to understand how challenging grasping still is. Robots that are good at grasping things usually depend on high quality sensor data along with some amount of advance knowledge about the things that they’re going to be grasping. Where grasping gets really tricky is when you’re trying to design a system that can use standardized (and affordable) grippers and sensors to reliably pick up almost anything, including that infinitely long tail of objects that are, for whatever reason, weird and annoying to grasp.

One way around this is to design grasping hardware that uses clever tricks (like enveloping grasps or adhesives) to compensate for not really knowing the best way to pick up a given object, but this may not be a long-term sustainable approach: Solving the problem in software is much more efficient and scalable, if you can pull it off. “I’ve been studying robot grasping for 30 years and I’m convinced that the key to reliable robot grasping is the perception and control software, not the hardware,” Ken Goldberg, a professor of robotics and director of the AUTOLAB at UC Berkeley, told us this week.

Today, Professor Goldberg and AUTOLAB researcher Jeff Mahler are announcing the release of an enormous dataset that provides the foundation for Dex-Net 2.0, a project that uses neural networks to develop highly reliable robot grasping across a wide variety of rigid objects. The dataset consists of 6.7 million object point clouds and accompanying parallel-jaw gripper poses, each with a robustness estimate of how likely it is that the grasp will be able to lift and carry the object, and now you can use it to train your own grasping system.

Grasping is not such a problem if you have an exact model of the object you’re trying to grasp, a gripper that works exactly like you expect it to work, and plenty of time to run a bunch of simulations to figure out what specific grasp is best. To the frustration of roboticists everywhere, this almost never happens outside of a lab, and doesn’t happen all that often inside of a lab, especially if you’re trying to do something practical. Sensors tend to only see objects from one perspective at a time, they’re often inaccurate and noisy, and they can’t usually tell much about the physical characteristics of objects. Meanwhile, grippers themselves have finite amounts of accuracy and precision with which they can be controlled. Put this all together, and you end up with enough uncertainty that consistently robust grasping is a real challenge.

At UC Berkeley, Goldberg and Mahler have been working to solve this problem by training a convolutional neural network (CNN) to be able to predict exactly how robust a particular grasp on a given object will be (whether the grasp will fail when the object is lifted, moved, and shaken a bit). One way to go about this is by having a million robots grasp a million objects a million times, but this is not necessarily the most practical solution (although some have tried).

Instead, Dex-Net 2.0 relies on “a probabilistic model to generate synthetic point clouds, grasps, and grasp robustness labels from datasets of 3D object meshes using physics-based models of grasping, image rendering, and camera noise.” In other words, Dex-Net 2.0 leverages cloud computing to rapidly generate a large training set for a CNN, in “a hybrid of well-established analytic methods from robotics and Deep Learning,” as Goldberg explains:

“The key to Dex-Net 2.0 is a hybrid approach to machine learning Jeff Mahler and I developed that combines physics with Deep Learning. It combines a large dataset of 3D object shapes, a physics-based model of grasp mechanics, and sampling statistics to generate 6.7 million training examples, and then using a Deep Learning network to learn a function that can rapidly find robust grasps when given a 3D sensor point cloud. It’s trained on a very large set of examples of robust grasps, similar to recent results in computer vision and speech recognition.”
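To make the idea of a robustness label a little more concrete, here is a minimal sketch of how a physics-based check and sampling statistics might be combined. It is not Dex-Net's actual model: it assumes a toy 2D parallel-jaw grasp, uses a simple antipodal friction-cone test as the stand-in physics, and treats the noise levels, function names, and default values as hypothetical. The label is just the fraction of randomly perturbed trials in which the grasp still passes the test.

```python
import math
import random


def in_friction_cone(axis, inward_normal, mu):
    """True if `axis` lies inside the friction cone around `inward_normal`."""
    dot = axis[0] * inward_normal[0] + axis[1] * inward_normal[1]
    norm = math.hypot(*axis) * math.hypot(*inward_normal)
    angle = math.acos(max(-1.0, min(1.0, dot / norm)))
    return angle <= math.atan(mu)


def rotate(v, theta):
    """Rotate a 2D vector counterclockwise by `theta` radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def is_antipodal(p1, n1, p2, n2, mu):
    """Toy physics check: the grasp axis must sit inside both friction cones."""
    axis = (p2[0] - p1[0], p2[1] - p1[1])
    reverse = (-axis[0], -axis[1])
    return in_friction_cone(axis, n1, mu) and in_friction_cone(reverse, n2, mu)


def estimate_robustness(p1, n1, p2, n2, mu_mean=0.5, mu_std=0.1,
                        normal_std=0.1, samples=2000, seed=0):
    """Monte Carlo estimate: fraction of noisy trials where the grasp holds.

    All noise parameters are illustrative assumptions, not measured values.
    """
    rng = random.Random(seed)
    successes = 0
    for _ in range(samples):
        mu = max(0.0, rng.gauss(mu_mean, mu_std))      # uncertain friction
        noisy_n1 = rotate(n1, rng.gauss(0.0, normal_std))  # uncertain surface
        noisy_n2 = rotate(n2, rng.gauss(0.0, normal_std))
        if is_antipodal(p1, noisy_n1, p2, noisy_n2, mu):
            successes += 1
    return successes / samples


# Two parallel faces squeezed head-on versus one face tilted by 0.6 rad.
flat = estimate_robustness((-1, 0), (1, 0), (1, 0), (-1, 0))
slanted = estimate_robustness((-1, 0), (1, 0), (1, 0), rotate((-1, 0), 0.6))
print(f"flat faces: {flat:.2f}, slanted face: {slanted:.2f}")
```

In this toy setup the head-on grasp scores far higher than the slanted one, which is the kind of signal a network could then be trained to predict directly from a point cloud.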

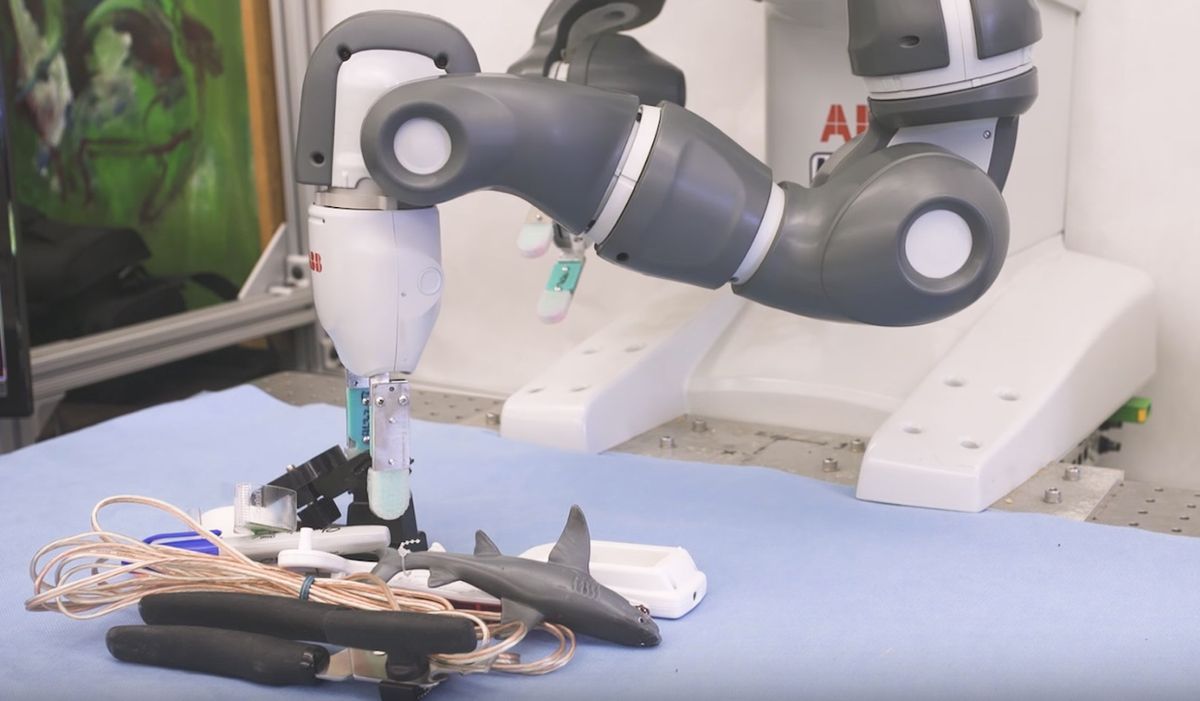

As with anything done in simulation, it’s important to understand what the limitations of the simulation are, and the extent to which things learned in simulation can be usefully applied to reality. This is something that the Berkeley researchers tested, of course, and according to Goldberg, “when this CNN estimates the robustness is high, the grasp almost always worked in our physical experiments.” More specifically, in experiments with an ABB YuMi, the planner was 93 percent successful in planning reliable grasps. It was also able to successfully predict grasp robustness with 40 novel objects (including tricky things like a can opener and a washcloth) with just one false positive out of 69 predicted successes. Since the robot has a pretty good idea of when it’ll succeed, it can also tell when it’s likely to fail, and the researchers suggest that if it anticipates a failure, the robot could either poke the object to change its orientation, or ask a human for some help.
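The arithmetic behind “one false positive out of 69 predicted successes” works out to a precision of roughly 98.6 percent. The short sketch below computes that figure and shows one way the suggested fallback behavior could be wired up. The 0.5 threshold and the action names are placeholders for illustration, not values from the Berkeley experiments.

```python
def precision(predicted_successes, false_positives):
    """Share of predicted successes that actually succeeded."""
    return (predicted_successes - false_positives) / predicted_successes


def choose_action(predicted_robustness, threshold=0.5):
    """Grasp when the predicted robustness is high enough, else fall back.

    The threshold is a hypothetical tuning parameter.
    """
    if predicted_robustness >= threshold:
        return "grasp"
    return "poke_object_or_ask_human"


# Figures reported in the article: 69 predicted successes, 1 false positive.
reported_precision = precision(69, 1)
print(f"precision: {reported_precision:.3f}")  # prints "precision: 0.986"
print(choose_action(0.9))
print(choose_action(0.2))
```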

This all sounds great, but the question is, what’s next? How can roboticists use the data that are being released today to make grasping better? We asked Goldberg and Mahler about this.

IEEE Spectrum: To what extent are the grasping performance or techniques demonstrated in this dataset generalizable to other robots that may use different grippers or sensors?

Ken Goldberg: In principle this approach is compatible with any 3D camera and parallel-jaw gripper (and could be used to choose a primary grasp axis for multifingered grippers).

Jeff Mahler: Our current system requires some knowledge specific to the hardware setup, including the focal length and bounds on where the RGB-D sensor will be relative to the robot, the geometry of a parallel-jaw robot gripper (specified as CAD model), and a friction coefficient for the gripper. These parameters could be input to Dex-Net to generate a new training dataset specific to a given hardware setup, and a GQ-CNN trained on such a dataset could have similar performance, in theory. However, there are a lot of differences between robots and without running the experiments it’s hard to say whether or not our results would generalize.
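As a rough illustration of the hardware-specific inputs Mahler lists, the parameters could be collected in a small configuration object like the one below. This is only a sketch: the class, its field names, and the example values are all hypothetical placeholders, and none of them come from the Dex-Net code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraspSetup:
    """Hypothetical bundle of the hardware parameters named in the interview."""
    camera_focal_length_px: float   # focal length of the RGB-D sensor
    camera_min_distance_m: float    # bounds on where the sensor sits
    camera_max_distance_m: float    # relative to the robot
    gripper_cad_path: str           # parallel-jaw gripper geometry (CAD model)
    gripper_friction_coef: float    # friction coefficient for the gripper

    def __post_init__(self):
        # Basic sanity checks on the placeholder parameters.
        if self.camera_focal_length_px <= 0:
            raise ValueError("focal length must be positive")
        if not 0 < self.camera_min_distance_m < self.camera_max_distance_m:
            raise ValueError("camera distance bounds must satisfy 0 < min < max")
        if self.gripper_friction_coef <= 0:
            raise ValueError("friction coefficient must be positive")


# Placeholder values for illustration only.
setup = GraspSetup(
    camera_focal_length_px=500.0,
    camera_min_distance_m=0.5,
    camera_max_distance_m=0.8,
    gripper_cad_path="my_gripper.obj",
    gripper_friction_coef=0.6,
)
print(setup)
```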

How will this data release help roboticists? How would you suggest that people take advantage of what you’ve made available here?

Mahler: With the release we hope that other roboticists can replicate our training results to facilitate development of new architectures for predicting grasp robustness from point clouds, and to encourage benchmarking of new methods.

In the broader scope of things, we hope that a system like Dex-Net that can automatically generate training datasets for robotic grasping can be a useful resource to train deep neural networks for robot grasp planning across multiple different robots.

What are you working on next?

Goldberg: A primary application for Dex-Net 2.0 is warehouse order fulfillment for companies like Amazon, where each order is different and there are millions of products. Dex-Net 2.0 could also be used by robots in homes to clean and declutter, or in factories to facilitate assembly of varying products like customized cars.

We’re working on a number of extensions such as greatly expanding the training set of object meshes, using simulation to synthesize a training dataset for bin picking.

If you want to get involved and you happen to have an ABB YuMi lying around, Berkeley is keeping a leaderboard of anyone who can improve their CNN architecture to significantly outperform that 93 percent accuracy in grasp classification. If you don’t have a YuMi, you can still download a Python package and use some helpful tutorials to get started on using the Dex-Net 2.0 dataset for CNN training, and they’re currently working on a ROS package that will let you see the results of grasp planning on custom point clouds.

Later this year, the plan is to release “Dex-Net as a Service,” a web API to create new databases with custom 3D models and compute grasp robustness metrics on them. Look for it this fall.

[ Dex-Net 2.0 ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.