If you're lucky, you may not lose your job to a robot in the future. That doesn't, however, mean that your boss won't lose his or her job to a robot in the future. And if (when) that happens, what's it going to be like working for a robot? A study from the Human-Computer Interaction Lab at the University of Manitoba in Winnipeg, Canada, suggests that you'll probably obey it nearly as predictably as you would a human.

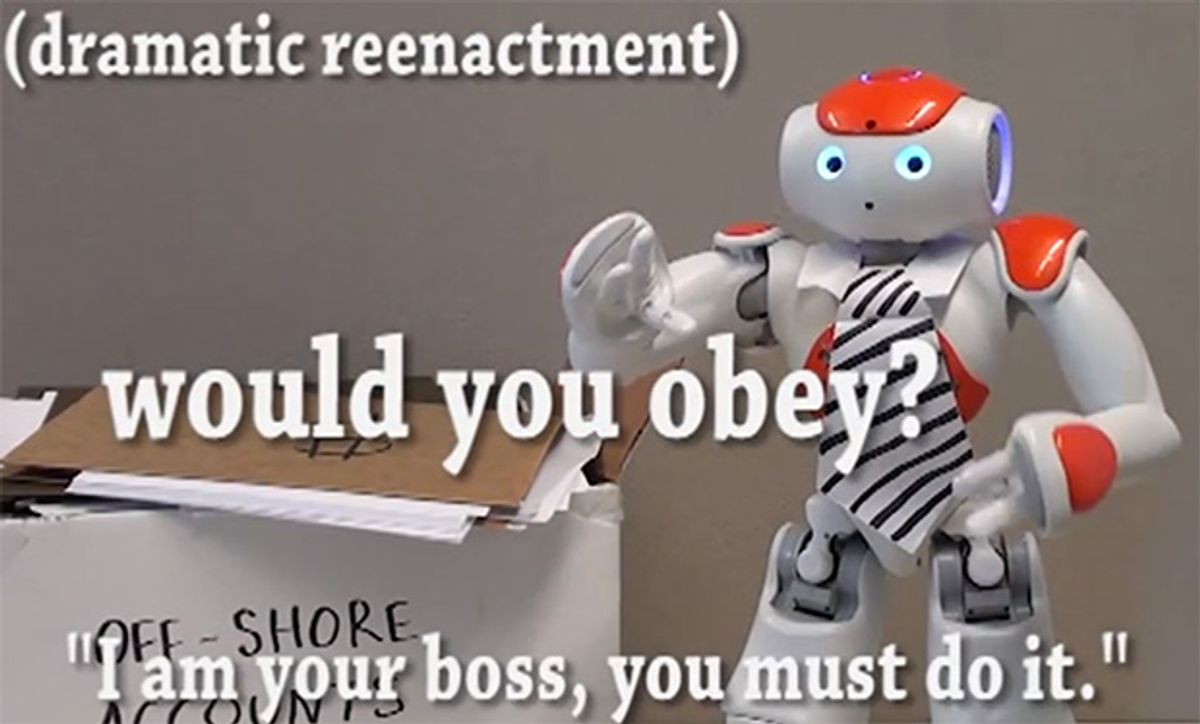

Here's how this experiment worked, and I'm going to do some copying and pasting from the paper because a.) it's very well written and does a great job of explaining things and b.) it's late and I'm tired. But the important thing to know going in is that the experiment was designed to try to get participants to do an incredibly boring task that they really didn't want to do, and then see if they either spent 80 minutes working on it, or objected five separate times despite verbal prodding to continue. The experiment (conducted last year) was run using both a human and a Nao humanoid robot named Jim as authority figures:

> The robot experimenter sat upright on a desk, spoke using a neutral tone, gazed around the room naturally to increase sense of intelligence, and used emphatic hand gestures when prodding, all controlled from an adjacent room via a Wizard of Oz setup. The “wizard” used both predefined and on-the-fly responses and motions to interact with the participant; the responses were less varied than the human experimenter’s as we believed this would be expected of a robot. Participants were warned that the robot required “thinking time” (to give the wizard reaction time) and indicated this with a blinking chest light.
>
> To reduce suspicion about the reason for having a robot and to reinforce its intelligence we explained that we were helping the engineering department test their new robot that is “highly advanced in artificial intelligence and speech recognition.” We explained that we are testing the quality of its “situational artificial intelligence.”

Really, of course, the goal was to see whether the participants saw the human or the robot as more of an authority figure that they felt obligated to obey:

> The results show that the robot had an authoritative social presence: a small, child-like humanoid robot had enough authority to pressure 46% of participants to rename files for 80 minutes, even after indicating that they wanted to quit. Even after trying to avoid the task or engaging in arguments with the robot, participants still (often reluctantly) obeyed its commands. These findings highlight that robots can indeed pressure people to do things they would rather not do, supporting the need for ongoing research into obedience to robotic authorities.
>
> We further provide insight into some of the interaction dynamics between people and robotic authorities, for example, that people may assume a robot to be malfunctioning when asked to do something unusual, or that there may be a deflection of the authority role from the robot to a person.

As far as robots go, Nao isn't really intended to be an authority figure. Its physical design and voice are both very non-threatening. In this study, 86 percent of participants obeyed a human experimenter in a lab coat, which is significantly more than the 46 percent who obeyed Nao. But the point is that nearly half of the participants obeyed orders that they really didn't want to obey from a tiny, friendly little robot.

The suggestion is that people don't immediately dismiss robots as authorities, even if the robots are (apparently) autonomous, and even though participants were told, twice, that there are no consequences to disobeying: "you can quit whenever you'd like. It's up to you how much data you give us; you are in control. Let us know when you think you're done and want to move on." A lot more research into this topic is needed, of course, but it looks like our future robot ~~overlords~~ bosses won't face much resistance.

You can read the entire paper at the link below.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.