Robots of the world, unite!

Google has a plan to speed up robotic learning, and it involves getting robots to share their experiences and collectively improve their capabilities.

Sergey Levine from the Google Brain team, along with collaborators from Alphabet subsidiaries DeepMind and X, published a blog post on Monday describing an approach for “general-purpose skill learning across multiple robots.”

Teaching robots how to do even the most basic tasks in real-world settings like homes and offices has vexed roboticists for decades. To tackle this challenge, the Google researchers decided to combine two recent technology advances. The first is cloud robotics, a concept that envisions robots sharing data and skills with each other through an online repository. The other is machine learning, and in particular, the application of deep neural networks to let robots learn for themselves.

In a series of experiments carried out by the researchers, individual robotic arms attempted to perform a given task repeatedly. Not surprisingly, each robot was able to improve its own skills over time, learning to adapt to slight variations in the environment and its own motions. But the Google team didn’t stop there. They got the robots to pool their experiences to “build a common model of the skill” that, as the researchers explain, was better and faster than what they could have achieved on their own:

“The skills learned by the robots are still relatively simple—pushing objects and opening doors—but by learning such skills more quickly and efficiently through collective learning, robots might in the future acquire richer behavioral repertoires that could eventually make it possible for them to assist us in our daily lives.”

Earlier this year, Levine and colleagues from X showed how deep neural nets can help robots teach themselves a grasping task. In that study, a group of robot arms went through some 800,000 grasp attempts, and though they failed a lot in the beginning, their success rate improved significantly as their neural net continuously retrained itself.

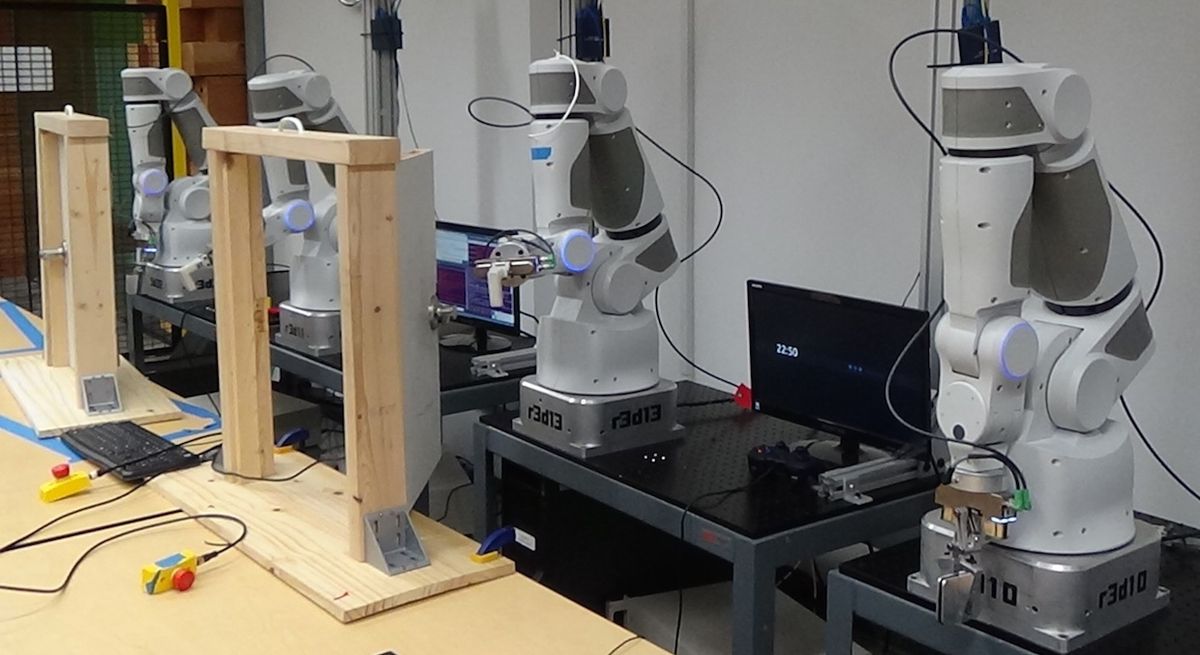

In their latest experiments, the Google researchers tested three different scenarios. The first involved robots learning motor skills directly from trial-and-error practice. Each robot started with a copy of a neural net as it attempted to open a door over and over. At regular intervals, the robots sent data about their performances to a central server, which used the data to build a new neural network that better captured how action and success were related. The server then sent the updated neural net back to the robots. “Given that this updated network is a bit better at estimating the true value of actions in the world, the robots will produce better behavior,” the researchers wrote. “This cycle can then be repeated to continue improving on the task.”
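To make that cycle concrete, here is a minimal sketch in Python of the collect, pool, update, and redistribute loop. It is not the researchers' code. It swaps the neural network for a simple table of estimated success rates per action, and the task (`TRUE_SUCCESS`), the function names, and all parameters are hypothetical choices made only for illustration.

```python
import random

# Hypothetical toy task: each discrete action has a hidden success probability.
TRUE_SUCCESS = [0.1, 0.3, 0.8, 0.5]


def robot_collect(values, n_trials, rng, epsilon=0.2):
    """One robot practices using its local copy of the value estimates."""
    log = []
    for _ in range(n_trials):
        if rng.random() < epsilon:
            action = rng.randrange(len(values))  # explore a random action
        else:
            # exploit: pick the action the current estimates rate highest
            action = max(range(len(values)), key=values.__getitem__)
        success = rng.random() < TRUE_SUCCESS[action]
        log.append((action, success))
    return log


def server_update(counts, wins, pooled_logs):
    """Central server folds every robot's log into one shared estimate."""
    for log in pooled_logs:
        for action, success in log:
            counts[action] += 1
            wins[action] += int(success)
    # New estimate is the observed success rate; untried actions stay at 1.0
    # so that robots are encouraged to try them.
    return [wins[a] / counts[a] if counts[a] else 1.0
            for a in range(len(counts))]


def train(n_robots=4, n_rounds=10, trials_per_round=25, seed=0):
    rng = random.Random(seed)
    n_actions = len(TRUE_SUCCESS)
    counts, wins = [0] * n_actions, [0] * n_actions
    values = [1.0] * n_actions  # initial shared estimate
    for _ in range(n_rounds):
        # Each robot starts the round with a copy of the current estimate.
        logs = [robot_collect(list(values), trials_per_round, rng)
                for _ in range(n_robots)]
        # The server pools all logs and sends back an updated estimate.
        values = server_update(counts, wins, logs)
    return values, counts


if __name__ == "__main__":
    values, counts = train()
    print("estimated success rates:", [round(v, 2) for v in values])
    print("trials per action:", counts)
```

In this sketch, every round adds the experience of all four simulated robots to the same estimate, so the shared table sees four times as many trials per round as any single robot would gather alone.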

In the second scenario, the researchers wanted robots to learn how to interact with objects not only through trial-and-error but also by creating internal models of the objects, the environment, and their behaviors. Just as with the door opening task, each robot started with its own copy of a neural network as it “played” with a variety of household objects. The robots then shared their experiences with each other and together built what the researchers describe as a “single predictive model” that gives them an implicit understanding of the physics involved in interacting with the objects. You could probably build the same predictive model by using a single robot, but sharing the combined experiences of many robots gets you there much faster.

Finally, the third scenario involved robots learning skills with help from humans. The idea is that people have a lot of intuition about their interactions with objects and the world, and that by assisting robots with manipulation skills we could transfer some of this intuition to robots to let them learn those skills faster. In the experiment, a researcher helped a group of robots open different doors while a single neural network on a central server encoded their experiences. Next the robots performed a series of trial-and-error repetitions that were gradually more difficult, helping to improve the network. “The combination of human-guidance with trial-and-error learning allowed the robots to collectively learn the skill of door-opening in just a couple of hours,” the researchers wrote. “Since the robots were trained on doors that look different from each other, the final policy succeeds on a door with a handle that none of the robots had seen before.”

The Google team explained that the skills their robots have learned are still quite limited. But they hope that, as robots and algorithms improve and become more widely available, the notion of pooling their experiences will prove critical in teaching robots how to do useful tasks:

“In all three of the experiments described above, the ability to communicate and exchange their experiences allows the robots to learn more quickly and effectively. This becomes particularly important when we combine robotic learning with deep learning, as is the case in all of the experiments discussed above. We’ve seen before that deep learning works best when provided with ample training data. For example, the popular ImageNet benchmark uses over 1.5 million labeled examples. While such a quantity of data is not impossible for a single robot to gather over a few years, it is much more efficient to gather the same volume of experience from multiple robots over the course of a few weeks. Besides faster learning times, this approach might benefit from the greater diversity of experience: a real-world deployment might involve multiple robots in different places and different settings, sharing heterogeneous, varied experiences to build a single highly generalizable representation.”

You can read preprints of four research papers the researchers have posted to arXiv, including two they’ve submitted to ICRA 2017, at the link below.

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.