This is a guest post. The views expressed here are solely those of the author and do not represent positions of IEEE Spectrum or the IEEE.

When it comes to new technologies and products, we tend to think of “digital” as synonymous with advanced, modern, and high-def, while “analog” is considered retrograde, outmoded, and low-resolution.

But if you think analog is dead, you’d be wrong. Analog processing not only remains at the heart of many vital systems we depend on today, it is now going to make its way into a new breed of compute and intelligent systems that will power some of the most exciting technologies of the future: artificial intelligence and robotics.

Before discussing the upcoming analog renaissance—and why engineers and innovators working on AI and robot applications should be paying close attention to it—it might be helpful to understand the significance and legacy of the Old Analog Age.

OUR LOVE FOR ANALOG

During World War II, analog circuits played a key role in enabling the first automatic anti-aircraft fire-control systems and, in the decades that followed, analog computers became an essential tool for calculating flight trajectories for rockets and spacecraft.

Analog also became prevalent in control and communication systems used in aircraft, ships, and power plants, and some of those systems are still in operation to this day. Until not long ago, analog circuits controlled vast portions of our telecommunications infrastructure (remember the rotary dial?), and they even ruled the office copy room, where early photocopier machines reproduced images without handling a single digital bit.

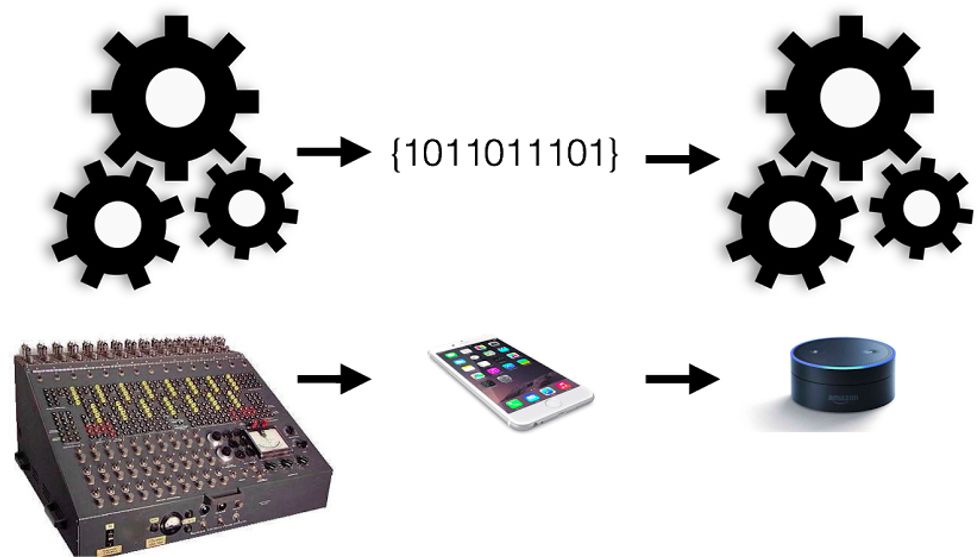

Our love for analog persisted for so long because this technology proved, again and again, to be accurate, simple, and fast. It steered our rockets, sailed our ships, recorded and played back our music and videos, and connected us to each other for many decades. And then, starting in the mid- to late-1960s, digital came along and rapidly took over.

DIGITAL DOMAIN

Why was analog abandoned for digital? The biggest weakness of analog is that it’s rigid, and it gets exponentially more complex when you try to make it flexible. That added complexity brings a disproportionate reduction in reliability (bad). At the same time, engineers began to notice that Moore’s Law was making dense compute extremely reliable and cheap.

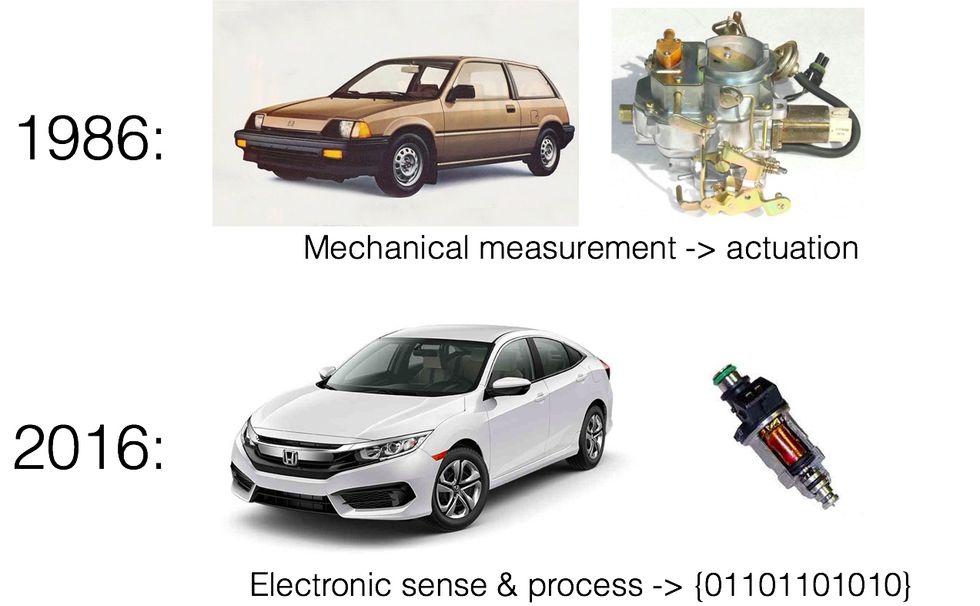

Meanwhile, MEMS and microfabrication techniques commoditized sensors that capture physical signals and convert them to digital. Soon enough, operational amplifiers were abandoned for logic gates that got exponentially cheaper just as Moore’s Law predicted. Mechanical linkages became “fly-by-wire,” and designers pushed digitization further to the edge.

In today’s consumer electronics world, analog is only used to interface with humans, capturing and producing sounds, images, and other sensations. In larger systems, analog is used to physically turn the wheels and steer rudders on machines that move us in our analog world. But for most other electronic applications, engineers rush to dump signals into the digital domain whenever they can. The upshot is that the benefits of digital logic—cheap, fast, robust, flexible—have made engineers practically allergic to analog processing.

Now, however, after a long hiatus, Carver Mead’s prediction of the return to analog is starting to become a reality.

“Large-scale adaptive analog systems are more robust to component degradation and failure than are more conventional systems, and they use far less power,” Mead, a Caltech professor and microelectronics pioneer, wrote in an influential Proceedings of the IEEE paper in 1990. “For this reason, adaptive analog technology can be expected to utilize the full potential of wafer-scale silicon fabrication.”

AI LIKES ANALOG

Hardware engineers typically view analog as a necessary evil to interact with the physical world. But it turns out that AI and deep learning algorithms seem to conform better to analog and neuromorphic compute platforms.

At my firm Lux Capital, we funded Nervana, which built ASICs that ran convolutional neural networks natively to accelerate the training of deep learning algorithms. Although the mathematical operations were done in the digital domain, the overall system architecture mimicked the human brain at a very high level.

The approach worked well for training AIs in the cloud, though it still consumed too much power to run on a portable device like an untethered robot. Nevertheless, Nervana’s expected performance boost was so compelling that Intel rushed to acquire the company.

INSPIRED BY NATURE

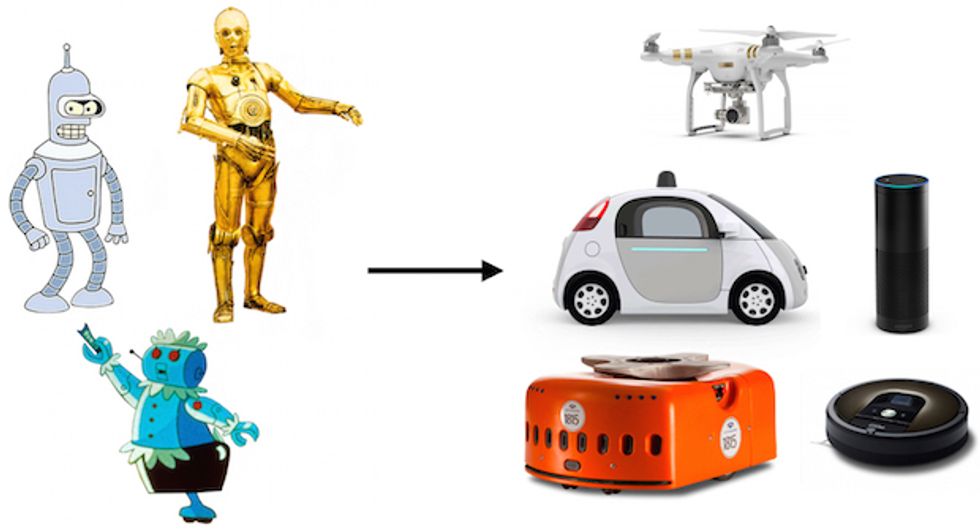

Ask almost anyone (even kids) to sketch out a picture of a robot, and you’ll likely get an image that resembles Rosie, the robot maid from The Jetsons, or C-3PO from Star Wars. That’s not surprising; it’s the vision of robots that science fiction has portrayed in books, television, and movies for decades. More recently, however, the idea of what a robot is or looks like seems to be evolving. Ask a millennial for an example of a robot and the answer might be Roomba, Amazon’s Echo, or maybe even Siri.

There’s a clear trend that more and more gadgets and other systems that are part of our lives—from thermostats to cars—will become more intelligent, more robotic. These systems will require computers that are small, portable, and low power; they will also need to be super responsive and alert at all times. This is a tough set of requirements for today’s compute systems, which typically consume sizable amounts of power (unless they’re on standby) and need to be cloud-connected to perform useful functions. That’s where analog can help.

Inspired by nature, scientists have been experimenting with analog circuits that can see and hear better while consuming a fraction of the power. Stanford’s Brains in Silicon project and the University of Michigan IC Lab, with backing from DARPA’s SyNAPSE and the U.S. Office of Naval Research, are building tools to make it easier to build analog neuromorphic systems. Stealthy startups are also beginning to emerge. Rather than attempt to run deep nets on standard digital circuits, they have designed analog systems, inspired by our analog brains, that can perform similar computations with much less power.

NOISE IS NO PROBLEM

Why the move to analog now? The answer is simple: We’re at a unique intersection where the neural nets we’re trying to implement are more suitable to analog designs, while demand for these types of AI circuits is expected to explode.

Traditional hard-coded algorithms only function when the innards of the compute are accurate and precise. If the circuits that run them aren’t precise, errors will grow out of control as they propagate through the system. Not so with neural nets, where the internal states don’t have to be precise and the system adapts to produce the desired output for a given input. Indeed, our brains are incredibly noisy systems that work just fine. Engineers are learning that they can implement deep nets in silicon using noisy analog approaches as well, yielding energy savings on the order of 100x.
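To make the noise-tolerance point concrete, here is a minimal Python sketch. It is not taken from any real analog chip. It assumes a single threshold neuron with made-up weights and inputs, and it models analog imprecision as a bounded random error of up to 10 percent on every multiplication. The names `noisy_dot`, `WEIGHTS`, and `SAMPLES` are hypothetical, and the noise model is an illustrative assumption rather than a description of actual circuits.

```python
import random

# Hypothetical weights and inputs, chosen so each input sits far from the threshold.
WEIGHTS = [2.0, -1.0, 0.5]
SAMPLES = [[1.0, 0.2, 0.4], [0.1, 1.5, 0.2]]


def exact_dot(weights, inputs):
    """Precise multiply-accumulate, as an idealized digital circuit would compute it."""
    return sum(w * x for w, x in zip(weights, inputs))


def noisy_dot(weights, inputs, rel_noise, rng):
    """Multiply-accumulate where every product is off by up to +/- rel_noise.

    Assumption: bounded, uniformly distributed relative error per product.
    """
    total = 0.0
    for w, x in zip(weights, inputs):
        error = rng.uniform(-rel_noise, rel_noise)
        total += w * x * (1.0 + error)
    return total


def classify(activation):
    """Threshold neuron: output 1 if the activation is positive, else 0."""
    return 1 if activation > 0 else 0


def run_demo(rel_noise=0.10, trials=1000, seed=0):
    """Count how often the noisy neuron disagrees with the exact one."""
    rng = random.Random(seed)
    mismatches = 0
    for x in SAMPLES:
        expected = classify(exact_dot(WEIGHTS, x))
        for _ in range(trials):
            if classify(noisy_dot(WEIGHTS, x, rel_noise, rng)) != expected:
                mismatches += 1
    return mismatches


if __name__ == "__main__":
    print("mismatched decisions:", run_demo())
```

Because the two sample inputs sit well away from the decision threshold, the accumulated error (at most 10 percent of the summed magnitudes of the products) can never flip the output, even though the raw activation changes from trial to trial. The sketch says nothing about power consumption, and it leaves out the adaptation that training provides.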

The implications are huge. Imagine a future wearable device or an Amazon Echo-type assistant that uses almost no power—or that can even harness it from the environment—requiring no power cables or batteries. Or picture a gadget that won’t have to be “cloud connected” to be smart—it will carry enough “intelligence” to be able to work even where no Wi-Fi or cellular coverage is available. And this is just the beginning of what I expect to be a whole new category of amazing AI and robot products to emerge in the not-too-distant future—all thanks to good ol’ analog.

Shahin Farshchi, an IEEE Member, is a partner at Lux Capital, where he invests in transportation, robotics, AI, and space startups. Follow him on Twitter: @farshchi.