The history of AI is often told as the story of machines getting smarter over time. What’s lost in that narrative is the human element: how intelligent machines are designed, trained, and powered by human minds and bodies.

In this six-part series, we explore that human history of AI—how innovators, thinkers, workers, and sometimes hucksters have created algorithms that can replicate human thought and behavior (or at least appear to). While it can be exciting to be swept up by the idea of superintelligent computers that have no need for human input, the true history of smart machines shows that our AI is only as good as we are.

Part 5: Franglen’s Admissions Algorithm

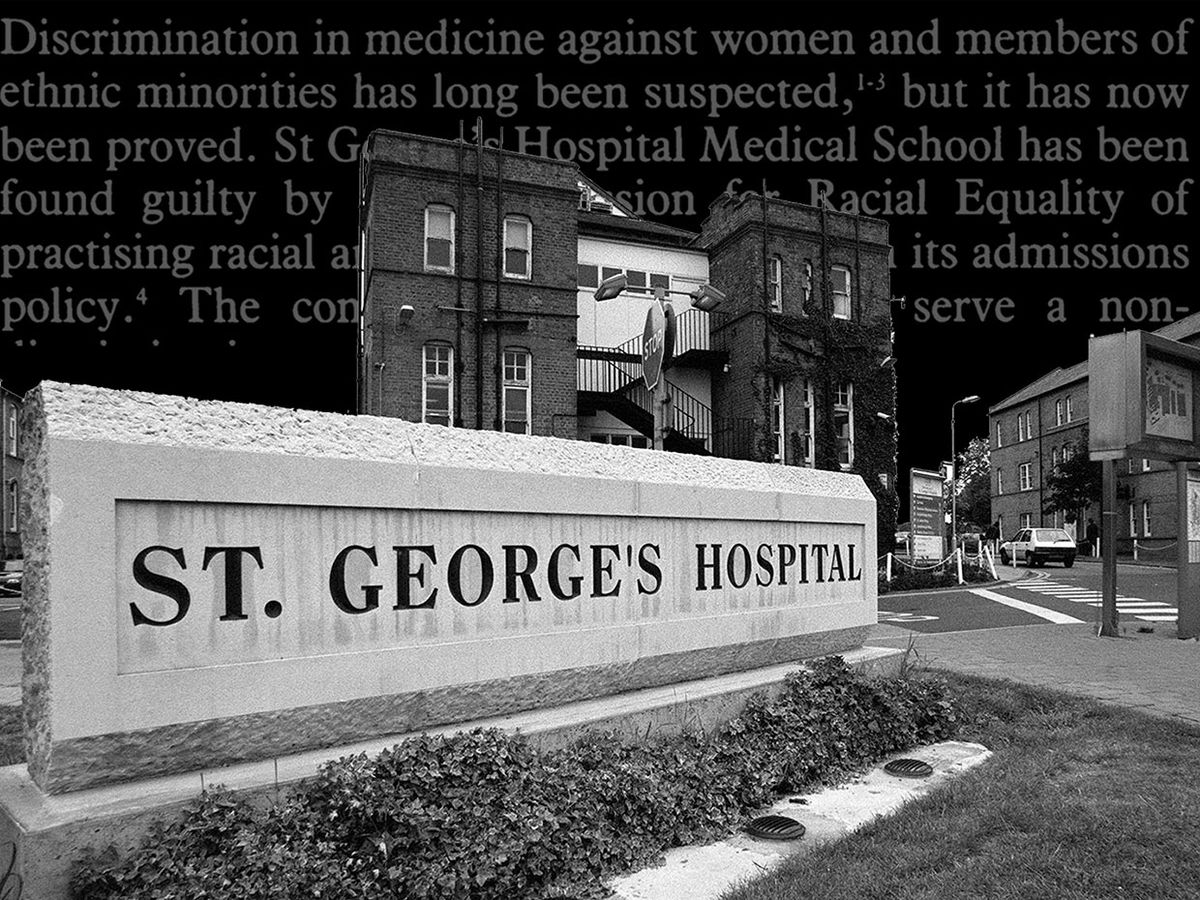

In the 1970s, Dr. Geoffrey Franglen of St. George’s Hospital Medical School in London began writing an algorithm to screen student applications for admission.

At the time, three-quarters of St. George’s 2,500 annual applicants were rejected by the academic assessors based on their written applications alone, and never reached the interview stage. About 70 percent of those who did make it past the initial screening went on to be offered places at the medical school. So that initial “weeding out” round was crucial.

Franglen was the vice-dean of St. George’s and himself an admissions assessor. Reading through the applications was a time-consuming task that he felt could be automated. He studied the processes by which he and his colleagues screened students, then wrote a program that, in his words, “mimic[ked] the behavior of the human assessors.”

While Franglen’s main motivation was to make admissions processes more efficient, he also hoped that it would remove inconsistencies in the way the admissions staff carried out their duties. The idea was that by ceding agency to a technical system, all student applicants would be subject to precisely the same evaluation, thus creating a fairer process.

In fact, it proved to be just the opposite.

Franglen completed the algorithm in 1979. That year, student applicants were double-tested by the computer and human assessors. Franglen found that his system agreed with the gradings of the selection panel 90 to 95 percent of the time. For the medical school’s administrators, this result proved that an algorithm could replace the human selectors. By 1982, all initial applications to St. George’s were being screened by the program.

Within a few years, some staff members had become concerned by the lack of diversity among successful applicants. They conducted an internal review of Franglen’s program and noticed certain rules in the system that weighted applicants on the basis of seemingly irrelevant factors, like place of birth and name. But Franglen assured the committee that these rules were derived from data collected from previous admission trends and would have only marginal impacts on selections.

In December 1986, two senior lecturers at St. George’s caught wind of this internal review and went to the U.K. Commission for Racial Equality. They had reason to believe, they informed the commissioners, that the computer program was being used to covertly discriminate against women and people of color.

The commission launched an inquiry. It found that candidates were classified by the algorithm as “Caucasian” or “non-Caucasian” on the basis of their names and places of birth. If they were classified as non-Caucasian, the selection process was weighted against them. In fact, simply having a non-European name could automatically take 15 points off an applicant’s score. The commission also found that female applicants were docked three points, on average. As many as 60 applicants each year may have been denied interviews on the basis of this scoring system.
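To see how fixed deductions like these can decide who reaches an interview, here is a minimal Python sketch. It is not Franglen’s program, whose actual rules are not reproduced in this article. The 15-point and 3-point deductions come from the commission’s findings described above; everything else is an illustrative assumption, including the base scores, the interview cutoff of 60, the convention that a higher score is better, and the treatment of the three-point average as a flat deduction.

```python
from dataclasses import dataclass

# Deductions reported by the commission's inquiry, as described in the article.
NAME_PENALTY = 15    # applied when a name was classified as non-European
GENDER_PENALTY = 3   # reported as an average; treated as a flat deduction here

# Hypothetical: the article does not state the real cutoff or score scale.
INTERVIEW_CUTOFF = 60


@dataclass
class Applicant:
    label: str
    base_score: int           # hypothetical score from academic factors only
    non_european_name: bool
    female: bool


def screened_score(applicant: Applicant) -> int:
    """Apply the two deductions to the applicant's base score."""
    score = applicant.base_score
    if applicant.non_european_name:
        score -= NAME_PENALTY
    if applicant.female:
        score -= GENDER_PENALTY
    return score


def gets_interview(applicant: Applicant) -> bool:
    """Assume higher is better and the cutoff is inclusive."""
    return screened_score(applicant) >= INTERVIEW_CUTOFF


# Four hypothetical applicants with identical academic records.
applicants = [
    Applicant("European name, male", 70, False, False),
    Applicant("European name, female", 70, False, True),
    Applicant("non-European name, male", 70, True, False),
    Applicant("non-European name, female", 70, True, True),
]

for a in applicants:
    print(f"{a.label}: score {screened_score(a)}, interview: {gets_interview(a)}")
```

Under these assumed numbers, four applicants with the same academic record end up with scores of 70, 67, 55, and 52, and only the first two clear the cutoff. The rule is also fully explicit in the code, which is what made the discrimination at St. George’s provable.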

At the time, gender and racial discrimination was rampant in British universities—St. George’s was only caught out because it had enshrined its biases in a computer program. Because the algorithm was verifiably designed to give lower scores to women and people with non-European names, the commission had concrete evidence of discrimination.

The commission found St. George’s guilty of practicing discrimination in its admissions policy, but the school escaped without significant consequences. In a mild attempt at reparations, it tried to contact people who might have been unfairly discriminated against, and three previously rejected applicants were offered places at the school. The commission noted that the problem at the medical school was not only technical, but also cultural. Many staff members viewed the admissions machine as unquestionable, and therefore didn’t take the time to ask how it distinguished between students.

At a deeper level, the algorithm was sustaining the biases that already existed in the admissions system. After all, Franglen had tested the machine against the human assessors and found that it agreed with their gradings 90 to 95 percent of the time. But by codifying the human selectors’ discriminatory practices into a technical system, he was ensuring that these biases would be replayed in perpetuity.

The case of discrimination at St. George’s got a good deal of attention. In the fallout, the commission mandated the inclusion of race and ethnicity information in university admission forms. But that modest step did nothing to stop the insidious spread of algorithmic bias.

Indeed, as algorithmic decision-making systems are increasingly rolled out into high-stakes domains, like health care and criminal justice, the perpetuation and amplification of existing social biases based on historical data has become an enormous concern. In 2016, the investigative journalism outfit ProPublica showed that software used across the United States to predict future criminals was biased against African-Americans. More recently, researcher Joy Buolamwini demonstrated that Amazon’s facial recognition software has a far higher error rate for dark-skinned women than for light-skinned men.

While machine bias is fast becoming one of the most discussed topics in AI, algorithms are still often perceived as inscrutable and unquestionable objects of mathematics that produce rational, unbiased outcomes. As AI critic Kate Crawford says, it’s time to recognize that algorithms are a “creation of human design” that inherit our biases. The cultural myth of the unquestionable algorithm often works to conceal this fact: Our AI is only as good as we are.

This is the fifth installment of a six-part series on the untold history of AI. Part 4 told the story of the DARPA director who dreamed of cyborg intelligence in the 1960s. Come back next Monday for Part 6, which concludes the series with a close look at Amazon’s Mechanical Turkers.