Chiplets are a way to make systems that perform a lot like they are all one chip, despite actually being composed of several smaller chips. They’re widely seen as one part of the computing industry’s plan to keep systems performing better and better despite the fact that traditional Moore’s Law scaling is nearing its end. Proponents say the benefits will include more easily specialized systems and higher yield, among other things. But more importantly, they might lead to a big shift in the fabless semiconductor industry, where the targeted end product might become a small, specialized chiplet meant to be combined in the same package with both a general-purpose processor and many other specialty chiplets. Ramune Nagisetty, a principal engineer and director of process and product integration at Intel’s technology development group in Oregon, has been working to help develop an industry-wide chiplet ecosystem. She told IEEE Spectrum about that and the Intel technologies involved on 21 March 2019.

Ramune Nagisetty on:

- What chiplets are

- Intel’s EMIB solution

- Integration engineering problems

- The need for new testing tech and standards

- Fabless startups in a chiplets world

IEEE Spectrum: Would you define what chiplets are and say why they’re becoming important?

Ramune Nagisetty: A chiplet is a physical piece of silicon. It encapsulates an IP (intellectual property) subsystem. And it’s designed to integrate with other chiplets through package-level integration, typically through advanced package integration and through the use of standardized interfaces.

Why are they becoming important? It’s because there’s no one-size-fits-all approach that works anymore. We have an explosion in the different types of computing and workloads, so there are a lot of different architectures that are emerging to support these different types of computing models. Basically, heterogeneous integration of best-in-class technology is a way to continue the Moore’s law performance trends.

IEEE Spectrum: When you talk about heterogeneous technologies, do you also mean semiconductor materials other than silicon?

Nagisetty: I would say it’s not necessarily just silicon, there will be other types of semiconductor technologies as well. You could include things like germanium-based technologies, or III-Vs. In the future, we will have more varied types of semiconductor technologies. Today, it’s primarily silicon.

Even with only silicon-based chiplets, these will certainly be at different technology nodes. They are usually tuned for better performance in different areas, whether it’s digital, analog, RF, or, for example, memory technologies.

One of the things that’s really driving this is certainly the integration of memory. High-bandwidth memory, or HBM, is essentially one of the first proof points of in-package integration of heterogeneous silicon. Memory is essentially the first type of heterogeneous integration that’s really gaining steam using advanced packaging.

IEEE Spectrum: Intel’s way to connect chiplets is called the Embedded Multi-die Interconnect Bridge. Please tell us what it is and how it works.

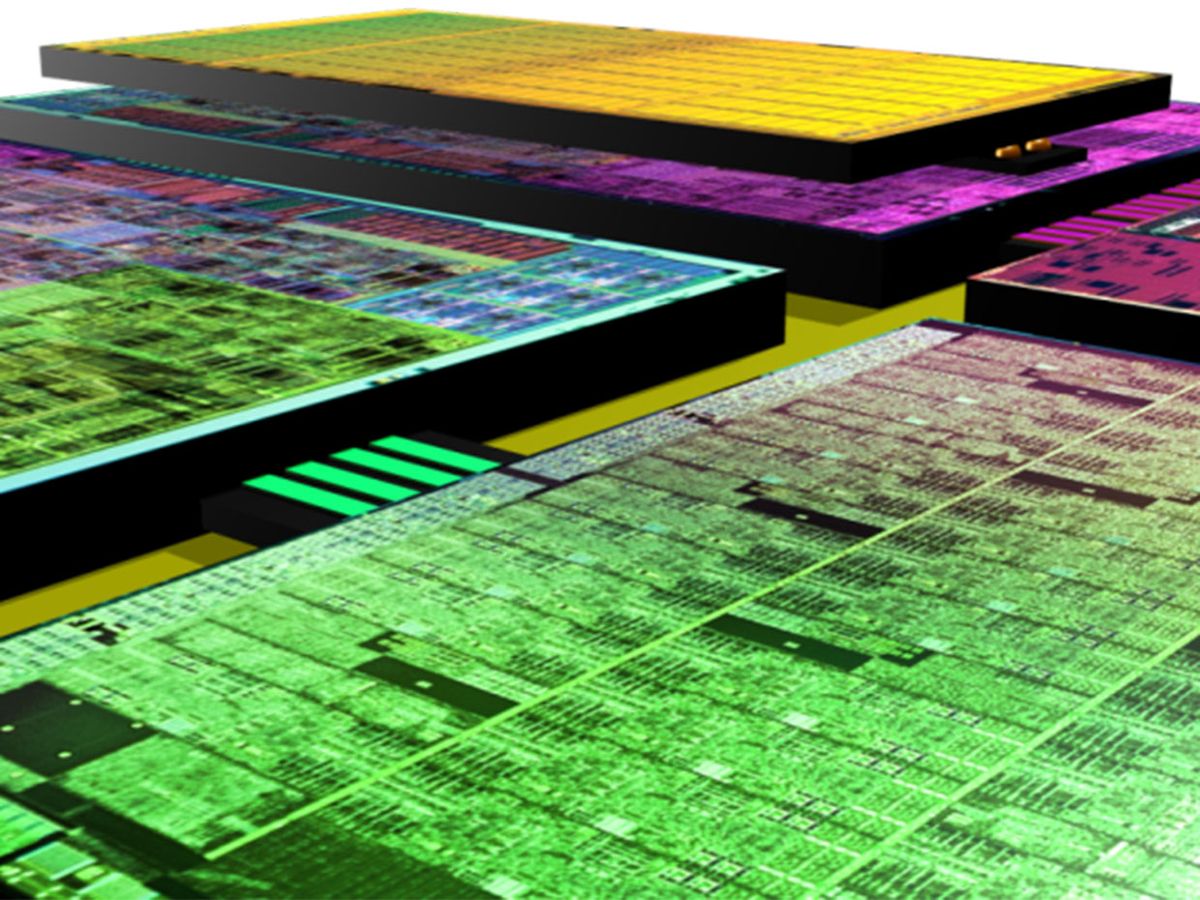

Nagisetty: You can think of it as a high-density bridge connecting two chiplets together; that’s essentially the best way to describe it. I think many people are familiar with the idea of using a silicon interposer as an advanced packaging substrate. [Silicon interposers are silicon substrates with dense interconnects and through-silicon vias built into them. They enable high-bandwidth connections between chips.]

What an EMIB is, is essentially a very tiny piece of a silicon interposer that has very high-density interconnects and what we call microbumps at a much higher density than what you would find on a standard packaging substrate. [Microbumps are tiny balls of solder that link a chip to another chip or to a package's high-density interconnects.]

That EMIB or bridge is essentially embedded into a standard packaging substrate. With the EMIB, you’re essentially able to get the highest interconnect density exactly where you need it, and then you can use a standard packaging substrate for the rest of the interconnect.

There are a lot of benefits to doing it this way. One of them is cost, because the cost of a silicon interposer is proportional to the area of that interposer. So, in this case, we really localized the high-density interconnect to the area where it’s needed. And there are also benefits in terms of overall insertion loss (the attenuation of the signal due to material properties) from using a standard packaging substrate instead of a silicon interposer.

IEEE Spectrum: What has Intel used the EMIB for so far?

Nagisetty: Intel actually has several demonstrated chiplet solutions, and I’d like to talk about each one of them because I think it helps to shed light on what I think are the three different directions that chiplets will be going.

Intel has two solutions that are based on EMIB currently, but they’re very different. The first one is Kaby Lake-G, and that’s basically where we integrated an AMD Radeon GPU and high-bandwidth memory [HBM] with our CPU chip. We used EMIB to integrate the GPU and HBM, using an HBM interface inside the package. And then we used PCI Express, which is a standard circuit-board-level interface, inside the package to integrate the GPU and the CPU.

What is really interesting about that solution is that we’re using externally developed silicon from several foundries and we’re using common industry-standard interfaces (HBM and PCI Express) to create a best-in-class product. In this case, we took a component (GPU with HBM) that was able to stand alone on a board, and we integrated it in a package. PCI Express is designed to send signals over long distances that are more typical of a board. When you put it inside a package, it’s actually not necessarily an optimal solution, but it is a quick solution because we’re utilizing interfaces that already exist in the industry.

IEEE Spectrum: What advantage do you gain from this integration?

Nagisetty: In this case, what we’ve gained is a huge form factor, or size, improvement. For mobile gaming, fitting inside a laptop design is really important. Essentially, you’re always trading form factor, power, and performance off of each other. So in this case, it’s really optimized for having a best-in-class solution in the smallest size possible.

IEEE Spectrum: What’s the other chiplet example you wanted to describe?

Nagisetty: The next thing I’d like to talk about is the Stratix 10 FPGA, which was actually Intel’s first demonstration of the EMIB solution. The Stratix 10 has an Intel FPGA at the center of it. And then it has six chiplets surrounding the FPGA. Four of them are high-speed transceiver chiplets, and two of them are high-bandwidth memory chiplets, and they are all assembled in a package. This example integrates six different technology nodes from three different foundries; so it’s further proof of interoperability in terms of different foundries.

The second thing is that it uses an industry-standard die-to-die interface called AIB, which is Intel’s Advanced Interface Bus. It was created for this product and is essentially the seed of an industry standard for a high-bandwidth, logic-to-logic interconnect inside the package. So HBM is the first standard for memory integration, and AIB is the first standard for logic integration.

AIB is an interface that can be used both with Intel’s EMIB solution and with competing solutions such as silicon interposers. But what’s really great about this interface and the FPGA at the center of this ecosystem is the mix-and-match approach that’s possible. There are many different companies and many different universities as well that are creating chiplets using AIB through an effort sponsored by DARPA’s CHIPS (Common Heterogeneous Integration and IP Reuse Strategies) program.

IEEE Spectrum: What’s the third example?

Nagisetty: The third example I want to talk about is Intel’s Foveros solution, which is our logic-on-logic die stacking, and that was first talked about in December and then the product, called Lakefield, was announced in January at CES. This is chiplet integration, but instead of horizontal stacking, it’s vertical stacking.

This type of integration allows you to get extremely high bandwidth between the two chiplets. But it’s based on internal, proprietary interfaces and the two die are essentially co-designed because they have to be floor-planned together in order to manage things like power delivery and thermals.

For logic-on-logic die stacking, it’ll probably be a much longer timeframe before we see standards emerging in the industry area, because the die are essentially co-designed together. Memory stacking on top of logic will probably be the first place where any kind of standards develop for three-dimensional stacking.

IEEE Spectrum: When designing for stacked chips, what are some of the things that you have to watch out for?

Nagisetty: Thermal issues are the major one. As you can imagine, die stacking exacerbates any kind of thermal issues. So we really need to do careful floor planning to accommodate thermal hotspots. We also need to consider the architecture of the entire system. The implications of using 3D stacking will drive all the way down to architectural decisions, not just the physical architecture, but the entire CPU or GPU and system architecture.

Also, if we want to have any kind of interoperability, we need to have interoperable material systems. There is a large range of things that need to be done in order to support interoperability, but thermals, I think, are the top challenge, and power delivery and power management are a challenge as well.

IEEE Spectrum: What sorts of standards are still needed to advance chiplets?

Nagisetty: Industry standards around testing are really important. Typically, we do test with fully packaged parts. We need to be able to put “known good die” (chiplets that we know work) inside a package so that we don’t inadvertently end up with yield fall-out due to a single chiplet being bad and the other chiplets being good. So we need a strategy for known good die and we need a strategy for test.

Something else that we need is multi-vendor support for power and thermal management. That means being able to have hooks into all of the integrated chiplets in order to both manage power and manage thermals.

And then in terms of electrical interoperability, AIB, the interface that we released last July, really is only a standard at what we call the PHY level, which is the electrical and physical interface. But we need to have standards that go all the way up through the upper-layer protocols as well.

I guess the last one, and it might seem obvious, is a mechanical standard—literally the placement of microbumps and routing between microbumps needs standards to support interoperability.

IEEE Spectrum: Can you tell us a bit more about the “known good die” problem, and how testing is going to have to be different with chiplets?

Nagisetty: Well, it’s really pretty different, because the ability to completely guarantee known good die is generally based on hot testing of a packaged part. So with chiplets now you have to be able to essentially test bare die (chips before they’ve been packaged) at the same level of capability. It’s easier to test packaged parts, easier to provide power delivery, and so forth. When you do this with bare die, you have challenges with probing because you have extra-fine pads that you have to set the probes on.

The other thing is that anything that you need in order to test that die independently, whether it’s a clock or any kind of other collateral to be able to fully test each chiplet, needs to be designed into the chiplet. The chiplets can’t be codependent on each other in order to test them. They have to be individually fully testable before they’re packaged.

There is work going on in this area. And it’s extremely important because, you can imagine, by the time you have a packaged part with many chiplets inside it, there’s a lot of value in that packaged part. And if any of those chiplets are bad and are not redundant or fixable, then essentially you’ve thrown out a lot of other chiplets that have value.

IEEE Spectrum: Isn’t one of the proposed benefits of chiplets that they lead to better yield because they are physically smaller and therefore less error-prone?

Nagisetty: Chiplets do provide a massive yield improvement and that’s one reason to use them, but it’s certainly not the only one. The yield improvement definitely hinges on being able to test those die before you package them.
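To illustrate why the yield benefit depends on testing die before packaging, here is a minimal Python sketch. It assumes a simple Poisson yield model (yield = exp(-area × defect density)), a common textbook approximation that is not taken from the interview. The function names, the 4 cm² of total silicon, and the defect density of 0.5 per cm² are hypothetical values chosen only for illustration, and the sketch ignores assembly yield and the cost of testing itself.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson model (an assumption)."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_system(
    total_area_cm2: float,
    n_chiplets: int,
    defects_per_cm2: float,
    known_good_die: bool,
) -> float:
    """Expected silicon area (cm^2) fabricated per working system.

    The system's total area is split evenly across n_chiplets dies.
    """
    chiplet_area = total_area_cm2 / n_chiplets
    y = poisson_yield(chiplet_area, defects_per_cm2)
    if known_good_die:
        # Each die is tested before packaging, so only bad dies are discarded:
        # on average 1/y dies are fabricated per good die.
        return n_chiplets * chiplet_area / y
    # No pre-package test: the whole package is lost if any one die is bad,
    # so the package yield is y ** n_chiplets.
    return total_area_cm2 / (y ** n_chiplets)


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    area, density = 4.0, 0.5
    cases = [
        ("monolithic die", 1, False),
        ("4 chiplets, untested", 4, False),
        ("4 chiplets, known good die", 4, True),
    ]
    for label, n, kgd in cases:
        cost = silicon_per_good_system(area, n, density, kgd)
        print(f"{label}: {cost:.2f} cm^2 of silicon per good system")
```

Under these assumptions, packaging untested chiplets wastes as much silicon as the monolithic die, because a package is lost whenever any one chiplet is bad. Only when each die is screened first does the smaller die size translate into less wasted silicon.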

IEEE Spectrum: How will chiplets change the way things are designed?

Nagisetty: High-bandwidth memory integration is really the first proof point, and it is already being used widely in GPUs and also with AI processors in high-performance systems. So in this case, chiplets and package integration of memory are already changing the way that the chips are designed and integrated.

Co-design of chiplets is certainly going to be an important area that will develop. What I really think is going to happen is we’re going to have multiple vendors supplying chiplets. And so, what are the architectural boundaries? And how do we design best-in-class products based on the building blocks that we have? We’re really only at the beginning of what I think is a revolution. A new industry ecosystem will develop around this.

Being able to comprehend the kind of requirements needed from the different chiplet vendors and being able to communicate across those boundaries is going to be really important. Simulation tools and methodologies will be important to work across what we think of as traditional boundaries. So, yeah, it will change the way we design chips or design packaged parts, and it will definitely change how the semiconductor ecosystem evolves over time.

IEEE Spectrum: Can you say a little bit more about that ecosystem? If you’re a fabless startup 10 years from now, what’s that look like? How does the chiplet revolution potentially change what these businesses actually try to do?

Nagisetty: I think that this is actually a really exciting time for fabless startups, precisely because of the possibility of being able to create a smaller IP subsystem that could be extremely valuable when it’s integrated using a chiplet approach.

One of the goals of the DARPA CHIPS program is to essentially support IP [intellectual property] reuse and also to reduce the overall nonrecurring engineering cost of producing a product. The chiplet approach allows a fabless startup to focus on the piece of the IP that they’re extremely good at, and not necessarily worry about the rest.

I do think of the chiplet approach as a kickstart for fabless startups, and this is also part of a bigger thing that DARPA is sponsoring, which is the ERI, or the Electronics Resurgence Initiative, because as the number of companies that are able to support leading-edge semiconductor technology development has dropped over the years, the ability for small companies to innovate in that market has suffered. This essentially opens up the path for much more innovation, and specifically from fabless startups. I think that this is going to be a platform for innovation moving forward, and it’ll be very important for the industry. And that’s why I think it’s a super exciting time in terms of the semiconductor industry, because we’re going to see a lot of change and a lot of opportunity develop from this.

IEEE Spectrum: How fast will chiplets develop until we get to the point where startups and smaller companies can participate effectively?

Nagisetty: So there are a couple things that have happened recently. There’s a new industry forum called ODSA, which is the Open Domain-Specific Architectures project. And it’s becoming part of an industry-wide collaboration called the Open Compute Project. Companies are coming to ODSA meetings and talking about their interests in using package-level integration and a chiplet approach. There’s also the recent announcement of Compute Express Link [CXL] as a coherent interface for accelerators.

It seems like a lot of things are happening pretty fast in support of this new kind of ecosystem, based on accelerators and based on package integration. I can’t really look into a crystal ball and tell you exactly how long it will take. But I suspect it won’t take that long, maybe a few years. And in some cases, we’re seeing early proof points that are already happening.

IEEE Spectrum: Will chiplets need some sort of intelligence integrated into the substrate or package eventually?

Nagisetty: Well, I think we’ll just see how this evolves. I think it’s really an architectural decision. I think there is a wide range of possibilities here. And when you talk about putting intelligence into the substrate, you may have one substrate that is intelligent stacked on another substrate that is purely an interconnect. So there will be hierarchies and then maybe even a kind of hybridized type of substrate where you have some sort of intelligence, but maybe in a much older technology node that’s really optimized for something entirely different.

IEEE Spectrum: So what’s next in chiplet tech development at Intel?

Nagisetty: Well, what I can tell you is that the things that we’ve already shown in the market are the leading examples of how we will be creating virtually all of our products in the future. We have a lot of runway with each of these kinds of integration schemes, and we’re just starting out in this direction. But with these technologies in our game plan, we’re really, really well positioned to move forward in the coming generations.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.