Intel has passed a key milestone while running alongside Google and IBM in the marathon to build quantum computing systems. The tech giant has unveiled a superconducting quantum test chip with 49 qubits—enough qubits to possibly enable quantum computing that begins to exceed the practical limits of modern classical computers.

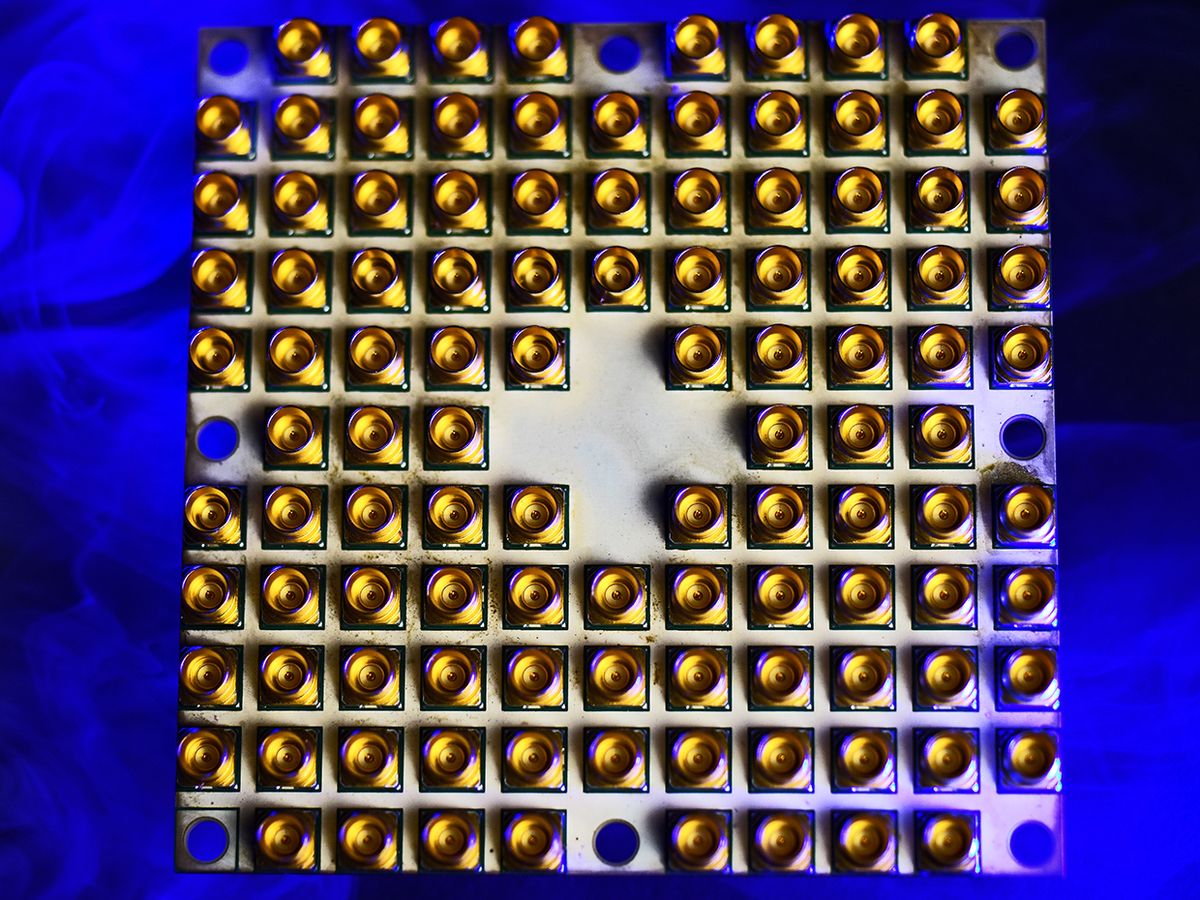

Intel’s announcement about the design and fabrication of its new 49-qubit superconducting quantum chip, code-named Tangle Lake, came in a keynote speech by Intel CEO Brian Krzanich at CES 2018, the annual consumer electronics trade show in Las Vegas. Google and IBM researchers have been targeting the same milestone because it could usher in so-called quantum supremacy, the point at which a quantum computer can outperform classical computers on at least some tasks.

But Michael Mayberry, corporate vice president and managing director of Intel Labs, chose to describe quantum supremacy in different terms. “Around 50 qubits is an interesting place from a scientific point of view, because you’ve reached the point where you can’t completely predict or simulate the quantum behavior of the chip,” he says.
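A rough back-of-the-envelope calculation helps show why about 50 qubits is where classical simulation starts to break down. The sketch below assumes a brute-force state-vector simulator that stores one double-precision complex amplitude (16 bytes) for each of the 2^n basis states. That storage model and the function names are illustrative assumptions, not something Intel described, and smarter simulation methods can lower the cost for particular circuits.

```python
# Assumption: each amplitude is stored as a complex128 value (16 bytes).
BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(num_qubits: int) -> int:
    """Bytes needed to hold all 2**n amplitudes of an n-qubit state."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def format_binary_size(num_bytes: int) -> str:
    """Render a byte count using binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    size = float(num_bytes)
    for unit in units:
        # Stop at the first unit that keeps the number below 1024,
        # or at the largest unit available.
        if size < 1024 or unit == units[-1]:
            return f"{size:g} {unit}"
        size /= 1024


if __name__ == "__main__":
    for n in (17, 49, 50):
        print(f"{n} qubits: {format_binary_size(state_vector_bytes(n))}")
```

Under these assumptions, a 17-qubit state fits in a couple of mebibytes, while a 49-qubit state needs several pebibytes of memory, and every added qubit doubles the requirement.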

This newest announcement puts Intel in good company in the quantum computing marathon. In November 2017, IBM researchers announced that they had built a 50-qubit quantum chip prototype. Google, for its part, had previously talked about its ambition to build a 49-qubit superconducting quantum chip before the end of 2017.

It’s still going to be a long road before anyone will realize the commercial promise of quantum computing, which leverages the idea of quantum bits (qubits) that can represent more than one information state at the same time. Intel’s road map suggests researchers could achieve 1,000-qubit systems within five to seven years. That sounds like a lot until you realize that many experts believe quantum computers will need at least 1 million qubits to become useful from a commercial standpoint.

But practical quantum computing also requires much more than ever-larger arrays of qubits. One important step involves implementing “surface code” error correction that can detect and correct for disruptions in the fragile quantum states of individual qubits. Another step involves figuring out how to map software algorithms to the quantum computing hardware. A third crucial issue involves engineering the local electronics layout necessary to control the individual qubits and read out the quantum computing results.

The 49-qubit Tangle Lake chip builds upon the tech giant’s earlier work with 17-qubit arrays that have the minimum number of qubits necessary to perform surface-code error correction. Intel has also developed packaging to prevent radio-frequency interference with the qubits, and uses so-called flip-chip technology that enables smaller and denser connections to get signals on and off the chips. “We’re focused on a system, not simply a larger qubit count,” Mayberry explains.
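The 17-qubit figure lines up with a commonly studied surface-code layout. The sketch below assumes the "rotated" surface code, in which a patch of code distance d uses d² data qubits and d² - 1 measurement qubits. The article does not say which layout Intel uses, so the formula choice and the function names here are illustrative assumptions.

```python
def rotated_surface_code_qubits(distance: int) -> dict:
    """Physical qubit counts for one rotated surface-code patch.

    Assumption: d*d data qubits plus d*d - 1 measurement (ancilla) qubits.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    data = distance ** 2
    ancilla = distance ** 2 - 1
    return {"data": data, "ancilla": ancilla, "total": data + ancilla}


def correctable_errors(distance: int) -> int:
    """Number of arbitrary single-qubit errors a distance-d code can correct."""
    return (distance - 1) // 2


if __name__ == "__main__":
    for d in (3, 5, 7):
        counts = rotated_surface_code_qubits(d)
        print(f"d={d}: {counts['total']} qubits, "
              f"corrects {correctable_errors(d)} error(s)")
```

Under this assumption, distance 3 gives 17 qubits, the smallest patch that can correct a single error. The same formula happens to give 49 for distance 5, though the article does not state that Tangle Lake is laid out this way.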

The superconducting qubit architecture involves loops of superconducting metal that require extremely cold temperatures of about 20 millikelvin (-273 degrees C). One Intel “stretch goal” is to raise those operating temperatures in future systems.

Overall, Intel has been hedging its bets on the different possible paths that could lead to practical quantum computing. The tech giant has partnerships with QuTech, a quantum research institute in the Netherlands, and with many smaller companies to build and test different hardware or software-hardware configurations for quantum computing. The company has also evenly split its quantum computing investment between the superconducting qubit architecture and another architecture based on spin qubits in silicon.

Such spin qubits are generally smaller than superconducting qubits and could potentially be manufactured in ways similar to how Intel and other chip-makers manufacture conventional computer transistors. That translates into a potentially big advantage for scaling up to thousands or millions of qubits, even though development of superconducting qubits is generally further along than that of spin qubits. On the spin-qubit front, Intel has already figured out how to fabricate the devices using its 300-mm silicon wafer manufacturing processes.

In addition to quantum computing, Intel has been making steady progress in developing neuromorphic computing that aims to mimic how biological brains work. During the 2018 CES talk, Intel CEO Krzanich provided an update on Loihi, the company’s neuromorphic research chip that was first unveiled in October 2017. Such chips could provide the specialized hardware counterpart for the deep-learning algorithms that have dominated modern AI research.

Loihi’s claim to fame is that it combines deep-learning training and inference on a single chip, which supposedly yields faster computations with greater power efficiency, Mayberry says. That could be a big deal because deep-learning algorithms typically take a long time to train on new data sets before they can make new inferences.

Intel researchers have recently been testing the Loihi chip by training it on tasks such as recognizing a small set of objects within seconds. The company has not yet pushed the capabilities of the neuromorphic chip to its limit, Mayberry says. Still, he anticipates neuromorphic computing products potentially hitting the market within two to four years, if customers can run their applications on the Loihi chip without requiring additional hardware modifications.

“Neither quantum nor neuromorphic computing is going to replace general purpose computing,” Mayberry says. “But they can enhance it.”

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications such as Scientific American, Discover, Popular Science, and others. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.