The 6th annual ACM/IEEE Conference on Human-Robot Interaction just ended in Switzerland this week, and Georgia Tech is excited to share three of their presentations showcasing the latest research in how humans and robots relate to each other. Let's start from the top:

How Can Robots Get Our Attention?

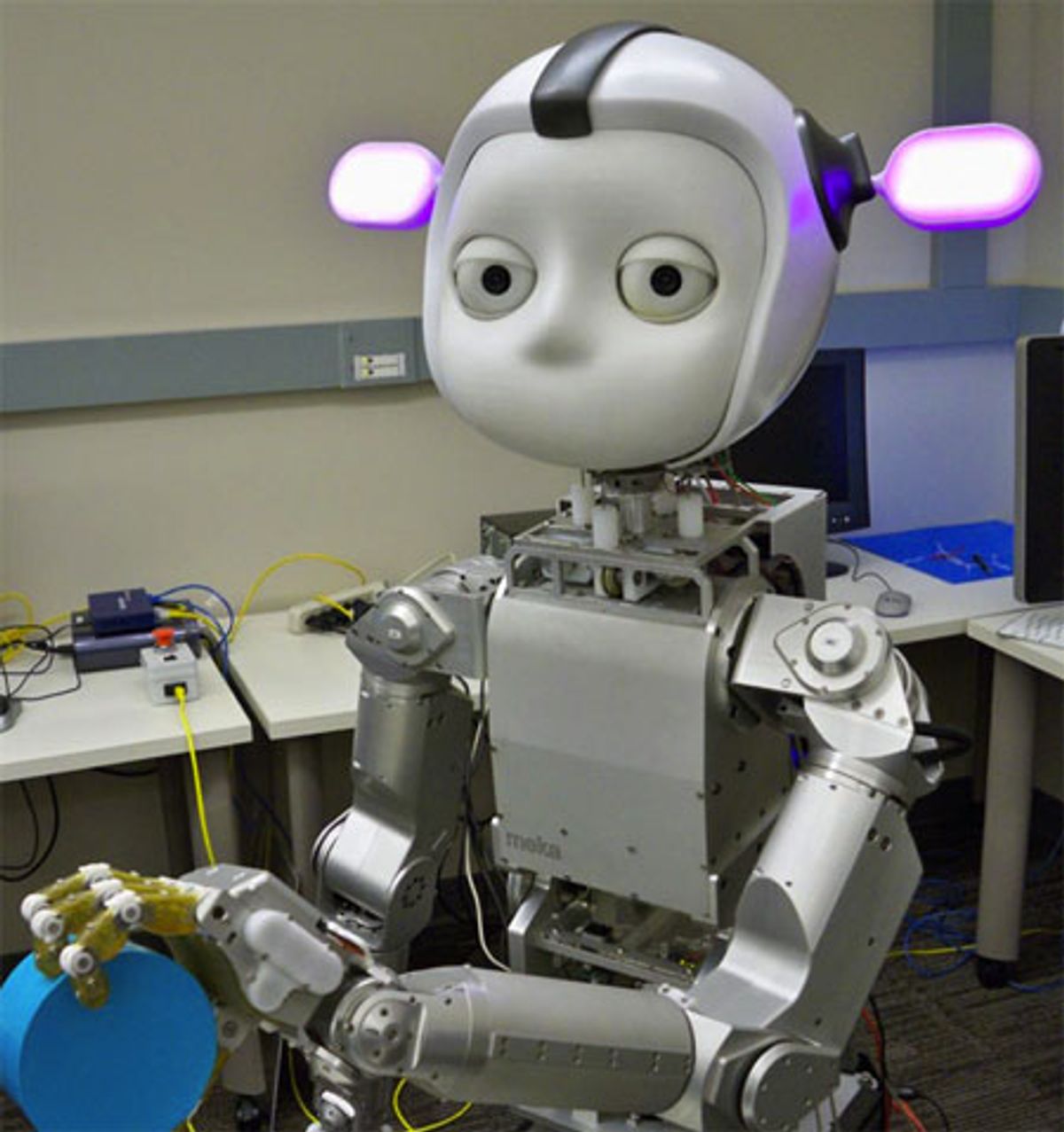

Humans rely on lots of fairly abstract social conventions when we communicate, and most of them are things that we don't even think about, like gaze direction and body orientation. Georgia Tech is using their robot, Simon, to not just try to interact with humans in the same ways that humans interact with each other, but also to figure out how to tell when a human is directing one of these abstract social conventions at the robot.

It's a tough thing, because natural interaction with other humans is deceptively subtle, meaning that Simon needs to be able to pick up on abstract cues in order to minimize that feeling of needing to talk to a robot like it's a robot, i.e. slowly and loudly and obviously. Gesture recognition is only the first part of this, and the researchers are hoping to eventually integrate lots of other perceptual cues and tools into the mix.

More info here.

How Do People Respond to Being Touched by a Robot?

This expands on previous Georgia Tech research that we've written about; the robot in the vid is Cody, our favorite sponge-bath robot. While personally, I take every opportunity to be touched by robots whenever and wherever they feel like, other people may not necessarily be so receptive. As robots spend more time in close proximity to humans helping out with tasks that involve touch, it's important that we don't start to get creeped out or scared.

Georgia Tech's research reveals that what humans perceive a robot's intent to be is important, which is a little weird considering that intent (or at least, perceived intent) is more of a human thing. Cody doesn't have intent, per se: it's just got a task that it executes, although I suppose you could argue that fundamentally, that constitutes intent. In this case, when people thought that Cody was touching their forearm to clean it, they were more comfortable than when they thought that Cody was touching their forearm (in the exact same way, mind you) just to comfort them. Curiously, people also turn out to be slightly less comfortable when the robot specifically states its intent before performing any actions, which is the opposite of what I would have expected. Geez, humans are frustratingly complex.

I definitely appreciate where Georgia Tech is going with this research, and why it's so important. As Professor Charlie Kemp puts it:

"Primarily people have been focused on how can we make the robot safe, how can we make it do its task effectively. But that’s not going to be enough if we actually want these robots out there helping people in the real world."

More info here.

Teaching Robots to Move Like Humans

This is all about making robots seem more natural and approachable, which is one of those things that might seem a little less important than it is, since by virtue of reading Automaton, you might be a lot more familiar (and comfortable) with robots than most people are. The angle Georgia Tech is taking here is to first try and figure out how to quantify what "human-like" means, in order to better determine what movements are more "human-like" and what movements are less "human-like."

Making more human-like movements is important for a couple of reasons. First, it's easier to understand what a robot wants or is doing when it makes movements like a human would. And second, one of the most identifiable things about robots is the fact that they're all robot-y: they tend to make precise and repetitive movements, which might be very efficient but aren't very human. Humans are a little more random, and giving robots some of that randomness, researchers say, may help people "forget that this is a robot they’re interacting with."

More info here.

Thanks Travis!

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.