Insects like bees, termites, and ants have somehow figured out a whole repertoire of extraordinarily complex cooperative behaviors, which is all the more remarkable considering that their brains are the size of, uh, something very small. They team up to build structures, forage for food, and move enormous amounts of material relative to their size. All of this cleverness has evolved over time, Darwinian selection-style, but without waiting around for however many millions of years that took, it’s hard to see it in action.

In a paper published this month in PLOS Computational Biology, researchers used identical simulated robots to watch behavioral evolution in action, and, remarkably, the robots figured out how to organize themselves into a specialized division of labor completely on their own.

Social insects get the job done by splitting large, complex tasks that would be very difficult for any one individual to complete on its own into little chunks that individuals can specialize in. Let’s look at leafcutter ants (since that’s the example the researchers use); see how cute they are:

What this video doesn’t show is the level of task partitioning going on. There are “dropper” ants that nip bits off the leaves and drop them into a pile, and then “collector” ants that pick up the leaf bits from that pile and carry them back to the nest. Some leafcutter ants do the generalist thing, cutting leaves and then carrying them all the way back to the nest themselves, but when the ants are working high up in a tree, task partitioning is much more efficient: the droppers can let gravity carry the leaves down for them, so they don’t have to make trips to the ground and back.
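The gravity argument can be made concrete with a toy throughput model. This is a sketch under stated assumptions, not the researchers’ model: every timing is a made-up number in arbitrary units, and `ToyForagingTask`, `generalist_rate`, `partitioned_rate`, and `best_partition` are hypothetical names. Generalists pay for the trunk twice per leaf; in the partitioned version droppers stay aloft, gravity moves each leaf to the base for free, and collectors pay a small hypothetical `pickup_time` to grab leaves from the pile.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyForagingTask:
    """Hypothetical timings in arbitrary units; not taken from the paper."""
    cut_time: float     # time to detach one leaf
    climb_time: float   # one-way trip along the trunk (0 = leaves at ground level)
    ground_time: float  # one-way trip between the trunk base and the nest
    pickup_time: float  # extra handling to find and grab a dropped leaf


def generalist_rate(task: ToyForagingTask, n_robots: int) -> float:
    """Leaves per time unit when every robot cuts and carries its own leaf."""
    # One full cycle: cut, climb down, walk to the nest, walk back, climb up.
    cycle = task.cut_time + 2 * task.climb_time + 2 * task.ground_time
    return n_robots / cycle


def partitioned_rate(task: ToyForagingTask, n_droppers: int, n_collectors: int) -> float:
    """Leaves per time unit when droppers cut and collectors carry."""
    # Droppers never leave the tree; gravity moves each leaf to the base.
    drop_rate = n_droppers / task.cut_time
    # Collectors shuttle between the leaf pile and the nest.
    collect_rate = n_collectors / (2 * task.ground_time + task.pickup_time)
    # In steady state the slower stage limits the whole pipeline.
    return min(drop_rate, collect_rate)


def best_partition(task: ToyForagingTask, n_robots: int) -> tuple[float, int]:
    """Best partitioned rate over all splits, plus the number of droppers used."""
    droppers = max(range(1, n_robots),
                   key=lambda d: partitioned_rate(task, d, n_robots - d))
    return partitioned_rate(task, droppers, n_robots - droppers), droppers


if __name__ == "__main__":
    tree = ToyForagingTask(cut_time=40, climb_time=30, ground_time=20, pickup_time=5)
    flat = ToyForagingTask(cut_time=40, climb_time=0, ground_time=20, pickup_time=5)
    for name, task in [("tree", tree), ("flat", flat)]:
        g = generalist_rate(task, 4)
        p, droppers = best_partition(task, 4)
        print(f"{name}: generalists {g:.4f}, partitioned {p:.4f} ({droppers} droppers)")
```

With these invented numbers and four robots, the tree case favors a two-dropper, two-collector split (about 0.044 versus 0.029 leaves per time unit), while on flat ground the generalists come out ahead (0.050 versus 0.044), because skipping the trunk is the only thing partitioning buys in this model.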

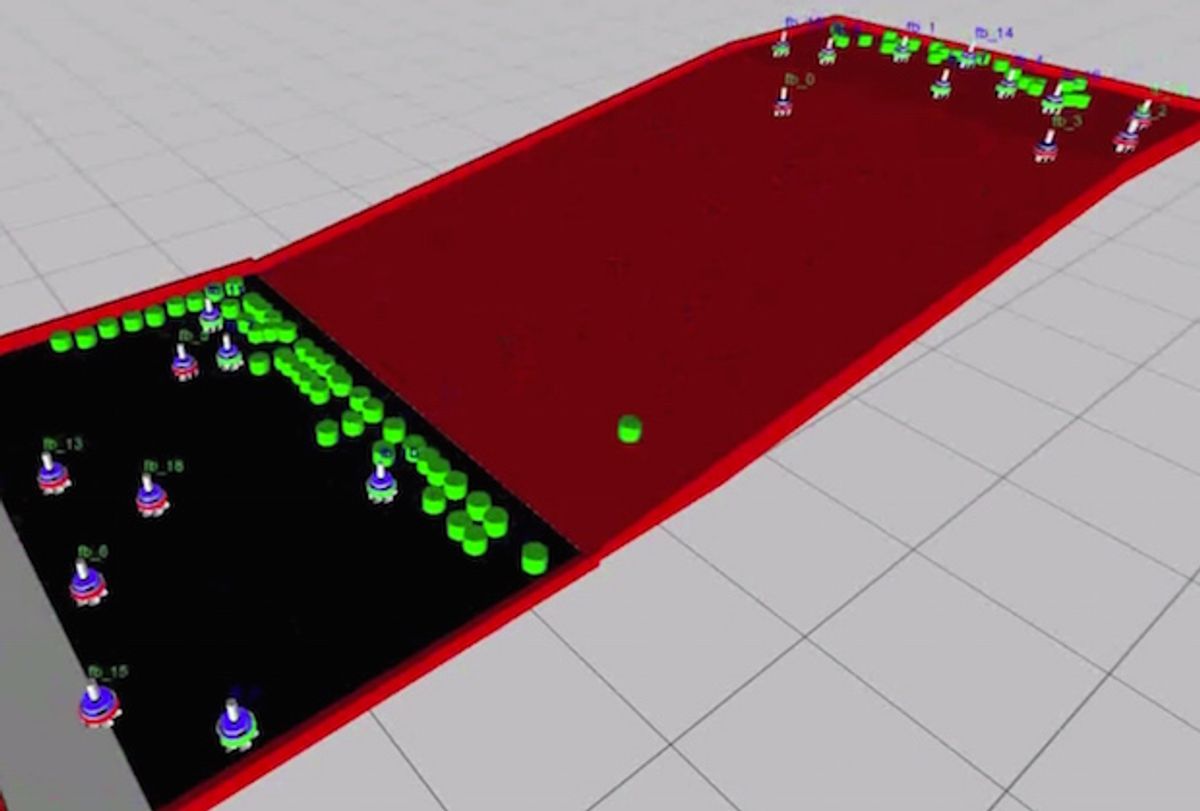

The researchers simulated the entire process with virtual robots based on footbots. The scenario included a nest (the goal), a tree (a source of virtual leaves), and a ramp with an eight-degree slope between the two to simulate a tree trunk. The slope was steep enough that the robots had to expend more time and energy going over it, and also steep enough that leaves placed at the top would slide down to the bottom by themselves. The simulation also provided a virtual sun for primitive navigation: each robot had a virtual light sensor that it could use to move either toward the light source or away from it. Finally, each virtual robot could avoid impending obstacles and sense, pick up, and drop virtual leaves.

For the evolutionary behavior itself, all the robots got was a set of very basic behavioral building blocks, including:

- Move toward light

- Move away from light

- Move randomly

- Pick up something

- Drop something
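One way to picture how building blocks like these become something evolution can work on is a reactive rule table: for each combination of a few sensor readings, the genome names one primitive to run. This is a hypothetical encoding for illustration only, not the paper’s controller representation; the `Percept` fields (notably the thresholded `bright` light reading) and the `random_genome` and `act` helpers are assumptions.

```python
import random
from enum import Enum
from itertools import product
from typing import NamedTuple


class Behavior(Enum):
    """The five building blocks listed above."""
    TOWARD_LIGHT = "move toward light"
    AWAY_FROM_LIGHT = "move away from light"
    RANDOM_WALK = "move randomly"
    PICK_UP = "pick up something"
    DROP = "drop something"


class Percept(NamedTuple):
    """Hypothetical sensor summary a robot reacts to."""
    carrying_leaf: bool  # is the robot holding a leaf?
    leaf_nearby: bool    # does the leaf sensor detect a leaf?
    bright: bool         # light reading above a hypothetical threshold


# All 2**3 = 8 possible percepts; a genome assigns one behavior to each.
ALL_PERCEPTS = [Percept(*bits) for bits in product((False, True), repeat=3)]
Genome = dict[Percept, Behavior]


def random_genome(rng: random.Random) -> Genome:
    """A randomly mixed-up genome, as in the first generation."""
    return {p: rng.choice(list(Behavior)) for p in ALL_PERCEPTS}


def act(genome: Genome, percept: Percept) -> Behavior:
    """Look up which primitive the robot runs in this situation."""
    return genome[percept]


if __name__ == "__main__":
    genome = random_genome(random.Random(42))
    for p in ALL_PERCEPTS:
        print(p, "->", act(genome, p).value)
```

In this sketch, identical robots share one table, so any division of labor would have to arise from robots encountering different situations rather than from following different instructions.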

These basic behaviors were initially mixed up randomly into “genes” and assigned to groups of four virtual robots. One hundred of these groups of robots were set loose in parallel on the virtual tree for 5,000 simulated seconds, and each group was evaluated three times. The evaluation was based on the total number of leaves that ended up in the nest area, and based on how both individuals and groups performed, the genes were crossbred and mutated to create a new generation of virtual robots. This process was repeated for 2,000 generations, and here’s how it went:
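The generational loop described above can be sketched as a plain genetic algorithm. This is a simplified stand-in, not the paper’s method: the population size, group size, and number of evaluations come from the article, but tournament selection, one-point crossover, elitism, the mutation rate, the genome length, and especially `toy_group_fitness` (a hypothetical placeholder for the 5,000-second physics simulation) are assumptions. It also selects on group performance only, whereas the article says both individual and group performance were used.

```python
import random

POP_SIZE = 100        # groups per generation (from the article)
GROUP_SIZE = 4        # identical robots per group (from the article)
N_EVALS = 3           # evaluations per group (from the article)
N_BEHAVIORS = 5       # the five building blocks
GENOME_LEN = 8        # hypothetical: one behavior per perceptual state
MUTATION_RATE = 0.05  # hypothetical
TOURNAMENT_SIZE = 3   # hypothetical

# Hypothetical "good" rule table; only the toy fitness below knows it.
_TARGET = [3, 1, 4, 0, 4, 1, 2, 0]


def toy_group_fitness(genome: list[int], rng: random.Random) -> float:
    """Placeholder for simulating GROUP_SIZE clones and counting leaves in the nest."""
    matches = sum(g == t for g, t in zip(genome, _TARGET))
    return GROUP_SIZE * matches + rng.random()  # noise mimics run-to-run variation


def fitness(genome: list[int], rng: random.Random) -> float:
    # Average repeated evaluations to smooth out simulation noise.
    return sum(toy_group_fitness(genome, rng) for _ in range(N_EVALS)) / N_EVALS


def tournament_select(pop, scores, rng):
    # Pick a few genomes at random and keep the fittest of them.
    contenders = rng.sample(range(len(pop)), TOURNAMENT_SIZE)
    return pop[max(contenders, key=lambda i: scores[i])]


def crossover(a, b, rng):
    cut = rng.randrange(1, GENOME_LEN)  # one-point crossover
    return a[:cut] + b[cut:]


def mutate(genome, rng):
    # Each gene is replaced by a random behavior with probability MUTATION_RATE.
    return [rng.randrange(N_BEHAVIORS) if rng.random() < MUTATION_RATE else g
            for g in genome]


def evolve(generations: int = 2000, seed: int = 0):
    """Return the final elite genome and the best score of each generation."""
    rng = random.Random(seed)
    pop = [[rng.randrange(N_BEHAVIORS) for _ in range(GENOME_LEN)]
           for _ in range(POP_SIZE)]
    history = []
    for _ in range(generations):
        scores = [fitness(g, rng) for g in pop]
        best = max(range(POP_SIZE), key=lambda i: scores[i])
        history.append(scores[best])
        next_pop = [pop[best]]  # elitism: keep the best genome unchanged
        while len(next_pop) < POP_SIZE:
            parent_a = tournament_select(pop, scores, rng)
            parent_b = tournament_select(pop, scores, rng)
            next_pop.append(mutate(crossover(parent_a, parent_b, rng), rng))
        pop = next_pop
    return pop[0], history


if __name__ == "__main__":
    best, history = evolve(generations=200)
    print(best, round(history[-1], 2))
```

In a real setup, `toy_group_fitness` would be replaced by a run of the robot simulator; the selection, crossover, and mutation loop around it could stay largely the same.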

For both large and small groups of robots, the most efficient strategy turned out to be task specialization, with half the robots picking leaves and dropping them at the top of the ramp, and the other half retrieving the leaves after they slide to the bottom of the ramp and carrying them to the nest. This, of course, is exactly what leafcutter ants do. What’s so fascinating about this research is that it shows how complex, organized division of labor can evolve from extremely simple behaviors, even when every robot starts with exactly the same set of behavioral genes. The simulated robots start out disorganized, evolve into generalists, and then gradually figure out that it’s more efficient to specialize in different subtasks.

Each 2,000-generation experiment was run 22 times, and while a few of the evolved controllers got stuck at a locally optimal generalist strategy, most rapidly converged on a degree of specialization averaging 92 percent, some of them within just a few hundred generations. The researchers note that the driving force behind this selection for specialization was the slope:
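The article doesn’t say how the 92 percent figure is computed, so the following is only one hypothetical way to score specialization from a simulation log: the share of delivered leaves that changed hands (dropped by one robot, collected by another), plus a per-robot tally of roles. `DeliveredLeaf`, `partitioning_fraction`, and `roles` are illustrative names, not the paper’s metric, and the example log is made up.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DeliveredLeaf:
    """One leaf that reached the nest, with the robots that carried it, in order."""
    leaf_id: int
    handlers: tuple[int, ...]


def partitioning_fraction(log: list[DeliveredLeaf]) -> float:
    """Share of delivered leaves handled by more than one robot."""
    if not log:
        return 0.0
    handed_off = sum(1 for leaf in log if len(set(leaf.handlers)) > 1)
    return handed_off / len(log)


def roles(log: list[DeliveredLeaf]) -> dict[int, Counter]:
    """Per robot, how often it acted as dropper, collector, or generalist."""
    counts: dict[int, Counter] = {}
    for leaf in log:
        if not leaf.handlers:
            continue  # skip malformed entries with no recorded handler
        if len(set(leaf.handlers)) == 1:
            counts.setdefault(leaf.handlers[0], Counter())["generalist"] += 1
        else:
            counts.setdefault(leaf.handlers[0], Counter())["dropper"] += 1
            counts.setdefault(leaf.handlers[-1], Counter())["collector"] += 1
    return counts


if __name__ == "__main__":
    # Made-up log: robots 1 and 2 mostly drop, robots 3 and 4 collect.
    log = [DeliveredLeaf(0, (1, 3)), DeliveredLeaf(1, (2, 4)),
           DeliveredLeaf(2, (1,)), DeliveredLeaf(3, (2, 3))]
    print(partitioning_fraction(log))  # 0.75
    print(roles(log))
```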

“In terms of the adaptive benefits of division of labor and the environmental conditions that select for it, our results demonstrated that task partitioning was favored only when features in the environment (in our case a slope) could be exploited to achieve more economic transport and reduce switching costs, thereby causing specialization to increase the net efficiency of the group.”

This agrees neatly with the behavior observed in leafcutter ants:

“In leafcutter ants, species that collect leaves from trees tend to engage in task partitioned leaf retrieval, whereas species living in more homogeneous grassland usually retrieve leaf fragments in an unpartitioned way, without first dropping the leaves, particularly at close range to the nest.”

However, it’s important to note that insects and their behaviors are far more complex than a bunch of virtual robots executing a few simple behaviors can capture. For example, ants within a single species often differ significantly in morphology, which makes some individuals better suited to certain tasks than others. And there’s genetic role specialization to consider, too. Future work could incorporate some of this using heterogeneous groups of robots, or even simulated morphologies that evolve over time.

This research is cool because it uses robots (albeit virtual ones) to illustrate how nature is capable of evolving specialized, complex cooperative behaviors. As far as the robots themselves are concerned, this project is even cooler because it suggests a way in which swarms of real robots could turn to simulations like this to adapt their behavior to new situations and tasks, in effect speeding up evolution to come up with better versions of themselves.

“Evolution of Self-Organized Task Specialization in Robot Swarms,” by Eliseo Ferrante, Ali Emre Turgut, Edgar Duéñez-Guzmán, Marco Dorigo, and Tom Wenseleers from KU Leuven, Middle East Technical University, and Université Libre de Bruxelles, was published earlier this month in PLOS Computational Biology.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.