Physicists love recreating the world in software. A simulation lets you explore many versions of reality to find patterns or to test possibilities. But if you want one that’s realistic down to individual atoms and electrons, you run out of computing juice pretty quickly.

Machine-learning models can approximate detailed simulations, but often require lots of expensive training data. A new method shows that physicists can lend their expertise to machine-learning algorithms, helping them train on a few small simulations consisting of a few atoms, then predict the behavior of systems with hundreds of atoms. In the future, similar techniques might even characterize microchips with billions of atoms, predicting failures before they occur.

The researchers started with simulated units of 16 silicon and germanium atoms, two elements often used to make microchips. They employed high-performance computers to calculate the quantum-mechanical interactions between the atoms’ electrons. Given a certain arrangement of atoms, the simulation generated unit-level characteristics such as its energy bands, the energy levels available to its electrons. But “you realize that there is a big gap between the toy models that we can study using a first-principles approach and realistic structures,” says Sanghamitra Neogi, a physicist at the University of Colorado, Boulder, and the paper’s senior author. Could she and her co-author, Artem Pimachev, bridge the gap using machine learning?

The idea, published in June, in npj Computational Materials, was to train machine-learning models to predict energy bands from 16-atom arrangements, then feed the models larger arrangements and see if they could predict their energy bands. “Essentially, we’re trying to poke this world of billions of atoms,” Neogi says. “That physics is completely unknown.”

A traditional model might require ten thousand training examples, Neogi says. But she and Pimachev thought they could do better. So they applied physics principles to generate the right training data.

First, they knew that strain changes energy bands, so they simulated 16-atom units with different amounts of strain, rather than wasting time generating a lot of simulations with the same strain.

Second, they spent a year finding a way to describe the atomic arrangements that would be useful for the model, a way to “fingerprint” the units. They decided to represent a unit as a set of 3D shapes with flat walls, one for each atom. Each shape’s walls were defined by points that were equidistant between the atom and its neighbors. (The shapes fit snugly together into what’s called a Voronoi tessellation.) “If you’re smart enough to create a good set of fingerprints,” Neogi says, “that eliminates the need of a large amount of data.” Their training sets consisted of no more than 357 examples.
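For readers who want to see the geometry, here is a minimal Python sketch of the Voronoi idea, not the researchers' fingerprinting code. It assumes atoms are given as plain (x, y, z) tuples in a rectangular box, ignores periodic boundaries, and uses hypothetical function names chosen for illustration. A point belongs to the cell of whichever atom is nearest, and each flat wall lies on the plane halfway between an atom and a neighbor.

```python
import math

def nearest_atom(point, atoms):
    """Index of the atom whose Voronoi cell contains `point` (the closest atom)."""
    return min(range(len(atoms)), key=lambda i: math.dist(point, atoms[i]))

def bisector_plane(atom, neighbor):
    """Plane of points equidistant from two atoms, as (midpoint, unit normal)."""
    midpoint = tuple((a + b) / 2 for a, b in zip(atom, neighbor))
    length = math.dist(atom, neighbor)
    normal = tuple((b - a) / length for a, b in zip(atom, neighbor))
    return midpoint, normal

def cell_volume_fractions(atoms, box, samples_per_axis=20):
    """Estimate each cell's share of the box volume by sampling a regular grid.

    Assumption: a non-periodic rectangular box with side lengths `box`.
    """
    n = samples_per_axis
    counts = [0] * len(atoms)
    for ix in range(n):
        for iy in range(n):
            for iz in range(n):
                point = (
                    (ix + 0.5) * box[0] / n,
                    (iy + 0.5) * box[1] / n,
                    (iz + 0.5) * box[2] / n,
                )
                counts[nearest_atom(point, atoms)] += 1
    return [count / n**3 for count in counts]

# Two atoms placed symmetrically in a unit box split it into equal cells.
example_atoms = [(0.25, 0.5, 0.5), (0.75, 0.5, 0.5)]
example_fractions = cell_volume_fractions(example_atoms, (1.0, 1.0, 1.0))
```

Quantities such as a cell's volume or the number and orientation of its walls are the kind of per-atom numbers a model could take as input, though the article does not say which ones the researchers actually used.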

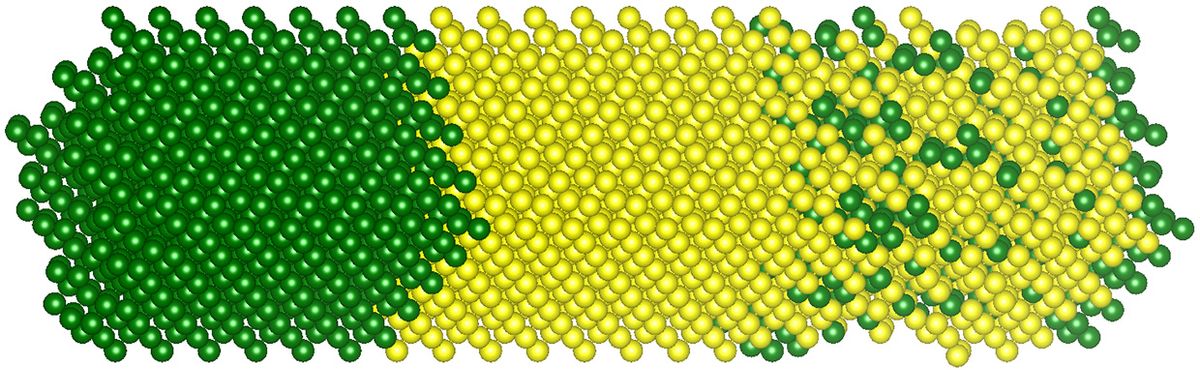

Neogi and Pimachev trained two different types of models—a neural network and a random forest, or set of decision trees—and tested them on three different types of structures, comparing their data with that from detailed simulations. The first structures were “ideal superlattices,” which might contain several atomic layers of pure silicon, followed by several layers of pure germanium, and so on. They tested these in strained and relaxed conditions. The second structures were “non-ideal heterostructures,” in which a given layer might vary in its thickness or contain defects. Third were “fabricated heterostructures,” which had sections of pure silicon and sections of silicon-germanium alloys. Test cases contained up to 544 atoms.

Across conditions, the predictions of the random forests differed from the simulation outputs by 3.7 percent to 19 percent, and the neural networks differed by 2.3 percent to 9.6 percent.
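The article does not say how these percentages were computed. One common way to express such a gap is the mean absolute percentage deviation between predicted and reference values, sketched below in Python. The function name and the sample numbers in the usage are hypothetical and are not data from the study.

```python
def mean_percent_deviation(predicted, reference):
    """Mean absolute deviation of predictions from reference values, in percent."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        raise ValueError("need at least one value to compare")
    total = sum(abs(p - r) / abs(r) for p, r in zip(predicted, reference))
    return 100 * total / len(reference)

# Hypothetical band energies (arbitrary units), for illustration only.
deviation = mean_percent_deviation([1.1, 2.0], [1.0, 2.0])
```

A metric of this kind divides by the reference value, so it is undefined wherever the reference is zero; other definitions would give different numbers for the same predictions.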

“We didn’t expect that we would be able to simulate such a large system,” Neogi says. “Five hundred atoms is a huge deal.” Further, even as the number of atoms in a system increased exponentially, the hours of computation the models required to make predictions scaled only linearly, which suggests that the world of billions of atoms is relatively reachable.

“I thought it was very clever,” Logan Ward, a computational scientist at Argonne National Laboratory, in Lemont, Illinois, says about the study. “The authors did a really neat job of mixing their understanding of the physics at different stages to get the machine learning models to work. I haven’t seen something quite like it before.”

In Neogi’s follow-up work, to be published in the coming months, her lab performed an inverse operation. Given a material’s energy bands, their system predicted its atomic arrangement. Such a system gets them closer to diagnosing faults in computer chips. If a semiconductor’s conductivity is off, they might point to the flaw.

The framework they present has applications to other kinds of materials, as well. Regarding the physics-informed approach to generating and representing training examples, Neogi says, “a little can tell us a lot if we know where to look.”

Matthew Hutson is a freelance writer who covers science and technology, with specialties in psychology and AI. He’s written for Science, Nature, Wired, The Atlantic, The New Yorker, and The Wall Street Journal. He’s a former editor at Psychology Today and is the author of The 7 Laws of Magical Thinking. Follow him on Twitter at @SilverJacket.