Particle physicists have long been early adopters—if not inventors—of tech from email to the Internet. It’s not surprising, then, that as early as 1997, researchers were training computer models to tag particles in the messy jets created during collisions. Since then, these models have chugged along, growing steadily more competent—though not to everyone’s delight.

“I felt very threatened by machine learning,” says Jesse Thaler, a theoretical particle physicist at the Massachusetts Institute of Technology. At first, he says, it seemed to jeopardize his own expertise in classifying particle jets. But Thaler has since come to embrace it, applying machine learning to a variety of problems across particle physics. “Machine learning is a collaborator,” he says.

Over the past decade, in tandem with the broader deep-learning revolution, particle physicists have trained algorithms to solve previously intractable problems and tackle completely new challenges.

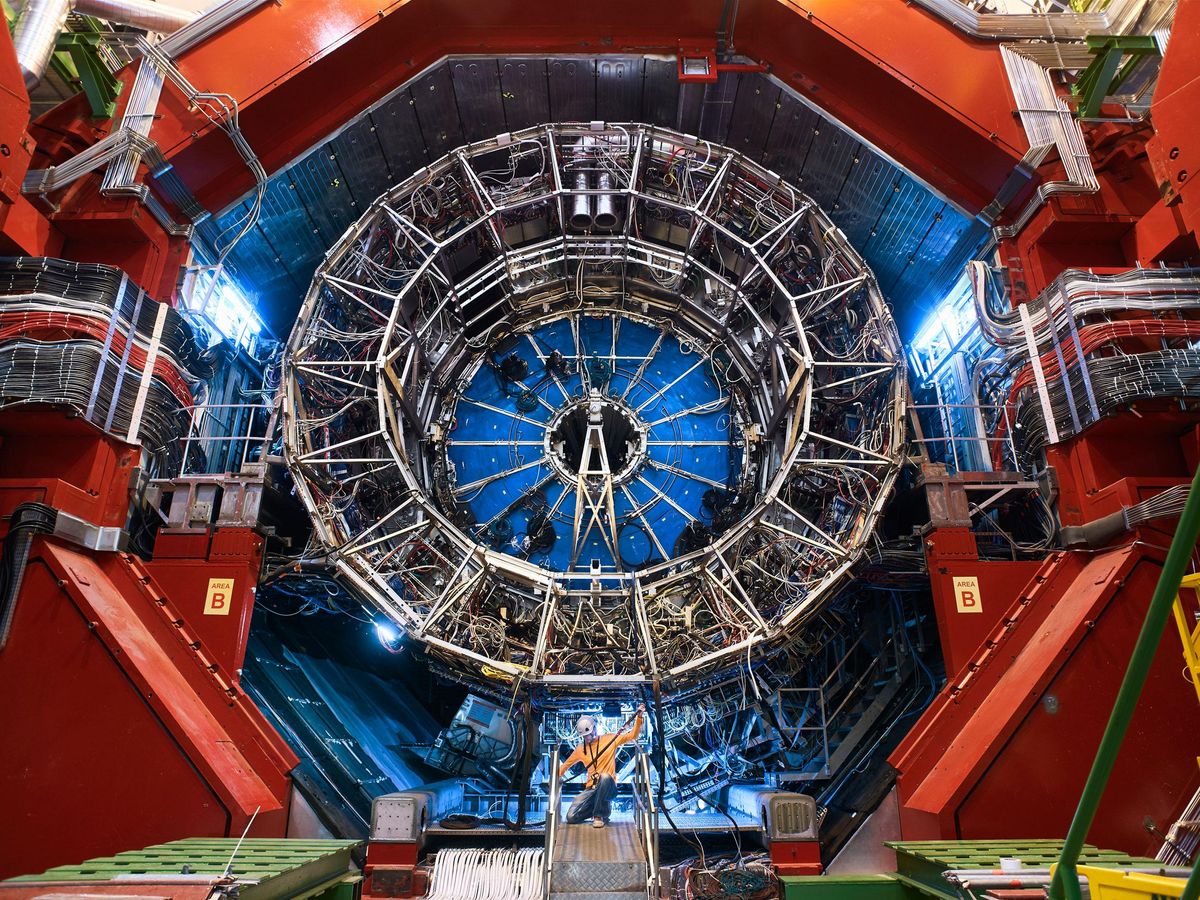

For starters, particle-physics data is very different from the data typically used in machine learning. Though convolutional neural networks (CNNs) have proven extremely effective at classifying images of everyday objects, from trees to cats to food, they’re less suited to particle collisions. The problem, according to Javier Duarte, a particle physicist at the University of California, San Diego, is that collision data, such as that from the Large Hadron Collider (LHC), isn’t naturally an image.

Flashy depictions of LHC collisions can misleadingly show the entire detector lit up. In reality, only a few of the detector’s millions of inputs register a signal, like a white screen with a few black pixels. Such sparsely populated data makes for a poor image, but it can work well in a different, newer framework: graph neural networks (GNNs).
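
To make the contrast concrete, here is a minimal sketch of the two representations, under loudly stated assumptions: each hit is a hypothetical `(eta, phi, energy)` tuple, the grid size and coordinate ranges are invented for illustration, and the graph is built by simply linking each hit to its nearest neighbours (one common choice, not necessarily what any LHC group uses). It shows only the input data, not a neural network.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy hit: (eta, phi, energy). Real detector readouts carry far more.
Hit = Tuple[float, float, float]


def dense_image(hits: List[Hit], n_eta: int, n_phi: int) -> List[List[float]]:
    """Bin hits into a dense grid, the input format a CNN expects.

    Assumes eta in [-2.5, 2.5) and phi in [-pi, pi) for this toy example.
    """
    image = [[0.0] * n_phi for _ in range(n_eta)]
    for eta, phi, energy in hits:
        i = min(int((eta + 2.5) / 5.0 * n_eta), n_eta - 1)
        j = min(int((phi + math.pi) / (2 * math.pi) * n_phi), n_phi - 1)
        image[i][j] += energy
    return image


def knn_graph(hits: List[Hit], k: int) -> Dict[int, List[int]]:
    """Link each hit to its k nearest neighbours in (eta, phi), a GNN-style input.

    Only hits become nodes, so the graph grows with the number of signals
    rather than with the number of detector cells. For simplicity this
    ignores the wrap-around of phi at +/- pi.
    """
    edges: Dict[int, List[int]] = {}
    for a, (eta_a, phi_a, _) in enumerate(hits):
        dists = sorted(
            (math.hypot(eta_a - eta_b, phi_a - phi_b), b)
            for b, (eta_b, phi_b, _) in enumerate(hits)
            if b != a
        )
        edges[a] = [b for _, b in dists[:k]]
    return edges


# Four hits in a 100 x 100 grid: 10,000 pixels, almost all of them zero.
hits = [(0.11, 0.2, 5.0), (0.17, 0.26, 3.0), (-1.23, 2.03, 8.0), (-1.13, 2.11, 1.5)]
image = dense_image(hits, 100, 100)
nonzero = sum(1 for row in image for value in row if value > 0)
graph = knn_graph(hits, k=1)
print(f"{nonzero} of {100 * 100} pixels are non-zero")
print("nearest-neighbour edges:", graph)
```

The image spends 10,000 numbers to record four signals, while the graph stores four nodes and a handful of edges; that difference in bookkeeping is the intuition behind preferring graphs for sparse detector data.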

Other challenges in particle physics demand genuinely new methods. “We’re not just importing hammers to hit our nails,” says Daniel Whiteson, a particle physicist at the University of California, Irvine. “We have new weird kinds of nails that require the invention of new hammers.” One weird nail is the sheer volume of data produced at the LHC: about one petabyte per second. Only a small fraction of it, the highest-quality data, is saved. To build a better trigger, the system that saves as much good data as possible while discarding the rest, researchers want to train a sharp-eyed algorithm that sorts events better than a hard-coded one.
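
One way to picture the trigger’s job is as a scoring problem: give every event a score, then keep only those above a cut chosen so that the saved fraction fits the storage budget. In the sketch below, a hard-coded rule and a learned model differ only in the scoring function. Everything here is an assumption for illustration: the two-feature events, the linear “model” and its weights are hypothetical, and real LHC triggers run on dedicated hardware under microsecond deadlines that plain Python does not reflect.

```python
from typing import Callable, List, Sequence

# A toy "event" is just a feature vector; the features are hypothetical.
Event = Sequence[float]
ScoreFn = Callable[[Event], float]


def hard_coded_score(event: Event) -> float:
    """A fixed, hand-written rule: rank events by their first feature only."""
    return event[0]


def make_linear_score(weights: Sequence[float], bias: float = 0.0) -> ScoreFn:
    """Stand-in for a trained model; in practice the weights come from training."""
    def score(event: Event) -> float:
        return bias + sum(w * x for w, x in zip(weights, event))
    return score


def threshold_for_budget(scores: List[float], keep_fraction: float) -> float:
    """Choose the score cut so that about keep_fraction of events pass."""
    ranked = sorted(scores, reverse=True)
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[n_keep - 1]


def trigger(events: List[Event], score_fn: ScoreFn, keep_fraction: float) -> List[int]:
    """Return indices of kept events. Ties at the cut can push slightly over budget."""
    scores = [score_fn(e) for e in events]
    cut = threshold_for_budget(scores, keep_fraction)
    return [i for i, s in enumerate(scores) if s >= cut]


# Ten toy events with two features each; keep the top 20 percent.
events = [(float(i), float(10 - i)) for i in range(10)]
kept_by_rule = trigger(events, hard_coded_score, keep_fraction=0.2)
kept_by_model = trigger(events, make_linear_score([0.0, 1.0]), keep_fraction=0.2)
print(kept_by_rule, kept_by_model)
```

Both versions save the same number of events; they disagree about which ones. Whether a trained scorer picks the more interesting events depends entirely on how well it was trained, which this sketch does not attempt.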

But to be effective, such an algorithm would need to be incredibly fast, executing in microseconds, Duarte says. To get there, particle physicists are pushing the limits of machine-learning techniques such as pruning and quantization, which shrink models so they run faster. Even with an efficient trigger, the LHC must store 600 petabytes over the next few years of data collection (roughly the size of 660,000 movies at 4K resolution, or 30 Libraries of Congress), so researchers are also investigating ways to compress the data.
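
Pruning and quantization both make a model cheaper to run. Pruning removes weights that contribute little; quantization stores the remaining weights as small integers instead of full-precision floats. The sketch below shows generic textbook versions of each (magnitude pruning and symmetric 8-bit quantization) on a hypothetical list of layer weights; it is an illustration under those assumptions, not the specific scheme any LHC group deploys.

```python
from typing import List, Tuple


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the smallest |w| fraction of weights."""
    n_prune = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in smallest_first[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_symmetric(weights: List[float], bits: int) -> Tuple[List[int], float]:
    """Symmetric uniform quantization: floats -> signed ints sharing one scale."""
    q_max = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / q_max
    q = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


# Hypothetical weights of one small layer.
weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.45, -0.2, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)  # half the weights become 0.0
q, scale = quantize_symmetric(pruned, bits=8)
restored = dequantize(q, scale)
print("pruned:    ", pruned)
print("int8 codes:", q)
print("max error: ", max(abs(a - b) for a, b in zip(pruned, restored)))
```

Zeroed weights can be skipped entirely, and 8-bit integer arithmetic is far cheaper than floating point; the price is a rounding error of at most half a quantization step per weight, which is why such models are usually checked (and often retrained) for accuracy afterward.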

Machine learning is also allowing particle physicists to think differently about the data they use. Instead of focusing on a single event (say, a Higgs boson decaying to two photons), they are learning to consider the dozens of other events that happen during the same collision. Although no two of these events are causally related, researchers like Thaler are now embracing a more holistic view of the data, rather than the piecemeal view that comes from analyzing events one interaction at a time.

More dramatically, machine learning has also forced physicists to reassess basic concepts. “I was imprecise in my own thinking about what a symmetry was,” Thaler says. “Forcing myself to teach a computer what a symmetry was helped me understand what a symmetry actually is.” Symmetries require a reference frame. Is the image of a distorted sphere in a mirror actually symmetrical? There’s no way to tell without knowing whether the mirror itself is distorted.
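
Telling a computer what a symmetry is means spelling out two things explicitly: the transformation, and the quantity that should not change under it. The minimal sketch below does that for one familiar case, rotating a collision event about the beam axis, using hypothetical toy particles given as transverse momenta `(px, py)`. The function names are invented for illustration, and this is not a description of Thaler’s own approach. It also echoes the reference-frame point: the summed `px` depends on where the x axis was drawn, so it fails the test, while the total transverse momentum passes.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Toy particles as (px, py): momentum in the plane transverse to the beam.
Particle = Tuple[float, float]
Transform = Callable[[List[Particle], float], List[Particle]]


def rotate_about_beam(particles: List[Particle], angle: float) -> List[Particle]:
    """Rotate every momentum by the same azimuthal angle around the beam axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * px - s * py, s * px + c * py) for px, py in particles]


def total_pt(particles: List[Particle]) -> float:
    """Magnitude of the summed transverse momentum."""
    return math.hypot(sum(px for px, _ in particles), sum(py for _, py in particles))


def total_px(particles: List[Particle]) -> float:
    """Summed x-component only: depends on where the x axis was drawn."""
    return sum(px for px, _ in particles)


def is_symmetric(quantity: Callable[[List[Particle]], float],
                 particles: List[Particle], transform: Transform,
                 params: Sequence[float], tol: float = 1e-9) -> bool:
    """True if quantity is unchanged (within tol) under every tested transform."""
    reference = quantity(particles)
    return all(abs(quantity(transform(particles, p)) - reference) <= tol
               for p in params)


jet = [(10.0, 2.0), (3.0, -1.0), (0.5, 0.5)]
angles = [0.3, 1.0, 2.5]
print("total pT invariant:", is_symmetric(total_pt, jet, rotate_about_beam, angles))
print("total px invariant:", is_symmetric(total_px, jet, rotate_about_beam, angles))
```

Testing a handful of angles is only a spot check, not a proof of invariance; the point is that the check cannot even be written down until the transformation and the frame it acts in are made explicit.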

These are still early days for machine learning in particle physics, and researchers are effectively trying it on everything, the proverbial kitchen sink included. “It may not be the right fit for every single problem in particle physics,” admits Duarte.

As some particle physicists delve deeper into machine learning, an uncomfortable question rears its head: Are they doing physics, or computer science? Stigma against coding—sometimes not considered to be “real physics”—already exists; similar concerns swirl around machine learning. One worry is that machine learning will obscure the physics, turning analysis into a black box of automated processes opaque to human understanding.

“Our goal is not to plug in the machine, the experiment to the network and have it publish our papers so we’re out of the loop,” Whiteson says. He and colleagues are working to have the algorithms provide feedback in language humans can understand—but algorithms may not be the only ones with responsibilities to communicate.

“On the one hand, we’d like to have a machine learn to think more like a physicist, [but] we also just need to learn how to think a little bit more like a machine,” Thaler says. “We need to learn to speak each other’s language.”

This article appears in the December 2022 print issue as “Machine Learning Rethinks Scientific Thinking.”

Dan Garisto is a freelance science journalist who covers physics and other physical sciences. His work has appeared in Scientific American, Physics, Symmetry, Undark, and other outlets.