Most devices today are still in the stone age of haptics: they can intelligently vibrate to communicate different things to the user, but that's about it. While effective, this basic system is very one-dimensional, in that the entire phone vibrates instead of just the key that you're pressing. The next generation of haptics promises to make the tactile experience much more nuanced and useful, both on our devices and in the air above them.

Earlier this week, Disney Research presented a new algorithm that's able to translate 3D information in an image or video directly into tactile sensations on a special haptic display. The display itself stays perfectly smooth (unlike, say, a Tactus keyboard), and instead modulates the friction at your fingertips to trick you into feeling like there's texture under them:

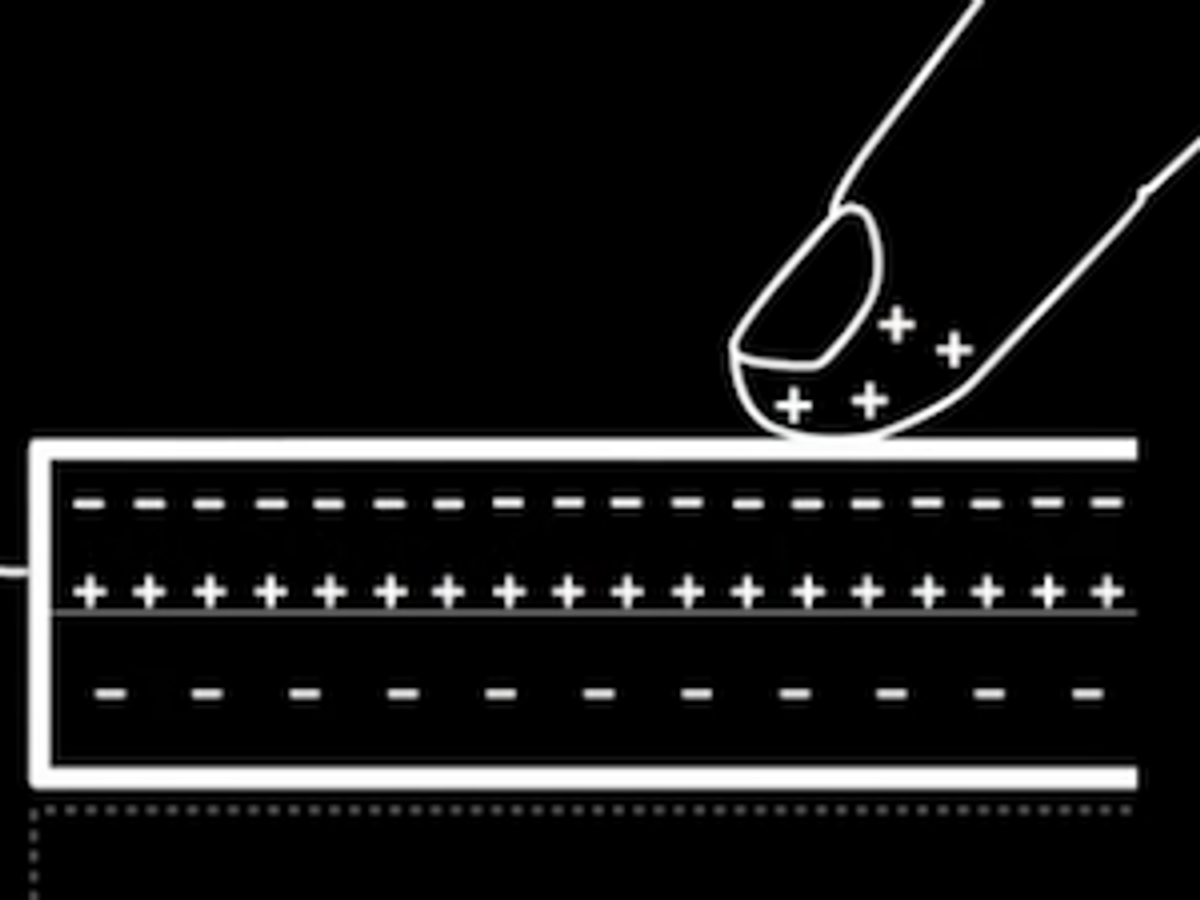
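To make the idea of turning 3D information into friction a bit more concrete, here is a minimal sketch in Python. It is not Disney's algorithm. It assumes, purely for illustration, that the 3D information arrives as a depth map (a grid of heights) and that friction should rise with the slope the finger is climbing. The function name `friction_from_depth` and the `max_slope` parameter are hypothetical.

```python
import math


def friction_from_depth(depth, x, y, dx, dy, max_slope=1.0):
    """Return a friction level in [0, 1] for a finger at column x, row y
    of a depth map, sliding in direction (dx, dy).

    Illustrative assumption: friction grows with the uphill slope along
    the direction of motion, and flat or downhill motion adds none.
    """
    rows, cols = len(depth), len(depth[0])

    # Neighbouring cells, clamped so we never read outside the grid.
    x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
    y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)

    # Finite-difference estimate of the height gradient at (x, y).
    gx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
    gy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)

    speed = math.hypot(dx, dy)
    if speed == 0:
        return 0.0  # a resting finger feels no added friction here

    # Slope along the direction of motion (directional derivative).
    slope = (gx * dx + gy * dy) / speed

    # Keep only uphill motion and scale it into the range [0, 1].
    return min(max(slope, 0.0) / max_slope, 1.0)


# A ramp that rises by 0.5 per cell from left to right.
ramp = [[0.5 * col for col in range(5)] for _ in range(5)]
print(friction_from_depth(ramp, 2, 2, 1, 0))   # sliding up the ramp
print(friction_from_depth(ramp, 2, 2, -1, 0))  # sliding back down
```

On real hardware the returned value would have to be mapped to a drive signal for the display, and that mapping depends on the panel, so it is left out here.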

The display creates the illusion of friction using an earlier Disney Research technology called TeslaTouch, which uses oscillating electric charges to dynamically adjust the friction between your finger and the touch panel.

(Simulating friction by changing voltage seems a bit more practical than Microsoft's approach of mounting the entire display on a robotic arm.)

The combination of the TeslaTouch hardware and this new algorithm "will make it possible to render rich tactile information over visual content," leading to "new applications for tactile displays," according to Ivan Poupyrev, director of Disney Research Pittsburgh's Interaction Group. That's exciting, although we're still waiting for tactile displays with any applications at all to become part of our standard tech arsenal.

But what about haptic feedback that goes beyond a screen? Also announced this week was a new type of haptic feedback that does away with tactile displays entirely and brings touch interaction into the air. It's called UltraHaptics, from the University of Bristol's Interaction and Graphics research group, and it's like nothing you've ever felt before.

To make this work, a transducer array projects carefully calculated waves of ultrasonic sound into the air. You can't see, hear, or feel the waves themselves. At certain points, however, they come into focus and intensify substantially, displacing the air at those points and creating a pressure difference that you can feel. The system can create multiple pressure points in different locations at the same time, and can even endow individual points with distinct tactile properties. A similar technique has been used by AIST to create true 3D displays, using focused lasers to plasmify the air itself.
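The focusing step can be sketched with standard phased-array math: give each transducer a phase offset that cancels its travel time to the target, so every wave arrives at that point in step. The Python below is a rough illustration under stated assumptions, not the UltraHaptics implementation. The 40 kHz frequency, the 16 x 16 grid, the 1 cm spacing, and all function names are our own choices, and the model ignores how sound weakens with distance.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly room temperature
FREQUENCY = 40_000.0    # Hz; an assumed ultrasonic drive frequency
WAVENUMBER = 2 * math.pi * FREQUENCY / SPEED_OF_SOUND  # radians per metre


def make_grid(n, pitch):
    """Positions of an n x n transducer grid in the z = 0 plane,
    centred on the origin, with `pitch` metres between neighbours."""
    offset = (n - 1) * pitch / 2
    return [(i * pitch - offset, j * pitch - offset, 0.0)
            for i in range(n) for j in range(n)]


def focus_phases(transducers, focal_point):
    """Phase offset (radians) for each transducer so that all waves
    arrive at focal_point in phase."""
    return [(WAVENUMBER * math.dist(t, focal_point)) % (2 * math.pi)
            for t in transducers]


def relative_pressure(transducers, phases, point):
    """Magnitude of the summed waves at `point`, divided by the number
    of transducers: 1.0 means perfectly in step. Simplified model that
    ignores amplitude falloff with distance."""
    total = sum(cmath.exp(1j * (phase - WAVENUMBER * math.dist(t, point)))
                for t, phase in zip(transducers, phases))
    return abs(total) / len(transducers)


array = make_grid(16, 0.01)      # assumed 16 x 16 grid, 1 cm spacing
focus = (0.0, 0.0, 0.2)          # a point 20 cm above the array centre
phases = focus_phases(array, focus)

print(relative_pressure(array, phases, focus))            # at the focus
print(relative_pressure(array, phases, (0.05, 0.0, 0.2))) # 5 cm to the side
```

Comparing the two printed values shows the effect the paragraph describes: the waves reinforce each other at the chosen point and largely cancel a few centimetres away. Creating several focal points at once, as UltraHaptics does, takes a more involved calculation than this single-focus sketch.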

UltraHaptics is potentially quite relevant at the moment considering how many mid-air gesture-based computer interaction technologies are becoming available to consumers. The most obvious one might be Microsoft's Kinect sensor, but we've also got things like Edge3 and Leap Motion showing up in peripherals and laptops from HP and Asus, among others. And soon, all you'll need is a webcam. Apple is even rumored to be working on 3D gesture control for iPads, and it makes a lot of sense for cellphones as well, where touchscreen real estate is limited.

If the UltraHaptics system can somehow shrink that transducer array into a form factor that can fit either on a desk or (in our fantasy world) inside a phone, it could lead to all sorts of fantastic new applications: we're picturing a full-size, visible, tactile laser plasma keyboard that projects itself out of your phone and into mid-air whenever you need it. It sounds crazy, but all of the technology exists right now. It just needs to get small enough (and cheap enough) to make it into our hands.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.