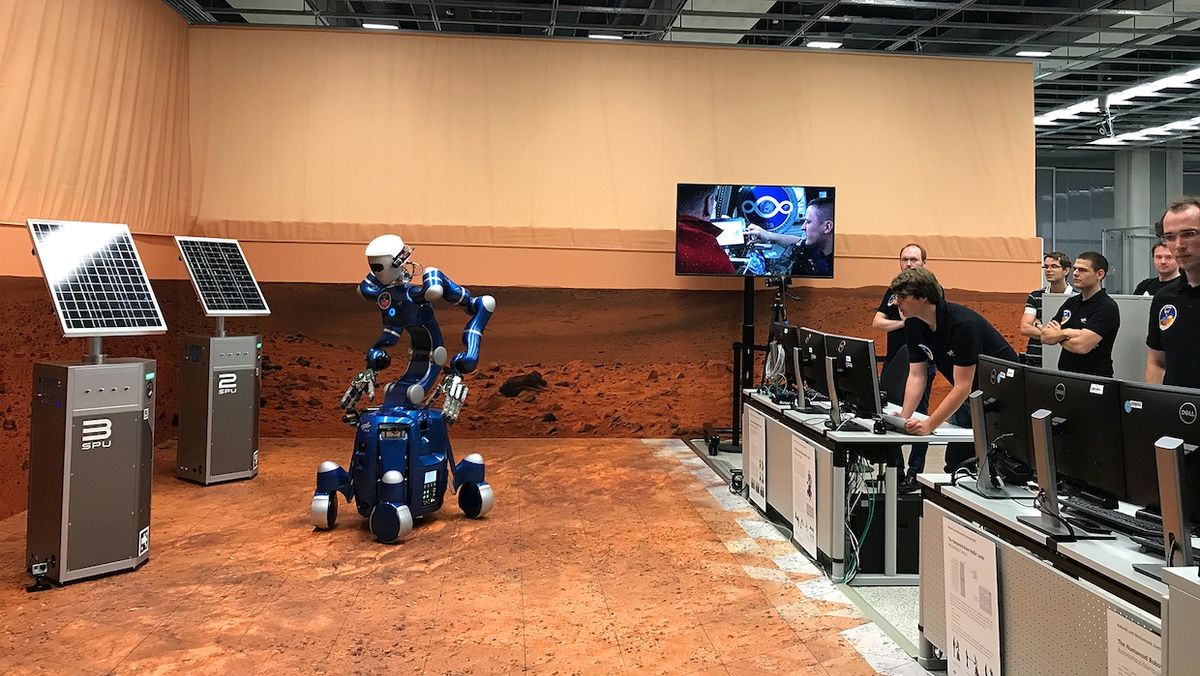

In late August, an astronaut on board the International Space Station remotely operated a humanoid robot to inspect and repair a solar farm on Mars—or at least a simulated Mars environment, which in this case is a room with rust-colored floors, walls, and curtains at the German Aerospace Center, or DLR, in Oberpfaffenhofen, near Munich.

European Space Agency astronaut Paolo Nespoli commanded the humanoid, called Rollin’ Justin, as the robot performed a series of navigation, maintenance, and repair tasks. Instead of relying on direct teleoperation, Nespoli used a tablet computer to issue high-level commands to the robot. In one task, he used the tablet to position the robot and have it take pictures from different angles. Another command instructed Justin to grasp a cable and connect it to a data port.

Roboticists call this approach “supervised autonomy,” and it offers a number of advantages over either full autonomy (in which the robot attempts to do everything on its own) or direct teleoperation (in which the astronaut needs to control every movement of the robot). Supervised autonomy is a robust way of handling unexpected errors and limitations like communication delays. The astronaut acts as a supervisor of the robot, and if the robot gets stuck, the human can help it complete the task.

“This concept relies on the robot’s local intelligence to reason and plan the commanded tasks,” says Dr. Neal Y. Lii, the experiment’s principal investigator at DLR’s Robotics and Mechatronics Center. He explains that this allows the operator to carry out tasks without the cognitive strain and pressure of an immersive telepresence system with haptic feedback and visual servo control. “The robot’s intelligence always keeps the robot in a safe state so that it can wait for the feedback and command from the astronaut.”
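To make the idea concrete, here is a minimal Python sketch of a supervised-autonomy loop. It is purely illustrative and is not DLR's software: the `Robot` class, the command names, the step names, and the `failing_steps` parameter are all hypothetical. The operator issues one high-level command, the robot expands it into low-level steps using its own task library, and if a step fails the robot returns to a safe waiting state until the operator sends another command.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    SAFE_WAITING = auto()  # idle in a safe state, waiting for the operator
    EXECUTING = auto()     # carrying out a locally planned task


@dataclass
class Robot:
    # Hypothetical task library: high-level command -> low-level steps.
    plans: dict = field(default_factory=lambda: {
        "inspect_panel": ["navigate_to_panel", "take_picture"],
        "connect_cable": ["grasp_cable", "align_plug", "insert_plug"],
    })
    state: State = State.SAFE_WAITING
    log: list = field(default_factory=list)

    def execute(self, command, failing_steps=()):
        """Plan and run one high-level command; return True on success.

        failing_steps simulates steps that go wrong (an assumption made
        only so the example can show the recovery path).
        """
        steps = self.plans.get(command)
        if steps is None:
            self.log.append(f"unknown command: {command}")
            return False
        self.state = State.EXECUTING
        for step in steps:
            if step in failing_steps:
                # Stuck: stop, fall back to a safe state, wait for the human.
                self.log.append(f"stuck at {step}")
                self.state = State.SAFE_WAITING
                return False
            self.log.append(f"done {step}")
        self.state = State.SAFE_WAITING
        return True


robot = Robot()
# First attempt fails partway; the robot parks itself safely.
ok = robot.execute("connect_cable", failing_steps={"align_plug"})
# The operator reviews the situation and simply reissues the command.
retry = robot.execute("connect_cable")
```

The operator never specifies individual motions here. The only inputs are the command name and the decision to retry, which is why this style of control tolerates communication delays better than direct teleoperation.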

The experiment, called SUPVIS Justin, was led by DLR in partnership with ESA. It is part of a broader program, the Multi-Purpose End-To-End Robotic Operation Network, or METERON project. Initiated by ESA with DLR, NASA, and Roscosmos, METERON consists of a series of space telerobotics experiments. The goal is to develop advanced human-robot collaboration capabilities to help with future planetary exploration missions.

The idea is that, if humans want to go to Mars, they’ll need to build and maintain habitats and other infrastructure on the surface of the planet, and robots could be a huge help. Before landing, astronauts would remain in orbit and send robots to the surface to work on the needed infrastructure. ESA and NASA both appear to believe that this is the only realistic option to bring humans to Mars. Hence the mock Martian solar farm at DLR.

In the experiment in August, Italian astronaut Nespoli performed two sequences of tasks, or protocols. In the first, he used the robot to perform a solar panel unit inspection and reboot mission. In the second protocol, he performed a system software upgrade mission.

Nespoli went through the two protocols so efficiently that, with some time left, he offered a surprise to the DLR team on the ground: He invited his American colleagues Jack Fischer and Randy Bresnik to try the experiment as well. Although they had never trained to use the tablet interface, the two NASA astronauts, with assistance from Nespoli and the team on the ground, were able to complete some of the tasks without difficulty.

Dr. Lii says two more SUPVIS Justin sessions are scheduled. For the next experiment, his team will make improvements to the tablet’s user interface as well as the robot’s functionalities based on the experience of the ISS crew. “At the same time, we will increase the task complexity and difficulty with each successive experiment session to get a view into the performance envelope we can expect from the astronauts,” he says. In the future, they also plan to test telepresence systems that offer a more immersive experience, because such systems might be necessary during certain missions.

“This allows the user to move between using the robot as a coworker in supervised autonomy form, or as a haptically coupled physical extension on location,” Dr. Lii says. “Ultimately, our hope is to assemble a benchmark/guideline/playbook for designing intuitive space robotic teleoperation systems.”

Erico Guizzo is the director of digital innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.