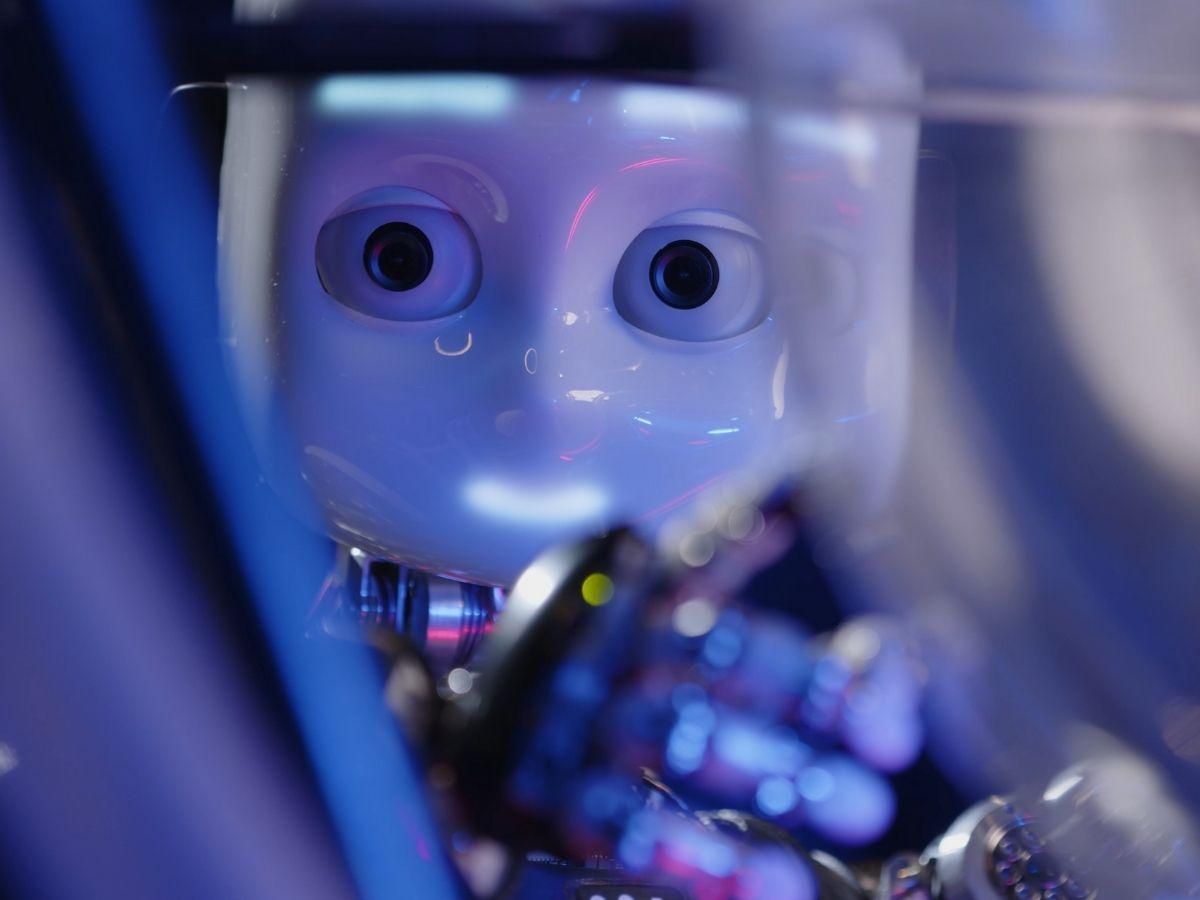

It’s a little weird to think that iCub, the first version of which was released in 2008, is now a full-on teenager. After all, the robot was originally modeled on a three-year-old child, and it hasn’t grown all that much. But as it turns out, iCub has grown, at least a little bit, with the brand new iCub3, a bigger, heavier, stronger upgrade that can also be used as a sophisticated immersive telepresence platform.

You might not notice the difference between the old and new iCub unless they’re standing side by side, but iCub3 is 25 cm taller and weighs 19 kg more, with more powerful legs and rearranged actuators in the legs, torso, and shoulders. The head is the same, but the neck is a bit longer to make the robot more proportional overall. In total, iCub3 is 1.25 m tall and weighs 52 kg, with 54 degrees of freedom.

Part of the reason for these upgrades is to turn iCub3 into an avatar platform, that is, a humanoid robot that can embody a remote humanoid of the more biological type. This is something that researchers at the Italian Institute of Technology (IIT) led by Daniele Pucci have been working on for quite a while, but this is by far the most complete (and well-filmed) demonstration that we’ve seen:

Now, a couple of those shots right at the beginning look more like drone videos than an actual representation of the user experience, or at least, it won’t be accurate until iCub can fly, which they’re working on, of course. It’s also, honestly, kind of hard to tell what the user experience actually is just based on this very very fancy video, and very very fancy videos rarely inspire confidence about the capabilities of a robotic system. Fortunately, the researchers have published a paper on using iCub3 as an avatar which includes plenty of detail.

This particular demo took place over a distance of almost 300 km (Genova to Venice), which matters in the sense that it’s not over infrastructure that is completely under the researchers’ control. Getting it to work requires (as you can see from the video) a heck of a lot of equipment, including:

- HTC Vive Pro Eye headset

- VIVE Facial Tracker

- iFeel sensorized and haptic suit

- SenseGlove DK1 haptic glove

- Cyberith Virtualizer Elite 2 omnidirectional treadmill

Put together, all of this stuff allows for manipulation, locomotion, voice, and even facial expressions to be retargeted from the human to the robot. The facial expression retargeting is a new one for me, and it applies to eye gaze and eyelid state as well as to the user’s mouth.

Coming back the other direction, from robot to human, are audio, an immersive first-person view, and a moderate amount of haptic feedback. iCub3 has sensorized skin with fairly high resolution, but that gets translated to just a couple of specific vibration nodes worn by the user. The haptic feedback at the hands is not bad: there’s force feedback on each finger, plus fingertip vibration motors. Not quite at the level we’ve experienced with HaptX, but certainly functional.

The application here is a remote visit to the Italian Pavilion at the 17th International Architecture Exhibition, which certainly seems to be a very remote-visit-worthy space. But a more practical test will be taking place soon, as iCub3 takes part in the final round of the ANA Avatar XPRIZE competition, which “aims to create an avatar system that can transport human presence to a remote location in real time.”

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.