If your robotics lab has a quadruped, it’s become almost a requirement that you post a video of the robot not falling over when walking across some kind of particularly challenging surface. And quadrupeds are getting quite good at keeping their feet, even while negotiating uneven terrain like steps or rubble. One way to do this is without any visual perception at all, simply reacting to obstacles “blindly” by positioning legs and feet to keep the body of the robot upright and moving in the right direction. This can work for terrain that’s continuous, but when you start looking at more dangerous situations like gaps that a robot’s leg could get stuck in, being able to use vision to plan a safe path becomes necessary.

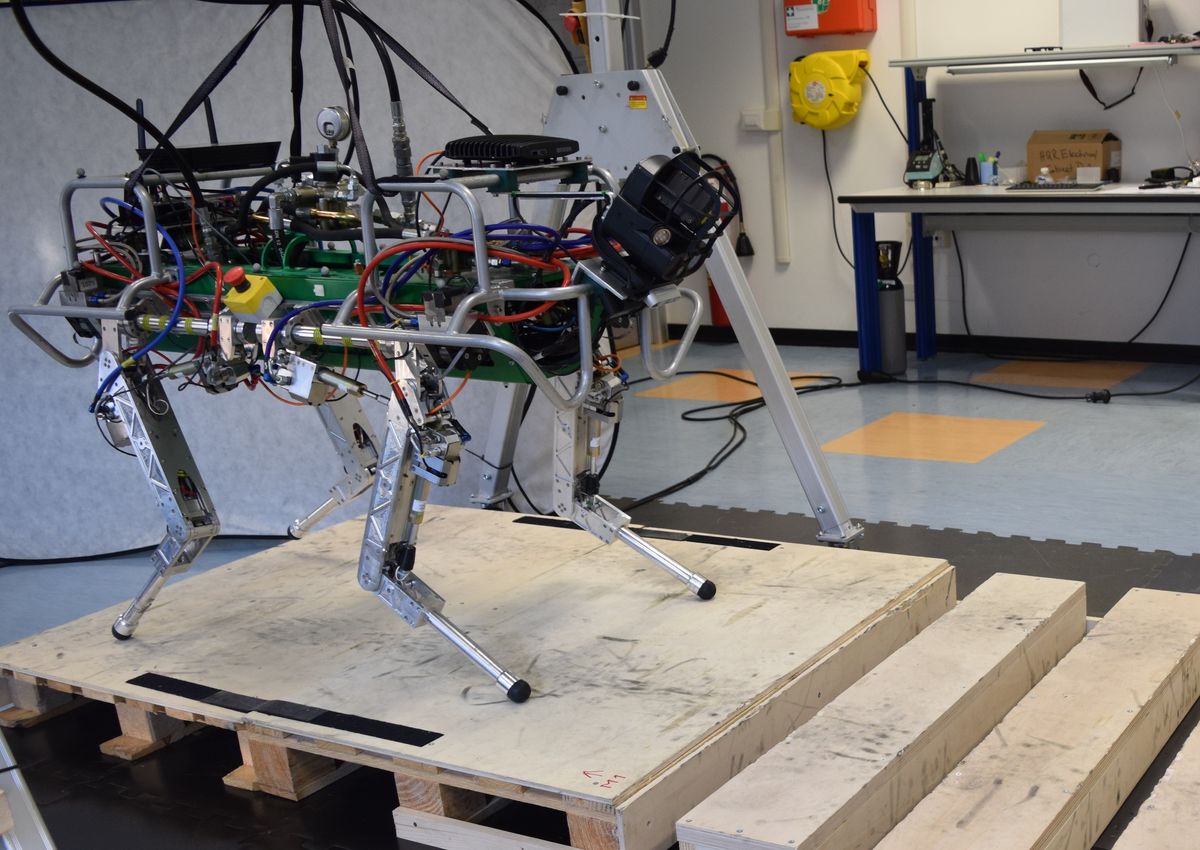

Vision, though, is a real bag of worms, kettle of fish, bushel of geese, or whatever your own favorite tricky metaphor is. Adapting foot placement based on visual feedback takes both reliable sensing and the processing power to back it up, and even under the best of circumstances, there’s only so much that an onboard system can handle. At the Italian Institute of Technology (IIT), roboticists have used a convolutional neural network to cut the time it takes the HyQ quadruped to plan its foot placement by more than two orders of magnitude. The robot can now make dynamic adaptations, which lets it withstand an extra helping of abuse from its human programmers.

When HyQ is being yanked around in the video above, it’s demonstrating that it can adjust where it places its feet even after it has started a step. Most robots plan their steps by saying, “I’m going to put my foot in that spot over there, ready, go!” This works just fine, unless something happens between the moment the robot lifts its foot in one place and the moment it puts it down in another. HyQ’s new controller lets it replan almost continuously, so it can adjust on the fly whether or not it’s mid-step. That makes it much more robust to external disturbances, such as slippery surfaces, mistakes in foot placement, or shoves from human meanies.
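To make the difference between “plan once at lift-off” and “replan every tick” concrete, here is a one-dimensional toy in Python. It assumes a hypothetical rule that aims the swing foot half a step ahead of the hip; it is not HyQ’s controller, which the article doesn’t detail, and `nominal_foothold` and `swing` are names invented for this sketch.

```python
def nominal_foothold(hip_x: float, step_length: float) -> float:
    """Hypothetical rule (illustration only): aim the foot half a step ahead of the hip."""
    return hip_x + 0.5 * step_length


def swing(hip_positions: list[float], lift_off_x: float,
          step_length: float, replan: bool) -> float:
    """Simulate one swing phase in 1D and return where the foot touches down.

    hip_positions holds the hip's x-position at each control tick. With
    replan=False the target is fixed at lift-off; with replan=True it is
    recomputed from the current hip position on every tick.
    """
    n = len(hip_positions)
    target = nominal_foothold(hip_positions[0], step_length)
    foot_x = lift_off_x
    for k, hip_x in enumerate(hip_positions):
        if replan:
            target = nominal_foothold(hip_x, step_length)
        # Move 1/(ticks left) of the remaining distance, so the foot
        # reaches the latest target exactly on the final tick.
        foot_x += (target - foot_x) / (n - k)
    return foot_x


# The robot is shoved 0.2 m forward halfway through a 10-tick swing.
hips = [0.0] * 5 + [0.2] * 5
print(swing(hips, -0.15, 0.3, replan=False))  # lands where it was planned at lift-off
print(swing(hips, -0.15, 0.3, replan=True))   # lands half a step ahead of the shoved hip
```

A real swing trajectory also has to lift the foot clear of the ground and respect joint limits; the point of the toy is only that recomputing the target on every tick lets a mid-step shove change where the foot ends up.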

The rest of the video shows a situation in which visual adaptation is critical to the health and happiness of the robot: gap crossing. Without visual feedback, gaps are potentially lethal to those skinny little robot legs. Rather than churn through an entire software stack devoted to interpreting sensor data and calculating optimal foot placement, HyQ uses a convolutional neural network to interpret the 3D map of the terrain in front of it, built from its onboard sensors. The network was trained on a bunch of terrain templates, including gaps, bars, rocks, and other nasty things. It selects footsteps up to 200 times faster than traditional planning systems, which both enables continuous replanning and opens up the option of more complex planning in the future, like specifying different gaits or body orientations to make the robot even more adaptable. And while it’s not in the video, the researchers tell us that HyQ can walk across those gaps even while it’s being yanked around.
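The article doesn’t say what criteria the network learned, so the Python sketch below substitutes a hypothetical hand-written rule for the trained CNN. It marks cells of a heightmap patch as unsafe if they sit well below ground level (a gap) or next to a sharp height change (an edge), then moves the foot to the safe cell closest to where it would nominally land. The thresholds, the `safe_mask` and `select_foothold` names, and the grid layout are all assumptions for illustration; the network’s job is to make this kind of per-step decision fast enough to repeat continuously.

```python
import numpy as np

# Hypothetical thresholds, chosen only for illustration.
GAP_DEPTH = 0.10  # m: cells this far below ground level count as part of a gap
EDGE_JUMP = 0.04  # m: height change to any neighbor that marks an edge


def safe_mask(heightmap: np.ndarray) -> np.ndarray:
    """True where a foot could land under a simple hand-written rule."""
    ground = np.median(heightmap)  # assume most of the patch is ground
    not_gap = heightmap > ground - GAP_DEPTH
    # Largest absolute height difference to the four neighbors of each cell.
    padded = np.pad(heightmap, 1, mode="edge")
    center = padded[1:-1, 1:-1]
    jumps = np.stack([
        np.abs(center - padded[:-2, 1:-1]),  # neighbor above
        np.abs(center - padded[2:, 1:-1]),   # neighbor below
        np.abs(center - padded[1:-1, :-2]),  # neighbor to the left
        np.abs(center - padded[1:-1, 2:]),   # neighbor to the right
    ])
    not_edge = jumps.max(axis=0) < EDGE_JUMP
    return not_gap & not_edge


def select_foothold(heightmap: np.ndarray, nominal: tuple[int, int]):
    """Return the safe cell (row, col) closest to the nominal foothold, or None."""
    rows, cols = np.nonzero(safe_mask(heightmap))
    if rows.size == 0:
        return None
    r0, c0 = nominal
    dist2 = (rows - r0) ** 2 + (cols - c0) ** 2
    best = int(np.argmin(dist2))  # ties go to the first cell in row-major order
    return int(rows[best]), int(cols[best])


# A flat 9 x 9 patch with a 0.3 m deep gap across columns 3-5.
terrain = np.zeros((9, 9))
terrain[:, 3:6] = -0.3
print(select_foothold(terrain, (4, 4)))  # -> (4, 1)
```

Here the nominal foothold falls in the gap, and the cells on the gap’s rim are rejected as edges, so the nearest acceptable cells are three columns away on either side; the tie goes to the one found first.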

Octavio Villarreal and Victor Barasuol, from the Dynamic Legged Systems lab at IIT, led by Claudio Semini, will be presenting this work at two IROS workshops on Friday: Development of Agile Robots, and Machine Learning in Robot Motion Planning. If you’re in Madrid, stop by and check it out, and if you’re not, ask yourself whether your commitment to robotics really could be just a bit more serious.

[ IIT ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.