Want an Energy-Efficient Data Center? Build It Underwater

Microsoft wants to submerge data centers to keep them cool and to harvest energy from the sea

When Sean James, who works on data-center technology for Microsoft, suggested that the company put server farms entirely underwater, his colleagues were a bit dubious. But for James, who had earlier served on board a submarine for the U.S. Navy, submerging whole data centers beneath the waves made perfect sense.

This tactic, he argued, would not only limit the cost of cooling the machines—an enormous expense for many data-center operators—but it could also reduce construction costs, make it easier to power these facilities with renewable energy, and even improve their performance.

Together with Todd Rawlings, another Microsoft engineer, James circulated an internal white paper promoting the concept. It explained how building data centers underwater could help Microsoft and other cloud providers manage today’s phenomenal growth in an environmentally sustainable way.

At many large companies, such outlandish ideas might have died a quiet death. But Microsoft researchers have a history of tackling challenges of vital importance to the company in innovative ways, even if the required work is far outside of Microsoft’s core expertise. The key is to assemble engineering teams by uniting Microsoft employees with colleagues from partner companies.

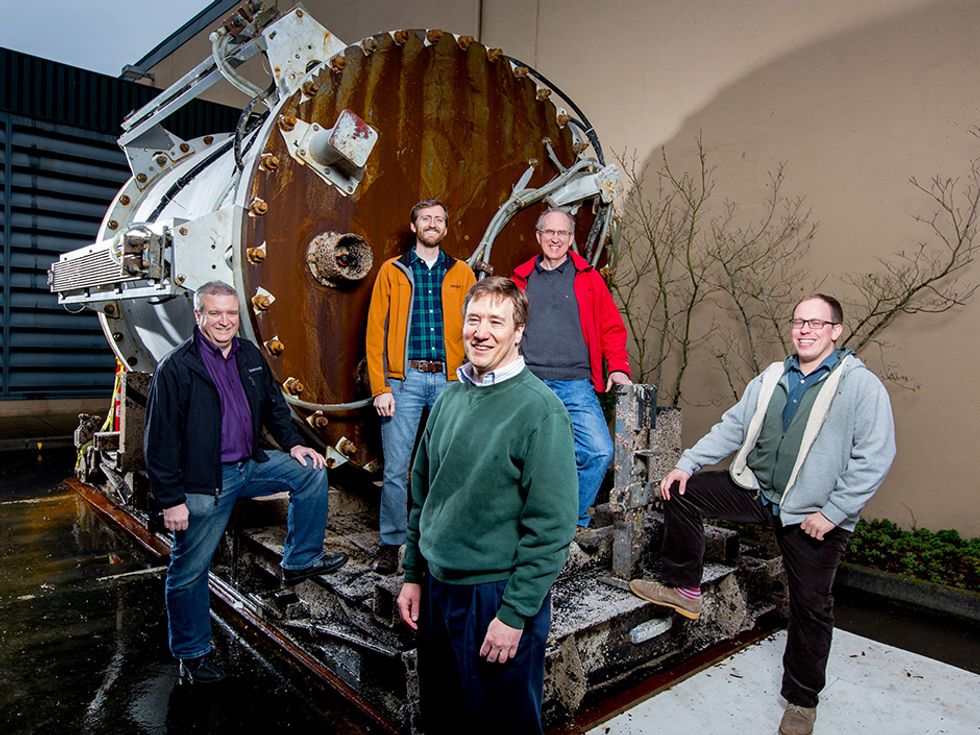

The four of us formed the core of just such a team, one charged with testing James’s far-out idea. So in August of 2014, we started to organize what soon came to be called Project Natick, a name chosen for no particular reason other than that our research group likes to name projects after cities in Massachusetts. And just 12 months later, we had a prototype serving up data from beneath the Pacific Ocean.

Project Natick had no shortage of hurdles to overcome. The first, of course, was keeping the inside of its big steel container dry. Another was figuring out the best way to use the surrounding seawater to cool the servers inside. And finally there was the matter of how to deal with the barnacles and other forms of sea life that would inevitably cover a submerged vessel—a phenomenon that should be familiar to anyone who has ever kept a boat in the water for an extended period. Clingy crustaceans and such would be a challenge because they could interfere with the transfer of heat from the servers to the surrounding water. These issues daunted us at first, but we solved them one by one, often drawing on time-tested solutions from the marine industry.

But why go to all this trouble? Sure, cooling computers with seawater would lower the air-conditioning bill and could improve operations in other ways, too, but submerging a data center comes with some obvious costs and inconveniences. Does trying to put thousands of computers beneath the sea really make sense? We think it does, for several reasons.

For one, it would offer a company like ours the ability to quickly target capacity where and when it is needed. Corporate planners would be freed from the burden of having to build these facilities long before they are actually required in anticipation of later demand. For an industry that spends billions of dollars a year constructing ever-increasing numbers of data centers, quick response time could provide enormous cost savings.

The reason underwater data centers could be built more quickly than land-based ones is easy enough to understand. Today, the construction of each such installation is unique. The equipment might be the same, but building codes, taxes, climate, workforce, electricity supply, and network connectivity are different everywhere. And those variables affect how long construction takes. We also observe their effects in the performance of our facilities, where otherwise identical equipment exhibits different levels of reliability depending on where it is located.

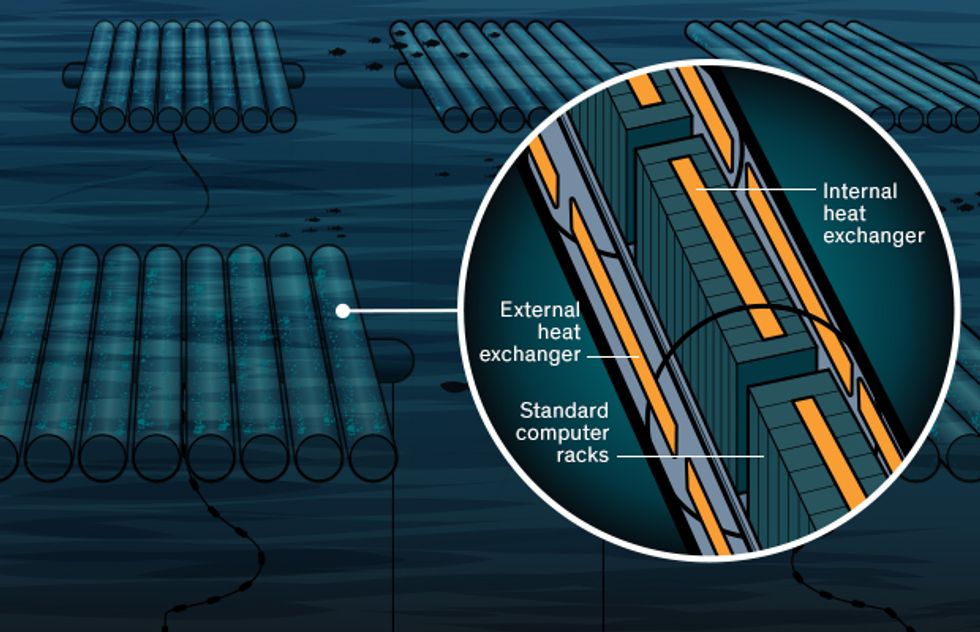

As we see it, a Natick site would be made up of a collection of “pods”—steel cylinders that would each contain possibly several thousand servers. Together they’d make up an underwater data center, which would be located within a few kilometers of the coast and placed between 50 and 200 meters below the surface. The pods could either float above the seabed at some intermediate depth, moored by cables to the ocean floor, or they could rest on the seabed itself.

Once we deploy a data-center pod, it would stay in place until it’s time to retire the set of servers it contains. Or perhaps market conditions would change, and we’d decide to move it somewhere else. This is a true “lights out” environment, meaning that the system’s managers would work remotely, with no one to fix things or change out parts for the operational life of the pod.

Now imagine applying just-in-time manufacturing to this concept. The pods could be constructed in a factory, provisioned with servers, and made ready to ship anywhere in the world. Unlike the case on land, the ocean provides a very uniform environment wherever you are. So no customization of the pods would be needed, and we could install them quickly anywhere that computing capacity was in short supply, incrementally increasing the size of an underwater installation to meet capacity requirements as they grew. Our goal for Natick is to be able to get data centers up and running, at coastal sites anywhere in the world, within 90 days from the decision to deploy.
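The incremental, just-in-time growth described above reduces to simple arithmetic: each period, compare forecast demand with what is already deployed and add only the pods needed to close the gap. The sketch below assumes identical pods with a fixed, hypothetical number of servers each and a per-period demand forecast; the function names are illustrative and not part of Project Natick.

```python
import math

def pods_needed(demand_servers: int, servers_per_pod: int) -> int:
    """Smallest number of identical pods whose combined capacity covers demand."""
    if servers_per_pod <= 0:
        raise ValueError("servers_per_pod must be positive")
    return math.ceil(max(demand_servers, 0) / servers_per_pod)

def deployment_plan(forecast: list[int], servers_per_pod: int) -> list[int]:
    """For each period's forecast demand, return how many new pods to add.

    Pods are never retired in this sketch; the installation only grows
    when demand outgrows the capacity already on the seabed.
    """
    deployed = 0
    plan = []
    for demand in forecast:
        target = pods_needed(demand, servers_per_pod)
        new = max(target - deployed, 0)
        deployed += new
        plan.append(new)
    return plan

# Hypothetical quarterly demand (in servers) and a hypothetical pod size.
print(deployment_plan([1500, 2600, 2400, 7000], servers_per_pod=1000))  # [2, 1, 0, 4]
```

Because every pod is the same, the planning problem never has to account for site-specific construction; a real planner would also need to place each order early enough to cover the 90-day deployment goal.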

The server rack used for the prototype data-center pod was populated with both servers and dummy loads that draw the same amount of power as a server.

Most new data centers are built in locations where electricity is inexpensive, the climate is reasonably cool, the land is cheap, and the facility doesn’t impose on the people living nearby. The problem with this approach is that it often puts data centers far from population centers, which limits how fast the servers can respond to requests.

For interactive experiences online, these delays can be problematic. We want Web pages to load quickly and video games such as Minecraft or Halo to be snappy and lag free. In years to come, there will be more and more interaction-rich applications, including those enabled by Microsoft’s HoloLens and other mixed reality/virtual reality technologies. So what you really want is for the servers to be close to the people they serve, something that rarely happens today.

It’s perhaps surprising that almost half of the world’s population lives within 100 kilometers of the sea. So placing data centers just offshore near coastal cities would put them much closer to customers than is the norm today.
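Distance matters because signals in optical fiber travel at roughly two-thirds the speed of light, about 200,000 kilometers per second, or about 5 microseconds per kilometer each way. The sketch below computes only that propagation floor; the distances are illustrative, and real routes add detours, switching, and processing time on top.

```python
FIBER_SPEED_KM_PER_S = 200_000  # approximate signal speed in silica fiber

def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber, in milliseconds.

    Ignores routing detours, queuing, and server processing, which only add delay.
    """
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000

# Illustrative distances: a pod a few km offshore versus far-away inland sites.
for d in (10, 100, 1000, 3000):
    print(f"{d:>5} km -> {min_round_trip_ms(d):.2f} ms")
```

A facility 10 km away costs about 0.1 millisecond of round-trip propagation, while one 3,000 km away cannot do better than about 30 milliseconds before any other delay is counted.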

If that isn’t reason enough, consider the savings in cooling costs. Historically, such facilities have used mechanical cooling—think home air-conditioning on steroids. This equipment typically keeps temperatures between 18 and 27 °C, but the amount of electricity consumed for cooling is sometimes almost as much as that used by the computers themselves.

More recently, many data-center operators have moved to free-air cooling, which means that rather than chilling the air mechanically, they simply use outside air. This is far cheaper, with a cooling overhead of just 10 to 30 percent, but it means the computers are subject to outside air temperatures, which can get quite warm in some locations. It also often means putting the centers at high latitudes, far from population centers.

What’s more, these facilities can consume a lot of water. That’s because they often use evaporation to cool the air somewhat before blowing it over the servers. This can be a problem in areas subject to droughts, such as California, or where a growing population depletes the local aquifers, as is happening in many developing countries. Even if water is abundant, adding it to the air makes the electronic equipment more prone to corrosion.

Our Natick architecture sidesteps all these problems. The interior of the data-center pod consists of standard computer racks with attached heat exchangers, which transfer the heat from the air to some liquid, likely ordinary water. That liquid is then pumped to heat exchangers on the outside of the pod, which in turn transfer the heat to the surrounding ocean. The cooled transfer liquid then returns to the internal heat exchangers to repeat the cycle.
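The internal loop has to carry every watt the servers dissipate, and the required flow follows from the basic heat balance Q = ṁ·c_p·ΔT. The sketch below assumes fresh water as the transfer liquid (as the text suggests) and uses a hypothetical 100 kW pod heat load with a 10 K rise across the racks; neither figure comes from the prototype.

```python
WATER_CP_J_PER_KG_K = 4186  # specific heat of fresh water near room temperature

def coolant_flow_kg_per_s(heat_load_w: float, delta_t_k: float,
                          cp: float = WATER_CP_J_PER_KG_K) -> float:
    """Mass flow the internal loop must carry so that heat_load_w warms the
    coolant by delta_t_k between inlet and outlet: m_dot = Q / (cp * dT)."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (cp * delta_t_k)

# Hypothetical pod: 100 kW of server heat, coolant warmed by 10 K across the racks.
flow = coolant_flow_kg_per_s(100_000, 10)
print(f"{flow:.2f} kg/s (roughly the same number of liters per second)")
```

Allowing a larger temperature rise across the racks lowers the flow, and therefore the pumping energy, at the cost of warmer coolant reaching the outer heat exchangers.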

Of course, the colder the surrounding ocean, the better this scheme will work. To get access to chilly seawater even during the summer or in the tropics, you need only put the pods sufficiently deep. For example, at 200 meters’ depth off the east coast of Florida, the water remains below 15 °C all year round.

Our tests with a prototype Natick pod, dubbed the “Leona Philpot” (named for an Xbox game character), began in August 2015. We submerged it at just 11 meters’ depth in the Pacific near San Luis Obispo, Calif., where the water ranged between 14 and 18 °C.

Over the course of this 105-day experiment, we showed that we could keep the submerged computers at temperatures that were at least as cold as mechanical cooling can achieve and with even lower energy overhead than the free-air approach—just 3 percent. That energy-overhead value is lower than any production or experimental data center of which we are aware.
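To put the 3 percent figure in perspective, the sketch below converts each cooling overhead quoted in this article into yearly cooling energy for a constant IT load. The 1 MW load is hypothetical, and the mechanical-cooling value of 100 percent is an illustrative upper end of "almost as much as" the computers use. The ratio 1 + overhead resembles power usage effectiveness (PUE), total facility energy divided by IT energy, but counts cooling only, not other losses such as power distribution.

```python
HOURS_PER_YEAR = 8760

# Cooling energy as a fraction of IT energy, from the figures in the text.
# The mechanical value is an illustrative upper end, not a measured number.
COOLING_OVERHEAD = {
    "mechanical (upper end)": 1.00,
    "free-air (high)": 0.30,
    "free-air (low)": 0.10,
    "Natick prototype": 0.03,
}

def annual_cooling_mwh(it_load_mw: float, overhead: float) -> float:
    """Energy spent on cooling per year for a constant IT load, in MWh."""
    return it_load_mw * HOURS_PER_YEAR * overhead

for name, overhead in COOLING_OVERHEAD.items():
    print(f"{name:24s} {annual_cooling_mwh(1.0, overhead):8.0f} MWh/yr")
```

For a 1 MW load, going from 10 percent to 3 percent overhead saves roughly 600 MWh a year.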

Because there was no need to provide an on-site staff with lights to see, air to breathe, parking spaces to fight over, or big red buttons to press in case of emergency, we made the atmosphere in the data-center pod oxygen free. (Our employees managed the prototype Natick pod from the comfort of their Microsoft offices.) We also removed all water vapor and dust. That made for a very benign environment for the electronics, minimizing problems with heat dissipation and connector corrosion.

Microsoft is committed to protecting the environment. In satisfying its electricity needs, for example, the company uses renewable sources as much as possible. To the extent that it can’t do that, it purchases carbon offsets. Consistent with that philosophy, we are looking to deploy our future underwater data centers near offshore sources of renewable energy—be it an offshore wind farm or some marine-based form of power generation that exploits the force of tides, waves, or currents.

These sources of energy are typically plentiful offshore, which means we should be able to match where people are with where we can place our energy-efficient underwater equipment and where we would have access to lots of green energy. Much as data centers today sometimes act as anchor tenants for new land-based renewable-energy farms, the same may hold true for marine energy farms in the future.

Another factor to consider is that conventionally generated electricity is not always easily available, particularly in the developing world. For example, 70 percent of the population of sub-Saharan Africa has no access to an electric grid. So if you want to build a data center to bring cloud services closer to such a population, you’d probably need to provide electricity for it, too.

Typically, electricity is carried long distances at 100,000 volts or higher, but ultimately servers use the same kinds of low voltages as your PC does. To drop the grid power to a voltage that the servers can consume generally requires three separate pieces of equipment. You also need backup generators and banks of batteries in case grid power fails.
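Losses in a chain of conversion equipment compound: power that passes through several stages in series arrives scaled by the product of their efficiencies. The per-stage efficiencies below are hypothetical placeholders for the three pieces of equipment mentioned above, chosen only to show the arithmetic.

```python
from math import prod

def chain_efficiency(stage_efficiencies: list[float]) -> float:
    """Overall efficiency of power passing through conversion stages in series."""
    for e in stage_efficiencies:
        if not 0 < e <= 1:
            raise ValueError("each stage efficiency must be in (0, 1]")
    return prod(stage_efficiencies)

# Hypothetical per-stage efficiencies for a three-stage grid-to-server path.
grid_path = [0.99, 0.98, 0.96]
overall = chain_efficiency(grid_path)
print(f"overall {overall:.4f}, lost {1 - overall:.2%}")  # overall 0.9314, lost 6.86%
```

Removing any one stage drops its factor from the product, so every conversion that can be avoided translates directly into energy that reaches the servers.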

Locating underwater data centers alongside offshore sources of power would allow engineers to simplify things. First, by generating power at voltages closer to what the servers require, we could eliminate some of the voltage conversions. Second, by powering the computers with a collection of independent wind or marine turbines, we could automatically build in redundancy. This would reduce both electrical losses and the capital cost (and complexity) associated with the usual data-center architecture, which is designed to protect against failure of the local power grid.
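The redundancy argument can be quantified with a binomial model: if a pod needs power from any k of n turbines and each is available with probability p, the chance of having enough supply is the binomial tail. This is a simplified sketch; it assumes turbines fail independently, which weather-driven sources only approximate, and the 8-of-10 requirement and 95 percent availability are hypothetical.

```python
from math import comb

def prob_at_least_k_of_n(n: int, k: int, p: float) -> float:
    """Probability that at least k of n independent sources are available,
    when each is available with probability p (binomial tail)."""
    if not (0 <= k <= n) or not (0.0 <= p <= 1.0):
        raise ValueError("need 0 <= k <= n and 0 <= p <= 1")
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# Hypothetical: the pod needs any 8 of 10 turbines, each up 95% of the time.
print(f"{prob_at_least_k_of_n(10, 8, 0.95):.4f}")
```

No single turbine is critical here; adding a turbine or two beyond the minimum raises the probability further without any dedicated backup generator.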

An added benefit of this approach is that the only real impact on the land is a fiber-optic cable or two for carrying data.

The first question everyone asks when we tell them about this idea is: How will you keep the electronics dry? The truth is that keeping things dry isn’t hard. The marine industry has been keeping equipment dry in the ocean since long before computers even existed, often in far more challenging contexts than anything we have done or plan to do.

The second question—one we asked ourselves early on—is how to cool the computers most efficiently. We explored a range of exotic approaches, including the use of special dielectric liquids and phase-change materials as well as unusual heat-transfer media such as high-pressure helium gas and supercritical carbon dioxide. While such approaches have their benefits, they raise thorny problems as well.

While we continue to investigate the use of exotic materials for cooling, for the near term we see no real need. Natick’s freshwater plumbing and radiator-like heat exchangers provide a very economical and efficient cooling mechanism, one that works just fine with standard servers.

A more pertinent issue, as we see it, is that an underwater data center will attract sea life, in effect forming an artificial reef. This process of colonization by marine organisms, called biofouling, starts with single-celled creatures, which are followed by somewhat larger organisms that feed on those cells, and so on up the food chain.

When we deployed our Natick prototype, crabs and fish began to gather around the vessel within 24 hours. We were delighted to have created a home for those creatures, so one of our main design considerations was how to maintain that habitat while not impeding the pod’s ability to keep its computers cool.

In particular, we knew that biofouling on external heat exchangers would disrupt the flow of heat from those surfaces. So we explored the use of various antifouling materials and coatings—even active deterrents involving sound or ultraviolet light—in hopes of making it difficult for life to take hold. Although it’s possible to physically clean the heat exchangers, relying on such interventions would be unwise, given our goal to keep operations as simple as possible.
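One standard way to see why fouling matters is to treat the growth as an extra thermal resistance in series with the clean exchanger wall: 1/U = 1/U_clean + R_f, with heat moved at Q = U·A·ΔT. The clean coefficient, area, temperature difference, and fouling resistance below are all hypothetical, and using a single ΔT (rather than a log-mean temperature difference) is a simplification.

```python
def fouled_u(u_clean: float, fouling_resistance: float) -> float:
    """Overall heat-transfer coefficient (W/m^2/K) after a fouling layer adds
    thermal resistance R_f (m^2*K/W) in series: 1/U = 1/U_clean + R_f."""
    return 1.0 / (1.0 / u_clean + fouling_resistance)

def heat_rejected_w(u: float, area_m2: float, delta_t_k: float) -> float:
    """Heat moved across the exchanger wall: Q = U * A * dT."""
    return u * area_m2 * delta_t_k

# Hypothetical external exchanger: U_clean = 500 W/m^2/K, 50 m^2 of surface,
# 5 K between the coolant and the surrounding seawater.
clean = heat_rejected_w(500, 50, 5)
dirty = heat_rejected_w(fouled_u(500, 0.001), 50, 5)
print(f"clean {clean / 1e3:.1f} kW, fouled {dirty / 1e3:.1f} kW ({dirty / clean:.0%})")
```

In this example a thin layer of growth cuts the heat the exchanger can reject by about a third, which the pod would have to offset with warmer coolant or more surface area.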

Thankfully, the heat exchangers on our Natick pod remained clean during its first deployment, even though the pod sat in a very challenging setting (shallow and close to shore, where ocean life is most abundant). But biofouling remains an area of active research, one that we continue to study with a focus on approaches that won’t harm the marine environment.

The biggest concern we had by far during our test deployment was that equipment would break. After all, we couldn’t send a tech to some server rack to swap out a bad hard drive or network card. Responses to hardware failures had to be made remotely or autonomously. Even in Microsoft’s data centers today, we and others have been working to increase our ability to detect and address failures without human intervention. Those same techniques and expertise will be applied to Natick pods of the future.
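Detecting failures without a technician on site usually starts with something as simple as heartbeats: each server reports in periodically, and one that stays silent too long is taken out of service so work can be routed around it. The sketch below is a hypothetical illustration of that idea, not Microsoft's tooling; the class, its threshold, and the choice never to bring a failed server back are all assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class HealthMonitor:
    """Marks a server failed after it misses `max_missed` consecutive heartbeat
    intervals, so work can be routed around it without a site visit."""
    max_missed: int = 3
    missed: dict[str, int] = field(default_factory=dict)
    failed: set[str] = field(default_factory=set)

    def tick(self, heard_from: set[str], all_servers: set[str]) -> set[str]:
        """Process one heartbeat interval; return servers newly marked failed."""
        newly_failed = set()
        for server in all_servers - self.failed:
            if server in heard_from:
                self.missed[server] = 0  # a heartbeat resets the counter
            else:
                self.missed[server] = self.missed.get(server, 0) + 1
                if self.missed[server] >= self.max_missed:
                    self.failed.add(server)
                    newly_failed.add(server)
        return newly_failed

    def healthy(self, all_servers: set[str]) -> set[str]:
        """Servers still eligible to receive work."""
        return all_servers - self.failed

servers = {"s1", "s2", "s3"}
mon = HealthMonitor(max_missed=2)
mon.tick({"s1", "s2", "s3"}, servers)   # everyone reports in
mon.tick({"s1", "s3"}, servers)         # s2 misses one interval
print(mon.tick({"s1", "s3"}, servers))  # s2 misses a second: {'s2'}
print(sorted(mon.healthy(servers)))     # ['s1', 's3']
```

In a sealed pod the "repair" step is simply leaving the failed machine in place and shifting its load elsewhere.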

How about security? Is your data safe from cyber or physical theft if it’s underwater? Absolutely. A Natick site would provide the same encryption and other security guarantees of a land-based Microsoft data center. While no people would be physically present, sensors would give a Natick pod an excellent awareness of its surroundings, including the presence of any unexpected visitors.

You might wonder whether the heat from a submerged data center would be harmful to the local marine environment. Not likely. Any heat generated by a Natick pod would rapidly be mixed with cool water and carried away by the currents. The water just meters downstream of a Natick vessel would get a few thousandths of a degree warmer at most.
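A rough energy balance shows why the warming is so small: the pod's heat is spread through the large mass of seawater a current carries past it each second, so ΔT = Q / (ṁ·c_p). The pod heat load, current speed, and mixing cross-section below are hypothetical, and the model assumes the heat mixes evenly into that flow.

```python
SEAWATER_DENSITY_KG_PER_M3 = 1025
SEAWATER_CP_J_PER_KG_K = 3990  # approximate specific heat of seawater

def downstream_rise_k(heat_w: float, current_m_per_s: float,
                      mixing_area_m2: float) -> float:
    """Mean temperature rise of water flowing past the pod, assuming the pod's
    heat mixes evenly into the flow crossing mixing_area_m2: dT = Q / (m_dot * cp)."""
    mass_flow = SEAWATER_DENSITY_KG_PER_M3 * current_m_per_s * mixing_area_m2
    return heat_w / (mass_flow * SEAWATER_CP_J_PER_KG_K)

# Hypothetical: 100 kW pod, 0.5 m/s current, heat mixed into a 10 m x 10 m cross-section.
print(f"{downstream_rise_k(100_000, 0.5, 100):.4f} K")
```

Even with these modest assumptions the result is about half a thousandth of a degree, and a faster current or wider mixing zone shrinks it further.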

So the environmental impact would be very modest. That’s important, because the future is bound to see a lot more data centers get built. If we have our way, though, people won’t actually see many of them, because they’ll be doing their jobs deep underwater.

This article appears in the March 2017 print issue as “Dunking the Data Center.”

About the Authors

Ben Cutler, Spencer Fowers, Jeffrey Kramer, and Eric Peterson all work at Microsoft Research in Redmond, Wash.
