Nvidia, the graphics-card master, wants to do for self-driving cars what it’s done for gaming and supercomputing. It wants to supply the hardware core: the automotive brain on which others can build their applications.

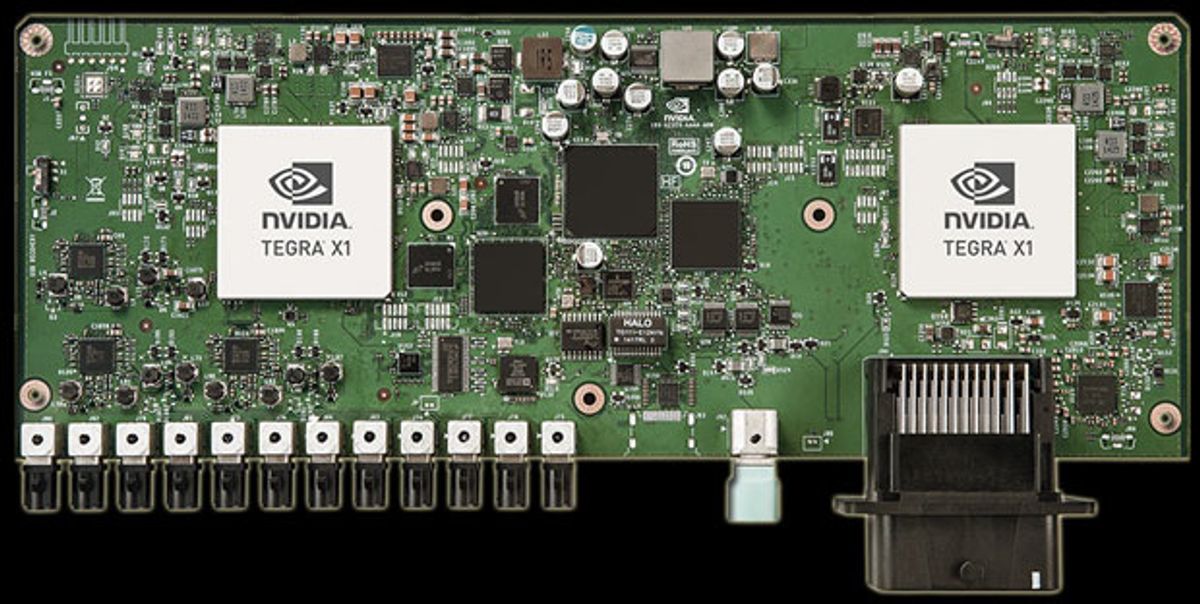

It’s called Drive PX, and next month it will be released to automakers and top-tier suppliers for US $10,000 a pop (that’s a development kit; future commercial versions will cost far less). It packs a pair of the company’s Tegra X1 processors, each capable of a bit more than a teraflop, or a trillion floating-point operations per second. Together they can manage up to 12 cameras, including units that monitor the driver for things like drowsiness or distraction. “Sensor fusion,” which combines the various streams of data into a single picture, can even include input from radar and its laser-ranging equivalent, lidar. The result is the ability to recognize cars, pedestrians and street signs.
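To make the idea of sensor fusion concrete, here is a minimal Python sketch. It is a toy illustration, not Nvidia's method: the `Detection` class, the `fuse` function, the 1.5-meter matching gap and all the sample readings are assumptions made up for this example. It merges detections from different sensors that land close together into a single object and records which sensors agreed.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    sensor: str        # "camera", "radar" or "lidar"
    x: float           # meters ahead of the car
    y: float           # meters left (+) or right (-) of center
    label: str         # what the sensor thinks it saw
    confidence: float  # 0.0 to 1.0 (made-up scale)


def fuse(detections, max_gap=1.5):
    """Merge detections that lie within max_gap meters of each other.

    The most confident detection in each cluster supplies the position
    and label; every other nearby detection just adds its sensor name.
    """
    fused = []
    for det in sorted(detections, key=lambda d: d.confidence, reverse=True):
        for obj in fused:
            if abs(obj["x"] - det.x) <= max_gap and abs(obj["y"] - det.y) <= max_gap:
                obj["sensors"].add(det.sensor)
                break
        else:
            # No existing object is close enough, so start a new one.
            fused.append({"x": det.x, "y": det.y,
                          "label": det.label, "sensors": {det.sensor}})
    return fused


# Hypothetical readings: camera and radar both see something 20 m ahead.
readings = [
    Detection("camera", 20.0, 1.0, "pedestrian", 0.9),
    Detection("radar", 20.5, 1.2, "unknown", 0.6),
    Detection("lidar", 50.0, -3.0, "street sign", 0.7),
]
picture = fuse(readings)
for obj in picture:
    print(obj["label"], sorted(obj["sensors"]))
```

A production system would track objects over time and weigh each sensor's known error characteristics; the sketch shows only the core idea of turning several data streams into one picture.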

If you’ve played Grand Theft Auto, you’ll have a good idea of what a professional driving simulator is like, and if you’ve played with simulators, you’ll have a passing familiarity with self-driving cars. These systems manage parallel streams of visual data—and parallel processing is what Nvidia’s graphics processing units, or GPUs, are designed for.

Until now, the main non-gaming application for GPUs has been in supercomputers. That work also bears on the self-driving problem, where it’s important to dive into huge databases in order to learn from experience. Nvidia calls this its “deep learning” project.

“The majority of top supercomputers use Nvidia GPUs, including Titan, the largest in the U.S.,” notes Michael Houston, the technical lead for the project. “Deep learning has different applications. The focus has been on the visual analysis of imaging in video—web science, embedded systems and automotive. Fundamentally, we’re processing pixels.”

Learning as you go would be the ideal experimental method, and such a skill would come in handy whenever the high-detail maps on which autonomous cars rely fail—for instance, when a truck jackknifes, closing a lane. Right now, though, safety regulators take a dim view of such cybernetic self-assertion, so anything a car learns must first be uploaded to the cloud for analysis offline. Only later can the car get the lesson via software updates.

Nvidia already has processors of one kind or another in some 8 million cars. Auto companies that work with it and are presumed to be lining up for the development kit include Tesla, Audi and BMW, as well as top-tier suppliers such as Delphi. These companies will build their own systems on top of the Nvidia framework.

“We produce a reference design,” says Danny Shapiro, Nvidia’s automotive senior director. “But the application layer—with the software, algorithms, and libraries—is still often the role of the automaker itself.”

Nvidia’s gaming savvy is also coming into play in other ways. The company uses similar GPU-based systems in highly realistic simulators that make it much easier to model problems that robotic cars are likely to face on the streets.

“That’s the cool thing about simulation,” Houston says. “There are lots of rare events, but you can create models of them. Take the failure case involving driver-assistance using radar: anything that’s highly reflective (metal confetti or a Mylar balloon, for example) will build a large radar signature. We actually had an engineer driving when an empty potato-chip bag blew in front and the car slammed on the brakes.”
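The kind of rare-event testing Houston describes can be sketched in a few lines of Python. Everything here is hypothetical: the `Scenario` fields, the signal strengths, the 0.5 threshold and both braking policies are invented for illustration and do not describe Nvidia's simulator or any real driver-assistance system. The sketch replays a handful of modeled events through a radar-only braking rule and through one that also requires camera confirmation, then lists the scenarios each rule gets wrong.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    radar_return: float         # made-up relative signal strength, 0.0 to 1.0
    camera_sees_obstacle: bool  # would a camera confirm a solid obstacle?
    should_brake: bool          # the correct response for this event


def radar_only(s, threshold=0.5):
    """Brake whenever the radar return is strong."""
    return s.radar_return >= threshold


def radar_plus_camera(s, threshold=0.5):
    """Brake only if a strong radar return is confirmed by the camera."""
    return s.radar_return >= threshold and s.camera_sees_obstacle


# Modeled events, including the reflective-debris cases from the article.
SCENARIOS = [
    Scenario("stopped car", 0.9, True, True),
    Scenario("empty potato-chip bag", 0.8, False, False),
    Scenario("Mylar balloon", 0.85, False, False),
    Scenario("clear road", 0.05, False, False),
]


def failures(policy):
    """Return the names of scenarios where the policy decides wrongly."""
    return [s.name for s in SCENARIOS if policy(s) != s.should_brake]


print("radar only:", failures(radar_only))
print("radar + camera:", failures(radar_plus_camera))
```

The value of the approach is that a failure seen once on the road can be added to the scenario list and retested after every software change.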

Lidar has its own weaknesses, which simulators can model and help to correct. “Lidar doesn’t like highly reflective objects, like store windows downtown,” Houston says. “It’s hard to test in the car, but you can build it in software and test that way. That’s how we harden our stuff before we go to the test track.”

Philip E. Ross is a senior editor at IEEE Spectrum. His interests include transportation, energy storage, AI, and the economic aspects of technology. He has a master's degree in international affairs from Columbia University and another, in journalism, from the University of Michigan.