Breaking the Latency Barrier

Robots and self-driving cars will need completely reengineered networks that have less than one millisecond of lag

For communications networks, bandwidth has long been king. With every generation of fiber optic, cellular, or Wi-Fi technology has come a jump in throughput that has enriched our online lives. Twenty years ago we were merely exchanging texts on our phones, but we now think nothing of streaming videos from YouTube and Netflix. No wonder, then, that video now consumes up to 60 percent of Internet bandwidth. If this trend continues, we might yet see full-motion holography delivered to our mobiles—a techie dream since Princess Leia's plea for help in Star Wars.

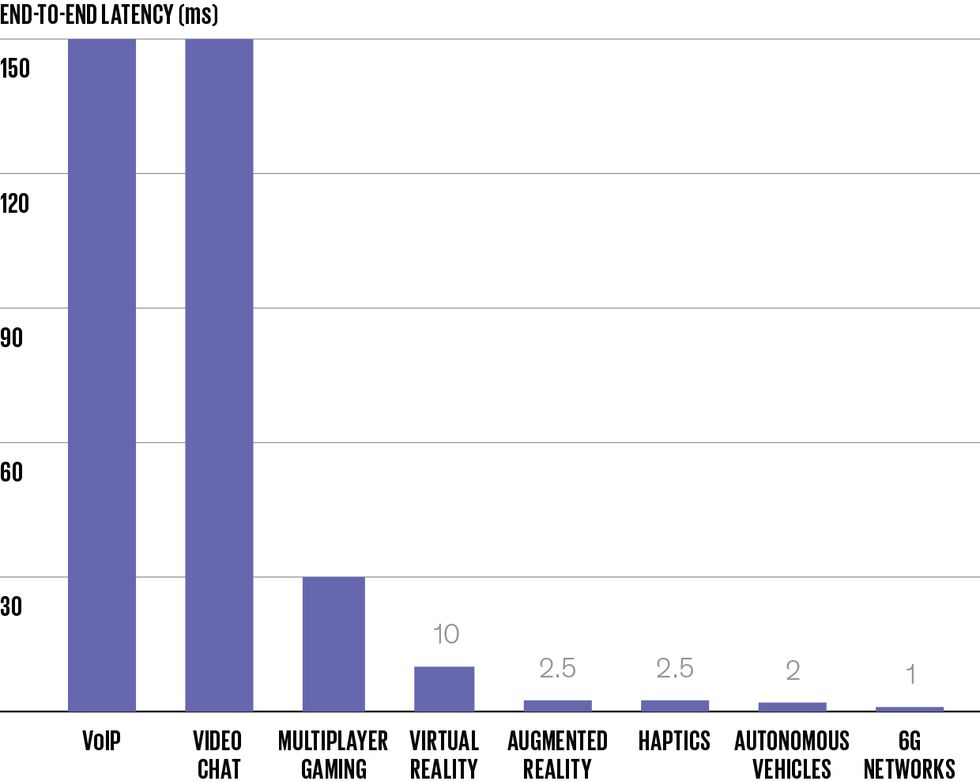

Recently, though, high bandwidth has begun to share the spotlight with a different metric of merit: low latency. The amount of latency varies drastically depending on how far in a network a signal travels, how many routers it passes through, whether it uses a wired or wireless connection, and so on. The typical latency in a 4G network, for example, is 50 milliseconds. Reducing latency to 10 milliseconds, as 5G and Wi-Fi are currently doing, opens the door to a whole slew of applications that high bandwidth alone cannot. With virtual-reality headsets, for example, a delay of more than about 10 milliseconds in rendering and displaying images in response to head movement is very perceptible, and it leads to a disorienting experience that is for some akin to seasickness.

Multiplayer games, autonomous vehicles, and factory robots also need extremely low latencies. Even as 5G and Wi-Fi make 10 milliseconds the new standard for latency, researchers, like my group at New York University's NYU Wireless research center, are already working hard on another order-of-magnitude reduction, to about 1 millisecond or less.

Pushing latencies down to 1 millisecond will require reengineering every step of the communications process. In the past, engineers have ignored sources of minuscule delay because they were inconsequential to the overall latency. Now, researchers will have to develop new methods for encoding, transmitting, and routing data to shave off even the smallest sources of delay. And immutable laws of physics—specifically the speed of light—will dictate firm restrictions on what networks with 1-millisecond latencies will look like. There's no one-size-fits-all technique that will enable these extremely low-latency networks. Only by combining solutions to all these sources of latency will it be possible to build networks where time is never wasted.

Until the 1980s, latency-sensitive technologies used dedicated end-to-end circuits. Phone calls, for example, were carried on circuit-switched networks that created a dedicated link between callers to guarantee minimal delays. Even today, phone calls need to have an end-to-end delay of less than 150 milliseconds, or it's difficult to converse comfortably.

At the same time, the Internet was carrying delay-tolerant traffic, such as emails, using technologies like packet switching. Packet switching is the Internet equivalent of a postal service, where mail is routed through post offices to reach the correct mailbox. Packets, or bundles of data between 40 and 1,500 bytes, are sent from point A to point B, where they are reassembled in the correct order. Using the technology available in the 1980s, delays routinely exceeded 100 milliseconds, with the worst delays well over 1 second.

Eventually, Voice over Internet Protocol (VoIP) technology supplanted circuit-switched networks, and now the last circuit switches are being phased out by providers. Since VoIP's triumph, there have been further reductions in latency to get us into the range of tens of milliseconds.

Latencies below 1 millisecond would open up new categories of applications that have long been sought. One of them is haptic communications, or communicating a sense of touch. Imagine balancing a pencil on your fingertip. The reaction time between when you see the pencil beginning to tip over and then moving your finger to keep it balanced is measured in milliseconds. A human-controlled teleoperated robot with haptic feedback would need a similar latency level.

Robots that aren't human controlled would also benefit from 1-millisecond latencies. Just like a person, a robot can avoid falling over or dropping something only if it reacts within a millisecond. But the powerful computers that process real-time reactions and the batteries that run them are heavy. Robots could be lighter and operate longer if their “brains” were kept elsewhere on a low-latency wireless network.

Before I get into all the ways engineers might build ultralow-latency networks, it would help to understand how data travels from one device to another. Of course, the signals have to physically travel along a transmission link between the devices. That journey isn't limited by just the speed of light, because delays are caused by switches and routers along the way. And even before that, data must be converted and prepped on the device itself for the journey. All of these parts of the process will need to be redesigned to consistently achieve submillisecond latencies.

Right on Time: Frame durations dictate how frequently devices and routers can transmit data. Reducing those durations means more data can be sent more rapidly. Illustrations: Greg Mably

Drop in the Bucket: Like water dripping into a leaky bucket, data packets can get held up at nodes if more are coming in than can be sent out. Congestion delays can be eased by allowing devices to monitor their transmission rates and throttle them if they're too high.

Fumble the Handoff: 5G networks require frequent signal handoffs, meaning plenty of opportunities to waste time on a dropped signal. “Baggage carousels” of base stations, linked with fiber optics, could work together to keep a connection intact.

Point of No Return: The speed of light sets a hard limit on how far a signal can travel and still reach its destination in a millisecond, which means either building servers and data centers closer to devices or finding a way to break a fundamental law of physics.

I should mention here that my research is focused on what's called the transport layer in the multiple layers of protocols that govern the exchange of data on the Internet. That means I'm concerned with the portion of a communications network that controls transmission rates and checks to make sure packets reach their destination in the correct order and without errors. While there are certainly small sources of delay on devices themselves, I'm going to focus on network delays.

The first issue is frame duration. A wireless access link—the link that connects a device to the larger, wired network—schedules transmissions within periodic intervals called frames. For a 4G link, the typical frame duration is 1 millisecond, so you could potentially lose that much time just waiting for your turn to transmit. But 5G has shrunk frame durations, lessening their contribution to delay.
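To see how much a shorter frame helps, the waiting time can be worked out directly. The sketch below is illustrative only. It assumes data can start transmitting only at a frame boundary, it works in whole microseconds, and it uses 125 microseconds as a hypothetical shorter frame. The function names are invented for this illustration and do not come from any standard.

```python
def wait_for_next_frame_us(ready_at_us: int, frame_us: int) -> int:
    """Microseconds until the next frame boundary (0 if already on one)."""
    return (-ready_at_us) % frame_us


def average_wait_us(frame_us: int) -> float:
    """Mean wait when data is equally likely to be ready at any microsecond."""
    total = sum(wait_for_next_frame_us(t, frame_us) for t in range(frame_us))
    return total / frame_us


if __name__ == "__main__":
    # 1000 us matches the 4G figure in the text; 125 us is an assumed example.
    for frame_us in (1000, 125):
        worst_case = wait_for_next_frame_us(1, frame_us)
        print(frame_us, worst_case, average_wait_us(frame_us))
```

Under these assumptions the worst-case wait is just under one frame and the average is about half a frame, so shrinking the frame shrinks both.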

Wi-Fi functions differently. Rather than using frames, Wi-Fi networks use random access, where a device transmits immediately and reschedules the transmission if it collides with another device's transmission in the link. The upside is that this method has shorter delays if there is no congestion, but the delays build up quickly as more device transmissions compete for the same channel. The latest version of Wi-Fi, Wi-Fi Certified 6 (based on the draft standard IEEE P802.11ax), addresses the congestion problem by introducing scheduled transmissions, just as 4G and 5G networks do.

When a data packet is traveling through the series of network links connecting its origin to its destination, it is also subject to congestion delays. Packets are often forced to queue up at links as both the amount of data traveling through a link and the amount of available bandwidth fluctuate naturally over time. In the evening, for example, more data-heavy video streaming could cause congestion delays through a link. Sending data packets through a series of wireless links is like drinking through a straw that is constantly changing in length and diameter. As delays and bandwidth change, one second you may be sucking up dribbles of data, while the next you have more packets than you can handle.

Congestion delays are unpredictable, so they cannot be avoided entirely. The responsibility for mitigating congestion delays falls to the Transmission Control Protocol (TCP), one part of the collection of Internet protocols that governs how computers communicate with one another. The most common implementations of TCP, such as TCP Cubic, measure congestion by sensing when the buffers in network routers are at capacity. At that point, packets are being lost because there is no room to store them. Think of it like a bucket with a hole in it, placed underneath a faucet. If the faucet is putting more water into the bucket than is draining through the hole, it fills up until eventually the bucket overflows. The bucket in this example is the router buffer, and if it “overflows,” packets are lost and need to be sent again, adding to the delay. The sender then adjusts its transmission rate to try to avoid flooding the buffer again.

The problem is that even if the buffer doesn't overflow, data can still be stuck there queuing for its turn through the bucket “hole.” What we want to do is allow packets to flow through the network without queuing up in buffers. YouTube uses a variation of TCP developed at Google called TCP BBR, short for Bottleneck Bandwidth and Round-Trip propagation time, with this exact goal. It works by adjusting the transmission rate until it matches the rate at which data is passing through routers. Going back to our bucket analogy, it constantly adjusts the flow of water out of the faucet to keep it the same as the flow out of the hole in the bucket.
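The bucket analogy can be made concrete with a toy simulation. The sketch below is not TCP Cubic or TCP BBR. It is a minimal model, under assumed numbers, of a single router buffer that receives a fixed number of packets per tick and drains a fixed number per tick. The function name and parameters are hypothetical.

```python
def simulate_buffer(arrivals, drain_per_tick, capacity):
    """Simulate a router buffer.

    Returns (packets_dropped, queue_depth_after_each_tick).
    """
    queue = 0
    dropped = 0
    history = []
    for incoming in arrivals:
        queue += incoming                       # faucet pours packets in
        if queue > capacity:                    # bucket overflows
            dropped += queue - capacity
            queue = capacity
        queue = max(0, queue - drain_per_tick)  # hole drains packets out
        history.append(queue)
    return dropped, history


if __name__ == "__main__":
    # Sender faster than the link: a standing queue forms, then packets drop.
    print(simulate_buffer([3] * 5, drain_per_tick=2, capacity=4))
    # Sender matched to the link: nothing waits in the buffer.
    print(simulate_buffer([2] * 5, drain_per_tick=2, capacity=4))
```

In the first run the queue never empties even on ticks where nothing is dropped, which is the queuing delay described above. In the second run the sending rate equals the drain rate and the buffer stays empty.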

For further reductions in congestion, engineers have to deal with previously ignorable tiny delays. One example of such a delay is the minuscule variation in the amount of time it takes each specific packet to transmit during its turn to access the wireless link. Another is the slight differences in computation times of different software protocols. These can both interfere with TCP BBR's ability to determine the exact rate to inject packets into the connection without leaving capacity unused or causing a traffic jam.

A focus of my research is how to redesign TCP to deliver low delays combined with sharing bandwidth fairly with other connections on the network. To do so, my team is looking at existing TCP versions, including BBR and others like the Internet Engineering Task Force's L4S (short for Low Latency Low Loss Scalable throughput). We've found that these existing versions tend to focus on particular applications or situations. BBR, for example, is optimized for YouTube videos, where video data typically arrives faster than it is being viewed. That means that BBR ignores bandwidth variations because a buffer of excess data has already made it to the end user. L4S, on the other hand, allows routers to prioritize time-sensitive data like real-time video packets. But not all routers are capable of doing this, and L4S is less useful in those situations.

My group is figuring out how to take the most effective parts of these TCP versions and combine them into a more versatile whole. Our most promising approach so far has devices continually monitoring for data-packet delays and reducing transmission rates when delays are building up. With that information, each device on a network can independently figure out just how much data it can inject into the network. It's somewhat like shoppers at a busy grocery store observing the checkout lines to see which ones are moving faster, or have shorter queues, and then choosing which lines to join so that no one line becomes too long.
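The general idea of slowing down when delays build up can be sketched in a few lines. This is not my group's algorithm. It is a generic delay-based rule with made-up parameters (`tolerance_ms`, `increase`, `backoff`), and it assumes the sender already knows the uncongested delay of the path (`base_delay_ms`).

```python
def next_rate(rate, measured_delay_ms, base_delay_ms,
              tolerance_ms=1.0, increase=1.0, backoff=0.5):
    """Return the sender's next transmission rate (arbitrary units)."""
    queuing_delay_ms = measured_delay_ms - base_delay_ms
    if queuing_delay_ms > tolerance_ms:
        return rate * backoff   # delays are building up: slow down
    return rate + increase      # path looks clear: probe for more bandwidth


def run(delays_ms, start_rate=10.0, base_delay_ms=5.0):
    """Apply next_rate to a sequence of delay measurements."""
    rates = [start_rate]
    for delay in delays_ms:
        rates.append(next_rate(rates[-1], delay, base_delay_ms))
    return rates


if __name__ == "__main__":
    print(run([5.0, 5.5, 8.0, 5.0]))
```

Each device runs this rule on its own measurements, so no central coordinator is needed. The hard part, which this sketch leaves out, is choosing the parameters so that competing connections end up sharing bandwidth fairly.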

For wireless networks specifically, reliably delivering latencies below 1 millisecond is also hampered by connection handoffs. When a cellphone or other device moves from the coverage area of one base station (commonly referred to as a cell tower) to the coverage of a neighboring base station, it has to switch its connection. The network initiates the switch when it detects a drop in signal strength from the first station and a corresponding rise in the second station's signal strength. The handoff occurs during a window of several seconds or more as the signal strengths change, so it rarely interrupts a connection.

But 5G is now tapping into millimeter waves—the frequencies above 20 gigahertz—which bring unprecedented hurdles. These frequency bands have not been used before because they typically don't propagate as far as lower frequencies, a shortcoming that has only recently been addressed with technologies like beamforming. Beamforming works by using an array of antennas to point a narrow, focused transmission directly at the intended receiver. Unfortunately, obstacles like pedestrians and vehicles can entirely block these beams, causing frequent connection interruptions.

One option to avoid interruptions like these is to have multiple millimeter-wave base stations with overlapping coverage, so that if one base station is blocked, another base station can take over. However, unlike regular cell-tower handoffs, these handoffs are much more frequent and unpredictable because they are caused by the movement of people and traffic.

My team at NYU is developing a solution in which neighboring base stations are also connected by a fiber-optic ring network. All of the traffic to this group of base stations would be injected into, and then circulate around, the ring. Any base station connected to a cellphone or other wireless device can copy the data as it passes by on the ring. If one base station becomes blocked, no problem: The next one can try to transmit to the device. If it, too, is blocked, the one after that can have a try. Think of a baggage carousel at an airport, with a traveler standing near it waiting for her luggage. She may be “blocked” by others crowding around the carousel, so she might have to move to a less crowded spot. Similarly, the fiber-ring system would allow reception as long as the mobile device is anywhere in the range of at least one unblocked base station.
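The fallback logic of the ring can be illustrated with a short sketch. It models only the "try the next station" step, not the fiber ring itself or any real protocol. The station names and the `first_unblocked` function are hypothetical.

```python
def first_unblocked(ring, blocked, start=0):
    """Walk the ring from index `start`; return the first station not blocked.

    Returns None if every station on the ring is blocked.
    """
    n = len(ring)
    for hop in range(n):
        station = ring[(start + hop) % n]  # wrap around the ring
        if station not in blocked:
            return station
    return None


if __name__ == "__main__":
    ring = ["A", "B", "C"]
    print(first_unblocked(ring, blocked={"A"}))            # next station takes over
    print(first_unblocked(ring, blocked={"C"}, start=2))   # wraps past the end
    print(first_unblocked(ring, blocked={"A", "B", "C"}))  # nobody can transmit
```

Because every station sees the same data as it circulates, the choice of which one transmits can change from moment to moment without rerouting the traffic.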

There's one final source of latency that can no longer be ignored, and it's immutable: the speed of light. Because light travels so quickly, it used to be possible to ignore it in the presence of other, larger sources of delay. It cannot be disregarded any longer.

Take, for example, the robot we discussed earlier, with its computational “brain” in a server in the cloud. Servers and large data centers are often located hundreds of kilometers away from end devices. But because light travels at a finite speed, the robot's brain, in this case, cannot be too far away. Moving at the speed of light, wireless communications travel about 300 kilometers per millisecond. Signals in optical fiber are even slower, at about 200 km/ms. Because the robot would need round-trip communications below 1 millisecond, a signal has at most half a millisecond to travel each way, so the maximum possible distance between the robot and its brain over fiber is about 100 km. And that's ignoring every other source of possible delay.
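The distance limit is simple arithmetic, sketched below using the two propagation speeds quoted in the text. The function name is invented for illustration, and the calculation assumes propagation is the only source of delay.

```python
# Propagation speeds quoted in the text, in kilometers per millisecond.
SPEED_KM_PER_MS = {"wireless": 300.0, "fiber": 200.0}


def max_one_way_distance_km(round_trip_budget_ms: float, medium: str) -> float:
    """Farthest a server can be if propagation alone uses the whole budget."""
    one_way_ms = round_trip_budget_ms / 2.0  # the signal must go there and back
    return SPEED_KM_PER_MS[medium] * one_way_ms


if __name__ == "__main__":
    for medium in SPEED_KM_PER_MS:
        print(medium, max_one_way_distance_km(1.0, medium), "km")
```

Any time spent on framing, queuing, or computation comes out of the same 1-millisecond budget, so the practical distance is shorter still.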

The only solution we see to this problem is to move away from traditional cloud computing toward what is known as edge computing. Indeed, the push for submillisecond latencies is driving the development of edge computing. This method places the actual computation as close to the devices as possible, rather than in server farms hundreds of kilometers away. However, finding the real estate for these servers near users will be a costly challenge for service providers.

There's no doubt that as researchers and engineers work toward 1-millisecond delays, they will find more sources of latency I haven't mentioned. When every microsecond matters, they'll have to get creative to whittle away the last few slivers on the way to 1 millisecond. Ultimately, the new techniques and technologies that bring us to these ultralow latencies will be driven by what people want to use them for.

When the Internet was young, no one knew what the killer app might be. Universities were excited about remotely accessing computing power or facilitating large file transfers. The U.S. Department of Defense saw value in the Internet's decentralized nature in the event of an attack on communications infrastructure. But what really drove usage turned out to be email, which was more relevant to the average person. Similarly, while factory robots and remote surgery will certainly benefit from submillisecond latencies, it is entirely possible that neither emerges as the killer app for these technologies. In fact, we probably can't confidently predict what will turn out to be the driving force behind vanishingly small delays. But my money is on multiplayer gaming.

This article appears in the November 2020 print issue as “Breaking the Millisecond Barrier.”
