Brain-machine interface (BMI) technology, for all its decades of development, still awaits widespread use. The reasons include hardware and software that are not yet up to the task in noninvasive approaches, which use electroencephalogram (EEG) sensors placed on the scalp, and the need for surgery in approaches that rely on brain implants.

Now, researchers at the University of Technology Sydney (UTS), Australia, in collaboration with the Australian Army, have developed portable, prototype dry sensors that achieve 94 percent of the accuracy of benchmark wet sensors, but without the latter’s awkwardness, lengthy setup time, need for messy gels, and limited reliability outside the lab.

“Dry sensors have performed poorly compared to the gold-standard silver/silver chloride wet sensors,” says Francesca Iacopi, from the UTS Faculty of Engineering and Information Technology. “This is especially the case when monitoring EEG signals from hair-covered, curved areas of the scalp. That’s why [existing dry sensors] are needle-shaped, bulky, and uncomfortable for users.”

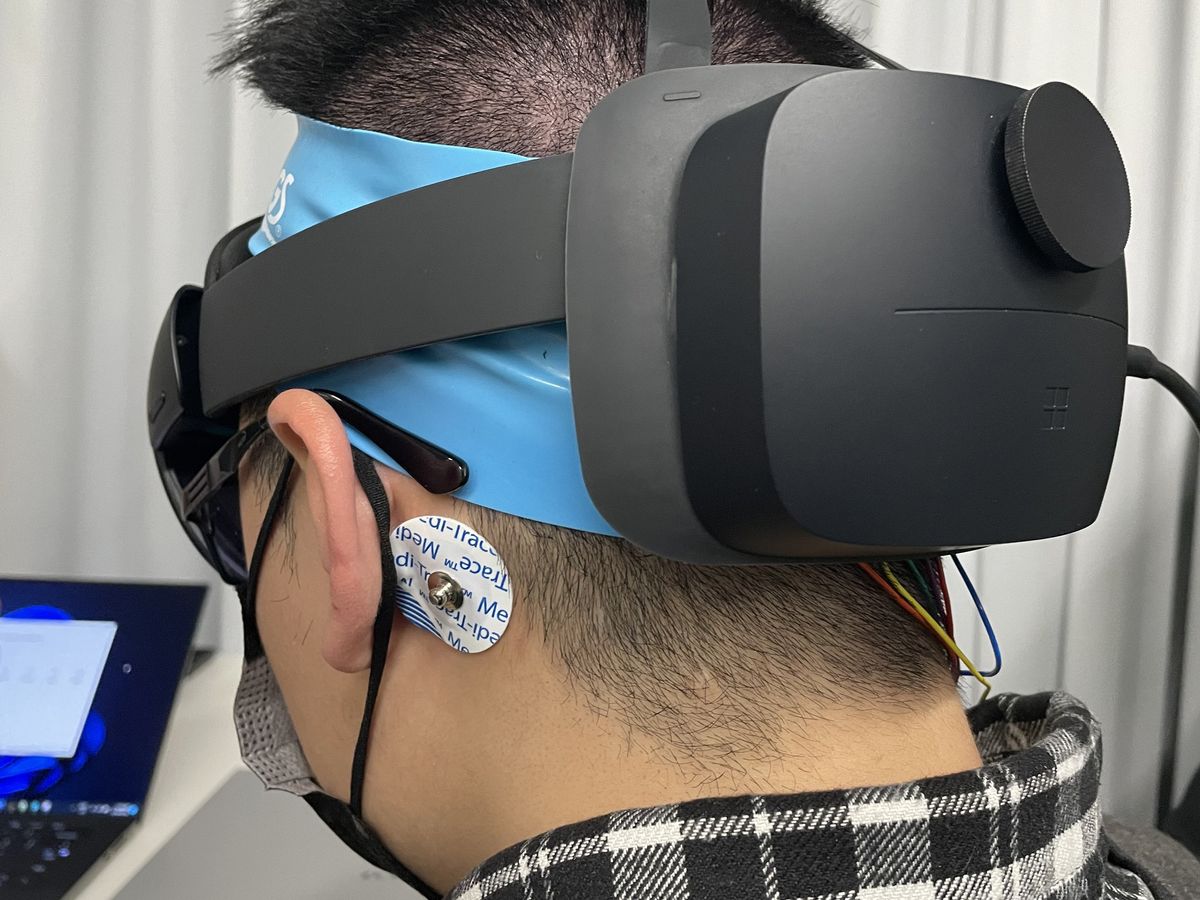

Iacopi, together with Chin-Teng Lin, a faculty colleague who specializes in BMI algorithm research, has developed three-dimensional micropatterned sensors that use subnanometer-thick epitaxial graphene as the contact surface. The sensors can be attached to the back of the head, the best location for detecting EEG signals from the visual cortex, the region of the brain that processes visual information.

“As long as the hair is short, the sensors provide enough skin contact and low impedance to compare well on a signal-to-noise basis with wet sensors,” says Iacopi. “And we’ve used them in a field test to demonstrate hands-free operations of a quadruped robot using only brain signals.”

The sensors are fabricated on a silicon substrate, over which a layer of cubic silicon carbide (3C-SiC) is deposited and patterned using photolithography and etching to form structures approximately 10 micrometers thick. According to the researchers, three-dimensional designs are crucial for obtaining good contact with the curved, hair-covered part of the scalp. A catalytic alloy method is then used to grow epitaxial graphene over the surface of the patterned structure.

The researchers chose SiC on silicon because it is easier to pattern and to integrate with silicon than bulk SiC. As for graphene, “it’s extremely conductive, it’s biocompatible, and it’s resilient and highly adhesive to its substrate,” says Iacopi. In addition, “it can be hydrated and act like a sponge to soak up the moisture and sweat on the skin, which increases its conductivity and lowers impedance.”

Brain Robotics Interface

Several patterns were tested, and the researchers chose a hexagonal structure, which provided the best contact with the skin through the hair. With redundancy in mind, eight sensors were attached with pin buttons to a custom-made sensor pad, which was then mounted on an elastic headband wrapped around the operator’s head. All eight sensors recorded EEG signals to varying degrees, depending on their location and the pressure from the headband, Lin explains. Results of the tests were published last month in ACS Applied Nano Materials.

To test the sensors, an operator is fitted with a head-mounted augmented-reality lens that displays six white flickering squares, each representing a different command. When the operator concentrates on a specific square, a particular collective biopotential is produced in the visual cortex and picked up by the sensors. The signal is sent via Bluetooth to a decoder in the head mount, which converts it into the intended command; the command is then transmitted wirelessly to a receiver in the robot.
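The article does not say how the decoder works out which square the operator is watching. Flickering on-screen targets are the usual setup for steady-state visually evoked potential (SSVEP) interfaces, in which the visual cortex responds at the flicker rate of the attended target, so the sketch below assumes that scheme. It is a deliberately simple spectral-power classifier, not the UTS team's decoder (published SSVEP systems often use more robust methods such as canonical correlation analysis): for each candidate flicker rate it sums the signal power at that frequency and its second harmonic across all eight channels, then picks the largest. The flicker rates, the 250 Hz sampling rate, the 2-second window, and the names `bin_power` and `decode_target` are all hypothetical.

```python
import math
import random
from typing import List, Sequence

# Hypothetical flicker rates (Hz), one per on-screen square. The article does
# not report the real values; these whole numbers are chosen so that no
# target's second harmonic lands on another target's fundamental.
FLICKER_HZ = [6.0, 7.0, 8.0, 9.0, 11.0, 13.0]


def bin_power(signal: Sequence[float], freq: float, fs: float) -> float:
    """Power of `signal` at `freq` (Hz), from a single-bin discrete Fourier transform."""
    re = im = 0.0
    for n, x in enumerate(signal):
        angle = 2.0 * math.pi * freq * n / fs
        re += x * math.cos(angle)
        im -= x * math.sin(angle)
    return (re * re + im * im) / len(signal)


def decode_target(channels: Sequence[Sequence[float]], fs: float,
                  freqs: Sequence[float] = FLICKER_HZ, harmonics: int = 2) -> int:
    """Index of the flicker rate with the most power, summed over all channels
    and over the first `harmonics` multiples of each rate."""
    scores: List[float] = []
    for f in freqs:
        score = 0.0
        for ch in channels:
            for h in range(1, harmonics + 1):
                score += bin_power(ch, h * f, fs)
        scores.append(score)
    return max(range(len(scores)), key=lambda i: scores[i])


if __name__ == "__main__":
    fs = 250.0        # hypothetical sampling rate (Hz)
    n_samples = 500   # 2-second analysis window
    rng = random.Random(0)
    # Eight channels see the same 9 Hz response with different strengths,
    # mimicking sensors with varying contact, plus Gaussian noise.
    gains = [1.0, 0.8, 0.6, 0.9, 0.5, 0.7, 0.4, 0.3]
    channels = [
        [g * math.sin(2.0 * math.pi * 9.0 * n / fs) + rng.gauss(0.0, 1.0)
         for n in range(n_samples)]
        for g in gains
    ]
    print(decode_target(channels, fs))  # → 3 (the 9 Hz square)
```

Summing over channels is one simple way to use the eight redundant sensors: channels with weak contact add little signal, but the stronger channels still make the attended target stand out. With a 2-second window, each whole-number flicker rate falls exactly on a DFT bin, which keeps the targets from leaking into one another's scores.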

“The system can issue up to nine commands at present, though only six commands have been tested and verified for use with the graphene sensors,” says Lin. “Each command corresponds to a specific action or function such as go forward, turn right, or stop. We will add more commands in the future.”
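The article does not describe how a decoded target is translated into a robot action beyond the examples Lin gives. A minimal sketch, assuming a fixed lookup table with nine slots of which six are populated: only "go forward", "turn right", and "stop" come from the article; the other three labels, the slot ordering, and the names `VERIFIED_COMMANDS` and `command_for` are hypothetical.

```python
from typing import Dict

MAX_COMMANDS = 9  # capacity stated in the article

# Six populated slots. Only "go forward", "turn right", and "stop" are named in
# the article; the remaining three labels are hypothetical placeholders.
VERIFIED_COMMANDS: Dict[int, str] = {
    0: "go forward",
    1: "turn right",
    2: "stop",
    3: "turn left",      # hypothetical
    4: "go backward",    # hypothetical
    5: "hold position",  # hypothetical
}


def command_for(target_index: int) -> str:
    """Translate a decoded target index into a robot command string.

    Raises ValueError for indices outside the nine-slot capacity or for slots
    that have no verified command yet.
    """
    if not 0 <= target_index < MAX_COMMANDS:
        raise ValueError(f"index {target_index} is outside 0..{MAX_COMMANDS - 1}")
    if target_index not in VERIFIED_COMMANDS:
        raise ValueError(f"slot {target_index} has no verified command")
    return VERIFIED_COMMANDS[target_index]


if __name__ == "__main__":
    print(command_for(2))  # stop
    try:
        command_for(7)
    except ValueError as err:
        print(err)  # slot 7 has no verified command
```

Rejecting the unassigned slots explicitly, rather than silently ignoring them, makes it straightforward to add the remaining commands later without changing the caller.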

The Australian Army successfully carried out two field tests using a quadruped robot. In the first, the soldier operator had the robot follow a series of visual guides laid out over rough ground. In the second, the operator took on the role of a section commander, directing both the robot and the soldiers on the team as they conducted a simulated clearance of several buildings in an urban combat setting, with the robot going ahead of the soldiers to check out the buildings.