This article is part of our exclusive IEEE Journal Watch series in partnership with IEEE Xplore.

Driving in winter or in stormy conditions can induce feelings of fear and inspire more caution. So could some of the same hallmarks of fear and defensive driving somehow be programmed into a self-driving car? New research suggests that yes, AI systems can be made safer, more cautious drivers by being given neural traits similar to those humans experience when they feel fear.

In fact, the researchers find, this trick can help a self-driving AI system perform more safely than other leading autonomous vehicle systems.

A new kind of “fear-inspired” reinforcement learning technique is proving useful in making self-driving cars safer.

Chen Lv, an associate professor in the School of Mechanical and Aerospace Engineering at Nanyang Technological University in Singapore and director of its AutoMan Research Lab, helped codesign the new system. He notes that in recent years, neuroscience and psychology have been digging deeper into the inner workings of the human brain, including the amygdala, a brain region central to processing emotions such as fear. “Fear may be the most fundamental and crucial emotion for both humans and animals in terms of survival,” says Lv.

Studies in psychology and neuroscience suggest, he says, that not only fear but also the ability to imagine and forecast hazardous situations plays a role in survival. “Motivated by [these] insights, we devised a brainlike machine-intelligence paradigm... to acquire a sense of fear, thereby enhancing or ensuring safety,” explains Lv.

The autonomous driving agents developed by Lv’s team, he says, learned to cope with hazardous scenarios through iteration and forecasting. This significantly reduced how often the system made unsafe decisions or took unsafe actions when interacting with its environment.

“We can imagine various unpleasant or frightening scenarios in our brains,” Lv says. “Therefore, we understand how to more effectively avoid these frightening situations, such as collision avoidance.”

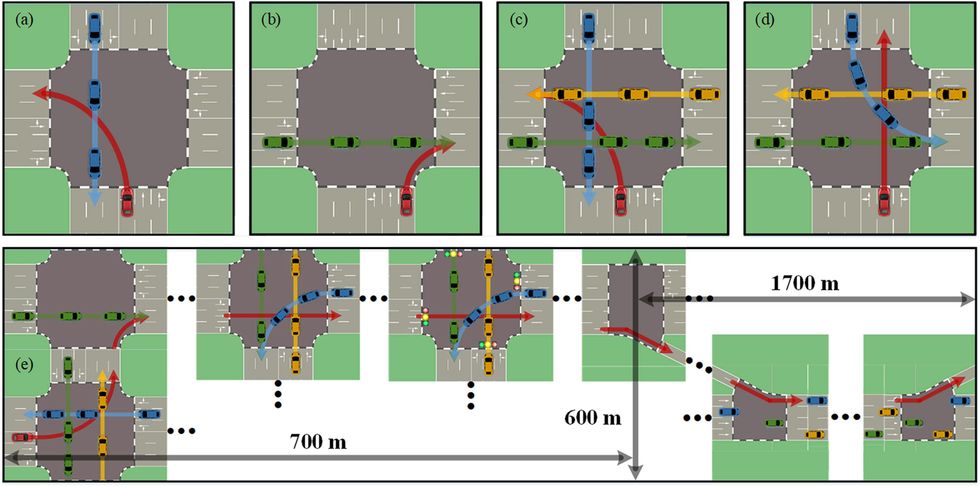

In their study, Lv and his team tested their approach, called FNI-RL (Fear-Neuro-Inspired Reinforcement Learning), in simulation and compared it with 10 baselines, both conventional and autonomous driving agents. These included a basic intelligent-driver model, an adversarial imitation learning agent, and a cohort of licensed human drivers.

Their results show, Lv says, that FNI-RL performs much better than other AI agents. For example, in one short-distance scenario (turning left at an intersection), FNI-RL improved driving performance by 1.55 to 18.64 percent compared with the other autonomous systems. In a longer simulated test covering 2,400 meters, FNI-RL improved driving performance by as much as 64 percent compared with other autonomous systems. Crucially, FNI-RL was more likely to reach its target lane without any safety violations, such as collisions or running a red light.

The researchers also tested FNI-RL against 30 human drivers on a driving simulator across three different scenarios, including one in which another vehicle suddenly cuts in front. FNI-RL outperformed the humans in all three scenarios.

Lv notes that these are only initial tests, and considerable work remains before the system could, for instance, be pitched to a carmaker or autonomous vehicle company. He says he is interested in combining the FNI-RL model with other AI models that handle temporal sequences, such as large language models, which could further improve performance. “[This could lead to] a high-level embodied AI and trustworthy autonomous driving, making our transportation safer and our world better,” he says.

“As far as I know,” Lv adds, “this research is among the first in the exploration of fear-neuro-inspired AI for realizing safe autonomous driving.”

The researchers detailed their work in a study published in October in IEEE Transactions on Pattern Analysis and Machine Intelligence.


Michelle Hampson is a freelance writer based in Halifax. She frequently contributes to Spectrum's Journal Watch coverage, which highlights newsworthy studies published in IEEE journals.