Video Friday is your weekly selection of awesome robotics videos, collected by your Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next two months; here's what we have so far (send us your events!):

IEEE TechEthics Conference – October 13, 2017 – Washington, D.C.

HAI 2017 – October 17-20, 2017 – Bielefeld, Germany

CBS 2017 – October 17-19, 2017 – Beijing, China

World MoveIt! Day – October 18, 2017 – Six locations worldwide

ICUAS 2017 – October 22-29, 2017 – Miami, Fla., USA

Robótica 2017 – November 7-11, 2017 – Curitiba, Brazil

Humanoids 2017 – November 15-17, 2017 – Birmingham, U.K.

iREX 2017 – November 29-December 2, 2017 – Tokyo, Japan

Let us know if you have suggestions for next week, and enjoy today's videos.

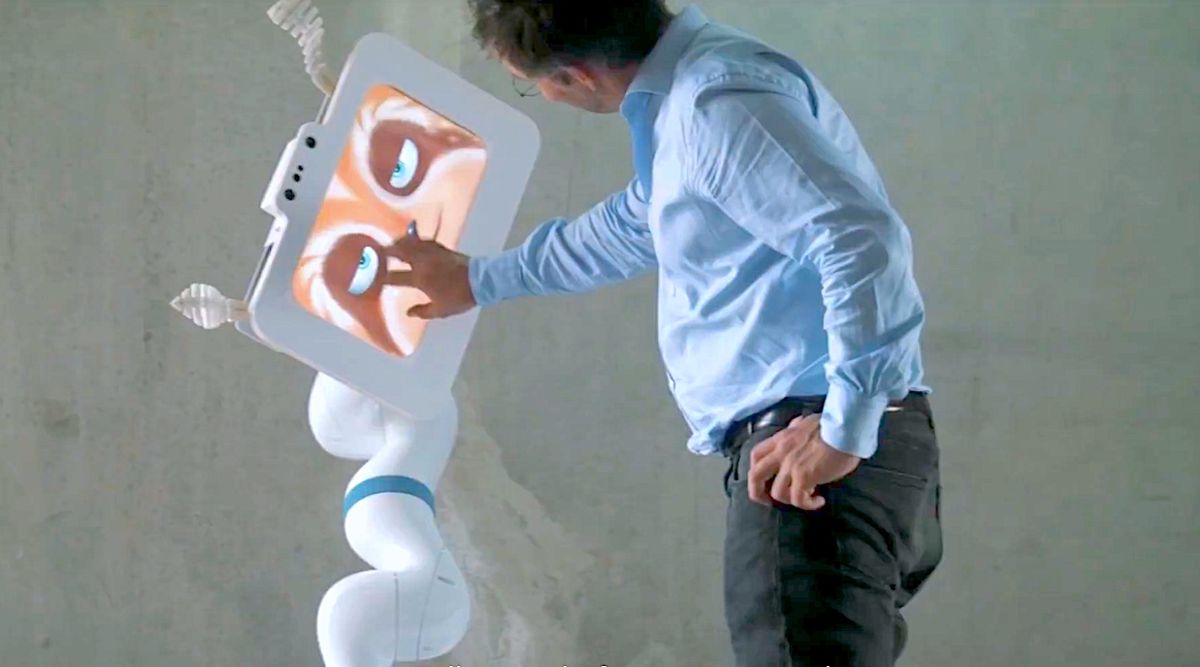

Spoon is a French company that designs new kinds of robotic creatures:

“Our creatures are not humanoids on purpose, as we believe that humanoid robots implicitly promise more than they can actually do,” their website says. I like it.

[ Spoon.ai ]

Robot manipulation, with a twist.

From Michael T. Tolley’s group at UCSD, and presented at IROS last month:

The gripper has three fingers. Each finger is made of three soft flexible pneumatic chambers, which move when air pressure is applied. This gives the gripper more than one degree of freedom, so it can actually manipulate the objects it’s holding. For example, the gripper can turn screwdrivers, screw in lightbulbs and even hold pieces of paper, thanks to this design.

In addition, each finger is covered with a smart, sensing skin. The skin is made of silicone rubber, where sensors made of conducting carbon nanotubes are embedded. The sheets of rubber are then rolled up, sealed and slipped onto the flexible fingers to cover them like skin.

Next steps include adding machine learning and artificial intelligence to data processing so that the gripper will actually be able to identify the objects it’s manipulating, rather than just model them. Researchers also are investigating using 3D printing for the gripper’s fingers to make them more durable.

[ Bioinspired Design Lab ] via [ UCSD ]

Happy fifth birthday to ROS-Industrial!

ROS itself is about to turn 10; that’ll happen next month and we hear there’s some exciting stuff planned.

[ ROS-I ]

We could watch this all day.

From OpenAI:

We’ve found that self-play allows simulated AIs to discover physical skills like tackling, ducking, faking, kicking, catching, and diving for the ball, without explicitly designing an environment with these skills in mind. Self-play ensures that the environment is always the right difficulty for an AI to improve. Taken alongside our Dota 2 self-play results, we have increasing confidence that self-play will be a core part of powerful AI systems in the future.

We set up competitions between multiple simulated 3D robots on a range of basic games, trained each agent with simple goals (push the opponent out of the sumo ring, reach the other side of the ring while preventing the other agent from doing the same, kick the ball into the net or prevent the other agent from doing so, and so on), then analyzed the different strategies that emerged.

These agents also exhibit transfer learning, applying skills learned in one setting to succeed in another never-before-seen one. In one case, we took the agent trained on the self-play sumo wrestling task and faced it with the task of standing while being perturbed by “wind” forces. The agent managed to stay upright despite never seeing the windy environment or observing wind forces, while agents trained to walk using classical reinforcement learning would fall over immediately.

Though it is possible to design tasks and environments that require each of these skills, this requires effort and ingenuity on the part of human designers, and the agents’ behaviors will be bounded in complexity by the problems that the human designer can pose for them. By developing agents through thousands of iterations of matches against successively better versions of themselves, we can create AI systems that successively bootstrap their own performance.
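To make the bootstrapping idea concrete, here is a deliberately tiny sketch of self-play. It is not OpenAI's method: their agents are simulated 3D robots trained with reinforcement learning, whereas this toy reduces an "agent" to a single skill number and a "match" to a comparison. The names `play_match` and `self_play` are made up for illustration. What it shows is only the loop structure: a perturbed copy of the current agent challenges it, and the winner becomes the next opponent.

```python
import random


def play_match(challenger, champion):
    """Toy stand-in for a sumo match (assumption): the higher skill value wins."""
    return challenger > champion


def self_play(iterations=1000, seed=0):
    """Repeatedly pit a perturbed copy of the champion against the champion.

    Returns the list of successive champions, starting from skill 0.0.
    """
    rng = random.Random(seed)
    champion = 0.0
    history = [champion]
    for _ in range(iterations):
        # The challenger is a slightly mutated copy of the current best agent.
        challenger = champion + rng.gauss(0.0, 0.1)
        if play_match(challenger, champion):
            # The opponent for the next round is now the improved version.
            champion = challenger
            history.append(champion)
    return history


if __name__ == "__main__":
    champions = self_play()
    print(f"{len(champions) - 1} improvements, final skill {champions[-1]:.2f}")
```

Because the opponent is always the latest champion, the difficulty rises in step with the agent's own skill, which is the property the OpenAI post highlights.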

[ OpenAI ]

World MoveIt! Day is October 18th! That’s next Wednesday!

There are eight events taking place around the world! You should check them out! Exclamation points!!!

Thanks Dave!

Dexterous multi-fingered hands are extremely versatile and provide a generic way to perform multiple tasks in human-centric environments. However, effectively controlling them remains challenging due to their high dimensionality and large number of potential contacts. Deep reinforcement learning (DRL) provides a model-agnostic approach to control complex dynamical systems, but has not been shown to scale to high-dimensional dexterous manipulation. Furthermore, deployment of DRL on physical systems remains challenging due to sample inefficiency. Thus, the success of DRL in robotics has thus far been limited to simpler manipulators and tasks. In this work, we show that model-free DRL with natural policy gradients can effectively scale up to complex manipulation tasks with a high-dimensional 24-DoF hand, and solve them from scratch in simulated experiments. Furthermore, with the use of a small number of human demonstrations, the sample complexity can be significantly reduced, and enable learning within the equivalent of a few hours of robot experience. We demonstrate successful policies for multiple complex tasks: object relocation, in-hand manipulation, tool use, and door opening.
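For readers who haven't met the term, a natural policy gradient step is usually written in the textbook form below. This is the generic update, not necessarily the exact variant used in the paper, and the symbols (policy parameters θ, step size α, Fisher information matrix F, expected return J) are notation introduced here for illustration:

$$
\theta_{k+1} = \theta_k + \alpha\, F(\theta_k)^{-1}\, \nabla_\theta J(\theta_k),
\qquad
F(\theta) = \mathbb{E}_{s,a \sim \pi_\theta}\!\left[\nabla_\theta \log \pi_\theta(a \mid s)\, \nabla_\theta \log \pi_\theta(a \mid s)^{\top}\right]
$$

Rescaling the ordinary gradient by the inverse Fisher matrix makes the step depend on how much the policy's action distribution changes rather than on how the parameters happen to be scaled.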

[ Vikash Kumar ]

Thanks Vikash!

This video shows our team performance at the 2nd edition of the international IROS 2017 Autonomous Drone Racing Competition, which took place during the IROS 2017 conference in Vancouver on September 27, 2017. The competition consisted of navigating autonomously through a number of gates. The winner was the one who passed the highest number of gates. We ranked 2nd with an overall time of 35 seconds. The winner (the INAOE institute from Mexico) passed 9 gates with an overall time of 3.1 minutes. Our approach was fully vision based. All computation, sensing and control ran onboard a Qualcomm Snapdragon Flight board and an Intel Up board.

[ UZH ]

Intel is using drones with infrared thermal cameras to monitor—and help save—polar bears.

One ship. One team. One mission: Help save the polar bears, before it’s too late. Our planet’s climate is changing fast, and animals like the polar bear are struggling to adapt. That’s why we set off on an expedition to explore the use of the Intel Falcon 8+ Drone for environmental research.

[ Intel ]

A summary of the main technical contributions of Team MIT-Princeton in the 2017 Amazon Robotics Challenge, submitted to ICRA 2018.

[ arXiv ]

For a look at the state of the art in humanoid robot soccer, here are highlights from NimbRo, which won both AdultSize and TeenSize at RoboCup 2017:

[ NimbRo ]

A demonstration of the robotics project by Richard Ruiqi Yang, in which he trained a deep neural network running on a KUKA robot to greet the members of the Evolving AI Lab.

[ Evolving AI Lab ]

With a customized Iver 3 underwater drone, Professor of Naval Architecture and Marine Engineering Matthew Johnson-Roberson and his team in the Deep Robotic Optical Perception (DROP) Lab have a new set of underwater eyes that could provide regulatory agencies, scientists and the public with a window into the health of the world's lakes and oceans.

[ DROP Lab ]

Ross Mead, who got his Ph.D. in robotics at USC and is now the CEO of Semio, talked with CineFix about movie robot accuracy.

Rather than build a new full-scale X-Plane every time it has a wacky idea, NASA instead builds little robotic ones, which is only slightly disappointing.

[ NASA Armstrong ]

Toyota Research Institute (TRI) is demonstrating its progress in the development of automated driving technology, robotics and other project work. “In the last few months, we have rapidly accelerated our pace in advancing Toyota’s automated driving capabilities with a vision of saving lives, expanding access to mobility, and making driving more fun and convenient,” said Dr. Gill Pratt, CEO of TRI. “Our research teams have also been evolving machine intelligence that can support further development of robots for in-home support of people.”

[ TRI ]

Android Employed is a comedy web series about what will definitely happen as robots enter the workforce. There are four of these on YouTube; here are two:

Caramel the hamster was probably not harmed during filming.

[ Android Employed ]

Here’s another video from ROSCon 2017: “The ROS 2 vision for advancing the future of robotics development.”

Using a concrete use case, this talk will describe the vision of how ROS 2 users will design and implement their autonomous systems from prototype to production. It will highlight features, either available already in ROS 2 or envisioned to become available in the future, and how they can be applied toward building more capable, flexible, and robust robotic systems. While the presentation starts with a simple application, it later utilizes more advanced features like introspection and orchestration capabilities to empower the system for more complex scenarios and harden it to the point of a production-ready system.

Remember, all of the ROSCon 2017 talks are available at the link.

[ ROSCon 2017 ]

This week’s CMU RI Seminar: James McCann on “On-Demand Machine Knitting for Everyone.”

Knitting machines are general-purpose fabrication devices that can robustly create intricate 3D surfaces from yarn by cleverly actuating thousands of mechanical needles. Knitting machines are an established feature of the textiles production landscape, in use today to make everything from socks to sweaters. However, the current design tools for machine knitting are sorely lacking — often both requiring extensive training and the use of a particular brand of machine. In this talk, I will describe our vision for the future of on-demand machine knitting for everyone, enabled by a simple file format and some fancy new software tools — both of which are being actively developed in the Carnegie Mellon Textiles Lab.

[ CMU RI ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the director of digital innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.