Video Friday is your weekly selection of awesome robotics videos, collected by your Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here’s what we have so far (send us your events!):

ELROB 2018 – September 24-28, 2018 – Mons, Belgium

ARSO 2018 – September 27-29, 2018 – Genoa, Italy

ROSCon 2018 – September 29-30, 2018 – Madrid, Spain

IROS 2018 – October 1-5, 2018 – Madrid, Spain

Japan Robot Week – October 17-19, 2018 – Tokyo, Japan

ICSR 2018 – November 28-30, 2018 – Qingdao, China

Let us know if you have suggestions for next week, and enjoy today’s videos.

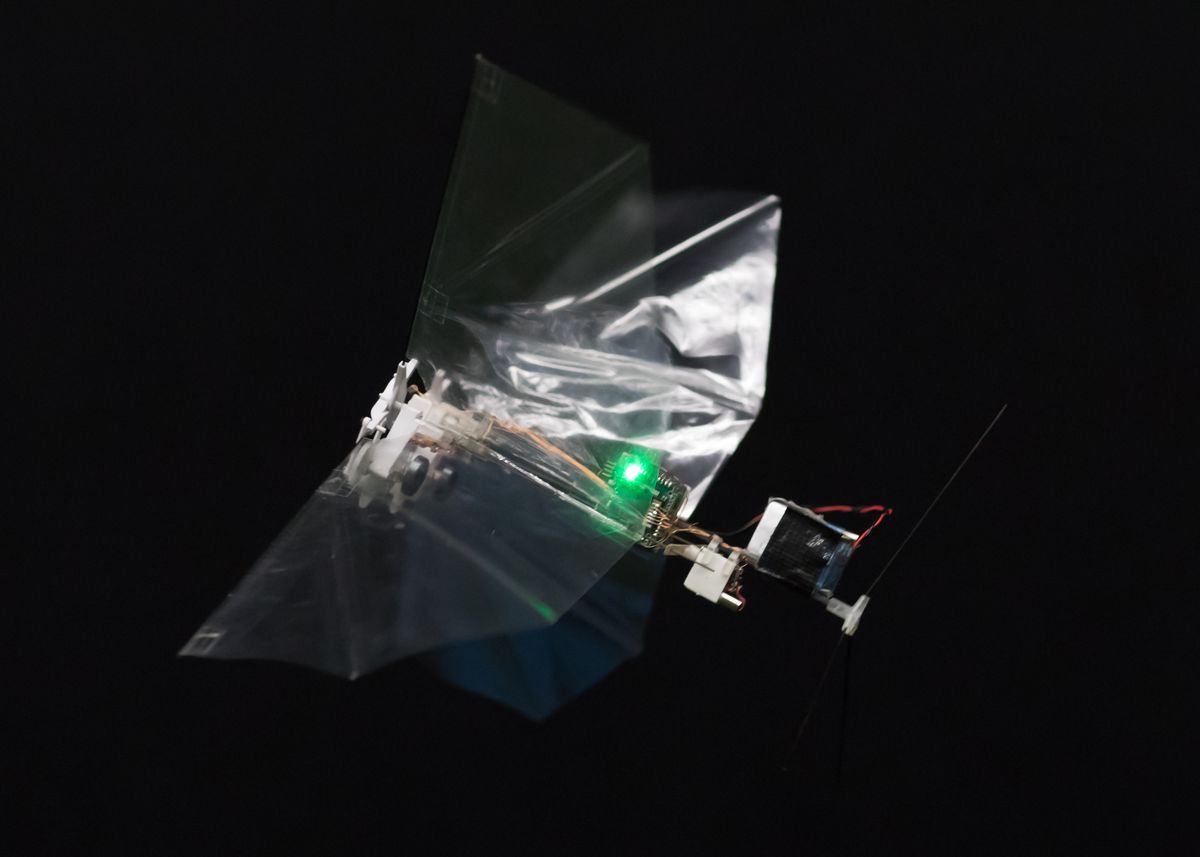

The TU Delft MAVLab has been iterating on their DelFly flapping-wing robots for years, and this is the most impressive one yet:

Insects are among the most agile natural flyers. Hypotheses on their flight control cannot always be validated by experiments with animals or tethered robots. To this end, we developed a programmable and agile autonomous free-flying robot controlled through bio-inspired motion changes of its flapping wings. Despite being 55 times the size of a fruit fly, the robot can accurately mimic the rapid escape maneuvers of flies, including a correcting yaw rotation toward the escape heading. Because the robot’s yaw control was turned off, we showed that these yaw rotations result from passive, translation-induced aerodynamic coupling between the yaw torque and the roll and pitch torques produced throughout the maneuver. The robot enables new methods for studying animal flight, and its flight characteristics allow for real-world flight missions.

In a new paper, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) say that they’ve made a key development in robotic manipulation: a system that lets robots inspect random objects and understand them visually well enough to accomplish specific tasks without ever having seen them before.

The system, called Dense Object Nets (DON), looks at objects as collections of points that serve as a sort of visual roadmap. This approach lets robots better understand and manipulate items and, most importantly, even allows them to pick up a specific object from a clutter of similar objects, a valuable skill for the kinds of machines that companies like Amazon and Walmart use in their warehouses.

The team trained the system to look at objects as a series of points that make up a larger coordinate system. It can then map different points together to visualize an object’s 3-D shape, similar to how panoramic photos are stitched together from multiple photos. After training, if a person specifies a point on an object, the robot can take a photo of that object, identify and match points, and then pick up the object at that specified point. This is different from systems like UC Berkeley’s Dex-Net, which can grasp many different items but can’t satisfy a specific request. Imagine an 18-month-old child, who doesn’t understand which toy you want it to play with but can still grab lots of items, versus a four-year-old who can respond to “go grab your truck by the red end of it.”
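The point-matching step can be sketched roughly like this. It is a minimal illustration, not DON’s code: it assumes each image has already been turned into a per-pixel descriptor (in DON a trained network produces these; the toy values and the `find_correspondence` name here are hypothetical), and it finds the pixel in a new image whose descriptor is closest, by Euclidean distance, to the descriptor at the point a person picked.

```python
import math
from typing import List, Tuple

# A descriptor image: rows x cols, each pixel holding a small feature vector.
# Real descriptors would come from a trained network; these are toy values.
DescriptorImage = List[List[List[float]]]


def find_correspondence(ref: DescriptorImage, ref_pixel: Tuple[int, int],
                        target: DescriptorImage) -> Tuple[int, int]:
    """Return the (row, col) in `target` whose descriptor is nearest
    (Euclidean distance) to the descriptor at `ref_pixel` in `ref`."""
    r, c = ref_pixel
    query = ref[r][c]
    best_pixel, best_dist = (0, 0), math.inf
    for i, row in enumerate(target):
        for j, desc in enumerate(row):
            d = math.dist(query, desc)
            if d < best_dist:
                best_pixel, best_dist = (i, j), d
    return best_pixel


# Hypothetical 2x2 descriptor images of the same object seen in two poses.
ref = [[[0.0, 0.0], [1.0, 0.0]],
       [[0.0, 1.0], [1.0, 1.0]]]
target = [[[1.0, 1.0], [0.0, 1.0]],
          [[1.0, 0.1], [0.0, 0.0]]]

print(find_correspondence(ref, (0, 1), target))  # (1, 0)
```

The brute-force scan keeps the idea visible; a real system would vectorize it or use a nearest-neighbor index, and would hand the matched pixel to a grasp planner.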

In the future, the team hopes to improve the system to a point where it can perform specific tasks with a deeper understanding of the corresponding objects, like learning how to grasp an object and move it with the ultimate goal of, say, cleaning a desk.

[ MIT ]

We demonstrated the high-speed, deformation-free catching of a marshmallow. The marshmallow is a very soft object that is difficult to grasp without deforming its surface. For the catching, we developed a 1-ms sensor fusion system combining a high-speed active vision sensor with a high-speed, high-precision proximity sensor.

Now they need a high speed robot hand that can stuff those marshmallows into my mouth. NOM!

We haven’t heard from I-Wei Huang, aka Crab Fu, in a bit. Or, more than a bit. He was one of the steampunk makers we featured in this article from 10 years ago! It’s good to see one of his unique steam-powered robot characters in action again.

Toot toot!

[ Crab Fu ]

You don’t need to watch the entire five minutes of this—just the bit where a 4-meter-tall teleoperated robot chainsaws a log in half.

Also the bit at the end is cute.

[ Mynavi ]

A teaser for what looks to be one of the longest robotic arms we’ve ever seen.

This is a little dizzying, but it’s what happens when you put a 360-degree camera on a racing drone.

[ Team BlackSheep ]

Uneven Bars Gymnastics Robot 3-2 certainly charges quickly, but it needs a little work on that dismount.

[ Hinamitetu ]

This video shows the latest results achieved by the Dynamic Interaction Control Lab at the Italian Institute of Technology on teleoperated walking and manipulation for humanoid robots. We have integrated the iCub walking algorithms with a new teleoperation system, thus allowing a human being to teleoperate the robot during locomotion and manipulation tasks.

Also, don’t forget this:

[ Paper ]

AEROARMS is one of the most incredible and ambitious projects in Europe. In this video we explain what AEROARMS is. It is part of the European Commission’s H2020 science program. It was funded in 2015 and will finish this year (2018), in September.

Yup, all drones should come with a pair of cute little arms.

[ Aeroarms ]

There’s an IROS workshop on “Humanoid Robot Falling,” so OF COURSE they had to make a promo video:

I still remember how awesome it was when CHIMP managed to get itself up again during the DRC.

[ Workshop ]

New from Sphero: Just as round, except now with a display!

It’s $150.

[ Sphero ]

This video shows a human-size bipedal robot, dubbed Mercury, which has passive ankles and therefore relies solely on hip and knee actuation for balance. Unlike a human, Mercury cannot balance by pushing with its ankles, so it must stay balanced by stepping continuously. This capability is very difficult to accomplish, but it enables the robot to respond rapidly to disturbances like those produced when walking around humans.
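To get a feel for why continuous stepping works as a balance strategy, one textbook tool is the capture point of a linear inverted pendulum: the spot where a foot should land so the center of mass comes to rest over it. The sketch below is a one-dimensional illustration of that general idea under the usual constant-height assumption; it is not Mercury’s actual controller, and the function name is hypothetical.

```python
import math

GRAVITY = 9.81  # m/s^2


def capture_point(com_pos_m: float, com_vel_mps: float,
                  com_height_m: float) -> float:
    """Instantaneous capture point of a linear inverted pendulum (1-D,
    constant center-of-mass height): x + v / omega, with omega = sqrt(g / z)."""
    omega = math.sqrt(GRAVITY / com_height_m)  # natural frequency, 1/s
    return com_pos_m + com_vel_mps / omega


# A push gives the center of mass 0.5 m/s of forward velocity at 1.0 m height.
step_target = capture_point(0.0, 0.5, 1.0)
print(f"{step_target:.3f} m")  # 0.160 m ahead of the current position
```

A robot without ankle torque has no way to shift its center of pressure under a planted foot, so placing the next foot near a point like this is its main lever for recovering from a push.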

[ UT Austin ]

Mexico was the partner country for Hannover Fair 2018, and KUKA has a strong team in Mexico, so it was a perfect excuse to partner with QUARSO on a novel use of robots to act as windows on the virtual world in the Mexico Pavilion.

A little Bot & Dolly-ish, yeah?

[ Kuka ]

For the first time ever, take a video tour of the award-winning Robotics & Mechanisms Laboratory (RoMeLa), led by its director Dr. Dennis Hong. Meet a variety of robots that play soccer, climb walls and even perform the 8-Clap.

[ RoMeLa ]

This week’s CMU RI Seminar is from CMU’s Matthew O’Toole on “Imaging the World One Photon at a Time.”

The heart of a camera and one of the pillars for computer vision is the digital photodetector, a device that forms images by collecting billions of photons traveling through the physical world and into the lens of a camera. While the photodetectors used by cellphones or professional DSLR cameras are designed to aggregate as many photons as possible, I will talk about a different type of sensor, known as a SPAD, designed to detect and timestamp individual photon events. By developing computational algorithms and hardware systems around these sensors, we can perform new imaging feats, including the ability to (1) image the propagation of light through a scene at trillions of frames per second, (2) form dense 3D measurements from extremely low photon counts, and (3) reconstruct the shape and reflectance of objects hidden from view.
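One standard way single-photon timestamps become a depth measurement is time-of-flight histogramming: record each photon’s arrival time after a laser pulse, find the most populated time bin, and halve the round-trip distance. The sketch below shows that basic idea only, assuming a pulsed laser and a fixed bin width; the function name and sample timestamps are hypothetical, and it is not the speaker’s algorithm for low-photon or non-line-of-sight imaging.

```python
from collections import Counter
from typing import Iterable

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def depth_from_timestamps(timestamps_s: Iterable[float],
                          bin_width_s: float) -> float:
    """Histogram photon arrival times (seconds after each laser pulse),
    take the most populated bin, and convert its center round-trip time
    to a one-way distance in meters."""
    counts = Counter(int(t // bin_width_s) for t in timestamps_s)
    if not counts:
        raise ValueError("no photons detected")
    peak_bin, _ = counts.most_common(1)[0]
    round_trip_s = (peak_bin + 0.5) * bin_width_s
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# Three photons returning near 10 ns, plus two stray ambient photons.
arrivals = [10.02e-9, 10.05e-9, 10.08e-9, 3.3e-9, 17.1e-9]
d = depth_from_timestamps(arrivals, bin_width_s=100e-12)
print(f"{d:.3f} m")  # 1.506 m
```

Taking the histogram peak is what makes the estimate robust to stray ambient photons, since they scatter across many bins while the true return piles up in one.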

[ CMU RI ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the director of digital innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.