Video Friday is your weekly selection of awesome robotics videos, collected by your Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next two months; here’s what we have so far (send us your events!):

RiTA 2016 – December 11-14, 2016 – Beijing, China

SIMPAR 2016 – December 13-16, 2016 – San Francisco, Calif., USA

WAFR 2016 – December 18-20, 2016 – San Francisco, Calif., USA

Let us know if you have suggestions for next week, and enjoy today’s videos.

Cybathlon was such a fantastically fantastic event:

Kudos to ETH Zurich and all the teams and athletes!

[ Cybathlon ]

YES PLEASE. In the current issue of IEEE Transactions on Robotics, from Andreas Doumanoglou at Imperial College London:

This work presents a complete pipeline for folding a pile of clothes using a dual-armed robot. This is a challenging task both from the viewpoint of machine vision and robotic manipulation. The presented pipeline is comprised of the following parts: isolating and picking up a single garment from a pile of crumpled garments, recognizing its category, unfolding the garment using a series of manipulations performed in the air, placing the garment roughly flat on a work table, spreading it, and, finally, folding it in several steps. The pile is segmented into separate garments using color and texture information, and the ideal grasping point is selected based on the features computed from a depth map. The recognition and unfolding of the hanging garment are performed in an active manner, utilizing the framework of active random forests to detect grasp points, while optimizing the robot actions. The spreading procedure is based on the detection of deformations of the garment’s contour. The perception for folding employs fitting of polygonal models to the contour of the observed garment, both spread and already partially folded. We have conducted several experiments on the full pipeline producing very promising results. To our knowledge, this is the first work addressing the complete unfolding and folding pipeline on a variety of garments, including T-shirts, towels, and shorts.

[ IEEE TRO ]

The THeMIS ADDER is an unmanned ground vehicle with a rather intimidating machine gun on it:

What you’re not seeing here is the person on the other end of this, operating the robot remotely. This thing isn’t autonomous; there’s a human very much in the loop.

[ THeMIS ADDER ]

New from Dash Robotics: a ladybug! And it lights up!

“Nearly indestructible” you say? Challenge accepted.

[ Kamigami Robots ]

A new system from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) is the first to allow users to design, simulate, and build their own custom drone. Users can change the size, shape, and structure of their drone based on the specific needs they have for payload, cost, flight time, battery usage, and other factors.

[ MIT ]

This is a clever and relatively simple idea for drawing pictures and patterns on grass:

I feel like there’s a market for this sort of thing in stadiums. Maybe a reason to go actually watch a sportsball event in person?

[ Yuta Sugiura ]

Have you gotten me a present lately? No? How about one of these:

Those are the kinds of noises that all robots should make all the time when doing anything.

[ Evil Mad Scientist ] via [ Gizmodo ]

From Fraunhofer IPA (translated from German):

The Servus-RGS-Adapt exoskeleton allows people with disabilities to walk independently, without a walking stick or walker. Thanks to adaptive foot units, the bionics and propulsion technology from the Fraunhofer IPA group make it possible to handle not only level ground but also slopes of up to 7°, which increases the wearer’s autonomy and mobility.

[ Fraunhofer ]

We should get a closer look at this UNIBOT thing from ECOVACS at CES in January, but here’s a German teaser that doesn’t so much tease as give it all away:

Basically, it’s a vacuum that can also carry a camera and a humidifier. Not the worst idea.

[ Ecovacs ]

NaviPack looks to be a clone of the RP-LIDAR (which is a clone of the Neato lidar), but it’s only $200 right now on Indiegogo:

The big differentiator here is that NaviPack integrates the sensor and the SLAM algorithm into a single unit, which you may or may not care about.

[ NaviPack ]

PAL Robotics opened up their headquarters for European Robotics Week. Looking pretty good:

Those snacks looked pretty good too.

[ PAL Robotics ]

We wrote about these spider-inspired jumping robots last year, but it’s always fun to watch robots getting tossed off of a ramp:

[ UCT ]

Audi is using an adorable little autonomous car to help train its adorable big autonomous cars:

[ Audi ] via [ The Verge ]

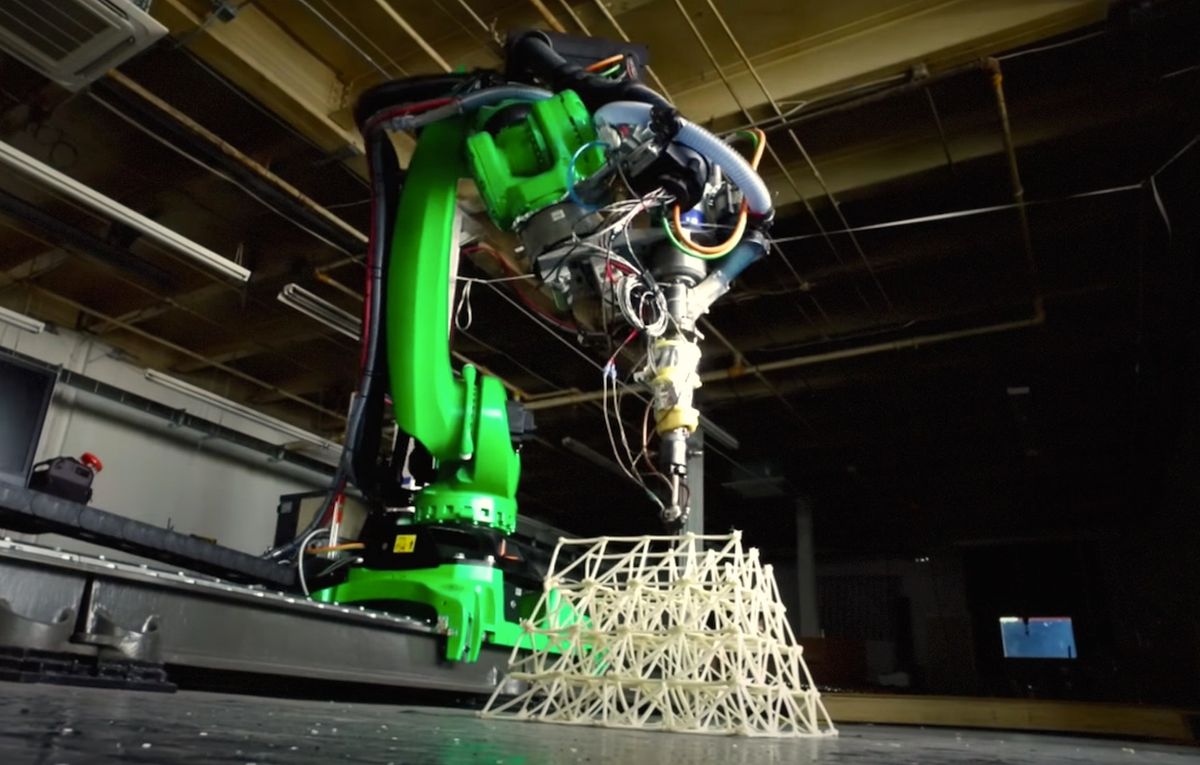

From Kuka:

3D printing has the potential to revolutionize the way humans manufacture and build things, from the small scale to the very large. By combining robots with 3D printing, KUKA partner Branch Technology, working with the architects at Gould Turner Group, is fundamentally shifting the way architects and designers approach not only the building of a final structure, but also the way that structure is designed from beginning to end.

And yes, you can use the same technology to fabricate lifelike androids.

[ Kuka ]

Here’s an interesting idea: using a drone as a movable object for generating haptic feedback in virtual reality:

Encountered-type haptic displays recreate realistic haptic sensations by producing physical surfaces on demand for a user to explore directly with his or her bare hands. However, conventional encountered-type devices are fixed in the environment, so their working volume is limited. To address this limitation, we investigate the potential of an unmanned aerial vehicle (drone) as a flying motion base for a non-grounded encountered-type haptic device. As a lightweight end-effector, we use a piece of paper hung from the drone to represent the reaction force. Though the paper is limp, its shape is held stable by the strong airflow induced by the drone itself. We conduct two experiments to evaluate the prototype system. The first experiment evaluates the reaction force presentation by measuring the contact pressure between the user and the end-effector. The second experiment evaluates the usefulness of the system through a user study in which participants were asked to draw a straight line on a virtual wall represented by the device.

[ A-CH Lab ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the director of digital innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.