It was billed as an epochal event in humanity’s history: For the first time a computer had proved itself to be as smart as a person. And befitting the occasion, the June story generated headlines all around the world. In reality, it was all a cheesy publicity stunt orchestrated by an artificial-intelligence buff in England. But there was an upside. Many of the world’s best-known AI programmers were so annoyed by the massive coverage, which they deemed entirely misguided, that they banded together. They intend to make sure the world is never fooled by false AI achievement again. The result is a daylong workshop, “Beyond the Turing Test,” where attendees aim to work out an alternative to the current test. The workshop will be held this coming Sunday in Austin at the annual convention of the Association for the Advancement of Artificial Intelligence.

Whether a particular computer program is “intelligent,” as opposed to simply being “useful,” is arguably an unanswerable question. But computer scientists have nonetheless been asking it ever since 1950, when Alan Turing wrote “Computing Machinery and Intelligence” and proposed his now-famous test. The test is like a chat session, except the human doesn’t know if it’s a computer or a fellow person on the other end. A computer that can fool the human can be adjudged to be intelligent or, as Turing put it, “thinking.”

In the early days of AI, the test was considered by scientists to be too far beyond the current capabilities of computers to be worth worrying about. But then came “chatbot” programs. Without using anything that could be described as intelligence, they use key words and a few canned phrases well enough to persuade the unaware that they’re having a real conversation with a flesh-and-blood human. This genre of programs has been fooling some folks for decades, including this past summer, when Eugene Goostman, a chatbot program pretending to be a 13-year-old Ukrainian boy, persuaded a handful of people in England that it was a real boy. (The program was undoubtedly aided by people’s assumption that they were speaking to a disaffected teen with limited English language skills.)

The Goostman win was trumpeted widely in the media, to the enormous chagrin of legitimate researchers. Most of them just groused privately, but one, Gary F. Marcus, a New York University research psychologist, used his forum as a contributor to The New Yorker to raise the issue of whether Turing’s test had become too easy to game, and to urge the AI community to come up with a replacement. To his surprise, researchers from all over the world wrote in offering to help. “We’d clearly touched a nerve,” says Marcus.

The upshot: Marcus is cochairing the 25 January event, along with Francesca Rossi, of the University of Padova, in Italy, and Manuela Veloso of Carnegie-Mellon, in Pennsylvania.

One of the criticisms of the Turing test is that it emphasizes language at the expense of other skills, such as vision or movement. Marcus says he expects the workshop to come up with a series of tests in a variety of different areas—what he describes as a kind of “Turing triathlon.” The day will be divided into two parts; first, an A-list of AI researchers will present their ideas for Turing test alternatives. Then, in the afternoon, the group will break up into committees to begin the task of sketching out specific test ideas and developing timetables for conducting those tests.

Marcus says he doesn’t expect the final work of the committees to be finished until several weeks after the conference. The tests will presumably be conducted on an annual or semiannual basis; what will constitute passing one remains to be decided.

As it works toward a triathlon-like test suite, the workshop will feature discussions of many forms of computer intelligence not involving language. Veloso, for example, is a past president of the RoboCup soccer competition, which seeks by 2050 to field a team of robots that can beat that year’s FIFA World Cup champions. Veloso says she will be emphasizing the importance of a computer being able to understand and interact with the real world. A possible Turing test alternative, she says, is a machine that could monitor video feeds of a shopping mall and understand when something had occurred that warranted reporting an emergency.

Peter Clark, a senior researcher at the Allen Institute for Artificial Intelligence, will be describing the work his group has done toward giving computers the ability to read middle-school science textbooks and then answer standard test questions about them. Another speaker, MIT’s Patrick Henry Winston, will discuss his research on getting computers to read and understand stories.

One of the front-runners for the short list of Turing test alternatives is the Winograd schema challenge. This type of test is named for Terry Winograd, the now semiretired Stanford professor who as a young man developed a widely heralded AI program before becoming skeptical that the AI research being conducted in the 1960s and 1970s would ever lead anywhere. (Later, he was the faculty advisor for two Stanford graduate students with a different idea about computer intelligence: Google cofounders Sergey Brin and Larry Page.)

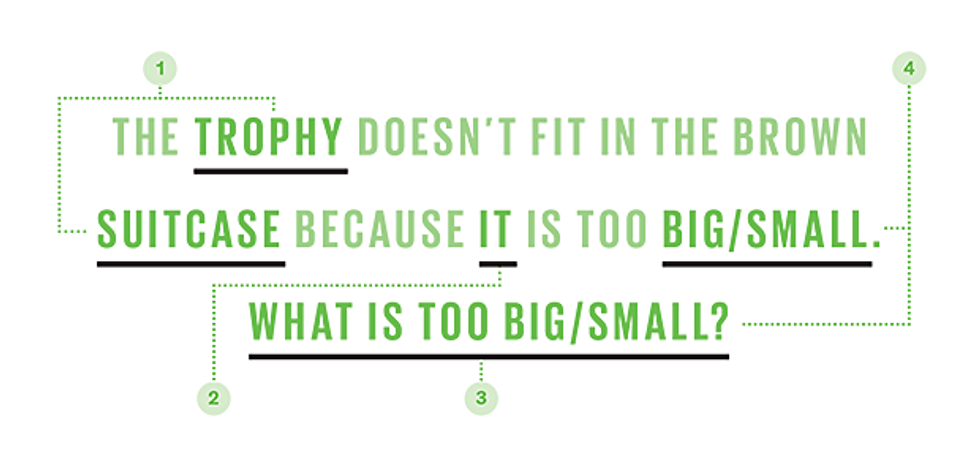

A Winograd schema requires that a computer use commonsense and real-world knowledge to understand an intentionally ambiguous sentence, such as figuring out what “it” refers to in “The trophy doesn’t fit in the brown suitcase because it is too big” [see illustration]. Hector Levesque, of the University of Toronto, has developed collections of Winograd schemas that he believes would make a useful surrogate in demonstrating human-level reasoning.

Not everyone speaking at the workshop believes that the Turing test needs a wholesale revision. Harvard’s Stuart Shieber, for example, says that many of the problems associated with the test aren’t the fault of Turing but instead the result of the rules for the Loebner Prize, under the auspices of which most Turing-style competitions have been conducted, including last summer’s. Shieber says that Loebner competitions are tailor-made for chatbot victories because of the way they limit the conversation to a particular topic with a tight time limit and encourage nonspecialists to act as judges. He says that a full Turing test, with no time or subject limits, could do the job that Turing predicted it would, especially if the human administering the test was familiar with the standard suite of parlor tricks that programmers use to fool people.

That AI specialists are getting serious about testing digital intelligence might lead you to think that entrepreneur Elon Musk was correct when he warned that society is on the brink of a potentially dangerous encounter with superintelligent silicon. To the people actually designing such machines, the idea of imminent super AI is absurd, because today’s systems routinely fail at the most trivial tasks. Clark, for example, says that in natural-language tests, computers can’t predictably handle issues of “coreference resolution”—for example, understanding what “it” refers to in sentences similar to “He saw the doughnut on the table and ate it.”

Indeed, almost no one involved in the workshop thinks that computers will be able to pass any of the proposed tests anytime soon. Which is sort of the point, says Marcus. While there are already dozens of competitions for solving specific computer problems in areas such as vision and speech, most of them, he says, are deliberately designed to be not too far beyond today’s capabilities, lest researchers simply not bother to try to solve them. But the field of AI has made sufficient advances in recent years to warrant that it now start turning its attention to what Marcus calls “high-hanging fruit.”

An important part of the testing process, says Marcus, will be to continually revisit the rules for each test, to make sure that the programs being entered are abiding by the spirit rather than the letter of the competition. That’s essentially the problem that developed over the years with the Loebner Prize version of the Turing test.

It turns out that “gaming” a test is possible in more than just chatbot competitions. For example, in early runs of the RoboCup, which involved teams of small robots playing soccer against each other, someone realized that if a robot could just sit on the ball, the result would be a tie. Even Winograd schemas can be partially gamed. Sameer Pradhan, a Harvard researcher who helps organize the annual Computational Natural Language Learning competitions in the field of natural-language processing, says it’s entirely possible for researchers to come up with algorithms, that would be able to solve some Winograd schemas, but without possessing the generalized knowledge that is the holy grail of AI work.

For Marcus, that wouldn’t be nearly good enough. “If, in 10 years, computers can pass all our tests by using tricks and shortcuts, and not with anything resembling genuine intelligence, we won’t be happy at all. We’re going after the real thing.”

A correction to this article was made on 26 January 2015.

About the Author

Lee Gomes, a former Wall Street Journal reporter, has been covering Silicon Valley for more than two decades. He interviewed the machine learning expert Michael I. Jordan for IEEE Spectrum in October 2014.