Self-Walking Exoskeletons

Brokoslaw Laschowski's team created an AI-based prosthetic that infers the wearer's destination

Steven Cherry Hi, this is Steven Cherry for Radio Spectrum.

The Existentialist philosopher Jean-Paul Sartre has an example that he uses to describe what it is to decide to do something … what the process of decision-making is. Or, more precisely, what the process isn’t.

He says, imagine you’re driving your car and waiting at a stop light. When the light turns green, you don’t first decide to take your foot off the brake pedal and put it on the gas pedal. You see the light change, and your foot moves from the one pedal to the other, and that is the decision.

Unfortunately, in the world of prosthetics, specifically exoskeletons, that’s not how it works. We’re still at the stage where a person has to instruct the prosthetic to first do one thing, then another, then another. As University of Waterloo Ph.D. researcher Brokoslaw Laschowski put it recently, “Every time you want to perform a new locomotor activity, you have to stop, take out your smartphone and select the desired mode.”

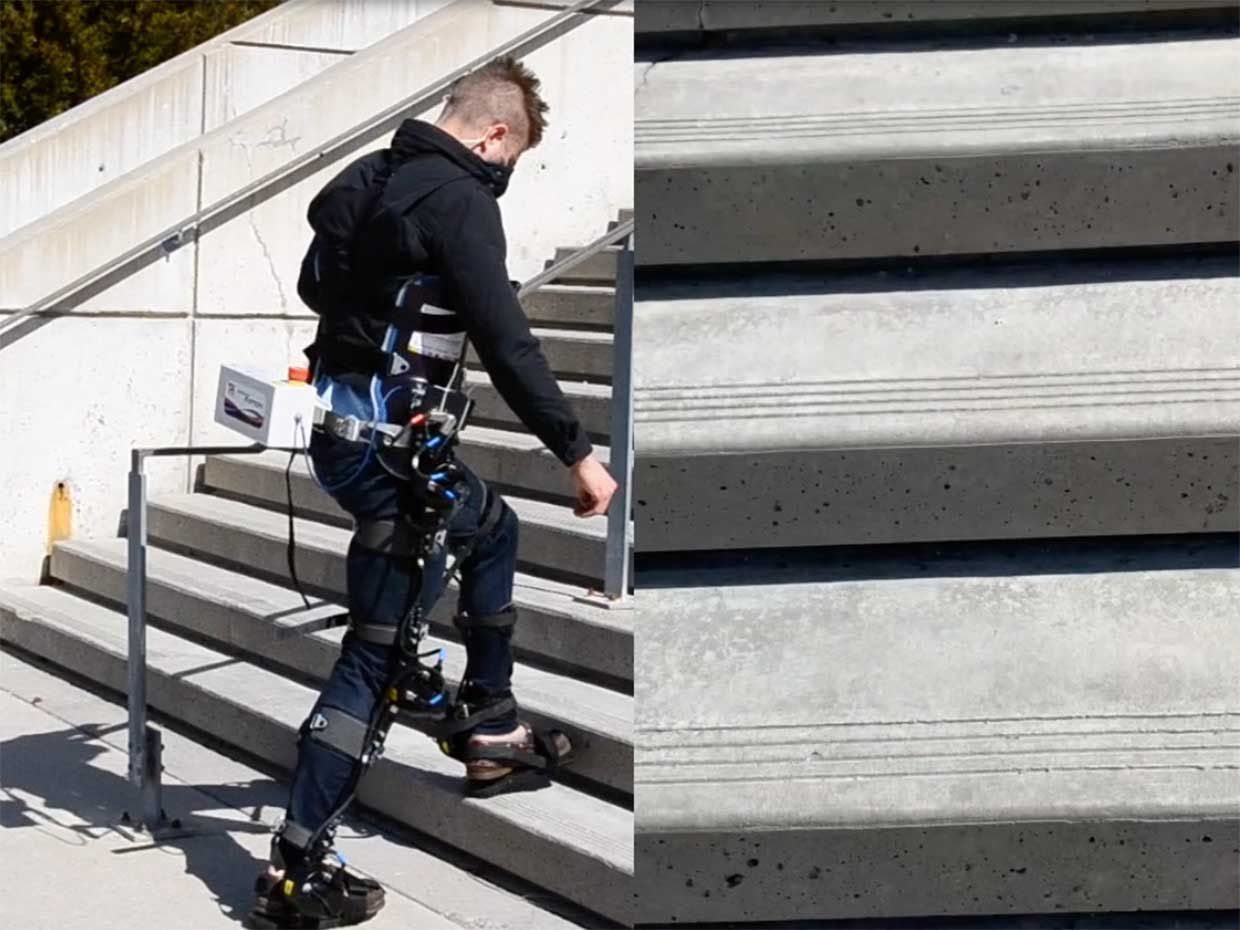

Until perhaps now, or at least soon. Laschowski and his fellow researchers have been developing a device, using a system called ExoNet, that uses wearable cameras and deep learning to figure out the task that the exoskeleton-wearing person is engaged in, perhaps walking down a flight of stairs, or along a street, avoiding pedestrians and parking meters, and to make the decisions about where a foot should fall, which new direction the person should face, and so on.

Brokoslaw Laschowski is the lead author of a new paper, “Computer Vision and Deep Learning for Environment-Adaptive Control of Robotic Lower-Limb Exoskeletons,” which is freely downloadable from BioRxiv, and he’s my guest today.

Brock, welcome to the podcast.

Brokoslaw Laschowski Thank you for having me, Steven. Appreciate it.

Steven Cherry You’re very welcome. A press release compares your system to autonomous cars, but I would like to think it’s more like the Jean-Paul Sartre example, where instead of making micro-decisions, the only decisions a person wearing a robotic exoskeleton has to make are at the level of perception and intention. How do you think of it?

Brokoslaw Laschowski Yeah, I think that’s a fair comparison. Right now, we rely on the user for communicating their intent to these robotic devices. It is my contention that there is a certain level of cognitive demand and inconvenience associated with that. So by developing autonomous systems that can sense and decide for themselves, hopefully we can lessen that cognitive burden where it’s essentially controlling itself. So in some ways, it’s similar to the idea of an autonomous vehicle, but not quite.

Steven Cherry Let’s start with the machine learning. What was the data set and how did you train it?

Brokoslaw Laschowski I generated the data set myself. This involved using a wearable camera system and collecting millions and millions of images of different walking environments at various places throughout Ontario. And those images were then labeled—this is important for what’s known as supervised learning. So this is when you give the machine an image and that machine has access to the labels: what is the image showing? And then machine learning is ... Essentially you can think of it almost like tuning knobs, fine-tuning parameters in the machine, so that the output, the prediction for that image, matches the label you gave the image.

So in some ways, it’s kind of similar to an optimization problem and it’s referred to as machine learning because the machine itself learns the optimal design based on a certain optimization algorithm that allows it to immediately update the tunable weights within, say, a neural network.
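The "tuning knobs" description of supervised learning can be sketched in a few lines. This is a minimal illustration only, not the ExoNet training code: it assumes a single made-up feature per image, two classes, and plain gradient descent on a logistic model, where a real system would train a deep neural network on raw images. The data, function names, and learning rate are all hypothetical.

```python
import math

# Toy labeled data (hypothetical): one feature per "image" and a label,
# where 1 = stairs and 0 = level ground.
features = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 1, 1]

def predict(weight, bias, x):
    """Logistic model: estimated probability that the image shows stairs."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def loss(weight, bias):
    """Mean cross-entropy between the predictions and the labels."""
    total = 0.0
    for x, y in zip(features, labels):
        p = predict(weight, bias, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(features)

def train(steps=2000, learning_rate=1.0):
    """Gradient descent: repeatedly nudge the two 'knobs' to reduce the loss."""
    weight, bias = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(features, labels):
            error = predict(weight, bias, x) - y  # prediction minus label
            grad_w += error * x
            grad_b += error
        weight -= learning_rate * grad_w / len(features)
        bias -= learning_rate * grad_b / len(features)
    return weight, bias

weight, bias = train()
predictions = [round(predict(weight, bias, x)) for x in features]
```

After training, `predictions` should match `labels` and `loss(weight, bias)` should be lower than the starting loss at `(0.0, 0.0)`, which is the sense in which the machine "learns" the weights from labeled examples.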

Steven Cherry So basically, you were training it to understand different walking environments.

Brokoslaw Laschowski Correct. The idea is that if we have access to that perception information, we can then use it to create predictive controllers for a walking exoskeleton or a prosthetic leg, sometimes referred to as a bionic leg. Right now, without that perception, without that environment information, the control of these robotic devices—the onboard controller—has access to only information about the current state of the user and the state of the device. So it has to make new decisions every second and every step, without any knowledge as to what the next instance will be. And so if we have information prior to that, if we have information about the oncoming environment, we can then incorporate that into the controller and we can now make faster decisions as well as more accurate decisions because we’re not starting from scratch every single second it computes a new decision.

Steven Cherry So as I understand it, there’s a mid-level controller and a high-level controller, and the high-level controller understands the different walking environments and converts its understanding into things like where the foot should go and at what angle, that sort of thing.

Brokoslaw Laschowski Exactly. Yeah, so a lot of research has been done on the mid-level control and the low-level control—this is in some ways standard engineering practice. These are using mathematical models and standard engineering control algorithms.

What I find most fascinating is the high-level control, and this is typically done by biomedical engineers like myself. At the high level, this is really the interaction between the human and the device. And at the high level, we’re really trying to infer what the user wants to do. And in lower limb systems, this intent recognition comes in the form of identifying what type of walking activity the user wants to do.

And so right now in these systems, you would have onboard sensors that give you joint angles and joint torques, ground reaction forces, things like these. And the robot essentially makes control decisions based on that information. It decides what does the user want to do or what is the user currently doing and whether or not the user wants to change or continue doing what they’re doing.
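One way to picture the three control levels described in this answer is as three small functions chained together. The sketch below is purely illustrative: the thresholds, target angles, gain, and every name in it are assumptions made for the example, and a real exoskeleton controller would be far more elaborate than a threshold rule feeding a proportional controller.

```python
from dataclasses import dataclass

@dataclass
class SensorReading:
    knee_angle_deg: float   # joint angle from an onboard encoder
    ground_force_n: float   # ground reaction force under the foot

# Hypothetical target knee angles per locomotion mode (illustrative values).
TARGET_KNEE_ANGLE_DEG = {
    "sitting": 90.0,
    "stair_ascent": 80.0,
    "level_walking": 20.0,
}

def high_level(reading):
    """Infer the locomotion mode from onboard sensors (made-up thresholds)."""
    if reading.ground_force_n < 50.0:
        return "sitting"
    if reading.knee_angle_deg > 60.0:
        return "stair_ascent"
    return "level_walking"

def mid_level(mode):
    """Turn the chosen mode into a desired joint state."""
    return TARGET_KNEE_ANGLE_DEG[mode]

def low_level(target_deg, measured_deg, gain=2.0):
    """Proportional control: the torque command scales with the angle error."""
    return gain * (target_deg - measured_deg)

def control_step(reading):
    """Run one pass through the hierarchy and return (mode, torque command)."""
    mode = high_level(reading)
    torque = low_level(mid_level(mode), reading.knee_angle_deg)
    return mode, torque
```

Calling `control_step(SensorReading(knee_angle_deg=70.0, ground_force_n=600.0))` walks through all three levels. In this sketch the high level sees only the current sensor state, which is the limitation that environment information from a camera is meant to address.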

Steven Cherry And how much of that is generic to sort of human anatomy and how much would it have to be fine-tuned for an individual user?

Brokoslaw Laschowski That’s a great question. The sensors that are currently used are embedded within the robotic device itself, and those sensors wouldn’t necessarily have to be changed for a given individual, because if you think in the case of a prosthetic limb, everything is housed on the prosthetic limb. So there’s nothing that necessarily needs to go on the user.

There are things like surface electromyography (EMG). Surface EMG electrodes go on top of the skin and essentially pick up muscle activity. So when you contract your muscles, there’s a certain electrical activity associated with the contraction. These sensors can pick up that contraction so that we know with varying levels of signals, this is kind of what the user wants to do.

And we can train the machine learning algorithm to identify for some given waveform, that this is typically associated with wanting to walk up a flight of stairs or wanting to sit down or something like this. Those types of neural signals, those types of biological signals, would be tuned for an individual user because the placement of them depends on various things. So in a case of an amputation, not all of the residual muscles are there. So the placement of these sensors would vary from patient to patient.

In an exoskeleton case where the user does have a limb, but it’s an impaired limb—this could also be affected by the level of paralysis. So whether you’re dealing with somebody with, say, a stroke or a spinal cord injury, some muscles may have electrical activity or you may have viable signals to be able to draw information as to what the user wants to do. But again, this depends on the individual. But with regards to patient-specific tuning, that’s usually done at the lower levels, actually. And so tuning these devices is exceptionally time-consuming, typically.

So for a given walking gait, to just take one case, one environment, say just level flat ground. If you and I, Steven, were to compare our gaits and compare walking on level ground and we were to record, say, our muscle signals, they would vastly differ between yourself and myself. And the way in which we walk—and this is sometimes referred to as biomechanics—would differ. So when we’re trying to develop low-level controllers and mid-level controllers that are responsible for providing the assistance for a given activity, the level of assistance and the timing of assistance have to be tuned for individual users. And that can be quite time-consuming, actually.
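The surface-EMG idea in this answer, mapping a waveform to an intended activity with per-user tuning, can be sketched with two common time-domain EMG features: mean absolute value and root mean square. Everything else here is an assumption for illustration: the nearest-centroid rule stands in for whatever classifier a real system would use, and the calibration values are invented, not measured.

```python
import math

def emg_features(window):
    """Mean absolute value and root mean square of one window of EMG samples."""
    mav = sum(abs(sample) for sample in window) / len(window)
    rms = math.sqrt(sum(sample * sample for sample in window) / len(window))
    return (mav, rms)

def nearest_activity(features, centroids):
    """Pick the activity whose calibrated feature centroid is closest."""
    return min(centroids, key=lambda name: math.dist(features, centroids[name]))

# Hypothetical per-user calibration: feature centroids recorded for one wearer.
# A different wearer, or a different electrode placement, would need new values.
user_centroids = {
    "rest": (0.1, 0.1),
    "stair_ascent": (1.0, 1.0),
}

strong_contraction = [1.0, -1.0, 1.0, -1.0]
weak_activity = [0.1, -0.1, 0.1, -0.1]

intent_strong = nearest_activity(emg_features(strong_contraction), user_centroids)
intent_weak = nearest_activity(emg_features(weak_activity), user_centroids)
```

Here `intent_strong` resolves to `"stair_ascent"` and `intent_weak` to `"rest"`. Because the centroids are tied to one person's signals, swapping in another user means recalibrating them, which is one reason patient-specific tuning takes time.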

Steven Cherry And this is all in conjunction with a vision system that’s assessing the environment ... A staircase doesn’t change much, but a street has people moving around, dogs on leashes, whatever. Even a staircase can have a hat that fell off someone’s head or a toy that a child left behind. The person wears a chest camera?

Brokoslaw Laschowski This is my current setup. The system itself we refer to as ExoNet, which is a research platform for sensing and classification of different walking environments ... In this prototype design, I have it on the chest. Moving forward, to develop a commercially viable solution, one could make the case that perhaps a leg-mounted camera or some type of sensing system like a laser or some other sensor might be more feasible just because maybe not everyone wants to wear a camera strapped to their chest. But that’s the current prototype design we have right now.

Steven Cherry And so how much of the processing is done locally and how much in the cloud?

Brokoslaw Laschowski So right now, for training our neural networks, everything is currently being done on the cloud, specifically for training. For inference—which is when you’re trying to make predictions on new unseen real-time data—that is done locally. With regard to not necessarily the camera system but the current controllers, it depends on the device. There are so many devices out there in research and development and in labs. Some have them off-board, some have them onboard. A commercial device—like if you were to search some commercially available prosthetics and exoskeletons—these are all done, I believe, on board.

Steven Cherry You mentioned the difference between, say, your walking gait and my walking gait, and that all fits within the purview of your existing data set and would just be fine-tuned for the individual. But there are going to be some pretty big limitations to the data set, right? I mean, even maybe running on a track would need a different data set, maybe even hiking up a mountain.

Brokoslaw Laschowski Potentially. The strength of machine learning is really contingent upon the data on which it’s trained. So if you’re designing a neural network like we did, the accuracy and the robustness of the predictions coming from that neural network are really dependent on the diversity and the scale of the data set and what you train on. This is why we placed so much emphasis on the design of a very large scale, diverse image data set. And that’s the ExoNet database.

This took a little bit over half a year to develop. Actually, if you include the labeling with the images as well, it took approximately a year. So it’s quite time-consuming and this is every day, working on it. But that level of scale and diversity is needed to allow for inference or classification on unseen data. So while I can’t recall if I took the camera up any trails per se, we do have hours upon hours of data of outdoor environments, and hopefully the network is trained sufficiently to be able to extrapolate from the data on which it was trained to previously unseen data, which would be if I were to take the system and walk up a trail that it has never been on before.

And that’s really the power of machine learning, specifically deep learning, in that we wanted it to perform well on unseen data, even data that is perhaps not entirely encapsulated within the data set. Larger data sets are obviously very beneficial when you’re doing supervised learning ... Now, there’s unsupervised learning, in which it doesn’t need the labels, and that’s a whole ’nother animal. But with supervised learning, which is what I focus on, the data and the diversity of these are very important. But hopefully, what we find—and this is kind of what we showed in our paper—is that we’re able to make predictions on unseen data with relatively good accuracy, despite the noise and the complexity of real-world environments.

Steven Cherry Exoskeletons are interesting from the point of view also of enhancing ordinary human capabilities, even something as simple as more efficiently walking on uneven ground, maybe especially with a 40-pound pack uphill, let’s say. Has there been any interest from the military?

Brokoslaw Laschowski So exoskeleton research, if you were to trace the genealogy of exoskeleton research, it really came out of a DARPA program in the United States and this was military funding to be able to develop exoskeletons for these combat applications or search-and-rescue applications. And then from there blossomed a field of medical exoskeletons as well as occupational exoskeletons—so for manufacturing and assembly line work applications. So there are certainly applications for military and combat, as that’s actually what really helped kickstart this field several decades ago.

Steven Cherry And to what extent would your algorithms be convertible to another limb entirely, say an arm exoskeleton or maybe even a back exoskeleton, you know, which would be needed for a person to better pick up a box or even get in and out of a car?

Brokoslaw Laschowski That’s a great question. So if we’re going to think about a back exoskeleton, a back exoskeleton benefits from alleviating some of the physical burden associated with lifting something up in flexion and extension within the sagittal plane. The dataset that we have right now ... We didn’t train it for object recognition. Let me say that it does have objects on the ground. So it does encapsulate the features like the images .... These images have features that showcase many different objects. These objects could certainly be used, are certainly picked up. So our environment recognition system, with some tweaks in the labels, could be used for identifying objects to be picked up and then that could be used to inform the assistance provided by a back exoskeleton.

Now, for an upper limb exoskeleton, this is really different in that we look specifically at locomotion environments. Now in an upper limb exoskeleton, we would assume that many of the activities that an exoskeleton for the upper limbs would do don’t necessarily show the walking environment, but really are at stationary settings. This may not always be the case, but I would venture to say that perhaps most of the cases would be when you’re providing assistance, when sitting down at a desk, doing a job, sitting down and eating food, brushing your teeth. And in that case, the terrain information isn’t really that valuable. So that being said, there are other data sets for upper limb exoskeletons, and that’s kind of being used to promote the design and control of those devices that are specifically for fields of view that show the hands, as well as objects which the hands could be manipulating, like a toothbrush or a cup.

Steven Cherry And your paper describes your success rate. The system is not exactly perfect and—I mean, it’s still at a very experimental stage—but this in principle, I mean, commercially, this is something where we would want something pretty close to perfection. We can’t have people falling down flights of stairs or walking into fire hydrants and falling into the street. What do you think it will take to get to something that we could rely on?

Brokoslaw Laschowski That’s a great question, and that’s one of the focuses of the remaining part of my Ph.D.: trying to hypothesize ways in which we can improve the design and training of these neural networks to perform better on large scale datasets. It’s important to note that if you had a smaller dataset that is not really encapsulating of many of the environments, you’re going to achieve, or you can achieve, relatively high accuracy. So if you were to read some of these papers or if you were to see some of these patents or a commercial product and they report high accuracy, well, that accuracy, that classification performance is only as good as the data set on which it is trained. So you can have 99 percent accuracy, but perhaps the environments in which you can achieve 99 percent accuracy are extremely limited, perhaps maybe only the research laboratory.

One of the things that we were really humbled by was the fact that the ExoNet database is so large. So we were really humbled by the scale and diversity of the data set in that instead of achieving 95 percent accuracy, which we were able to do on our smaller dataset published in 2019, we achieved accuracy in the low 70 percent range. And the reason is the data set is so challenging. That is really actually a good thing in that these challenging data sets can help promote the development of next-generation environment classification systems, where it’s really pushing the boundaries on what we as researchers can do in terms of enhancing the performance of these neural networks.

Some of the things that we can do to improve the accuracy—that’s really the purpose of the data set. It’s open source, so the entire research community can use their cool ideas and test them. And we can all use this common platform where now we can do direct comparisons. So if a research group out of Europe or a research group in the United States develops some new algorithm to be able to achieve high image classification performance, we would encourage them to try that on the ExoNet data set—not only try it, but also use the ExoNet data set to develop these algorithms.

And the idea is that once we have a common platform, we can start comparing. And we as a research community, using these large data sets, can then start achieving higher and higher classification accuracies. The accuracy that we perform, the accuracy that we report in our recent paper, this is the benchmark. This is the inaugural performance on the data set. The challenge now, the open challenge that we’re extending to the research community is to have people beat us. We want to be able to push the boundaries of what these classifiers can do, especially on such a challenging data set like ExoNet.
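The point that a reported accuracy is only as meaningful as the data behind it can be shown with a toy comparison. The example below is entirely made up: the "brightness" feature, the threshold classifier, and both data sets are assumptions chosen so the arithmetic is easy to follow, and none of the numbers come from ExoNet.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the ground-truth labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def classify(brightness):
    """Hypothetical classifier whose threshold was tuned on lab images only."""
    return "stairs" if brightness > 0.5 else "level"

def evaluate(dataset):
    """Score the classifier on a list of (feature, label) pairs."""
    predictions = [classify(feature) for feature, _ in dataset]
    labels = [label for _, label in dataset]
    return accuracy(predictions, labels)

# Narrow data set: conditions the classifier was tuned for.
lab_set = [(0.9, "stairs"), (0.8, "stairs"), (0.2, "level"), (0.1, "level")]

# Broader data set: the same samples plus conditions the lab never covered.
diverse_set = lab_set + [
    (0.3, "stairs"),  # e.g. a dimly lit stairwell
    (0.7, "level"),   # e.g. a sunlit sidewalk
    (0.4, "stairs"),
    (0.6, "level"),
]

lab_accuracy = evaluate(lab_set)
diverse_accuracy = evaluate(diverse_set)
```

The same classifier scores 1.0 on `lab_set` and 0.5 on `diverse_set`. Evaluating every new algorithm on one shared, diverse data set is what makes the direct comparisons described above possible.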

Steven Cherry Well, I personally would be able to use one of these devices only if the data set can discern the intent when I change my mind, you know, like, “oh, I forgot my keys” and go back in the house, which I personally do about, you know, maybe 10 times a day.

Brokoslaw Laschowski Right now, there is a little bit of a difference between recognizing the environment and recognizing the user’s intent. They’re related, but they’re not necessarily the same thing. So, Steven, you can imagine your eyes as you’re walking. They are able to sense a car as you’re walking towards the car. There is ... If you’re standing on the outside, you can imagine that as you get closer and closer to that car, this might infer that you want to get in the car. But not necessarily. Just because you see it, it doesn’t necessarily mean that you want to go and pursue that thing.

This is kind of the same case in—and this kind of comes back to your opening statement—this is kind of the same thing in walking where, as somebody is approaching a staircase, for example, our system is able to sense and classify those staircases, but that doesn’t necessarily mean that the user then wants to climb those stairs. But there is a possibility. And as you get closer to that staircase, the probability of climbing the stairs increases. The good thing is that we want to use what’s known as multi-sensor data fusion, where we’re combining the predictions from the camera system with the sensors that are on board. And the fusion of these sensors will be able to give us a more complete understanding as to what the user is currently doing and what they might want to do in the next step.
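The multi-sensor data fusion mentioned in this answer can take many forms. As a rough sketch of one simple option, and not the approach the paper commits to, the code below multiplies per-mode probabilities from the camera and from the onboard sensors and renormalises them, which amounts to a naive Bayes combination under the assumptions of independent sources and a uniform prior. The probabilities are invented for illustration.

```python
def fuse(camera_probs, sensor_probs):
    """Combine two per-mode probability estimates by product and renormalise.

    Assumes both dicts share the same keys, the two sources are conditionally
    independent, and the prior over modes is uniform.
    """
    joint = {mode: camera_probs[mode] * sensor_probs[mode] for mode in camera_probs}
    total = sum(joint.values())
    return {mode: value / total for mode, value in joint.items()}

def most_likely(probs):
    """Return the mode with the highest fused probability."""
    return max(probs, key=probs.get)

# The camera sees a staircase ahead (hypothetical classifier output).
camera = {"stairs": 0.8, "level": 0.2}

# Onboard sensors mildly favour continued level walking.
sensors_unsure = {"stairs": 0.3, "level": 0.7}
# Onboard sensors strongly indicate the user is turning away.
sensors_confident = {"stairs": 0.1, "level": 0.9}

fused_unsure = fuse(camera, sensors_unsure)
fused_confident = fuse(camera, sensors_confident)
```

With the mildly contrary sensor reading, `most_likely(fused_unsure)` is still `"stairs"`, while the strongly contrary reading flips `most_likely(fused_confident)` to `"level"`. That mirrors the point that seeing a staircase raises the probability of climbing it without settling what the user intends.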

Steven Cherry Well, there are a number of great challenges for society as a whole, such as climate change, but on the individual level, I think honestly few things are as important as giving handicapped people improved access to the world around us and someday perhaps giving everyone extra human capabilities. Thank you for devoting your time and energy and creativity to the challenge of better prosthetics and exoskeletons. And thank you for joining us today.

Brokoslaw Laschowski Thank you for having me, Steven.

Steven Cherry We’ve been speaking with Brokoslaw Laschowski, about a new development that uses machine learning to make prosthetic devices far more usable by understanding what the wearer’s intentions are. A new paper describing the advance, “Computer Vision and Deep Learning for Environment-Adaptive Control of Robotic Lower-Limb Exoskeletons,” is freely available and a link to it is in our show transcript.

Radio Spectrum is brought to you by IEEE Spectrum, the member magazine of the Institute of Electrical and Electronics Engineers, a professional organization dedicated to advancing technology for the benefit of humanity.

This interview was recorded April 8, 2021 using Zoom and Adobe Audition. Our theme music is by Chad Crouch.

You can subscribe to Radio Spectrum on Spotify, Stitcher, Apple, Google, and wherever else you get your podcasts, or listen on the Spectrum website, which also contains transcripts of this and all our past episodes. We welcome your feedback on the web or in social media.

For Radio Spectrum, I’m Steven Cherry.

To read more about Laschowski’s exoskeletons, check out “Robotic Exoskeletons Could One Day Walk by Themselves,” IEEE Spectrum, April 12, 2021.

Note: Transcripts are created for the convenience of our readers and listeners. The authoritative record of IEEE Spectrum’s audio programming is the audio version.

We welcome your comments on Twitter (@RadioSpectrum1 and @IEEESpectrum) and Facebook.
