Not all software is perfect—many apps, programs, and websites are released despite bugs. But the software behind critical systems like cryptographic protocols, medical devices, and space shuttles must be error-free, and ensuring the absence of bugs requires going beyond code reviews and testing. It requires formal verification.

Formal verification involves writing a mathematical proof of your code and is “one of the hardest but also most powerful ways of making sure your code is correct,” says Yuriy Brun, a professorat the University of Massachusetts Amherst.

To make formal verification easier, Brun and his colleagues devised a new AI-powered method called Baldur to automatically generate proofs. The accompanying paper, presented in December 2023 at the ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering in San Francisco, won a Distinguished Paper award. The team includes Emily First, who completed the study as part of her doctoral dissertation at UMass Amherst; Markus Rabe, a former researcher at Google, where the study was conducted; and Talia Ringer, an assistant professor at the University of Illinois Urbana-Champaign.

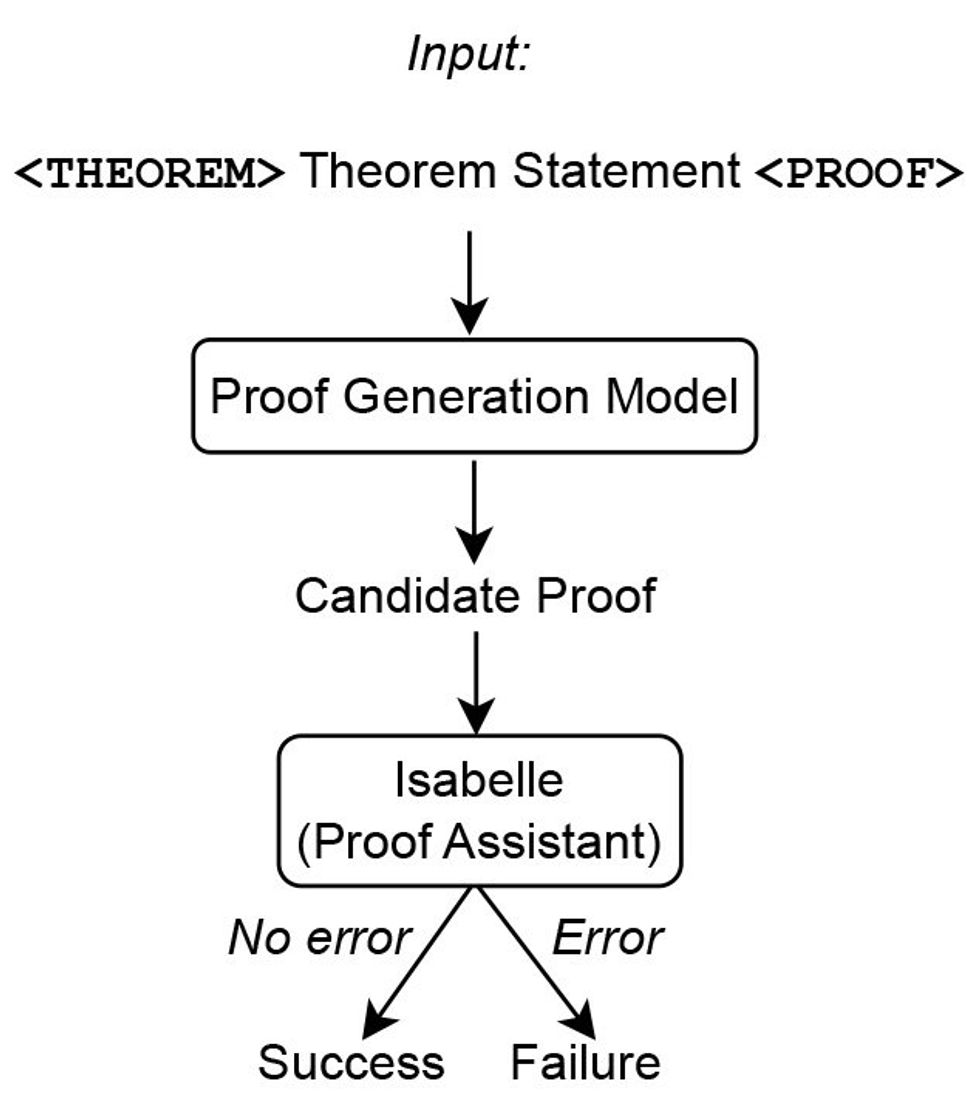

Baldur is powered by Google’s Minerva large language model (LLM)—which was trained on scientific papers and Web pages containing mathematical expressions—and fine-tuned on data about proofs and theorems. Baldur works with Isabelle, a proof assistant or automated theorem prover,to check its proofs. When given a theorem statement, Baldur is able to generate an entire proof almost 41 percent of the time.

To boost Baldur’s success, the team fed the model additional contextual information—such as other definitions or the lines preceding the theorem statement in a theory file—and found that the proof rate increased to 47.5 percent. This means that Baldur is able to take the context and use it to predict a new correct proof, says First. Similar to a programmer who may be better equipped to fix a bug in a method when they know how that method relates to its surrounding code and the other methods in the same class, Baldur can perform better with extra context.

Thor, the current state-of-the-art tool for automatic proof generation, has a higher proof rate at 57 percent. Baldur’s advantage lies in its ability to generate whole proofs; Thor predicts the next step in a proof using a smaller language model combined with a method that searches the space of possible proofs. But when Thor and Baldur (Thor’s brother in Norse mythology) work together, the pair can generate correct proofs nearly 66 percent of the time.

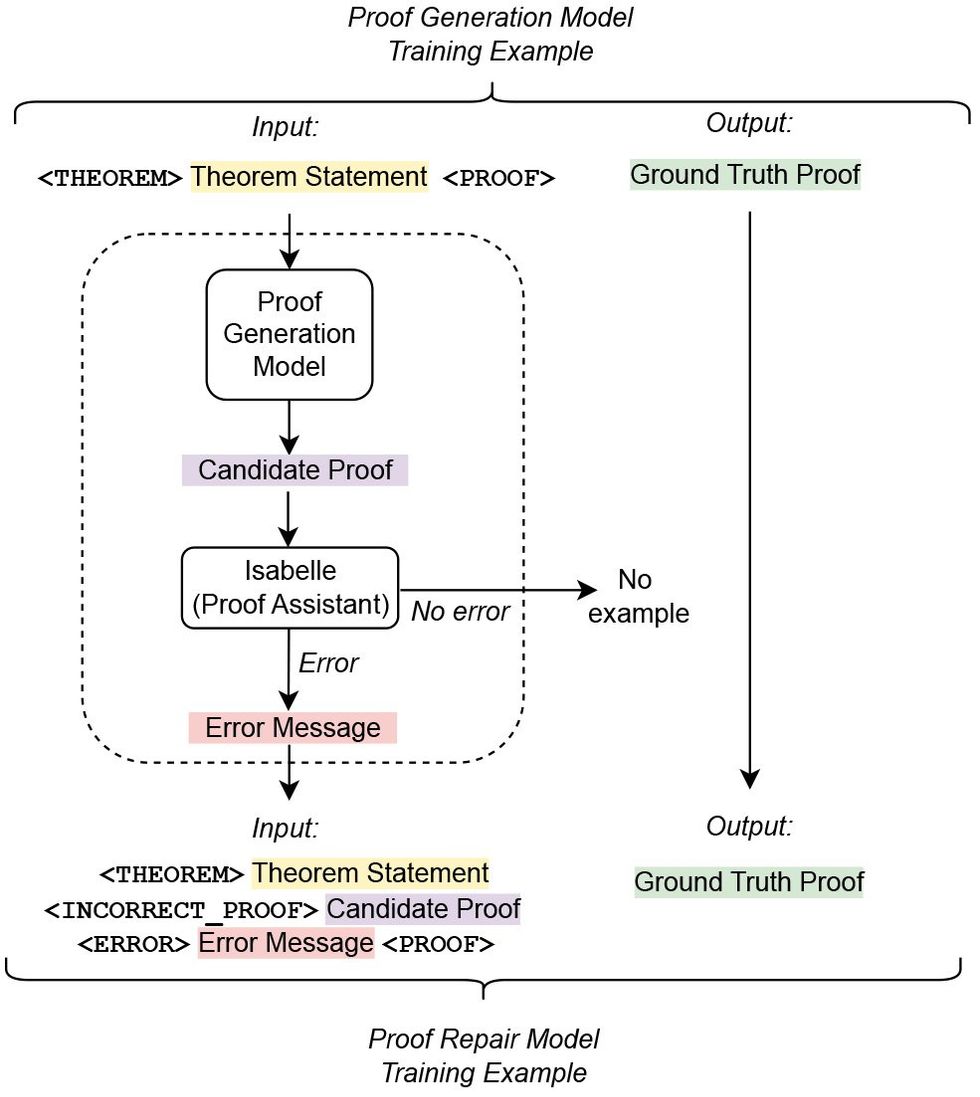

The team also discovered that Baldur can “repair” its own proofs, further improving its proof rate. When provided with its previous failed attempt plus the error message returned by Isabelle, Baldur can turn its wrong proof into a right one.

“The fact that the error message helped so much was surprising,” Brun says. “[It] suggests that there’s more useful information that could potentially be fed into the large language model to give better answers. We’re just scratching the surface.”

The team has yet to find the right amount of information that would be deemed valuable for the model. “One limitation is that we’re giving it some information around the proof that it’s trying to generate, but we don’t know what’s the limit and where it stops being useful,” says Brun. And there’s still a considerable degree of error, which he hopes can be improved by fine-tuning the model using more datasets that better explain what a proof looks like and what a theorem and proof pair look like.

In terms of next steps, First is looking at implementing the approach used for Baldur in other proof assistants, as well as uncovering ways to more smartly gather contextual information that can help enhance the model’s accuracy. The team envisions Baldur helping simplify the jobs of proof engineers, who are tasked with, for example, formal verification of national security systems at organizations like the U.S. Department of Defense and its Defense Advanced Research Projects Agency (DARPA).

On a broader scale, the team is planning on getting feedback from software developers to see how tools like Baldur can help them—be it through debugging an error in their code, refining specifications, or creating a higher-quality system.

“There’s a lot of power in building interactive tools where a developer is trying to prove some property of their code,” Brun says. “Understanding how developers can interact with these tools and supporting them by building automated approaches can take them even farther.”

Rina Diane Caballar is a writer covering tech and its intersections with science, society, and the environment. An IEEE Spectrum Contributing Editor, she's a former software engineer based in Wellington, New Zealand.