Weaving A Web of Ideas

Engines that search for meaning rather than words will make the Web more manageable

This is part of IEEE Spectrum's special R&D report: They Might Be Giants: Seeds of a Tech Turnaround.

Remember software agents? Our own little software robots, they were supposed to represent us on the World Wide Web, which was already shaping up to be more information than any unaided human could sift through. Agents were going to know all about our needs, likes, and interests. They would forage every night for news and information, book our business travel for us, even do the preliminary research for our next management report.

It never happened. These robots were hard to build--too hard, actually. After all, Web pages are designed for human consumption. Words have meaning, indeed, multiple meanings: Is a particular document on "banking" about saving money or turning an airplane? The cues we use to derive meaning--position on the page, context, graphics, and other nontext elements--were beyond any software agent's ken. And some of the best information on the Web was hidden in databases that agents couldn't enter.

Now committees of researchers from around the globe are attacking the problem from the other direction. They want to make the Web more homogeneous, more data-like, more amenable to computer understanding--and then agents won't have to be so bright. In other words, if Web pages could contain their own semantics--if we had a Semantic Web--software agents wouldn't need to know the meanings behind the words.

But in the meantime, the Web has continued to grow. By the late 1990s, the leading search engine of the day, AltaVista, could index only 30 percent of the Web. Searches often missed the most salient documents, and the ranking of hits with respect to search terms was poor. Just in time, along came Google with a better indexing engine and vastly better relevance ranking.

While Google can match the Web's astonishing growth, can it keep up with the expectations of its users? Someone who today says to a search engine, in effect, "Find some good documents on compound fractures of the ankle" will soon want to ask what he or she really wants to know: "Who are the best orthopedic surgeons near where I live, and are they included in my medical coverage?"

That sort of query can never be asked of an HTML-based Web. If we couldn't build intelligent software agents to navigate a simplistic Web, can we really build intelligence into the 3 billion or 10 billion documents that make up the Web?

While that sounds like moving the mountain to Mohammed, to Tim Berners-Lee, the inventor of the World Wide Web, it's not out of the question. The first step is to get a fulcrum under the mountain and lift it, and that step is well under way. That fulcrum is the extensible markup language (XML). A sort of HTML-on-steroids, this coding system isolates, under the hood, the dozens or even hundreds of data elements a Web page might contain. Right now, HTML coding serves mostly to control the appearance and arrangement of the text and images on a Web page, so only a few elements are tagged, such as <title> and <b> (for bold). With new XML tags, such as <price>, a software agent might be able to comparison shop across different Web sites or update an account ledger after an e-purchase.
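
To make the comparison-shopping idea concrete, here is a minimal Python sketch. It assumes two stores publish their catalogs with hypothetical catalog, item, name, and price tags (invented for illustration, not part of any standard vocabulary) and reads them with the standard-library xml.etree.ElementTree parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical catalogs from two stores; the tag names are illustrative only.
STORE_A = """<catalog store="A">
  <item><name>Widget</name><price currency="USD">19.99</price></item>
  <item><name>Gadget</name><price currency="USD">5.00</price></item>
</catalog>"""

STORE_B = """<catalog store="B">
  <item><name>Widget</name><price currency="USD">17.50</price></item>
</catalog>"""


def prices_for(product, catalogs):
    """Return (store, price) pairs for every catalog entry named `product`."""
    offers = []
    for xml_text in catalogs:
        root = ET.fromstring(xml_text)
        for item in root.iter("item"):
            if item.findtext("name") == product:
                # The price is tagged as data, so no page layout has to be guessed.
                offers.append((root.get("store"), float(item.findtext("price"))))
    return offers


def cheapest(product, catalogs):
    """Return the lowest-priced (store, price) offer, or None if nobody sells it."""
    offers = prices_for(product, catalogs)
    return min(offers, key=lambda offer: offer[1]) if offers else None


print(cheapest("Widget", [STORE_A, STORE_B]))  # ('B', 17.5)
```

Because the price is marked as data rather than as formatting, the agent never has to guess which number on a page is the price.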

It should come as no surprise that XML is yet another invention spearheaded by Berners-Lee and the organization he leads, the World Wide Web Consortium. With offices in Valbonne, France; Cambridge, Mass.; and Tokyo, and with a full-time staff of more than 60, the W3C, as it's called, brings together about 500 member organizations. While the IEEE Computer Society is one, most of the rest are large or mid-sized corporations like DaimlerChrysler [ranked 4 among the "Top 100 R and D Spenders"], Hewlett-Packard (30), and Autodesk. W3C also coordinates the work of additional researchers as well as volunteers from member and nonmember companies and academia.

The Semantic Web is just one item on the W3C's diverse agenda. Others are interoperability (in file formats, for example) and technologies for trust, like digital signatures. But the Semantic Web is increasingly important--four interest groups are working on its technologies.

Similar goal, simpler strategies

While the W3C works hard to coordinate the work of multifarious organizations, some other companies are overcoming the semantic shortcomings of a human-oriented Web without either restructuring it or waiting for smarter agents. Google Inc. (Mountain View, Calif.) has, to date, not only kept up with the Web's phenomenal growth but also added new categories of documents to its search results--PDFs, Usenet newsgroups, and image files. Autonomy Corp. (Cambridge, UK) and the Palo Alto (Calif.) Research Center [recently spun off from Xerox (73)] each use, in different ways, mathematical models of how long-term memory works in the brain to create concept maps out of the words on Web pages. At Verity Inc. (Sunnyvale, Calif.), researchers add things like organization charts and address books to infuse amorphous corporate documents with additional structure.

What companies like Google, Autonomy, and Verity are doing, in other words, is figuring out better ways of doing what search engines have always tried to do: deliver the best documents the existing Web has on a given topic. The advocates of the Semantic Web, on the other hand, are looking beyond the current Web to one in which agent-like search engines will be able not just to deliver documents but to get at the facts inside them as well. One thing everyone can agree on: even with its billions of pages and countless links, the Web, only a dozen years old, is still in its infancy. As Berners-Lee puts it, the next generation of the Web will be as revolutionary as the original Web itself was.

From words to concepts

The ideas behind the Semantic Web are innovations that simply extend current Web techniques in ways that make documents more datalike, so that agents can interact with them in sophisticated ways.

For instance, URIs (uniform resource identifiers) are like URLs (uniform resource locators), but more general: a URL is a link to an entity on the Web, while a URI identifies resources in general. (All URLs are URIs, but the reverse isn't true.) For Berners-Lee, items like human beings, corporations, and bound books in a library are resources, just not "network retrievable" ones.
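
The distinction can be illustrated with Python's standard urllib.parse module. The sketch below treats only http, https, and ftp identifiers as network-retrievable locators; that cutoff is a simplifying assumption, and the ISBN-based URN is just an example value standing in for a bound book.

```python
from urllib.parse import urlparse

# Assumption for illustration: only these schemes count as network-retrievable.
RETRIEVABLE_SCHEMES = {"http", "https", "ftp"}


def classify(uri: str) -> str:
    """Label a URI as a retrievable locator (URL) or an identifier only."""
    scheme = urlparse(uri).scheme.lower()
    if not scheme:
        raise ValueError(f"not an absolute URI: {uri!r}")
    return "URL" if scheme in RETRIEVABLE_SCHEMES else "URI only"


# A Web page is both a URI and a URL; a book named by its ISBN is a URI only.
for uri in ["https://www.w3.org/2001/sw/", "urn:isbn:0451450523"]:
    print(uri, "->", classify(uri))
```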

XML builds on a second fundamental Web technique: coding elements in a document. In the current scheme, HTML, codes such as <title> for an article's title, <b> for boldface type, and <table> to begin a table identify document elements only stylistically. XML, however, singles things out as data elements--as dates, prices, invoice numbers, and so on. In fact, XML allows users to mark up any data elements whatsoever.

The resource description framework (RDF) is the third component of the Semantic Web. RDF makes it possible to relate one URI to another. Each RDF statement says something about entities, often expressing a relation between them. An RDF statement might express, for example, that one individual is the sister of another, or that a new auction bid is greater than the current high offer. Ordinary statements in a language like English can't be understood by computers, but RDF-based statements are computer-intelligible because XML provides their syntax--marks their parts of speech, so to speak.
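
As a rough illustration, an RDF statement can be modeled as a (subject, predicate, object) triple whose parts are URIs. In this Python sketch the http://example.org/terms# namespace and the sisterOf and greaterThan properties are hypothetical, and for brevity the triples are printed in the line-based N-Triples syntax rather than in RDF's XML syntax.

```python
# Hypothetical vocabulary namespace for the example.
EX = "http://example.org/terms#"

# Each RDF statement is a (subject, predicate, object) triple of URIs.
graph = {
    (EX + "alice", EX + "sisterOf", EX + "bob"),
    (EX + "bid42", EX + "greaterThan", EX + "currentHighOffer"),
}


def objects(graph, subject, predicate):
    """Return every object that `subject` is related to by `predicate`."""
    return {o for s, p, o in graph if s == subject and p == predicate}


def to_ntriples(graph):
    """Serialize URI-only triples in N-Triples form: <s> <p> <o> ."""
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in sorted(graph))


print(objects(graph, EX + "alice", EX + "sisterOf"))
print(to_ntriples(graph))
```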

The Semantic Web notion that ties all the others together is that of an ontology--a collection of related RDF statements, which together specify a variety of relationships among data elements and ways of making logical inferences among them. A genealogy is an example of an ontology. The data elements consist of names, the familial relationships (like sisterhood and parenthood) that hold between them, and logical rules (if X is Y's sister, and Z is Y's daughter, then X is Z's aunt, and so on).
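
The aunt rule can be sketched as forward chaining over such statements: apply each rule to the known facts, add whatever it derives, and repeat until nothing new appears. The names and relation labels are hypothetical, and a real ontology would state the rule declaratively rather than as a Python function.

```python
# Facts as (subject, relation, object) triples; all names are hypothetical.
facts = {
    ("Xena", "sisterOf", "Yves"),
    ("Zoe", "daughterOf", "Yves"),
}


def aunt_rule(known):
    """If X is Y's sister and Z is Y's daughter, then X is Z's aunt."""
    derived = set()
    for x, rel, y in known:
        if rel != "sisterOf":
            continue
        for z, rel2, parent in known:
            if rel2 == "daughterOf" and parent == y:
                derived.add((x, "auntOf", z))
    return derived


def forward_chain(known, rules):
    """Apply every rule repeatedly until no new facts are derived."""
    known = set(known)
    while True:
        new = set().union(*(rule(known) for rule in rules)) - known
        if not new:
            return known
        known |= new


print(forward_chain(facts, [aunt_rule]) - facts)  # {('Xena', 'auntOf', 'Zoe')}
```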

"Syntax," "semantics," and "ontology" are concepts of linguistics and philosophy. Yet their meanings don't change when used by the theorists in the Semantic Web community. Syntax is the set of rules or patterns according to which words are combined into sentences. Semantics is the meaningfulness of the terms--how the terms relate to real things. And an ontology is an enumeration of the categories of things that exist in a particular universe of discourse (or the entire universe, for philosophers).

Such are the building blocks of the Semantic Web, and from them come expansive visions of the next Web. Those visions could be seen in the futuristic scenes Berners-Lee painted in an article last year for Scientific American. In one, your mother needs to see a medical specialist, so a software agent invoked by your browser looks up potential providers, insurance coverages, location maps, and your schedule, and suggests a particular doctor and appointment times.

"That won't happen in three or five years," Berners-Lee told IEEE Spectrum. "But a lot of little things that could make our lives a lot easier will happen by then." A search engine today, he notes, might rank pages describing doctors by how many other Web pages link to them. But an agent-personalized search engine might rank them according to the criteria you're concerned with: specialization, location, and insurance-plan coverage.

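The contrast Berners-Lee draws can be sketched in a few lines of Python. The Doctor records, their fields, and the sample values are all hypothetical; the point is only that one ranking uses link popularity while the other applies the user's own criteria of specialty, insurance plan, and distance.

```python
from dataclasses import dataclass


@dataclass
class Doctor:
    # Illustrative fields; an agent would read these from machine-readable pages.
    name: str
    specialty: str
    distance_km: float
    plans: frozenset
    inbound_links: int


def rank_by_links(doctors):
    """Popularity-style ranking: most-linked pages first."""
    return sorted(doctors, key=lambda d: d.inbound_links, reverse=True)


def rank_for_user(doctors, specialty, plan, max_km):
    """Keep only doctors meeting the user's criteria, nearest first."""
    eligible = [d for d in doctors
                if d.specialty == specialty and plan in d.plans and d.distance_km <= max_km]
    return sorted(eligible, key=lambda d: d.distance_km)


doctors = [
    Doctor("Dr. A", "orthopedics", 40.0, frozenset({"PlanX"}), 900),
    Doctor("Dr. B", "orthopedics", 5.0, frozenset({"PlanY"}), 120),
    Doctor("Dr. C", "cardiology", 3.0, frozenset({"PlanY"}), 2000),
]

print([d.name for d in rank_by_links(doctors)])  # ['Dr. C', 'Dr. A', 'Dr. B']
print([d.name for d in rank_for_user(doctors, "orthopedics", "PlanY", 25)])  # ['Dr. B']
```

The most-linked page here belongs to a cardiologist, which is no help to someone looking for an in-network orthopedist nearby.
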
Or consider a basic office task, an e-mail invitation to an out-of-town meeting complete with a directive to view the meeting details on a company Web page. Today, the recipient accepts the invitation, then cuts and pastes information into a calendar, an itinerary, an e-mail to a travel agent, a travel site query, and so on.

"Consider the alternative," Berners-Lee suggests. "Suppose the Semantic Web part of your browser says 'Oh, we have an entity of type meeting.' One thing you can do with anything of that type is right-click on it and select 'accept the appointment'."

The semantics of accepting a meeting invitation entails some very simple rules that require a start time and an end time. The browser finds the rules in an RDF and converts them into a vocabulary understood by your calendar. The RDF can in addition trigger the transfer of contact information for the people running the meeting, after picking it up from an address book. At a Web travel site, the destination and departure and return dates will already be entered. Just eliminating all the cutting and pasting, says Berners-Lee, will be an enormous benefit.
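
A minimal sketch of the "accept the appointment" step, assuming the meeting entity has already been extracted into a Python dictionary. The key names are hypothetical stand-ins for terms an RDF vocabulary might define; the rule enforced is the one in the text, that a start time and an end time are required, plus a sanity check that the meeting ends after it starts.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CalendarEntry:
    title: str
    start: datetime
    end: datetime
    location: str = ""


def accept_meeting(meeting: dict) -> CalendarEntry:
    """Turn a 'meeting' entity into a calendar entry (keys are hypothetical)."""
    missing = [key for key in ("start", "end") if key not in meeting]
    if missing:
        raise ValueError(f"meeting lacks required fields: {missing}")
    start = datetime.fromisoformat(meeting["start"])
    end = datetime.fromisoformat(meeting["end"])
    if end <= start:
        raise ValueError("a meeting must end after it starts")
    return CalendarEntry(meeting.get("title", "Meeting"), start, end,
                         meeting.get("location", ""))


invitation = {"title": "Design review", "location": "Boston",
              "start": "2002-10-15T09:00", "end": "2002-10-15T11:30"}
entry = accept_meeting(invitation)
print(entry.end - entry.start)  # 2:30:00
```

The same entry could then seed a travel-site query with the destination and dates already filled in.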

People express things like dates and locations in a hundred different ways. Only when those items are all identified as such can software take information from disparate Web pages and databases and turn it into items like appointments and departure times.
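
Normalizing those variants is the unglamorous first step. This Python sketch tries a short, illustrative list of date formats with datetime.strptime; it assumes month/day order for slashed dates and English month names, which a real system could not take for granted.

```python
from datetime import date, datetime

# A few of the many ways people write the same date (illustrative, not exhaustive).
FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d %B %Y", "%B %d, %Y", "%d.%m.%Y"]


def normalize_date(text: str) -> date:
    """Try each known format in turn and return one canonical date object."""
    for fmt in FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


for raw in ["2002-10-15", "10/15/2002", "15 October 2002", "October 15, 2002"]:
    print(raw, "->", normalize_date(raw).isoformat())
```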

Berners-Lee seems a bit embarrassed now by the grandiose nature of the examples in the Scientific American article. In another of them, the Semantic Web is responsible for the radio's volume lowering when a telephone rings, while in a third it books an entire business trip, including conference attendance, airline reservations, hotel, and rental car. But Eric Miller, who heads the W3C's technical work on the Semantic Web and was a co-author of the article, isn't at all abashed.

"Maybe a Web agent can't book your entire business trip today," Miller says. "Some day it will. But the baby steps we're now taking are worthy in their own right. If you can make all the travel arrangements in an hour instead of half a day, and you get a cheaper hotel rate because your Semantic Web agent noticed a corporate discount, that's a big help."

Still searching after all these years

The current king of the Web search world, Google, doubts the Web will ever be remade so as to be navigable by computers on their own. "It's going to be very hard," says Peter Norvig, Google's director of search quality. "Markup is an effort, there has to be a return." Norvig thinks that most Web page creators have little incentive to do the detailed markup required by XML. He notes that most users "don't even use today's simplest form of markup, word processor styles such as 'title' or 'body text.' Instead of adding structure, they set something in 20-point bold."

Norvig concedes that specific corners of the Web may use XML encoding and Semantic Web intelligence. "It can take off in e-commerce, for example, if there's a consortium of buyers in the automobile industries," he says.

Car parts are also on Jonathan Dale's mind. Dale is a member of the W3C's Web Ontology Group and a researcher at Fujitsu (26) Laboratories of America Inc., itself a corporate member of the W3C. The Sunnyvale, Calif., company is particularly interested in supply chain management, Dale explains. "For example, Ford doesn't make its own windshields. The time between ordering and receiving them might be as long as nine months, and if the supplier doesn't get it right, you could have some real problems. If you could get that down to one month, there would be a real benefit."

Manufacturing data, production schedules, delivery and purchase orders, and inventories all refer to many of the same entities, such as dates, weights, and part numbers. If they were part of the same ontology, the information could flow across databases within a company's operations, such as purchasing and warehousing, and even across companies, such as to and from a supplier's invoices and shipping orders.
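
One way to picture the benefit: once each department's field names are mapped onto a shared vocabulary, records from different systems can be matched directly. The field names and mappings below are hypothetical, and a real ontology would also capture units, class hierarchies, and rules, not just renamed fields.

```python
# Hypothetical local field names, each mapped onto one shared vocabulary.
PURCHASING = {"part_no": "partNumber", "due": "deliveryDate", "wt_kg": "weightKg"}
WAREHOUSE = {"sku": "partNumber", "arrival": "deliveryDate", "mass_kg": "weightKg"}


def to_shared(record, mapping):
    """Rename a record's fields into the shared vocabulary; keep unmapped fields."""
    return {mapping.get(field, field): value for field, value in record.items()}


def match_orders(purchase_orders, stock_records):
    """Pair each purchase order with the warehouse record for the same part."""
    by_part = {}
    for rec in stock_records:
        shared = to_shared(rec, WAREHOUSE)
        by_part[shared["partNumber"]] = shared
    pairs = []
    for order in purchase_orders:
        shared = to_shared(order, PURCHASING)
        if shared["partNumber"] in by_part:
            pairs.append((shared, by_part[shared["partNumber"]]))
    return pairs


orders = [{"part_no": "WS-100", "due": "2002-11-01", "wt_kg": 12.5}]
stock = [{"sku": "WS-100", "arrival": "2002-10-28", "mass_kg": 12.5},
         {"sku": "BR-7", "arrival": "2002-10-30", "mass_kg": 3.0}]
for order, item in match_orders(orders, stock):
    print(order["partNumber"], "due", order["deliveryDate"], "arrives", item["deliveryDate"])
```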

One early example of the Semantic Web vision is the way Amazon.com Inc. (Seattle, Wash.) has created an XML version of its database. For some time, Amazon has provided rudimentary tools that let another Web site create HTML pages listing books in the Amazon inventory, along with a purchase list that carries back to Amazon's site. The company now provides RDF-like tools for another company's developers to integrate Amazon purchases with their own. Thus, for example, one company could create a single shopping cart with items ordered from Amazon as well as from its own catalog. Under the increasingly common label "Web services," IBM's (5) WebSphere, Sun Microsystems' (42) Open Net Environment, and Microsoft's (12) .NET each provide metadevelopment tools of the sort that make Amazon's toolkit possible.

R.V. Guha, an IBM researcher and one of the inventors of RDF, predicts: "In a few years, just as every company has a www.company.com, providing human-readable information about the company, its offerings, etc., it will have a xml.company.com or data.company.com which provides the same information in a machine-readable form."

Brainy search methods

Valuable as the Semantic Web might be, it won't replace regular Web searching. Peter Pirolli, a principal scientist in the user interface research group at the Palo Alto Research Center (PARC), notes that usually a Web querier's goal isn't an answer to a specific question. "Seventy-five percent of the time, people are engaged in what we call sense-making," Pirolli says. Using the same example as Berners-Lee, he notes that if someone is diagnosed with a medical problem, what a family member does first is search the Web for general information. "They just want to understand the condition, possible treatments, and so on."

PARC researchers think there's plenty of room for improving Web searches. One method, which they call scatter/gather, takes a random collection of documents and gathers them into clusters, each denoted by a single topic word, such as "medicine," "cancer," "radiation," "dose," "beam." The user picks several of the clusters, and the software rescatters and reclusters them, until the user gets a particularly desirable set. According to Stuart Card, manager of the group, "The user gains an effective mental model of the topic area, and can get a good sense of what's in a million documents in about 15 minutes."
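
The scatter/gather loop can be sketched as follows. This is not PARC's implementation: plain k-means over word counts, seeded deterministically with the first k documents, stands in for the system's own clustering algorithms, and each cluster is labeled by its most frequent words.

```python
import math
from collections import Counter


def vectorize(doc):
    """Bag-of-words vector: word -> count."""
    return Counter(doc.lower().split())


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def scatter(docs, k, rounds=10):
    """Split docs into k clusters with plain k-means over word counts."""
    centroids = [vectorize(d) for d in docs[:k]]  # deterministic seeds: first k docs
    clusters = []
    for _ in range(rounds):
        clusters = [[] for _ in range(k)]
        for d in docs:
            v = vectorize(d)
            best = max(range(k), key=lambda i: cosine(v, centroids[i]))
            clusters[best].append(d)
        # New centroid = summed word counts of the cluster's members.
        centroids = [sum((vectorize(d) for d in c), Counter()) for c in clusters]
    return clusters


def label(cluster, n=3):
    """Describe a cluster by its n most frequent words."""
    counts = sum((vectorize(d) for d in cluster), Counter())
    return [w for w, _ in counts.most_common(n)]


def gather(clusters, chosen):
    """Pool the clusters the user picked so they can be scattered again."""
    return [d for i in chosen for d in clusters[i]]


docs = ["radiation dose beam therapy", "bond stock market yield",
        "radiation beam cancer dose", "stock market bond trading"]
for cluster in scatter(docs, 2):
    print(label(cluster), len(cluster))
```

Passing the output of gather back into scatter gives the iterative narrowing Card describes.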

The method works by precomputing a value for every word in the collection in relation to every other word. "The model is a Bayesian network, which is the same model that's used for describing how long-term memory works in the human brain," Card says.
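
As a much simpler stand-in for the Bayesian model Card mentions, the following Python sketch precomputes, for every pair of words, how many documents contain both, and then ranks a word's associates from that table. It shows only what "a value for every word in relation to every other word" can look like, not how PARC computes it.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(docs):
    """Count, for every pair of distinct words, how many documents contain both."""
    pairs = Counter()
    for doc in docs:
        words = sorted(set(doc.lower().split()))
        for a, b in combinations(words, 2):  # pairs are stored alphabetically
            pairs[(a, b)] += 1
    return pairs


def associates(pairs, word):
    """Return (other word, count) pairs for `word`, strongest first."""
    scores = Counter()
    for (a, b), n in pairs.items():
        if a == word:
            scores[b] += n
        elif b == word:
            scores[a] += n
    return scores.most_common()


docs = ["peanut butter jelly", "peanut butter bread", "bread butter", "jelly bread"]
table = cooccurrence(docs)
print(associates(table, "peanut")[0])  # ('butter', 2)
```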

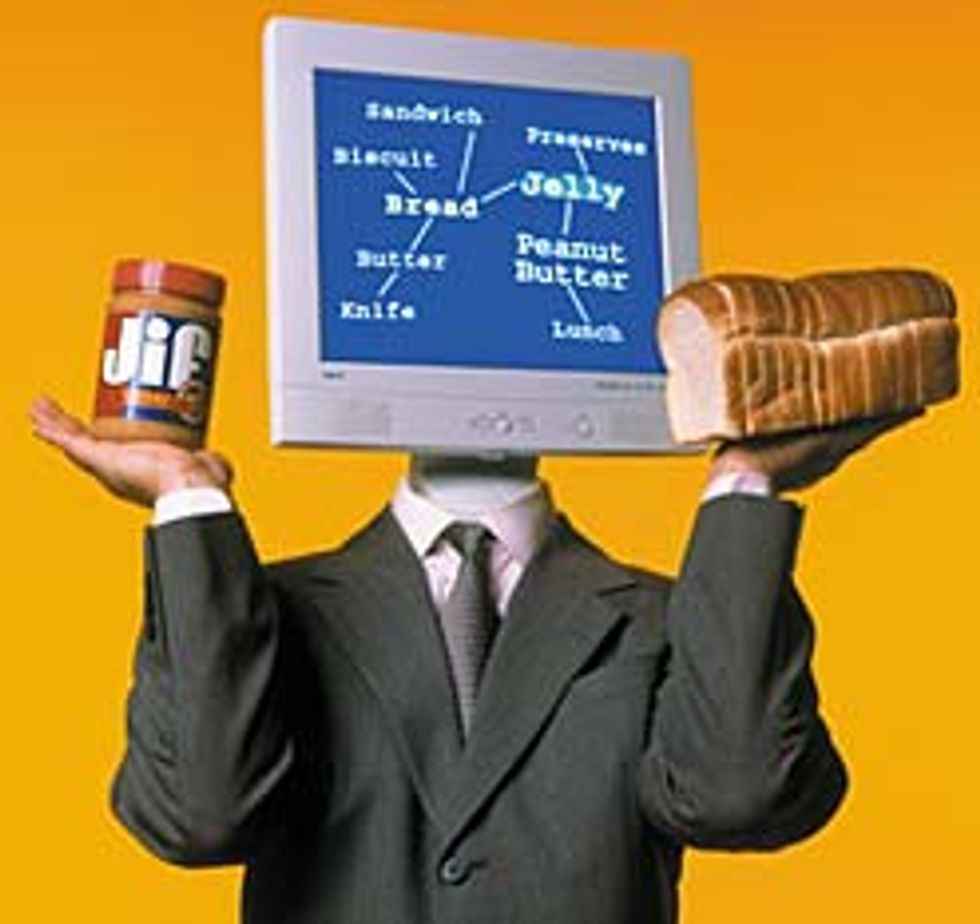

According to this picture of long-term memory (there are others), neurons are linked to one another in a weighted fashion (represented by synapses). These connection weights are strengthened whenever a pattern of neural activity includes both neurons, so frequently associated neurons can activate one another. For example, if your grocer stocks peanut butter on the same shelves as the bread, you might think of jelly, if that's a common sandwich combination for you. The unseen jelly is the activated, or associated, concept. Given a way of assigning weights to the links between concepts, this model can also describe concepts in a concept space. And a concept space is one way to think of terms in a document collection, such as the World Wide Web.
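
The associative picture can be mimicked with a toy Hebbian network: every time two concepts are active together, the link between them is strengthened, and activating some concepts then spreads to their neighbors. The class, learning rate, and one-step spreading rule below are illustrative assumptions, not a model of real neurons or of any company's product.

```python
from collections import defaultdict
from itertools import combinations


class AssociativeMemory:
    """Toy Hebbian network: links between co-active concepts get stronger."""

    def __init__(self, rate=1.0):
        self.rate = rate
        self.weights = defaultdict(float)  # key: frozenset({concept_a, concept_b})

    def experience(self, concepts):
        """Strengthen the link between every pair of concepts active together."""
        for a, b in combinations(set(concepts), 2):
            self.weights[frozenset((a, b))] += self.rate

    def activate(self, cues):
        """Spread one step of activation from the cues; rank the unseen concepts."""
        cues = set(cues)
        activation = defaultdict(float)
        for pair, weight in self.weights.items():
            if len(pair & cues) == 1:  # exactly one end of the link is a cue
                (target,) = pair - cues
                activation[target] += weight
        return sorted(activation.items(), key=lambda kv: (-kv[1], kv[0]))


memory = AssociativeMemory()
for _ in range(3):
    memory.experience(["peanut butter", "bread", "jelly"])
memory.experience(["bread", "cheese"])
print(memory.activate(["peanut butter", "bread"]))  # [('jelly', 6.0), ('cheese', 1.0)]
```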

For Autonomy, Bayesian networks are the starting point for improved searches. The heart of the company's technology, which it sells to corporations like General Motors (2) and Ericsson (11), is a pattern-matching engine that distinguishes different meanings of the same term and so "understands" them as concepts. Autonomy's system, by noting that the term "engineer" sometimes occurs in a cluster with others like "electricity," "power," and "electronics" and sometimes with "cement," "highways," and "hydraulics," can tell electrical from civil engineers. In a way, Autonomy builds an ontology without XML and RDFs.
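
The idea of telling senses apart by the company a word keeps can be sketched with simple word overlap. Autonomy learns its clusters statistically; here the two context sets for "engineer" are hand-written, hypothetical examples built from the terms mentioned above.

```python
# Hand-written, hypothetical context profiles for two senses of "engineer".
SENSES = {
    "electrical engineer": {"electricity", "power", "electronics"},
    "civil engineer": {"cement", "highways", "hydraulics"},
}


def disambiguate(text, senses=SENSES):
    """Choose the sense whose context words overlap most with the text."""
    words = set(text.lower().replace(",", " ").replace(".", " ").split())
    scores = {sense: len(words & context) for sense, context in senses.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None  # None: no evidence either way


print(disambiguate("Wanted: engineer for power electronics design."))  # electrical engineer
print(disambiguate("The engineer inspected cement work on two highways."))  # civil engineer
```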

Autonomy uses other techniques as well, such as tacit profiling, which tracks the set of related concepts an employee uses when searching either the Internet or corporate documents and databases. When another employee searches on one of those concepts, the others are suggested. Alternatively, documents that one person seems to find useful become suggestions for the other. The person can even become a recommendation: "Jane seems to be looking at the same things, here's her e-mail address."
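
Tacit profiling can be approximated in a few lines: build each user's concept profile from a search log, then, when someone searches on a concept, suggest the other concepts and the colleagues found in matching profiles. The log format and names are hypothetical, and this is not Autonomy's algorithm.

```python
from collections import Counter


def build_profiles(search_log):
    """search_log holds (user, concept) pairs; return each user's concept counts."""
    profiles = {}
    for user, concept in search_log:
        profiles.setdefault(user, Counter())[concept] += 1
    return profiles


def suggest(profiles, user, concept):
    """Return (related concepts, colleagues) for `user` searching on `concept`."""
    related = Counter()
    colleagues = []
    for other, counts in profiles.items():
        if other == user or concept not in counts:
            continue
        colleagues.append(other)
        for c, n in counts.items():
            if c != concept:
                related[c] += n
    return [c for c, _ in related.most_common()], sorted(colleagues)


log = [("jane", "fuel cells"), ("jane", "hydrogen storage"), ("jane", "hydrogen storage"),
       ("raj", "fuel cells"), ("raj", "membranes"), ("li", "tax law")]
print(suggest(build_profiles(log), "sam", "fuel cells"))
# (['hydrogen storage', 'membranes'], ['jane', 'raj'])
```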

Another leading company in search engine technology is Verity, with customers like Compaq and Ernst and Young. It also uses profiling and other techniques, leveraging the "power of the social network," according to the company's chief technology officer Prabhakar Raghavan. Search technologies are, to his way of thinking, a means of adding value to documents after they're created. "Structure is value. When you navigate a Yahoo taxonomy, Yahoo has added structure. Google ranks pages by analyzing link structure [to other Web sites]."

Verity therefore adds value by adding more structure. For example, an organization chart implicitly links pieces of information. "Similarly, classifying some documents as product sheets, or as written in English, or as having been modified in the last week, creates implicit links," says Raghavan. He notes that Google's method of ranking Web pages (it ranks a document highly if other documents link to it) wouldn't even work within an enterprise, where most content isn't hyperlinked in the first place.
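
Raghavan's point about enterprise content can be checked with the textbook PageRank power iteration, as published by Google's founders; Google's production ranking is more elaborate. The page names are hypothetical. On a small linked web the most-linked page comes out on top, while on an "intranet" with no hyperlinks every document ends up with the same score, so the method has nothing to rank by.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict: page -> list of pages it links to.

    Pages with no outgoing links spread their rank evenly over all pages.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in pages}
        for p in pages:
            targets = links.get(p, [])
            if targets:
                share = damping * rank[p] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                for t in pages:
                    new[t] += damping * rank[p] / n
        rank = new
    return rank


web = {"a": ["c"], "b": ["c"], "c": ["a"]}
intranet = {"memo1": [], "memo2": [], "memo3": []}
print(pagerank(web))       # "c", linked from both other pages, scores highest
print(pagerank(intranet))  # every memo gets the same score
```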

Verity and Google agree on one issue, however. Like Norvig, Raghavan questions whether companies and individuals will go through the expense and effort of detailed XML tagging and RDF building. "Tim Berners-Lee has a nice point," Raghavan says, "If everyone would conform to a standard tagging scheme, the world would be a wonderful place. But history has taught us that what's individually advantageous doesn't align with what's socially advantageous."

Ironically, though, semantic technologies like those of Verity and Autonomy can reduce those costs. The same categorization algorithms that make their search tools so powerful can be used to automate XML tagging. Indeed, because two individuals probably won't tag the same document identically, automated tagging may be more consistent.
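
As a sketch of automated tagging, the following wraps recognized spans of text in XML elements. Two regular expressions, one for dollar prices and one for ISO-style dates, are hypothetical stand-ins for the trained categorization algorithms the text describes; what they share with such tools is that the same input always receives the same tags.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical patterns standing in for a trained categorizer.
PATTERNS = {
    "price": re.compile(r"\$\d+(?:\.\d{2})?"),
    "date": re.compile(r"\d{4}-\d{2}-\d{2}"),
}


def auto_tag(text):
    """Wrap every recognized span in an XML element named for its category."""
    matches = sorted((m.start(), m.end(), tag)
                     for tag, rx in PATTERNS.items() for m in rx.finditer(text))
    out, pos = [], 0
    for start, end, tag in matches:
        if start < pos:  # skip a match that overlaps one already tagged
            continue
        out.append(escape(text[pos:start]))
        out.append(f"<{tag}>{escape(text[start:end])}</{tag}>")
        pos = end
    out.append(escape(text[pos:]))
    return "<doc>" + "".join(out) + "</doc>"


print(auto_tag("Order shipped 2002-10-15 for $19.99."))
# <doc>Order shipped <date>2002-10-15</date> for <price>$19.99</price>.</doc>
```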

Are there 10 billion documents on the Web? The fact that no one knows is indicative of how wild a frontier it still is. As the Web continues to grow at a rate not unlike that of Moore's Law, it will take both the Semantic Web and the concept-mapping tools of the search companies to tame it.

To Probe Further

The World Wide Web Consortium's homepage for the Semantic Web is at https://www.w3.org/2001/sw/.

The May 2001 Scientific American article on the Semantic Web, by Tim Berners-Lee, J. Hendler, and O. Lassila, is online at https://www.sciam.com/article.cfm?articleID=00048144-10D2-1C70-84A9809EC588EF21.

The Palo Alto Research Center Inc.'s scatter/gather method of helping someone home in on documents of particular interest is described at https://www.acm.org/sigs/sigchi/chi96/proceedings/papers/Pirolli/pp_txt.htm.

Bayesian networks and their relation to Web searching are discussed in "Spinning a Web Search," by Mark Lager, available at https://www.library.ucsb.edu/untangle/lager.html.