It’s looking more and more like future super-powerful quantum computers will be made of the same stuff as today’s classical computers: silicon. A new study lays out an architecture for how silicon quantum computers could scale up in size and enable error correction, both crucial steps toward making practical quantum computing a reality.

All quantum computing efforts rely on “spooky” quantum physics that allows the spin state of an electron or an atom’s nucleus to exist in more than one state at the same time. That means each quantum bit (qubit) can represent information as both a 1 and 0 simultaneously. (A classical computing bit can only exist as either a 1 or a 0, but not a mix of both.) Previously, Australian scientists demonstrated single qubits based on both the spin of electrons and the nuclear spin of phosphorus atoms embedded in silicon. Their latest work establishes a quantum computing architecture that paves the way for building and controlling arrays of hundreds or thousands of qubits.

“As you start to scale the qubit architectures up, you have to move away from operating individual qubits to showing how can you make a processor that allows you to operate multiple qubits at the same time,” says Michelle Simmons, a physicist at the University of New South Wales in Australia.

Simmons and her colleagues detailed the silicon quantum computing architecture in the 30 October online issue of the journal Science Advances. The University of New South Wales team handled the experimental side, whereas another team from the University of Melbourne provided the theoretical foundation for the project.

The idea of silicon quantum computing was first proposed in 1998 by Bruce Kane, a physicist at the University of Maryland, in College Park. Quantum computers based on familiar silicon could theoretically be manufactured in conjunction with the conventional semiconductor techniques found in today’s computer industry. A silicon approach to quantum computing also offers the advantage of strong stability and high coherence times for qubits. (High coherence times mean the qubits can continue holding their information for long enough to complete calculations.)

Researchers have made steady progress toward Kane’s vision since that time. Australian teams demonstrated mastery of single qubits based on electron spin in 2012 and control of nuclear spin qubits in 2013. Those results showed the benefits of atom qubits: record coherence times and high fidelity.

But until now, it was unclear how to build large arrays of such qubits without requiring an impracticably huge number of control circuitry lines for manipulating the qubits and reading their information. In addition, researchers needed a scalable architecture suitable for a type of quantum error correction called surface code. The surface code requires the ability to control nearly all qubits in parallel and synchronously.

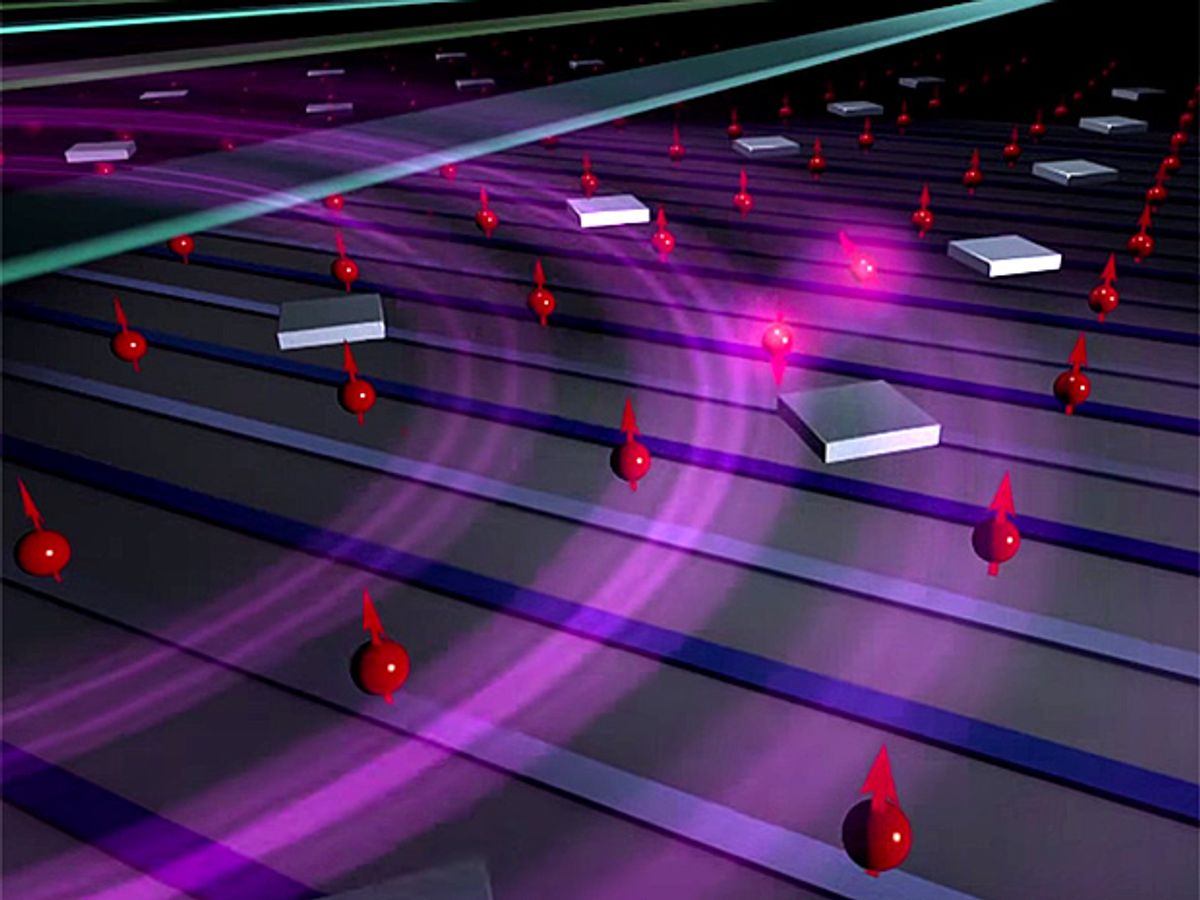

The new research presents a solution to the scaling problem for this version of silicon quantum computing. Researchers detailed an architecture that sandwiches a 2-D layer of nuclear spin qubits between an upper and lower layer of control lines. This triple-layer architecture enables a smaller number of control lines to activate and control many qubits at the same time.

“Instead of sending a number of signals down the control lines to the qubit array—roughly equal to the number of qubits—in this approach we’re able to send far fewer signals to the qubits,” says Lloyd Hollenberg, a physicist at the University of Melbourne. “So the control is much simpler.”

In theory, the new architecture could pack about 25 million physical qubits within an array that’s 150 micrometers by 150 micrometers. But those millions of qubits would require just 10,000 control lines. By comparison, an architecture that tried to control each qubit individually would require more than 1000 times as many control lines.

That theoretical array of 25 million physical qubits assumes a 30-nanometer separation between each qubit. But the team believes it’s possible to design similar arrays with closer separations and faster operation times, Hollenberg says.
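The figures quoted above can be checked with a little arithmetic. The sketch below is only a rough illustration, not the researchers' own calculation: it assumes the qubits sit on a square grid and that the control lines are shared, with one line per row in one layer and one per column in the other. The function and variable names are hypothetical.

```python
def grid_array_estimate(side_nm: int, pitch_nm: int) -> dict:
    """Estimate qubit and control-line counts for a square qubit grid.

    Assumption (not stated in the study summary): shared control lines
    run one per row in one layer and one per column in the other.
    """
    per_side = side_nm // pitch_nm       # qubits along one edge of the array
    qubits = per_side ** 2               # total qubits on the 2-D grid
    shared_lines = 2 * per_side          # one line per row plus one per column
    return {
        "per_side": per_side,
        "qubits": qubits,
        "shared_lines": shared_lines,
        # How many times more lines a one-line-per-qubit scheme would need
        "ratio": qubits / shared_lines,
    }


if __name__ == "__main__":
    # 150 micrometers = 150,000 nanometers; 30-nanometer qubit separation
    est = grid_array_estimate(side_nm=150_000, pitch_nm=30)
    print(est)
```

Under these assumptions, a 150-micrometer side at a 30-nanometer pitch gives 5000 qubits per edge, or 25 million in total, served by 10,000 shared lines. Giving each qubit its own line would take 2500 times as many, which is consistent with the "over 1000 times" comparison.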

The Australian researchers have also begun seeing how the silicon quantum computing architecture can work with the surface code error correction. In the short term, they plan to build non-error-corrected arrays with sizes between 100 and 1000 qubits as “quantum simulators.” But they eventually want to take the next step of enabling small-scale surface code error correction to make this a practical quantum computing approach.

“We aim to perform the first error correction protocols within five years,” Simmons says.

The latest breakthrough represents a crucial step for this approach to silicon quantum computing, but it’s not the only possible way to build quantum computers in silicon. Another University of New South Wales team recently built a two-qubit logic gate in silicon based on electrons trapped in quantum dots. [For more, see "Will Silicon Save Quantum Computing?" IEEE Spectrum, October 2014.]

The race to build quantum computers also includes several methods that do not necessarily use silicon. Some research labs have tried trapping and isolating ions by using electromagnetic fields. Others, including Google’s Quantum AI Lab, have experimented with qubits based on superconducting metal circuits. Earlier this year, Google researchers became the first to demonstrate surface code error correction on a linear array of nine superconducting qubits.

And Canadian company D-Wave Systems has taken the superconducting quantum circuits in a different direction by focusing on a more specialized version of quantum computing, called quantum annealing, that can only solve a more limited set of so-called optimization problems. D-Wave recently installed a 1000-qubit version of its quantum annealing machines at Google’s AI Lab for ongoing testing.

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications such as Scientific American, Discover, Popular Science, and others. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.