Would You Trust a Robot Surgeon to Operate on You?

Precise and dexterous surgical robots may take over the operating room

Inside the glistening red cave of the patient's abdomen, surgeon Michael Stifelman carefully guides two robotic arms to tie knots in a piece of thread. He manipulates a third arm to drive a suturing needle through the fleshy mass of the patient's kidney, stitching together the hole where a tumor used to be. The final arm holds the endoscope that streams visuals to Stifelman's display screens. Each arm enters the body through a tiny incision about 5 millimeters wide.

To watch this tricky procedure is to marvel at what can be achieved when robot and human work in tandem. Stifelman, who has done several thousand robot-assisted surgeries as director of NYU Langone's Robotic Surgery Center, controls the robotic arms from a console. If he swivels his wrist and pinches his fingers closed, the instruments inside the patient's body perform exactly the same motions on a much smaller scale. “The robot is one with me,” Stifelman says as his mechanized appendages pull tight another knot.

Yet some roboticists watching this dexterous performance would see not a modern marvel but wasted potential. Stifelman, after all, is a highly trained expert with valuable skills and judgment, yet he's spending his precious time suturing, just tidying up after the main surgery. If the robot could handle this tedious task on its own, the surgeon would be freed up for more important work.

Today's surgical robots extend the surgeon's capacities; they filter out hand tremors and allow maneuvers that even the best surgeon couldn't pull off with laparoscopic surgery's typical long-handled tools (sometimes dismissively called “chopsticks”). But at the end of the day, the robot is just a fancier tool under direct human control. Dennis Fowler, executive vice president of the surgical robotics company Titan Medical, is among those who think medicine will be better served if the robots become autonomous agents that make decisions and independently carry out their assigned tasks. “This is a technological intervention to add reliability and reduce errors of human fallibility,” says Fowler, who worked as a surgeon for 32 years before moving to industry.

Giving robots such a job promotion isn't particularly far-fetched; much of the required technology is being rapidly developed in academic and industrial labs. Working primarily with rubbery mock-ups of human tissue, experimental robots have sutured, cleaned wounds, and cut out tumors. Some trials pitted robotic systems against humans and found the robots to be more precise and efficient. And just last month, a robotic system in a Washington, D.C., hospital demonstrated a similar edge while stitching up real tissue taken from a pig's small intestine. Researchers compared the performance of the autonomous bot and a human surgeon on the same suturing task and found that the bot's stitches were more uniform and made a tighter seal.

While these systems are nowhere near ready for use on human patients, they may represent the future of surgery. It's a sensitive subject because it seems to suggest putting surgeons out of work. But the same logic governs both the operating room and the assembly line: If increased automation improves outcomes, there's no stopping it.

Hutan Ashrafian, a bariatric surgeon and a lecturer at Imperial College London, has studied robotic surgery outcomes and often writes about the potential for artificial intelligence in health care: “I think about it all the time,” he admits. He considers automated surgical bots “inevitable,” although he's careful to define his terms. In the foreseeable future, Ashrafian expects surgical robots to handle simple tasks at a surgeon's command. “Our goal is to improve patient outcome,” he says. “If using a robot means saving lives and lowering risks, then it's incumbent on us to apply these devices.”

Ashrafian also looks further ahead: He says it's entirely possible that medicine will eventually employ next-level surgical robots that have enough decision-making power to be considered artificially intelligent. Not only could such machines handle routine tasks, they could take over entire operations. That prospect may seem unlikely now, Ashrafian says, but the path of technical innovation may lead us there naturally. “It's step-by-step, and each one doesn't seem big,” he says. “But a surgeon from 50 years ago wouldn't recognize my operating room. And 50 years from now, I expect it will be a different world of surgery.”

Surgical robots already make decisions and take independent action more often than you might realize. In surgery for vision correction, a robotic system cuts a flap in the patient's cornea and plots out the series of laser pulses to reshape its inner layer. In knee replacements, autonomous robots cut through bone with greater accuracy than human surgeons. At expensive clinics for hair transplants, a smart robot identifies robust hair follicles on the patient's head, harvests them, and then prepares the bald spot for the implants by poking tiny holes in the scalp in a precise pattern—sparing the doctor many hours of repetitive labor.

Surgeries involving the soft tissues within the chest, abdomen, and pelvic region, however, pose a messier challenge. Every patient's anatomy is a little different, so an autonomous robot will have to be very good at identifying squishy internal organs and snaking blood vessels. What's more, all the parts that make up a person's innards can shift around during procedures, so the robot will have to continuously modify its surgical plan.

We Already Trust Robot Surgeons

Smart surgical systems now make decisions and carry out independent actions in a number of operations

Spine

The guidance robot from Mazor Robotics uses the patient's CT scans to position a frame for surgeons' tools, giving them 1.5-millimeter accuracy.

Hair

In hair-graft surgeries, Restoration Robotics' system identifies hair follicles for harvesting, cuts them out, and makes tiny incisions for the transplants.

Eye

In LASIK vision-correction surgery, robotic systems from a variety of companies plan how to reshape patients' corneas, then fire laser pulses while correcting for eye movement.

Hip & Knee

In joint-replacement surgeries, a robot from Think Surgical cuts through bone at precise angles to give the artificial knee the best possible alignment and stability.

Cancer Tumors

The robotic radiosurgery system from Accuray circles the patient, correcting for patient movement while delivering targeted beams of high-dose radiation to the tumor.

An autonomous surgical robot must also be reliable in a crisis. That challenge becomes clear back at the NYU surgery center, where Stifelman releases the arterial clamp that blocked blood flow to the kidney during the tumor removal. “Now we've got to make sure we're not hemorrhaging,” he says as he maneuvers his endoscope around the organ. Most of the stitched-up incision looks neat, but suddenly a gusher of bright red fills his view screen. “Oh boy, you see that? Get me another suture,” he instructs his assistant. Once his quick stitch has quelled the flow, Stifelman can proceed with the routine cleanup.

For Stifelman, it's all in a day's work, but how well would a robosurgeon have handled the unexpected complication? Its imaging and computer vision would first need to recognize the spurt of red liquid as a serious problem. Next, its decision-making software would have to figure out how best to stitch up the gash. Then its instruments would whir into action, wielding needle and thread. Finally, an evaluation program would assess the results and determine whether further actions were necessary. Getting a robot to master each of those steps (sensing, decision making, action, and evaluation) represents a mighty challenge for engineers.
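The four-stage loop described above can be sketched in a few lines of Python. This is a toy illustration only, not how any real surgical robot works: the `SurgicalSite` class, the 0-to-1 `bleed_rate` score, the 0.2 threshold, and the assumption that each stitch halves the bleeding are all hypothetical stand-ins for real imaging, planning, and instrument control.

```python
from dataclasses import dataclass


@dataclass
class SurgicalSite:
    """Hypothetical stand-in for the patient; bleed_rate is an arbitrary 0-1 score."""
    bleed_rate: float


def sense(site: SurgicalSite) -> float:
    # Sensing: a real system would estimate this from endoscope video.
    return site.bleed_rate


def decide(reading: float, threshold: float) -> str:
    # Decision making: suture only if the sensed bleeding looks serious.
    return "suture" if reading > threshold else "continue"


def act(site: SurgicalSite) -> None:
    # Action: toy assumption that each stitch halves the bleeding.
    site.bleed_rate *= 0.5


def evaluate(site: SurgicalSite, threshold: float) -> bool:
    # Evaluation: re-sense after acting and report whether the problem is resolved.
    return sense(site) <= threshold


def repair_loop(site: SurgicalSite, threshold: float = 0.2, max_stitches: int = 10) -> int:
    """Run sense -> decide -> act -> evaluate until resolved; return stitches placed."""
    stitches = 0
    while stitches < max_stitches:
        if decide(sense(site), threshold) == "continue":
            break
        act(site)
        stitches += 1
        if evaluate(site, threshold):
            break
    return stitches


print(repair_loop(SurgicalSite(bleed_rate=0.9)))  # 3
```

The `max_stitches` cap is a crude safeguard so the toy loop always terminates; everything hard about the real problem lives inside `sense` and `decide`, which here are one-liners.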

Stifelman, now at Hackensack University Medical Center, in New Jersey, performed his NYU surgeries with the assistance of the da Vinci robot. This machine, from Intuitive Surgical of Sunnyvale, Calif., costs up to $2.5 million and is the only robotic system for soft-tissue surgery approved by U.S. regulators. The da Vinci dominates the market with more than 3,600 installed in hospitals worldwide. Its road to commercial success hasn't always been smooth; patients have brought lawsuits regarding mishaps on the operating table, and one study found that such mishaps were underreported. Some surgeons continue to debate whether robotic surgeries bring real benefits over laparoscopic operations carried out by hand, citing conflicting studies of patient outcomes in various procedures. Despite such controversies, many hospitals have embraced Intuitive's technology, and many patients seek it out.

The da Vinci keeps the human surgeon in complete control; its arms remain inert pieces of plastic and metal until a doctor grasps the levers at the console. Intuitive intends to keep it that way for now, says Simon DiMaio, who manages the company's R&D on advanced systems. However, he adds, robotics experts are already working toward a future where human surgeons operate “with increasing levels of assistance or guidance from a computer.”

DiMaio likens research in this area to early efforts on self-driving cars. “The first steps were recognition of road markings, obstacles, cars, and pedestrians,” he notes. Engineers next made intelligent cars that used their understanding of the environment to help their drivers; for example, a car that knows the locations of surrounding vehicles can warn its driver if he or she is about to make an ill-advised lane change. For surgical robots to provide similar warnings, perhaps by alerting a surgeon whose instruments stray from the typical path, they'll need to get a whole lot smarter. Luckily, some are already getting schooled.

The surgical robot sitting in the corner of the lab at the University of California, Berkeley, can't tie knots yet, but it's pretty deft at suturing. Working on a mock piece of flesh, one robotic arm drives a curved yellow needle through both edges of a simulated wound. A second grasper pulls the needle as it emerges to tighten the thread. No human hand guides the arms' motions, and no human brain plots their course. The autonomous robot then passes the needle back and starts the next stitch.

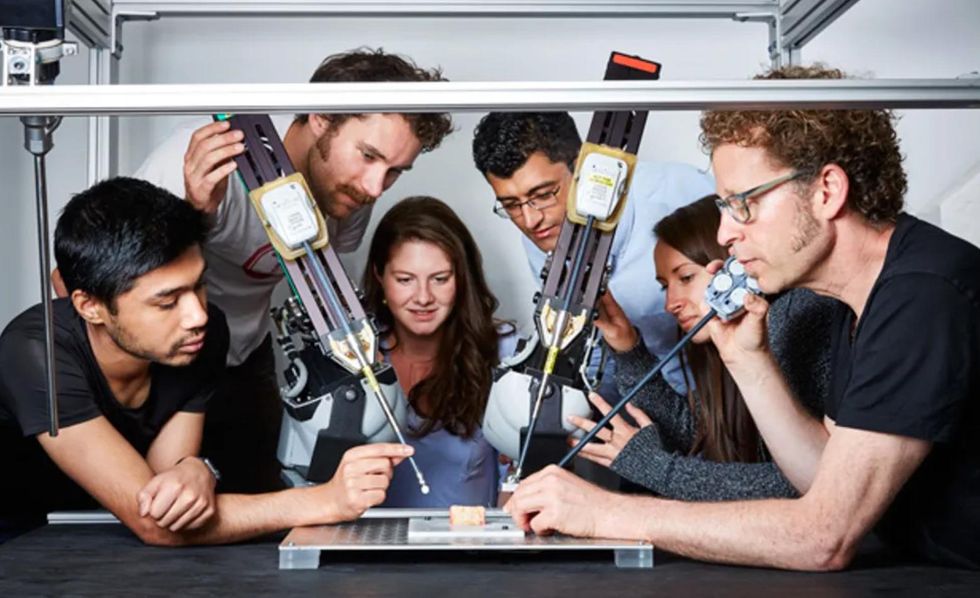

Ken Goldberg darts around his lab, looking like a wild-haired Muppet version of a professor, while the robot carries on. As head of the Berkeley Laboratory for Automation Science and Engineering and a professor in four departments, including electrical engineering and art, Goldberg has a reputation for pulling off surprising feats of robotics. The painted portrait of him that hangs on the wall, which renders his face and torso in clumpy strokes of red and blue, was created by one of his early bots.

While the “flesh” being sutured in his lab is just pink rubber, the technology is the real thing. In 2013, Intuitive began donating used da Vinci systems to robotics researchers at universities around the world. So when Goldberg teaches his da Vinci how to independently perform a surgical task, the same programming could theoretically instruct commercial systems that operate on real patients. “We're driving on the test track,” Goldberg says, “but one day we'll take it out on the road.” He believes that simple surgical tasks will be automated within the next 10 years.

To pull off its automated suturing task, Goldberg's da Vinci calculates the optimal entry and exit points for each stitch, plans the needle trajectory, and tracks the needle's movements using a combination of location sensors and cameras (the needle is painted bright yellow so the computer vision system can recognize it). The task is still tough. Goldberg's published results report that the robot completed only 50 percent of its four-stitch procedures; it most often failed when the second grasper couldn't get a grip on the needle or got it tangled in the thread.
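The yellow paint matters because a bright, unusual color is easy to pick out of an image of pink tissue. A minimal sketch of the idea, assuming a tiny image stored as rows of RGB tuples and hand-picked color thresholds, finds the needle's pixels by color and reports their centroid. It illustrates color-based detection only; the Berkeley system combines cameras with location sensors, and its actual vision code is not reproduced here.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def is_yellow(p: Pixel, high: int = 180, low: int = 100) -> bool:
    # Yellow = strong red and green, weak blue. Thresholds are illustrative guesses.
    r, g, b = p
    return r >= high and g >= high and b <= low


def needle_centroid(image: List[List[Pixel]]) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of yellow pixels, or None if none are found."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, p in enumerate(row):
            if is_yellow(p):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


# A 5x5 patch of pink "tissue" with a short horizontal yellow needle in row 2.
PINK, YELLOW = (230, 150, 160), (250, 220, 40)
patch = [[PINK] * 5 for _ in range(5)]
for x in (1, 2, 3):
    patch[2][x] = YELLOW
print(needle_centroid(patch))  # (2.0, 2.0)
```

Fixed RGB thresholds break down under changing lighting, which is one reason a real tracker would lean on more robust vision methods and on the robot's own position sensors.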

The professor wants to be clear: Even if robots get really good at routine surgical tasks, he still wants human surgeons watching over the machines. What Goldberg envisions is “supervised autonomy.” “The surgeon is still in charge,” he says, “but the low-level aspects of the procedure are being handled by the robot.” If robots carry out the drudge work with precision and uniformity (“think about sewing machines versus hand stitches,” he says), the gestalt of machine and human could create one supersurgeon.

The robots will soon get good enough for clinical use, Goldberg says, because they're starting to learn on their own. In his group's newest experiment with learning from demonstrations, the da Vinci recorded data while eight surgeons of various skill levels used its robotic arms to perform the four-stitch suturing task. A machine-learning algorithm took the visual and kinematic data and divided each stitch into steps (needle positioning, needle pushing, and so forth) that it could tackle sequentially. With this method, the da Vinci could potentially learn any surgical task.
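To give a feel for what dividing a demonstration into steps means, here is a deliberately simple stand-in: it splits a one-dimensional kinematic trace (say, how far a grasper is open over time) wherever consecutive samples jump by more than a threshold. This change-point heuristic is an assumption for illustration; it is not the machine-learning algorithm the Berkeley group used, which worked on combined visual and kinematic data from many demonstrations. The trace values and step names below are hypothetical.

```python
from typing import List, Tuple


def segment_boundaries(trace: List[float], jump: float = 0.5) -> List[Tuple[int, int]]:
    """Split a 1-D trace into half-open (start, end) index ranges at large jumps."""
    if not trace:
        return []
    bounds = []
    start = 0
    for i in range(1, len(trace)):
        if abs(trace[i] - trace[i - 1]) > jump:
            bounds.append((start, i))  # close the current segment before the jump
            start = i
    bounds.append((start, len(trace)))
    return bounds


# Hypothetical grasper-opening trace from one demonstrated stitch.
trace = [0.0, 0.1, 0.1, 1.0, 1.1, 1.0, 0.2, 0.2]
steps = ["position needle", "push needle", "pull through"]  # illustrative labels
for name, (s, e) in zip(steps, segment_boundaries(trace)):
    print(f"{name}: samples {s}-{e - 1}")
```

A practical system would also have to match corresponding segments across demonstrations by different surgeons, whose timing and style vary; this sketch does not attempt that.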

Goldberg believes that learning from demonstration is the only efficient approach. “We think robot learning is the most interesting area now,” he says, “because trying to program from first principles just doesn't scale.” Of course, there are a great many tasks, and complicated ones might require the robot to crunch data from thousands of operations. But data is plentiful. Each year, surgeons do more than 500,000 procedures using the da Vinci, Goldberg notes. What if the surgeons shared the data from all those procedures (with privacy protection for the patients), allowing an artificial intelligence to learn from them? Every time a surgeon uses an assistive robot to successfully stitch up a wound in a kidney, for example, the AI could refine its understanding of that procedure. “The system could extract the data, improve its algorithms, fine-tune the task,” Goldberg says. “We'd be collectively getting smarter.”

Automation in the operating room need not involve a needle, scalpel, or anything else pointy and dangerous. Two companies now bringing their surgical robots to market have developed cameras that move automatically to give surgeons the views they want, almost as if reading their minds. Today, surgeons using Intuitive's da Vinci machine have to stop what they're doing to move the camera or tell an assistant how to position it.

TransEnterix, based in Morrisville, N.C., sought to one-up the da Vinci with its Alf-X surgical system, which is now available in Europe. The system includes an eye-gaze tracker that's built into the surgeon's viewing console and controls the endoscope, which is the thin fiber-optic camera that snakes into the patient's body. As the surgeon scans the view screen, Alf-X moves the camera to keep the surgeon's point of interest in the center of the frame. “The surgeon's eyes become the computer mouse,” explains Anthony Fernando, TransEnterix's chief technology officer.
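Keeping a point of interest centered is a classic feedback problem. A minimal sketch, assuming normalized screen coordinates (0 to 1 on each axis), a fixed target, and a simple proportional controller with a small dead zone so the view doesn't lurch with every flicker of the eyes, might look like this. The gain, dead-zone size, and function names are hypothetical, and this is not a description of how Alf-X actually drives its endoscope.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def camera_step(gaze: Point, center: Point = (0.5, 0.5),
                gain: float = 0.3, dead_zone: float = 0.05) -> Point:
    """Proportional camera adjustment (dx, dy) that nudges the gaze point toward center."""
    ex, ey = gaze[0] - center[0], gaze[1] - center[1]
    if math.hypot(ex, ey) < dead_zone:
        return (0.0, 0.0)  # close enough: hold the camera still
    return (gain * ex, gain * ey)


def track(target: Point, steps: int = 50) -> Point:
    """Simulate panning toward a fixed target; return its final on-screen position."""
    cx, cy = 0.0, 0.0  # camera offset in the same normalized units
    for _ in range(steps):
        gaze = (target[0] - cx, target[1] - cy)  # where the target appears on screen
        dx, dy = camera_step(gaze)
        if dx == 0.0 and dy == 0.0:
            break
        cx, cy = cx + dx, cy + dy
    return (target[0] - cx, target[1] - cy)


print(track((0.9, 0.5)))
```

A gain below 1 makes the camera close only part of the gap on each update, trading speed for a smoother view; the dead zone stops it from chasing tiny eye movements.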

The Sport Surgical System from Toronto-based Titan Medical takes a different approach to automated imaging. Its system inserts tiny robotic cameras into the body cavity, and the cameras swivel and point based on the location of the surgeon's instruments. This type of automation is a sensible first step in robotic surgery, says Titan's Fowler, and one that likely won't alarm the regulators. When the company applies for market approval in Europe this year and in the United States next, Fowler doesn't expect the camera system to raise questions. “But if automation means the computer is controlling the tool that actually makes a cut, we anticipate it will require additional testing,” he says.
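Pointing a camera at the instruments reduces to a little geometry. A sketch, assuming the instrument-tip positions are already known in a frame centered on the camera (x right, y up, z straight ahead, in millimeters) and that the camera should aim at the tips' midpoint, computes the pan and tilt angles. The coordinate convention and the midpoint rule are assumptions for illustration, not Titan's method.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def aim_at_instruments(tips: List[Vec3]) -> Tuple[float, float]:
    """Return (pan, tilt) in degrees that point the camera at the tips' midpoint."""
    if not tips:
        raise ValueError("need at least one instrument tip")
    n = len(tips)
    mx = sum(t[0] for t in tips) / n
    my = sum(t[1] for t in tips) / n
    mz = sum(t[2] for t in tips) / n
    pan = math.degrees(math.atan2(mx, mz))                   # left/right swivel
    tilt = math.degrees(math.atan2(my, math.hypot(mx, mz)))  # up/down swivel
    return pan, tilt


# Two instrument tips 100 mm ahead, one offset to each side: no swivel needed.
print(aim_at_instruments([(10.0, 0.0, 100.0), (-10.0, 0.0, 100.0)]))  # (0.0, 0.0)
```

Aiming at the midpoint keeps both tools in view when they work close together; a real system would also need to zoom out or reposition when the tools spread apart.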

Intuitive will soon face competition from big challengers as well. Medtronic, one of the world's largest medical device companies, is developing a surgical robotic system that it won't talk about yet. Similarly, Google joined forces with Johnson & Johnson last year to start a new venture, called Verb Surgical, which is working on a system “with leading-edge robotic capabilities,” the company said in a release. With all this activity, analysts predict a market boom. A recent report from WinterGreen Research projects that the worldwide market for abdominal surgical robots (the category consisting of the da Vinci and its competitors) will grow from $2.2 billion in 2014 to $10.5 billion in 2021.
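The WinterGreen figures imply a steep compound annual growth rate. Treating 2014 to 2021 as seven yearly compounding periods:

$$
\text{CAGR} = \left(\frac{10.5}{2.2}\right)^{1/7} - 1 \approx 4.77^{1/7} - 1 \approx 0.25
$$

In other words, the market would have to grow by roughly 25 percent every year for the projection to hold.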

Robosurgeon Specialists: The gang at UC Berkeley includes (from left) Siddarth Sen, Stephen McKinley, Lauren Miller, Animesh Garg, Aimee Goncalves, and Ken Goldberg.

If this projection proves true and robotic surgeons become commonplace in operating rooms, it seems only natural that we'll trust them with increasingly complicated tasks. And if the robots prove themselves reliable, the role of human surgeons may change dramatically. One day, surgeons may meet with patients and decide on the course of treatment, then merely supervise as robots carry out their commands.

Ashrafian, the London bariatric surgeon with an interest in artificial intelligence, says the profession may actually be receptive to such a change. The history of medicine shows that surgeons are “fascinated with improving their performance,” he says, and adds that they are willing to adopt any new tools that prove helpful. “No one operates with just a scalpel anymore,” Ashrafian says wryly. If independent robots get good enough at surgical tasks, the same principle will apply. In pursuit of perfection, surgeons may eventually take the surgery out of their own hands.

This article appears in the June 2016 print issue as “Doc Bot Preps for the O.R."

A correction was made to a caption in this article on 6 June 2016.