The Real-Life Dangers of Augmented Reality

Augmented reality can impair our perception, but good design can minimize the hazards

You know your cellphone can distract you and that you shouldn’t be texting or surfing the Web while walking down a crowded street or driving a car. Augmented reality—in the form of Google Glass, Sony’s SmartEyeglass, or Microsoft HoloLens—may appear to solve that problem. These devices present contextual information transparently or in a way that obscures little, seemingly letting you navigate the world safely, in the same way head-up displays enable fighter pilots to maintain situational awareness.

But can augmented reality really deliver on that promise? We ask this question because, as researchers at Kaiser Permanente concerned with diseases that impair mobility (Sabelman) and with using technology to improve patient care (Lam), we see dangers looming.

With augmented-reality gear barely on the market, rigorous studies of its effects on vision and mobility have yet to be done. But in reviewing the existing research on the way people perceive and interact with the world around them, we found a number of reasons to be concerned. Augmented reality can cause you to misjudge the speed of oncoming cars, underestimate your reaction time, and unintentionally ignore the hazards of navigating in the real world. And the worst thing about it: Until something bad happens, you won’t know you’re at greater risk of harm.

There’s a simple way to fix this, of course. The GPS receivers built into wearables already detect the speed of motion (at least outdoors); designers could use them to stop notifications when the user is moving. And many AR wearables have cameras, so image analysis could likewise trigger a safety mode indoors in situations likely to cause trouble. So technically, there are straightforward solutions. But they aren’t likely to be used: The last thing the people buying wearables want is to stop the flow of information—ever. The whole point of the devices is to stay connected no matter what you are doing.
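To make the idea concrete, here is a minimal Python sketch of a speed-based safety mode. It is only an illustration. The `NotificationGate` class, the 0.5-meter-per-second threshold, and the choice to hold alerts rather than discard them are assumptions made for this example, not features of any shipping device.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical cutoff, chosen for illustration: anything at or above
# a slow stroll counts as "moving". Not a value from any real product.
MOVING_THRESHOLD_MPS = 0.5


@dataclass
class NotificationGate:
    """Holds notifications while the wearer is moving, releases them at rest."""

    threshold_mps: float = MOVING_THRESHOLD_MPS
    held: List[str] = field(default_factory=list)

    def offer(self, message: str, speed_mps: float) -> List[str]:
        """Return the messages that may be shown right now.

        speed_mps is the wearer's current speed, for example as
        reported by the device's GPS receiver.
        """
        if speed_mps >= self.threshold_mps:
            # Wearer is on the move: queue the alert instead of showing it.
            self.held.append(message)
            return []
        # Wearer is at rest: show everything queued, oldest first.
        released = self.held + [message]
        self.held = []
        return released


if __name__ == "__main__":
    gate = NotificationGate()
    print(gate.offer("New e-mail", speed_mps=1.4))    # walking: nothing shown
    print(gate.offer("Meeting at 3", speed_mps=0.0))  # stopped: both shown
```

Holding alerts instead of dropping them is one possible compromise: the wearer loses no information, only immediacy.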

So AR wearables pose hazards, and will continue to pose them, at least when the user is on the move. We turned to studies conducted with visually impaired people and others using early versions of wearable AR to find out exactly what those hazards are. Some of these studies also suggest that augmented reality could help some people with disabilities overcome their impairments.

Why would augmented reality be bad for you but good for a fighter pilot? After all, a head-up display, like augmented-reality glasses, overlays information on a person’s view, obscuring it to some extent and potentially causing distraction. The difference is that an aircraft head-up display typically shows information in a highly symbolized and minimalistic way, with little text and no images of people (we’ll talk later about why that is important). And pilots go through extensive training to be able to interpret this information quickly.

To understand how AR wearables affect the way a typical person perceives the world, we considered various natural impairments to vision. Presbyopia, farsightedness, and nearsightedness all affect your ability to focus. Diabetes, glaucoma, and retinitis pigmentosa can create tunnel vision, masking objects in the peripheral visual field. Age-related macular degeneration causes the reverse, leaving only items in peripheral vision clearly defined. A poorly designed AR interface could interfere with vision to the same degree as these diseases.

First consider the general ability to focus. People with an impaired ability to focus either wear corrective lenses or face varying degrees of difficulty in getting around. Have you ever struggled to read a street sign in the distance, or do you find yourself driving more cautiously at night because it’s harder to focus your eyes in dimmer light? Wearables like Google Glass require the ability to quickly shift focus from the real world in the distance to the images presented by the device, which are projected on the retina as if they were about 2.5 meters away. Objects at this distance should be easy for most people with decent distance vision or corrective lenses to handle without strain, but comfortably adjusting focus to see an AR display clearly takes practice, much like adapting to bifocals. And as people age, presbyopia makes it harder to rapidly change focus. In our tests of Google Glass on several hundred people, we discovered that roughly 5 to 10 percent experienced so much eye strain that they gave up. They’d struggle to focus for about 20 seconds and then look away; it was just that uncomfortable. Another 25 percent of the people we tested had difficulty focusing but were able to stick with it, and eventually most were able to handle Glass.

Based on these tests—which were fairly informal and should be repeated in a broader and more rigorous study—we believe that a significant minority of Glass wearers, at least initially, will have difficulty adjusting, and during that period they will be much more distracted, with much longer reaction times, than other users.

But changing focus is not the only issue. AR wearables also obscure your vision. The loss of central vision is so obviously bad that designers of augmented-reality wearables carefully avoid blocking it—at least when the user is looking straight ahead. That’s why AR displays tuck notifications off to the side. This doesn’t avoid the distraction problem—the same one that makes people look at their phones while driving—because you’re meant to shift your gaze, at least briefly, to check out these alerts.

Shifting your view to the side for too long can create problems, of course. But even if you avoid the temptation to glance at a notification appearing at the edge of your vision—waiting, perhaps, until you finish crossing a street—these intrusions still present a danger.

This can be seen from a study conducted as part of the Salisbury Eye Evaluation and published in 2004. Researchers rated 1,344 adults between the ages of 72 and 92 on their ability to keep track of central and peripheral objects on a display screen. Then they had the subjects walk through an obstacle course and scored them on the number of things they bumped into, taking into account the size of their visual fields, balance, and other factors. All else being equal, a 10 percent decrease in the score on the vision test predicted a 4 percent increase in the number of collisions with obstacles. This suggests that those who couldn’t keep proper track of things in their peripheral vision would be more prone to falls.

Peripheral vision is more important than you might think, because it provides a wealth of information about speed and distance from objects. Central vision, despite the great detail it offers, gives you only a rough estimate of movement toward or away from you, based on changes in size or in the parallax angle between your eyes. But objects moving within your peripheral vision stimulate photoreceptors from the center of the retina to the edge, providing much better information about the speed of motion. Your brain detects objects in your peripheral field and evaluates if and how they (or you) are moving. Interfering with this process can cause you to misjudge relative motion and could cause you to stumble; it might even get you hit by a car one day.

It’s ironic, really. You buy an AR device to make you more able, yet you’re likely to experience some of the same problems faced by visually impaired individuals: reduced depth of focus, poorer distance and speed perception, and slower reaction time. Indeed, AR users may be at more risk than someone with a permanent vision problem, because they have developed no compensatory strategies for lost vision.

A 2008 study at Johns Hopkins University, in Baltimore, would suggest just this. Researchers used two sets of subjects—one group with long-standing retinitis pigmentosa and another with normal vision who had their peripheral vision temporarily blocked. Each person walked through a room-size virtual space with statues placed throughout it. Then they each navigated the virtual room one more time, without the objects. The second time the subjects were asked to go to the locations where the statues had been placed.

It turned out that the people with the narrowest natural fields of view overestimated the distance between themselves and the remembered objects compared with normal or less-impaired subjects. This tendency to give yourself more room, in effect, is a way of compensating for poor vision. If you know you might run into an object ahead and you’re concerned about your ability to see it, you’d probably slow your steps down sooner and approach more cautiously than people confident in their ability to dodge it. Subjects who had their vision artificially narrowed, however, didn’t automatically add the extra distance; they trusted their abilities, even though this trust was misplaced.

Let’s talk about those fighter pilots again. They need to pay full attention to what is in front of them and to be able to judge the speed and distance of objects around them accurately. Head-up displays don’t interfere with the ability of peripheral vision to help in this task because they present information in the central field. This works only because that information is stripped down to essential lines and symbols.

Newer AR wearables—like Microsoft HoloLens and, reportedly, the system being developed by stealth startup Magic Leap, in Dania Beach, Fla.—appear to be using the central visual field to display objects integrated into the real world for the most part, not relegating them to the peripheral vision. It appears, however, that these will be detailed, full-color, realistic objects, not simple graphics. And that brings up another concern.

AR is really engaging, and designers are likely to make it more and more so, with sophisticated graphics that go far beyond the simple lines and numbers that appear in a fighter pilot’s display. You will likely still be able to see the real world through the projected image. But our innate neural wiring prefers images of people to objects, and that is the case even if the people are virtual and the objects are real. So if you’re looking at something not human in the real world, but the AR image includes people (or even simple shapes resembling the human form), AR will win the struggle for attention.

We know about this phenomenon through experiments conducted since the 1970s with dot patterns that resemble human stick figures. Researchers call these point-light walkers. These patterns of 11 to 15 points illustrate how our perception is biased to recognize human forms with minimal prompting. With just a dozen or so dots, we can identify a figure as a walking human within 200 milliseconds, even though it takes a person between 1 and 2 seconds to take two steps.

The point-light walker studies demonstrate just how easy it is for an image to grab someone’s attention. And you can bet that content providers are going to try to make attention-grabbing apps. The more apps include humanlike shapes, the more users will focus on them, disregarding the real world and increasing the hazards for the users.

We understand the effects of humanlike images presented through augmented reality. We know less about other, nonhuman images that may also be problematic. At Kaiser Permanente, we have been testing virtual images for use in controlled-exposure therapy, intended to help people overcome certain fears. Some of those fears—like a fear of spiders or other living things—can be triggered by very-low-resolution images. Designers of aviation head-up displays don’t use biological forms to present information, but the people creating AR apps might well do that. So for a small percentage of the population, these apps could trigger surprisingly negative responses.

The news is not all bad, though. Used properly, augmented reality can help those who already have difficulty navigating the world. This is possible because an AR device such as Google Glass carries sensors—at the most basic, a camera and an accelerometer—that can allow the system to track the user’s surroundings and movement in real time and generate cues that improve the person’s safety.

To aid blind individuals, researchers at RMIT University, in Melbourne, Australia, are developing a speeded-up robust features (SURF) algorithm to allow AR devices to recognize traffic lights and pedestrian signals and to predict potential collisions with moving people and objects from video data. To date, the algorithm can recognize 90 percent of the people in view and 80 percent of the objects. If installed in an AR device, along with appropriate audible output—that doesn’t mask the real-world sounds essential to navigation without vision—this technology could be of great benefit to blind users. Even though these people would not need the visual display AR glasses provide, such wearables are still a fine choice for this application because the form factor is suitable, the cost of the mass-produced hardware is relatively low, and the camera is perfectly positioned to “see” what’s ahead.

AR wearables can also help patients with Parkinson’s disease, which causes tremors and stiffness. These people often have a perplexing characteristic known as motor block or freezing of gait (FOG)—the sudden inability to initiate a step or stride—putting them at risk for serious falls. FOG occurs more frequently when someone is coming to a turn, changing direction, maneuvering in a closed space, or passing through a doorway.

Some Parkinson’s disease patients with FOG can walk with a nearly normal stride when presented with the proper visual cues. In the 1990s, podiatrist Thomas Riess, of San Anselmo, Calif., who himself has Parkinson’s, developed several early augmented-reality devices for superimposing such cues. He patented a head-mounted array of LEDs that projects a scrolling ladder of bars on a transparent screen in front of the patient. In tests, Riess’s device was able to improve his own and other Parkinson’s disease patients’ ability to walk without freezing.

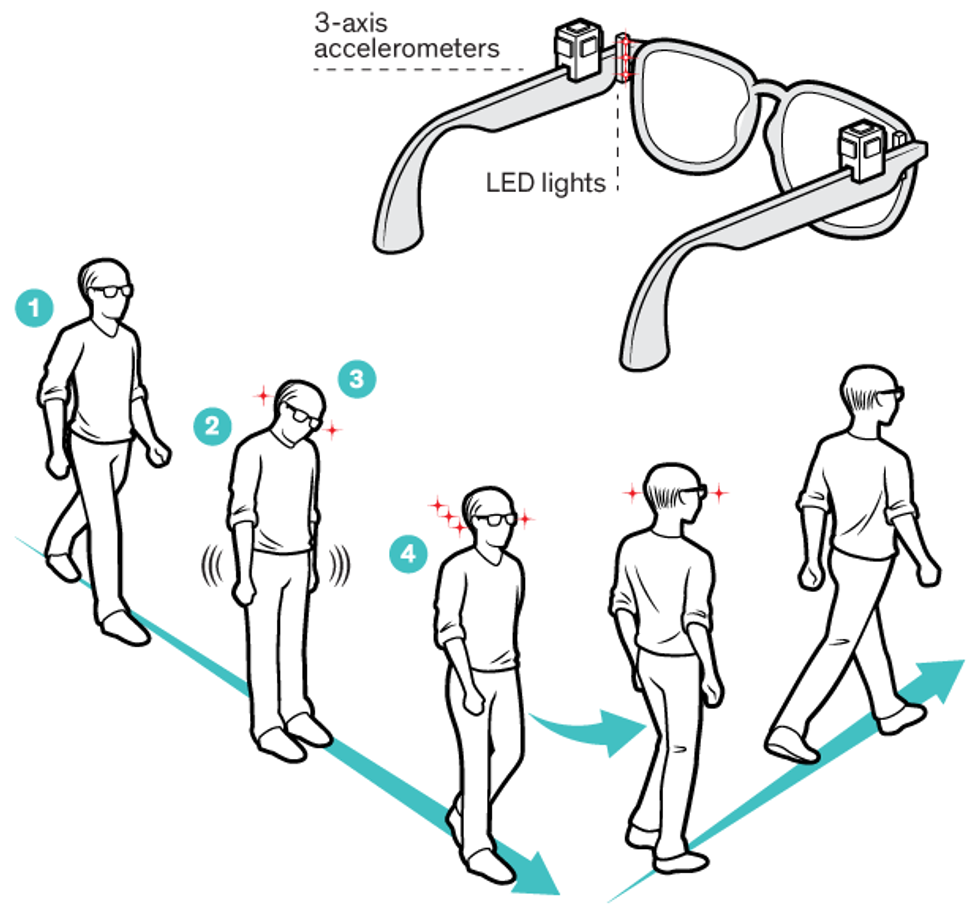

Clearing the FOG

Freezing of gait (FOG), a sudden inability to take that next step, puts Parkinson’s disease patients at risk of falls. Visual cues can, however, prevent FOG in some patients. At the Department of Veterans Affairs Rehabilitation R&D Center in Palo Alto, Calif., researchers used motion sensors and LEDs attached to eyeglasses and connected to a wearable computer to determine if augmented reality could help prevent FOG.

In 2002, at the Department of Veterans Affairs Rehabilitation R&D Center in Palo Alto, Calif., with Riess’s cooperation, one of us (Sabelman) also conducted a test to determine whether computer-generated cues from wearables could reduce the time Parkinson’s patients spent in FOG. In this experiment, the virtual cues were presented only when needed. To do so, Sabelman placed LEDs and 3-axis accelerometers on the corners of eyeglass frames to measure head motion and to flash lights when the computer detected the onset of FOG. This method takes advantage of the fact that early in a FOG state patients tilt their heads forward to visually confirm the position of their feet.

The computer used the head-tilt angle and other sensor inputs to determine the intended motion, at which point it flashed the lights to simulate continuing that motion. If the system determined that a patient froze while trying to turn left, for example, the lights on the left side would flash more slowly than those on the right, because when you are turning you see more motion on the outside of your turn than on the inside. If a patient froze while moving forward, the LEDs would alternately flash in what had been the rhythm of the patient’s steps before the freeze. In a 10-meter walking task, this system reduced FOG by almost 30 percent. We think that Google Glass, or newer symmetric AR devices with displays for the left and right eyes, paired with significantly faster processors, could do much better.
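The cue-selection rule just described can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not the software used in the 2002 experiment: the `CuePattern` type, the `cue_for` function, and the factor of 2 by which the inside-of-turn LEDs are slowed are all hypothetical, and the sketch leaves out the detection of FOG onset from head tilt and other sensor inputs.

```python
from dataclasses import dataclass

# Hypothetical ratio: how much slower the LEDs on the inside of a turn
# flash than those on the outside. Chosen only for illustration.
INSIDE_SLOWDOWN = 2.0


@dataclass(frozen=True)
class CuePattern:
    left_period_s: float    # seconds between flashes of the left LEDs
    right_period_s: float   # seconds between flashes of the right LEDs
    right_offset_s: float   # delay of the right LEDs relative to the left


def cue_for(intended_motion: str, step_period_s: float) -> CuePattern:
    """Pick a flash pattern that simulates continuing the intended motion.

    intended_motion is "forward", "left", or "right"; step_period_s is the
    time between the patient's steps before the freeze.
    """
    if step_period_s <= 0:
        raise ValueError("step_period_s must be positive")
    if intended_motion == "forward":
        # Alternate sides so that one flash occurs per pre-freeze step.
        return CuePattern(2 * step_period_s, 2 * step_period_s, step_period_s)
    if intended_motion == "left":
        # Inside of the turn (left) shows less motion, so it flashes slower.
        return CuePattern(step_period_s * INSIDE_SLOWDOWN, step_period_s, 0.0)
    if intended_motion == "right":
        return CuePattern(step_period_s, step_period_s * INSIDE_SLOWDOWN, 0.0)
    raise ValueError(f"unknown motion: {intended_motion!r}")


if __name__ == "__main__":
    print(cue_for("left", 0.5))
    print(cue_for("forward", 0.5))
```

A real system would also need a tuned ratio between the two sides and a decision about when to stop cueing once the patient resumes walking.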

Elsewhere, researchers at Brunel University in London are developing systems that project lines in front of patients. In their current state of development, these are clumsy and difficult to use, but they show the power of AR to solve this particular problem.

The message of all this research is clear. Because AR hardware and applications can impair their users’ vision, their designers owe it to the world to be careful. What’s more, they need to test their products on people of all ages and physical abilities. They need to evaluate users’ reaction times while their apps are running. They need to put wearers through real-world obstacle courses with opportunities to stumble. And they need to determine just how much content you can present via an AR app before it becomes dangerous.

The manufacturers also need to educate the people who buy and use these devices about the hazards, perhaps through mandatory training games that teach the risks before users step out into the real world. After all, when we get our hands on new gadgets, our tendency is to start using them right away and to read the instructions later, if at all. But new users of wearable AR devices should start slowly, perhaps practicing at home, or in a park or other safe environment. That way users would know there are risks and accept them, or not, consciously.

Software for augmented-reality and virtual-reality wearables is emerging that includes cognitive assessment tools, so any app developer could, if he or she desired, build in diagnostics to measure things like reaction time and balance. With these tools, apps could guide users safely through the initial training and make them understand the dangers. The future of this technology will hinge on whether such improved tools are used to rigorously test new products.

About the Author

Eric Sabelman is a bioengineer in Kaiser Permanente’s neurosurgery department, where he uses deep brain stimulation to address Parkinson’s disease. He has tested augmented reality as a tool to help Parkinson’s patients.

In his personal experiments with Google Glass, Sabelman hoped his strabismus, a condition of being walleyed, might make the device easier to use. But Sabelman is also nearsighted. “My experiment looking at Glass with my right eye while the left eye looks straight ahead didn’t work, because I can’t focus on the display,” he says.

Coauthor Roger Lam researches and evaluates emerging health information technology for Kaiser Permanente. To begin his experiments with Google Glass, he drove a powerboat to pick up his Google Glass prototype at a secret location on the site of the former Alameda Naval Air Station. He made sure to turn off the display before getting back on the boat.