Digital Actors Go Beyond the Uncanny Valley

Computer-generated humans in movies and video games are paving the way for new forms of entertainment

Say hello to Ira. His head is visible on a screen as though he’s in a videoconference. He seems to be in his early 30s, with a shaved head, a pronounced nose, and thin eyebrows. Ira seems a little goofy and maybe just a wee bit strange. But unless you knew his full name—it’s “Digital Ira”—you probably wouldn’t guess that he’s nothing but bits.

In the background, a graphics processor churns through the calculations that determine every roll of his eyes, every crease or bulge of his skin, and every little arch of his eyebrow. Digital Ira can assume almost any expression—joy, befuddlement, annoyance, surprise, concern, boredom, or pleasure—in about the same amount of time it takes a human to do so.

As Digital Ira affirms, graphics specialists are closing in on one of their field’s longest-standing and most sought-after prizes: an interactive and photo-realistically lifelike digital human. Such a digital double will change the way we think about actors, acting, entertainment, and computer games. In movies, digital doubles already replace human actors on occasion, sometimes just for moments, sometimes for most of a feature film. Within a decade or so, computer-game characters will be as indistinguishable from filmed humans as their movie counterparts. And in time, this capability will help bring movies and games together, and out of the union will come entirely new forms of entertainment.

This blurring of the real and the digital became possible in movies recently when moviemakers reached a long-anticipated milestone: They crossed the “uncanny valley.” The term has been used for years to describe a problem faced by those using computer graphics to depict realistic human characters. When these creations stopped looking cartoonish and started approaching photo-realism, the characters somehow began to seem creepy rather than endearing. Some people speculated that the problem could never be solved; now it has proved to be just a matter of research and computing power.

Producing a fully realistic digital double is still fantastically expensive and time-consuming. It’s cheaper to hire even George Clooney than it is to use computers to generate his state-of-the-art digital double. However, the expense of creating a digital double is dependent on the costs of compute power and memory, and these costs will inevitably fall. Then, by all accounts, digital entertainment will enter a period of fast and turbulent change. An actor’s performance will be separable from his appearance; that is, an actor will be able to play any character—short, tall, old, or young. Some observers also foresee a new category of entertainment, somewhere between movies and games, in which a work has many plotted story lines and the viewer has some freedom to move around within the world of the story.

Creators of entertainment also dream that technology will someday make itself invisible. Instead of painstakingly using cameras and computers to share their visions, they hope to be able to directly share the rich worlds of their imaginations using a form of electronic mind reading far more sophisticated than the brain-scan technology of today: If you can think it, you will be able to make someone see it.

“That is the future I wish for—where the hard edges of technology disappear and we return to magic,” says Alexander McDowell, a professor at the University of Southern California’s School of Cinematic Arts.

That magic means not only being able to step inside a realistic virtual world without the cumbersome—and, for some, nauseating—head-mounted screens that create virtual experiences today. It means being able to seamlessly merge the real and the virtual, to allow computer-generated beings to walk among real humans.“I want to be able to jump down the rabbit hole—not just metaphorically, not just visually, but really jump inside” a story or virtual experience, says Tawny Schlieski, a research scientist at Intel Labs. “And I want the rabbit to follow me home.”

Today’s world of moviemaking—in which realistic computer-generated humans are an option, albeit an expensive one—was hard to imagine just 10 years ago. In 2004, the state-of-the-art computer-generated human was a creepily cartoonish Tom Hanks in The Polar Express. And the uncanny valley seemed an impossibly wide chasm.

But just four years later, The Curious Case of Benjamin Button spanned the gap, in the opinion of most movie effects specialists. This motion picture, shifting seamlessly between Brad Pitt and a computer-generated replica of him, revolves around a boy who is born old and ages backward. It was “the Turing test” for computer graphics, says Alvy Ray Smith, who pioneered the use of computer graphics in movies at Lucasfilm in the 1980s and cofounded computer-graphics trailblazer Pixar. “I’d swear it was Brad Pitt right there on the screen—and I’m an evil-eyed, annoying computer-graphics expert,” Smith says. The Turing test, named for the computer pioneer Alan Turing, gauges whether a machine is indistinguishable from a human and was originally proposed in 1950 to test natural-language conversations.

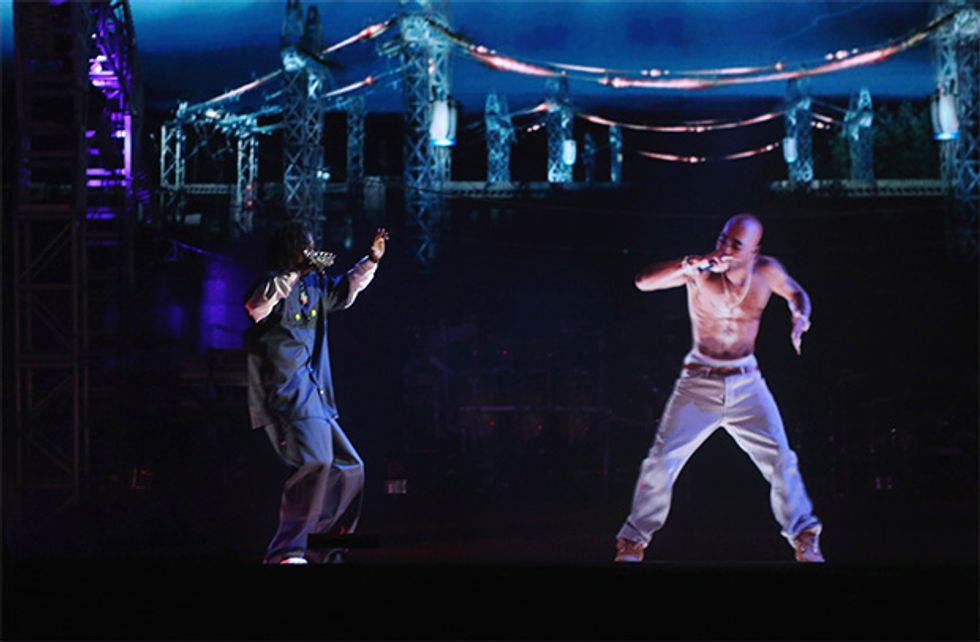

Since Benjamin Button, pioneering moviemakers have begun to buttress the bridge across the uncanny valley, often for short scenes where it is impossible or inconvenient to film an actor directly. For example, a digital double replaced actor Guy Pearce in a few scenes in Iron Man 3 because they were filmed after Pearce had grown a beard for his next project, reports Joe Letteri, senior visual-effects supervisor at New Zealand–based Weta Digital. And a digital version of the late rapper Tupac Shakur performed at the Coachella music festival in 2012.

Creating these scenes took a lot of time and computing power. Each frame of a movie that uses computer-generated humans may take as many as 30 hours to process, using many racks of extremely high-powered computer servers. And just 5 minutes of film requires 7200 frames, at the now standard rate of 24 frames per second. Those figures mean that realistic digital doubles are beyond the reach of video-game creators—at least for now.

“The technology that we’ve developed does push down to games, but it’s driven on the film side,” says Letteri. Weta, which produced the effects for such blockbusters as Avatar, The Lord of the Rings, and the 2005 King Kong, is the world’s leading practitioner of the effects technique known as motion capture. “Games have to put an image on the screen every 60th of a second; for us in movies, we will spend tens of hours getting a frame right.”

Game makers believe that digital doubles, indistinguishable from human actors, will start appearing in games within a decade. It’s Digital Ira that has made them so optimistic. Digital Ira starts with image data created by a sophisticated 3-D scanning system at USC’s Institute for Creative Technologies, in Los Angeles. Graphics processors turn the data into a moving image by using calculations that determine not only the movement of the bones, muscles, and skin but also how light in the scene needs to reflect and scatter to best mimic how real light illuminates a real face as it talks, smiles, or frowns. Each individual frame appears on screen as it becomes available, at a rate of 60 frames per second. So far, two versions of a moving, speaking Digital Ira have been demonstrated: by Nvidia at the March 2013 GPU Technology Conference and by Activision at the July 2013 Siggraph conference. The Nvidia version ran on a Nvidia graphics card performing 4.9 trillion mathematical operations per second; the Activision version ran on a PlayStation 4 with a 1.84-teraflop graphics processor.

For game makers, being able to demonstrate a photo-realistic human created by computer graphics software on the fly was like throwing a rope across the uncanny valley—it made it possible for them to imagine that they, too, will be able to bridge that expanse soon. Says Jen-Hsun Huang, CEO of Nvidia, “In technology, if something is possible now, it will take less than 10 years to make it practical.” He figures that a Digital Ira–level character will be available to computer-game producers within 5 years and that “we’ll see video games with as much realism as Gravity in less than 10 years.” At first, he adds, the technology will be used in limited ways, in games with relatively small numbers of characters or less complex worlds. And questions remain. It’s one thing to present a digital human on the screen and expect you to see it as real; it’s quite another to maintain the illusion of reality when you’re interacting with the character, not just observing it.

The first major change we’ll see in the entertainment world, probably about 10 years from now, when moviemakers and game creators are cranking out digital humans with ease, will be the separation of the actor’s appearance from the actor’s performance. In Benjamin Button, Brad Pitt’s character changed dramatically but was always recognizable as Brad Pitt. Soon though, says graphics pioneer Smith, “it won’t matter what the actor looks like anymore. He can be any age, any color. He can be a seven-foot basketball player with polka dots.” But far from being the end of human actors, such advances may help actors blossom in ways not possible today. Imagine how many more roles Meryl Streep and Judi Dench could play if they weren’t limited by their ages and physical conditions.

Next, when game characters are indistinguishable from movie actors, we’re likely to see the emergence of a type of entertainment that lies somewhere between movies and games—Intel’s Schlieski uses the informal term “cinemagraphic games.” It will be years, maybe a decade or more, before the technical and creative factors align to produce a breakthrough work in the fledgling genre. But in the meantime, there have been a few glimmers of what’s coming.

The game The Walking Dead is probably the best example, says Schlieski. In it, players have to do more than shoot; they have to make choices, such as whether to lie to characters, including a little girl and a police officer. The choices come back to help—or haunt—them later in the game. For example, after you’ve picked up the little girl you meet some strangers, and you need to lie to the strangers about your relationship to the girl. At that point, if the girl trusts you, she will support your lie and help you; if she doesn’t, she won’t.

Most efforts to blend movies and games have focused on the creation of a world for the player to explore. But just sending people into a virtual place to play isn’t real entertainment.

“Stories have to be authored,” says USC’s McDowell. “But multiple threads can exist, and the audience can walk around in a story’s landscape, following a major character and then diverting when another character seems more interesting.” Theater artists have already started to embrace this model. Sleep No More is an interactive version of Macbeth that has been playing since 2011 in New York City. In the production, actors perform throughout a five-story building. Audience members move freely, following sets of actors or exploring the many rooms as they wish. The digital equivalent of this will come, although the details of how it will become a compelling experience are elusive. Schlieski believes the solution will hinge on plots. “What drives this industry is storytellers,” she says. “Storytelling is an art and a gift.”

The entertainment industry will spend decades exploiting the possibilities of digital characters. And much of that will be mere preamble to real revolution, four or five decades from now: teaching computers to act.

Today’s computer-generated characters get their “acting” from real actors, using motion-capture technology: Live actors perform on a special stage, wearing tight jumpsuits studded with dozens of reflectors placed at key points on their bodies; they may also have reflectors or fluorescent paint on their faces. In some cases, head-mounted cameras capture their facial motions from multiple angles.

Motion-capture technology will get easier and more natural to use. But, says Javier von der Pahlen, director of creative research and development at the Central Studios division of Activision, “I would like to see a character that is expressing itself, not precaptured, not generating canned expressions or creating them from semantic rules, but creating expressions by the same things that create our expressions.”

Weta’s Letteri describes this approach as turning the process of generating characters around and driving it “from the inside out—to be able to ask, What is this character thinking, and how does that relate to his face?”

To do this will require overcoming many huge challenges. A truly digital character, one that does its own acting rather than conveying the acting of another actor, will be created only by merging artificial-intelligence technology that passes the Turing test in both verbal and nonverbal responses—physical gestures, facial expressions, and so on—with perfectly rendered computer graphics. That will be really hard.

Computer scientists don’t think the challenge is insurmountable, but they agree that the complexity of the challenge is awe inducing, because even the nature of the goal is hard to spell out. “We don’t have a clue how to replace actors,” Smith says. “We don’t know what acting is. None of us can explain how an actor convinces you that he or she is someone other than who they are.”

Not only do scientists not know how actors generate emotions from a script, they don’t even know how genuine emotions work to change the appearance of the face and body.

“Researchers have yet to agree whether we pull our facial expression first—a smile, say—and then feel happy, or if we feel happy and then smile to communicate it,” says Angela Tinwell, senior lecturer in games and creative technologies at the University of Bolton, in the United Kingdom.

Sebastian Sylwan, Weta’s chief technology officer, is optimistic nevertheless. “Computer graphics is at most 30 years old,” he notes. “And digital doubles have just arrived.”

A half century from now, when we’ve become accustomed to seeing utterly convincing digital doubles, what will we wish we had done today? Collected the raw data to perfectly re-create historical figures, says Nvidia’s Huang. Right now, we should be using digital motion-capture techniques to record world figures giving speeches, being interviewed, or performing, just as painters captured influential figures beginning in the 17th century and photographers in the 19th. “We should capture Obama, the Dalai Lama, all the people of consequence in the world,” Huang says. “Once we capture it, we can enjoy it in ever-increasing fidelity as computer graphics advances.”

Farther out, probably more than 30 years from now, digital humans—maybe displayed as holograms, maybe as robots—will be able to interact convincingly and satisfyingly with us. Ultra-advanced processors and software will let them interpret our thoughts and feelings and respond inventively with humor and compassion. “This could help reduce loneliness in an increasingly aging population,” says Tinwell.

It’s possible that these digital entities will employ a form of astoundingly sophisticated mimicry, mastering the memories, the social conventions, and the messy welter of emotions that underpin human relationships. They’ll either understand us or fake it so well it won’t matter.

And to quote the movie title, that’s entertainment.

What Could Possibly Go Wrong?

When the Fake Trumps the Real

In a world of synthetic faces, human expression could become hard to read

Illustration: MCKIBILLO

Computer-generated characters that are indistinguishable from humans are today typically limited to cameo appearances in action movies, except in a few rare and costly feature films. But when they become the norm on video-game displays as well as on cinema screens, they might not only play interactive games with us. They might start playing lasting games with our minds.

The human brain evolved to understand the subtle cues of people’s emotions in order to anticipate their future behavior. When we lived in caves, that understanding could mean the difference between life and death. While computer-generated characters may, on the surface, be indistinguishable from their human counterparts, no one believes they’ll be reflecting all the nuances of human expression anytime soon. Even so, people will likely spend more and more time interacting with them. They’ll not only be entertainers but also teachers and customer-service agents. And there lies the danger, according to Angela Tinwell, senior lecturer in games and creative technologies at the University of Bolton, in the United Kingdom. When children grow up spending much of their days interacting with realistic computer-generated characters, says Tinwell, they may be handicapped when it comes to interacting with the real thing.

On the other hand, it’s equally possible that the brain will adapt and learn new ways to distinguish the genuine human from the virtual impostor, says Ayse P. Saygin, associate professor of cognitive science and neurosciences at the University of California, San Diego.

But even in that best-case scenario lurks a major potential problem. A person who spends much of his childhood in virtual worlds might not want to leave them, Saygin says. When a game can adapt to your mood better than most acquaintances do, people might prefer the game to the friends. “When you go into the real world and people, unlike a game, are not engineered to entertain you and cater to your needs, or don’t understand automatically when you are annoyed or happy, you will want to go home and hang out with your avatar friends,” says Saygin. “I absolutely believe this technology will do a lot of good. But replacing human relationships could be the dark side of this.”

Writers and directors have already started to explore such themes. The 2013 film Her spins a tale around a man who develops a romantic relationship with what the movie calls an intelligent “operating system,” akin to an extremely smart Siri.

“Sometimes,” Saygin says, “I think, in the Darwinian way, that things that aren’t good for us won’t be part of our future. But look at smoking—people got addicted even though it was bad for them, and we are only now correcting that behavior.” —T.S.P.

This article originally appeared in print as “Leaving the Uncanny Valley Behind.”