This week, China reclaimed the distinction of running the world’s fastest supercomputer; it last held that first-place ranking for eight months starting in October 2010 with its Tianhe-1A machine. Its new Tianhe-2 (“Milky Way–2”) computer is putting its competition to shame, however, performing calculations about as fast as the most recent No. 2 and No. 3 machines combined, according to Jack Dongarra, one of the curators of the list of 500 most powerful supercomputers and professor of computer science at the University of Tennessee, Knoxville.

But in its ability to search vast data sets, says Richard Murphy—senior architect of advanced memory systems at Micron Technology, in Boise, Idaho—the 3-million-core machine is not nearly as singular. And it’s that ability that’s required to solve many of the most important and controversial big data problems in high-performance computing, including those at the recently revealed Prism program, at the U.S. National Security Agency (NSA).

Murphy chairs the executive committee of an alternative supercomputer benchmark called Graph 500. Named for the mathematical theory behind the study of networks and numbered like its long-established competitor, Top500, Graph 500 quantifies a supercomputer’s speed when running needle-in-a-haystack search problems.

While China’s Tianhe-2 claims a decisive victory in the newest Top500 list, the same machine doesn’t even place in the top 5 on the most recent Graph 500 list, Murphy says. (Both rankings are recompiled twice a year, in June and November.)

It’s no mark against the Tianhe-2’s architects that a No. 1 ranking in Top500 doesn’t yield the highest Graph 500 score too. In fact, as IEEE Spectrum previously reported, as computers gain in processing power, their ability to quickly access their vast memory banks and hard drives very often decreases. And it’s this latter ability that’s key to data mining.

“If you look at large-scale commercial problems, the data is growing so fast compared to the improvement on performance you get from Moore’s Law,” says Murphy, who previously worked for Sandia National Laboratories, in New Mexico.

The big data sets that supercomputers must traverse today can be staggering. They can be as all-encompassing as the metadata behind every call or text you send and receive, as the latest revelations about the NSA’s operations suggest. On a slightly less Orwellian note, other big data sets in our digital lives include every purchase on Amazon.com or every bit of content viewed on Netflix, data that is constantly combed through to discover new connections and recommendations.

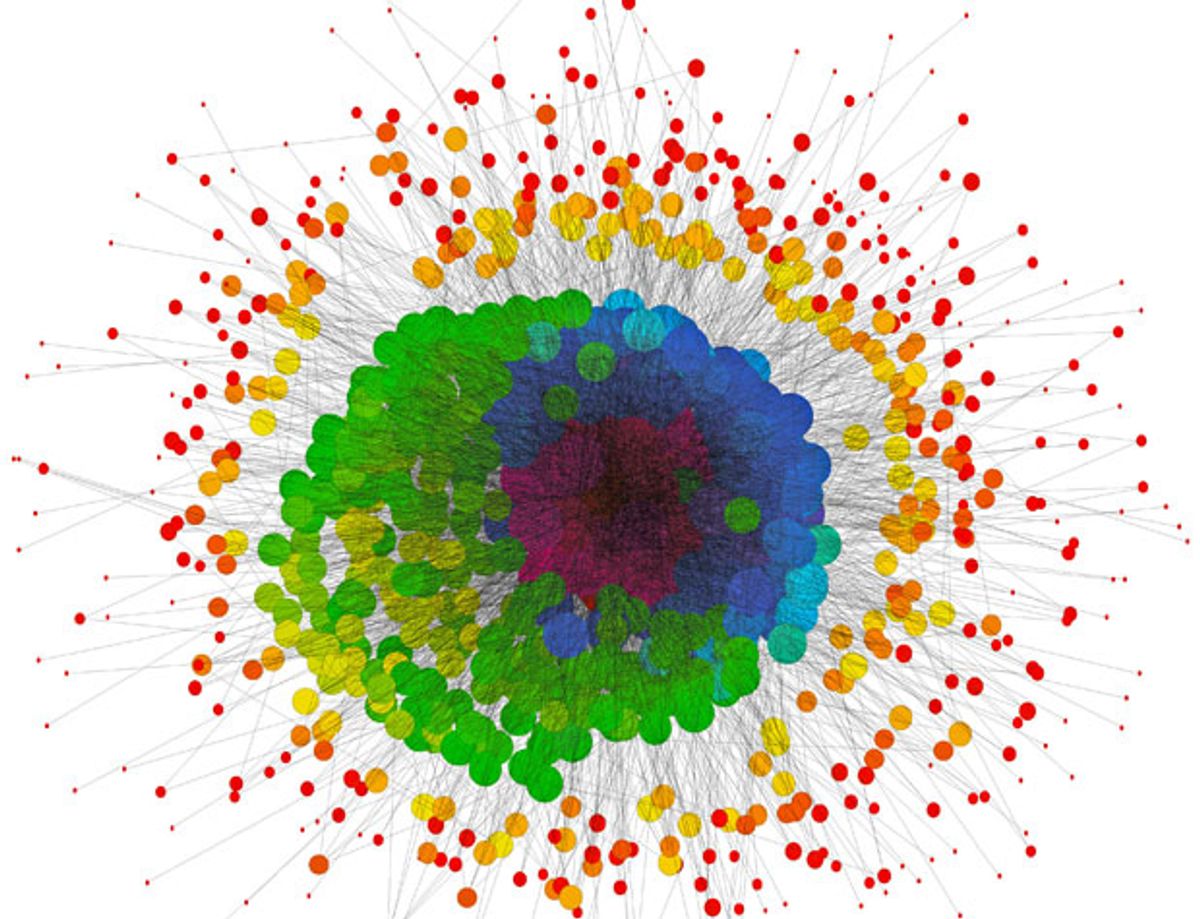

Each of these everyday calculation problems is just another example of the general problem of searching the graph of connections between every point in a data set. Facebook, in fact, called out the problem by name earlier this year when it launched its Facebook Graph Search, a feature that enables users to find, say, restaurants or music that friends like, or to make other, more unusual connections.

The difference between such tasks and the simulations supercomputers typically run boils down to math, of course. Dongarra says that at its heart, the Top500 benchmark tracks a computer’s ability to race through a slew of floating-point operations, while Graph 500 involves rapid manipulation of integers, mostly pointers to memory locations.
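To make that contrast concrete, here is a toy sketch in Python. It is not the reference code of either benchmark: the function names, the five-vertex graph, and the edge counter are all invented for illustration. The first function does nothing but floating-point multiply-adds, the kind of arithmetic Top500 rewards. The second is a breadth-first search, the kind of traversal at the heart of Graph 500, in which nearly every step is an integer lookup that jumps to another spot in memory.

```python
from collections import deque


def floating_point_kernel(xs, ys):
    """Arithmetic-bound work: a dot product, one multiply-add per element."""
    total = 0.0
    for x, y in zip(xs, ys):
        total += x * y
    return total


def breadth_first_search(adjacency, root):
    """Memory-bound work: hop between neighbor lists using integer vertex ids.

    Returns the parent of each reached vertex and the number of edges examined.
    """
    parent = {root: root}          # the root is recorded as its own parent
    edges_examined = 0
    frontier = deque([root])
    while frontier:
        vertex = frontier.popleft()
        for neighbor in adjacency[vertex]:   # each lookup lands somewhere new in memory
            edges_examined += 1
            if neighbor not in parent:
                parent[neighbor] = vertex
                frontier.append(neighbor)
    return parent, edges_examined


# Hypothetical five-vertex graph, stored as adjacency lists of integer ids.
toy_graph = {
    0: [1, 2],
    1: [0, 3],
    2: [0, 3],
    3: [1, 2, 4],
    4: [3],
}

dot = floating_point_kernel([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
parent, edges_examined = breadth_first_search(toy_graph, 0)
```

On a graph this small the difference is invisible, but on one with billions of edges the neighbor lookups scatter across memory, so the traversal spends most of its time waiting for data rather than computing.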

But Murphy adds that the bottleneck for Graph 500 tests is often not in the processor but instead in the computer’s ability to access its memory. (Moving data in and out of memory is a growing problem for all supercomputers.)

“Graph 500 is more challenging on the data movement parts of the machine—on the memory and interconnect—and there are strong commercial driving forces for addressing some of those problems,” Murphy says. “Facebook is directly a graph problem, as is finding the next book recommendation on Amazon, as [are] certain problems in genomics, or if you want to do [an] analysis of pandemic flu. There’s just a proliferation of these problems.”

Both the Top500 and Graph 500 benchmark rankings are being released this week at the International Supercomputing Conference 2013, in Leipzig, Germany.

Margo Anderson is senior associate editor and telecommunications editor at IEEE Spectrum. She has a bachelor’s degree in physics and a master’s degree in astrophysics.