Low-Power Supercomputers

A new climate-modeling supercomputer will use the processors now found in cellphones and other portable devices

Attempts to calculate the weather numerically have a long history. The first effort along these lines took place not in some cutting-edge university or government lab but on what the lone man doing it described as “a heap of hay in a cold rest billet.” Lewis Fry Richardson, serving as an ambulance driver during World War I and working with little more than a table of logarithms, made a heroic effort to calculate weather changes across central Europe from first principles way back in 1917. The day he chose to simulate had no particular significance—other than that a crude set of weather-balloon measurements was available to use as a starting point for his many hand calculations. It’s no surprise that the results didn’t at all match reality.

Three decades (and one world war) later, mathematician John von Neumann, a computer pioneer, returned to the problem of calculating the weather, this time with electronic assistance, although the limitations of the late-1940s computer he was using very much restricted his attempt to simulate nature. The phenomenal advances in computing power since von Neumann’s time have, however, improved the accuracy of numerical weather forecasting and allowed it to become a routine part of daily life. Will it rain this afternoon? Ask the weatherman, who in turn will consult a computer calculation.

Like weekly weather forecasting, climate simulation has benefited greatly from the steady advance of computational power. Nonetheless, there’s still a long way to go. In particular, predicting the influence of clouds remains a weak link in the chain of reasoning used to make projections about changes in Earth’s climate. Part of the reason is that the resolution of the global climate models in use today is too coarse to simulate individual cloud systems. To gauge their effect, today’s models must rely on statistical approximations; some climatologists would be much happier if they could model cloud systems directly. The problem is that the computing oomph for that isn’t available today. And it probably won’t be anytime soon.

Microprocessor clock speeds are no longer increasing with each new generation of chip fabrication. So to obtain more computational horsepower, the usual strategy is to gang together many processors, each working on a piece of the problem at hand. But that solution has drawbacks, not the least of which is that it multiplies electrical demands. Indeed, the cost of the power required to run such computer systems can exceed their capital costs. This is an industry-wide problem. Companies with large computing needs, such as Google, will build facilities near hydroelectric dams to get inexpensive electricity for their data centers, which can consume 40 megawatts or more.

This power crisis also means that high-performance computing for such things as climate modeling is not going to advance at anything like the pace it has during the last two decades unless fundamentally new ideas are applied. Here we describe one possible approach. Rather than constructing supercomputers as most of them are made now, using as building blocks the kinds of microprocessors found in fast desktop computers or servers, we propose adopting designs and design principles drawn, oddly enough, from the portable-electronics marketplace. Only then will it be possible to reduce the power consumption and cost of a next-generation supercomputer to a manageable level.

Back in the 1970s and 1980s, the high-performance computing industry was focused on building the equivalent of Ferraris—high-end machines designed to drive circles around the kinds of computing hardware a normal person could buy. But by the late 1980s and early 1990s, research and development in the rapidly growing personal-computer industry dramatically improved the performance of standard microprocessors. The ensuing pace of advance was so quick that clusters of ordinary processors, the Fords and Volkswagens of the industry, all driving in parallel, soon proved as powerful as specially designed supercomputers—and at a fraction of the cost.

That development benefited the many scientific groups that required high-performance computers to get them around the next bend on the research frontier. The equipment may not have been quite as efficient as custom-built supercomputers—either computationally or as measured by the power they consumed—but there was no sticker shock, and they were relatively easy to trade in for newer models when the time came.

Although it now seems obvious that using mass-produced commercial microprocessors was the best way to build supercomputers, that lesson took a while to sink in. Over and over since the mid-1990s, some research group would attempt to build special-purpose computing hardware to solve a particular problem with great computational efficiency and elegance. But such efforts would regularly be eclipsed by brute-force improvements in the performance of mass-produced microprocessors, for which transistor density and clock speeds were doubling every 18 months or so. That’s why for more than a decade only the boldest, most ambitious research programs have veered from using commercial off-the-shelf technology for their high-performance computing needs.

But the improvements in clock speeds that have for so long come from shrinking the size of transistors have now come to an end. The reason, broadly speaking, is that to maintain the historical connection between reduced transistor size and increased speed would require too much power—the chip would cook. So to boost computing performance, manufacturers are increasingly putting more than one microprocessor core on each chip.

What’s more, research and development investments today are rapidly shifting from desktop PCs toward handheld devices, which are built around simple low-power cores. Because they are designed to satisfy just the requirements of the application at hand, they draw substantially less power than do desktop microprocessors, which are intended for general use. The performance of such embedded processors is more limited, of course, but they can offer greater computational and energy efficiency. And these low-power processors are now starting to offer the capability for double-precision floating-point operations, which makes them suitable for scientific computing.

Just as the architects of today’s supercomputers first started advocating the use of building blocks based on desktop-computing technology in the early 1990s, we are now urging our colleagues to use commonplace designs—processors intended for portable, embedded-computing applications.

At first blush it might seem strange to want to build a supercomputer using the kind of pared-down processors found in, say, cellphones. But when you consider that both applications are constrained by the amount of power used, this notion begins to makes sense. Indeed, in both cases efficiency is everything. The approach we’re suggesting follows logically from supercomputer pioneer Seymour Cray’s 1995 statement “Don’t put anything in that isn’t necessary.”

Consider also that the embedded-processor market has recently taken off with the emergence of high-performance portable electronics like smartphones, which can perform very sophisticated tasks. Now, for example, you can buy something that handles speech recognition, gaming, Web browsing, even today’s cutting-edge augmented-reality apps—and fits in the palm of your hand.

The availability of such wonders helps to explain why portable devices now account for a larger share of the microprocessor market than personal computers do. And because embedded microprocessors are comparatively simple to design, they can be quickly adapted. Whereas you would expect the vendor of a desktop or server processor to develop just one new CPU core every two years or so, the maker of a typical embedded processor may generate as many as 200 variants every year.

The companies serving the embedded-chip market can keep up that breakneck pace because they have the tools to offer rapid turnaround when a client requests a power-efficient, semicustom chip for its latest mobile gizmo. And those companies can also provide software—compilers, debuggers, profiling tools, even complete Linux operating systems—tailored to each specific chip they sell.

Supercomputer makers would do well to leverage these capabilities to build power-efficient number-crunching machines. This approach differs from past attempts to customize chips for supercomputers, because we envision using designs and design tools that have already been tested in industry. Doing so would allow the construction of unique supercomputers, each one tailored to meet the particular requirements of the task at hand. “What interesting problems can I solve with the best machine available?” will no longer be the question. Instead, researchers will ask, “What kind of hardware do I need to address the scientific issue that’s important to me?” And getting the money to build and run it will finally be within the realm of possibility.

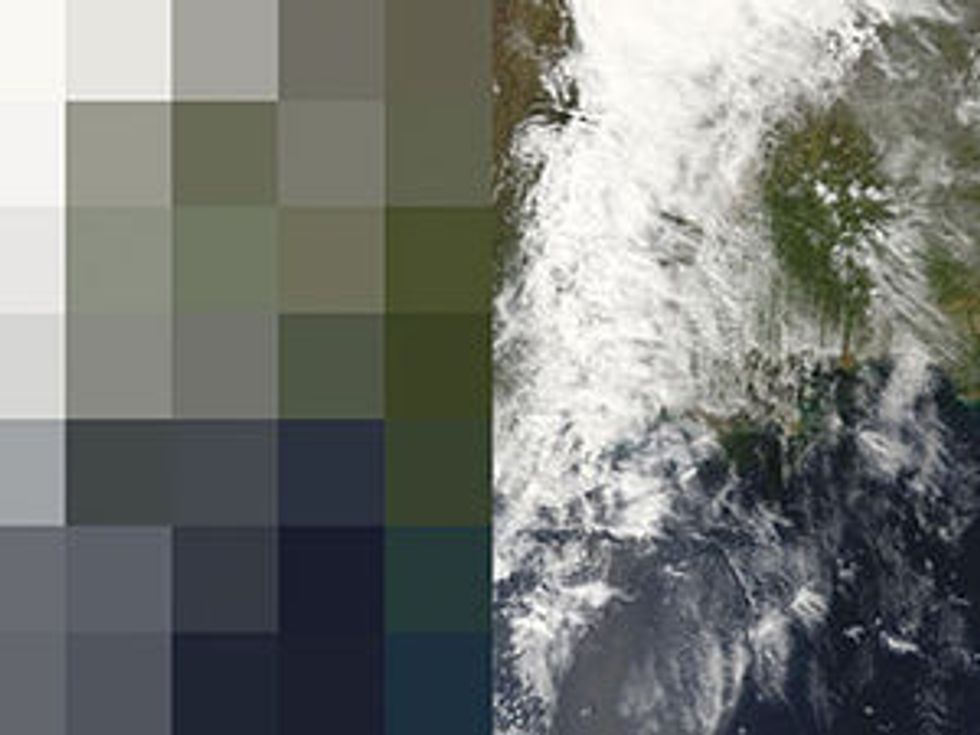

Take the problem of predicting climate change, which requires knowledge of the future evolution of land surfaces, the ocean, and the atmosphere, the last of these being the toughest to model. Most of today’s atmospheric models slice and dice Earth along lines of latitude and longitude. They do so at a surprisingly coarse resolution—often with computational grid cells that measure two or more degrees across in latitude or longitude. That’s to say, one cell—one pixel in the resulting global maps—might measure a couple of hundred kilometers on a side. A lot of interesting weather phenomena take place within areas of such size—the formation of clouds, thunderstorms, perhaps even entire weather fronts—things that now have to be accounted for in some way other than modeling their dynamics using the equations of motion.

The strategy employed to keep track of the weather within each cell involves various statistical averages and parameterizations, which introduce a great deal of uncertainty. For this reason, climatologists would dearly like to improve the resolution of their global atmospheric models, ideally reducing the cell size to just a kilometer or two. But up until now that’s been almost inconceivable because the computing demands would become so great. Indeed, trying to satisfy those demands with the kinds of supercomputers available now would be astronomically expensive; the electricity used alone would probably blow the budget.

Five years ago, a group of us—computer researchers from the University of California, Berkeley, and the Lawrence Berkeley National Laboratory—began informal discussions on the future of computing. We arrived at the idea that the building blocks for tomorrow’s computing systems of all scales were likely to be simple microprocessor cores, like those found now in battery-powered consumer electronics. Even the powerful supercomputers used for such things as climate modeling could be built this way. To investigate that possibility, the three of us looked carefully at a well-regarded climate model (a version of something called the Community Atmospheric Model) to estimate what it would take to reduce its spatial resolution to the point where individual cloud systems could be simulated.

Our target was to have the horizontal resolution be no more than 1.5 kilometers, with the atmosphere separated into 100 layers, rather than just the 25 or so that are typically used. That would make for about 20 billion computational cells. We have a name for the hypothetical supercomputer capable of running such a climate model while using a reasonable amount of electricity: the Green Flash. It sounds like a superhero, and in some sense it would be one.

How fast would the Green Flash have to run? To keep with the superhero theme, we could say that it would have to run faster than a speeding bullet, which travels about 1000 times as fast as a person can walk. That is, the Green Flash would need to simulate atmospheric motions at a rate about 1000 times as fast as the weather plays out in reality. To model Earth’s climate over the course of a century, for example, should take no longer than a month or so. Otherwise the computer would prove too slow to be useful to climate scientists, who must run their models many times to adjust various internal parameters, and to compare different climate scenarios against one another and against one or more millennium-long baseline simulations. To achieve the desired 1000-fold speed advantage over nature would require, at the very least, 1016 floating-point operations per second (flops) using double-precision arithmetic. That’s 10 petaflops.

Thankfully, the atmosphere lends itself to modeling on a machine using many different processors working in unison. That’s because you can calculate changes in the weather at one spot without having to know what’s happening at distant locales. All you really need is information about what’s going on directly to the north, south, east, and west.

You might, for example, divide the globe into 2 million horizontal patches, each one further subdivided into 10 vertical slices. Each of these 20 million domains would span about 1000 cloud-resolving grid cells, demanding a sustained computational rate of 500 megaflops—well within the capabilities of one of today’s high-end embedded processors. Modeling climate in this way would also require about 5 megabytes of random-access memory for each processor, and you would need to be able to read and write to that memory at a rate of about 5 gigabytes per second.

Because the computations for one region within an atmospheric model hinge only on what’s happening in the adjacent regions, the communication requirements between processors are modest. Each second, about 1 GB of data would have to flow back and forth between one processor and a few others, a rate that should be possible with modern embedded hardware.

Having not yet constructed a Green Flash supercomputer, we can provide only a sketch of how one could be put together. The basic building block might be something like the CPUs available from Tensilica, a company based in Santa Clara, Calif., that tailors its relatively simple embedded-processor designs to the needs of each customer. Tensilica’s XTensa LX2 processor cores, for example, can easily be run at 500 megahertz and can carry out 1 gigaflops without breaking a sweat.

It wouldn’t be too difficult to integrate, say, 32, 64, or even 128 of these processor cores, along with enough local memory and data-transfer capacity for the climate-modeling problem, within a single package. But you’d need 10 million or so cores to achieve the required computational might.

Using such low-cost chips would slash the bill for processors and memory, even though you’d still have to pay the usual amounts for hard disks, software, a building to house this gargantuan computer, staff to tend it, coffee to keep them going, and so forth. In all, a supercomputer built along these lines might set you back a couple hundred million dollars. But that’s no more than the cost of some of the existing supercomputing facilities at U.S. national laboratories—or of many big-budget motion pictures. Compare that with what the required supercomputer would cost if it were to be built following the conventional approach, using high-end desktop or server microprocessors.

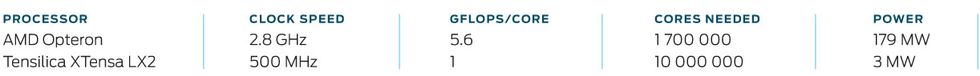

American Micro Devices’ Opteron processor core, for example, can easily outperform one of Tensilica’s embedded processors. “Only” 1.7 million AMD Opterons would be needed to carry out the 10 petaflops required to simulate Earth’s atmosphere at cloud-resolving resolution. So you might then be tempted to assemble a climate-modeling supercomputer out of standard dual-core Opteron chips. But that would be foolish. Even if you could foot the initial bill, which we estimate would be more than a billion dollars, you probably couldn’t afford to run it, because it would consume almost 200 MW, costing perhaps $200 million a year for electricity. In contrast, a Tensilica-equipped Green Flash would sip power, relatively speaking, requiring only 3 MW, a level many large computer centers are already equipped to handle.

These numbers assume that the millions of microprocessors involved are working at high computational efficiency. That is, each of the needed calculations shouldn’t take too many floating-point operations to accomplish. That might be difficult to achieve, particularly with a generic processor like the Opteron. Cores intended for embedded applications are much more promising in this regard because their circuitry can be tailored to the scientific task at hand. Need to include another hardware-multiply unit? No sweat. Want your microprocessor to carry out the most common instructions in climate modeling especially fast? That’s possible too. But there’s a danger if you go overboard: The development of a better algorithm to solve the problem can make your costly supercomputer obsolete in one stroke.

Our concept for the Green Flash is to build in the processing power, memory, and data-transfer pipes needed for each computational domain. Designers would further tune the circuitry to the climate-modeling problem, most likely using prototype hardware built out of field-programmable gate arrays (FPGAs). But they would take care not to make the circuitry too specific to one particular climate model. That way the resulting supercomputer could be reprogrammed easily to do whatever calculations are desired as the numerical recipes used to simulate the oceans and atmosphere improve with time. And the same supercomputer could be used to run many different climate models efficiently, allowing scientists to compare one against another—which helps enormously in evaluating the credibility of the results.

Building a Green Flash supercomputer would obviously be a huge undertaking, so we’re approaching it with baby steps. One of the most significant took place late last year, when we and our coworkers simulated a design for a possible Green Flash processor using something called the Research Accelerator for Multiple Processors, or RAMP, an FPGA-based hardware emulator that our colleagues in the department of electrical engineering and computer science at the University of California, Berkeley, have assembled for research in computer architecture.

We came up with the design in collaboration with Chris Rowan and others at Tensilica. The design uses that company’s XTensa LX2 processor core as its basic building block. The RAMP simulation of our science-optimized processor core ran a coarsened version of a global climate model that David Randall and his colleagues at Colorado State University, in Fort Collins, devised expressly to resolve clouds. The point was not to find out about the climate; it was just to test our RAMP-emulated designs.

Randall and his colleagues are excited about the prospect of a Green Flash to run their model. Using the fastest supercomputers available right now and operating at a somewhat marginal resolution for tracking individual clouds (4 km), their model outpaces nature by only a factor of four—0.4 percent of what’s required. So only with something like the Green Flash will such models ever prove truly useful for long-term climate prediction.

The results of our RAMP experiments should allow us to run a climate model on an emulated Green Flash chip by the end of this year, which in turn will show just how well the real chip would perform. And if all goes well, we hope to have a small prototype system that includes something like 64 or 128 processors and their interconnections built about a year later.

Building a complete supercomputer and optimizing the code to run on it will keep us and many other members of the computer-science community busy well beyond that—perhaps for a decade or more. That’s why it’s so important to begin the process now. Otherwise, changes in Earth’s climate could well get ahead of us.

About the Author

Michael Wehner, Lenny Oliker, and John Shalf work at Lawrence Berkeley National Laboratory. Oliker and Shalf have backgrounds in computer science and electrical engineering, as you might expect for members of a team proposing a supercomputer for modeling climate change—“A Real Cloud Computer.” Wehner, however, was trained in nuclear physics and designed bombs before switching to climate research. Their supercomputer idea is now being taken seriously, but when Wehner first proposed it at a 2008 conference, even he felt it was fanciful. “I was prepared to be laughed off the stage,” he recalls.

To Probe Further

The authors describe their work establishing the requirements for the Green Flash in more detail in “Towards Ultra-High Resolution Models of Climate and Weather,” which appeared in the May 2008 issue of the International Journal of High-Performance Computing Applications. The material posted at https://www.lbl.gov/cs/html/greenflash.html provides recent updates on their research.