Enjoy more free content and benefits by creating an account

Saving articles to read later requires an IEEE Spectrum account

The Institute content is only available for members

Downloading full PDF issues is exclusive for IEEE Members

Downloading this e-book is exclusive for IEEE Members

Access to Spectrum 's Digital Edition is exclusive for IEEE Members

Following topics is a feature exclusive for IEEE Members

Adding your response to an article requires an IEEE Spectrum account

Create an account to access more content and features on IEEE Spectrum , including the ability to save articles to read later, download Spectrum Collections, and participate in conversations with readers and editors. For more exclusive content and features, consider Joining IEEE .

Join the world’s largest professional organization devoted to engineering and applied sciences and get access to all of Spectrum’s articles, archives, PDF downloads, and other benefits. Learn more →

Join the world’s largest professional organization devoted to engineering and applied sciences and get access to this e-book plus all of IEEE Spectrum’s articles, archives, PDF downloads, and other benefits. Learn more →

CREATE AN ACCOUNTSIGN IN

Access Thousands of Articles — Completely Free

Create an account and get exclusive content and features: Save articles, download collections, and talk to tech insiders — all free! For full access and benefits, join IEEE as a paying member.

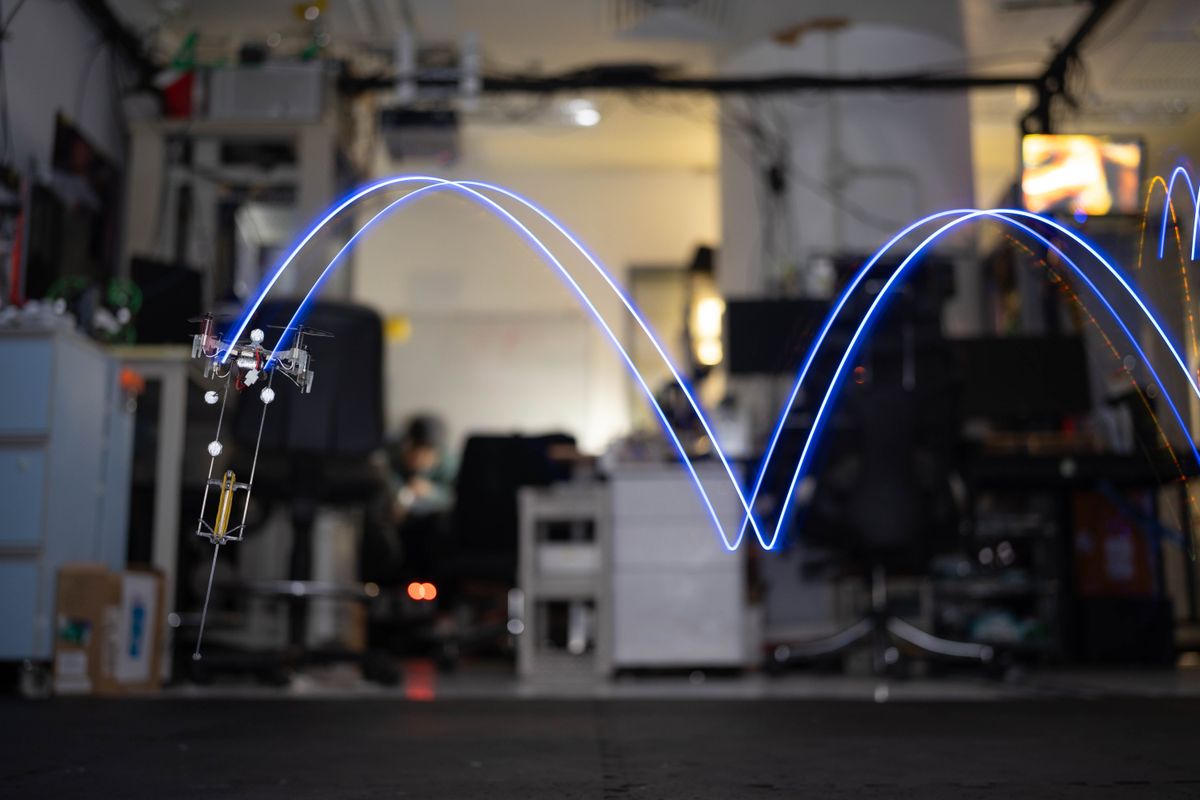

Robotics

The latest developments in consumer robots, humanoids, drones, and automation