Bioethics & The Brain

Microelectronics and medical imaging are bringing us closer to a world where mind reading is possible and blindness banished—but we may not want to live there

Nancy, an airline pilot, arrived promptly for a routine physical. She’d had exams before, but this time was different. She was asked to lie down and place her head in a large metallic torus, while a video screen flashed a series of images before her eyes—the inside of a 747 cockpit, a view of a target seen through a rifle’s scope, a chemical formula for polyester, a photo of Bill Clinton. In an adjacent room, a technician watched as colorful images of Nancy’s brain appeared on his computer screen, lighting up like brushfires with different hues in response to the pictures. As the test ended, the technician forwarded the results to Nancy’s employer.

Reporting for work the next day, Nancy was confronted by her supervisor and an official from the U.S. Federal Aviation Administration. They informed her that the brain images showed that she might develop schizophrenia and that she had a surprising familiarity with assault rifles. The agency revoked her pilot’s license. The airline promptly fired her.

This scenario is fiction. But the basics of the technologies it alludes to already exist. New ways of imaging the human brain and new developments in microelectronics are providing unprecedented capabilities for monitoring the brain in real time and even for controlling brain function.

The technologies are novel, but some of the questions that they will raise are not. Electrical activity in the brain can reveal the contents of a person’s memory. New imaging techniques might allow physicians to detect devastating diseases long before those diseases become clinically apparent. And researchers may one day find brain activity that correlates with behavior patterns such as tendencies toward alcoholism, aggression, pedophilia, or racism. But how reliable will the information be, how should it be used, and what will it do to our notion of privacy?

Meanwhile, microelectronics is making access to the brain a two-way street. The same electrical stimulation technologies that allow some deaf people to hear could be fashioned to control behavior as well. What are the appropriate limits to the use of this technology? In an age of overcrowded prisons, might society be tempted to release criminals if behavior-modifying brain implants could guarantee that they would pose no further threat?

Truth and consequences

Coupled with powerful microelectronics, science’s understanding of the brain is opening the door to new ways of handling criminal investigations and screening potential employees. Several recent applications involve variants of the “guilty knowledge” test, in which investigators try to determine the presence or absence of specific memories implying a person’s guilt by recording electric signals from the head.

So-called brain fingerprinting is the most striking of these. Lawrence A. Farwell [photo, right], chairman and chief scientist of Brain Fingerprinting Laboratories Inc. (Fairfield, Iowa), a commercial venture, invented the technique. Farwell claims that brain fingerprinting allows investigators to “detect information stored in the human brain” for use in forensic examinations. He promotes the method for evaluating criminal suspects and screening for terrorists.

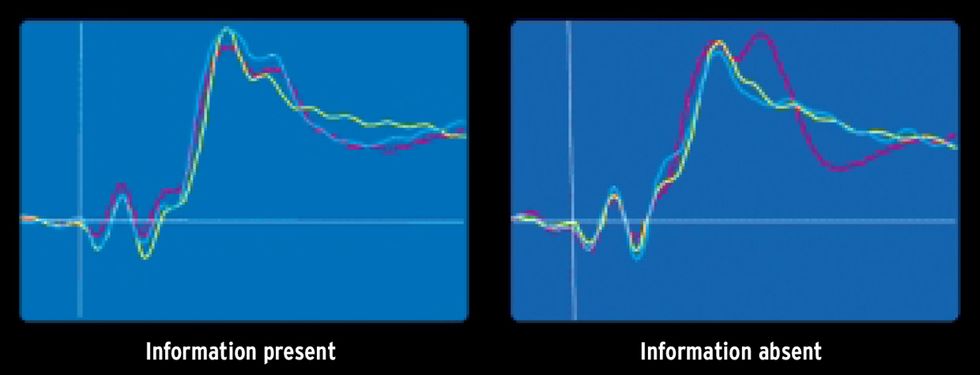

The system that performs brain fingerprinting resembles a computer-controlled electroencephalograph (EEG). In a test, the subject sits in front of a computer screen, wearing a headband with EEG sensors, while a series of words, sounds, or images is presented. Some of these are called “irrelevants”: words or images unrelated to the crime or to the investigation. Irrelevants establish a baseline of activity for unimportant information.

Others are “target” stimuli: phrases or images that the subject has been told to pay particular attention to. Targets act as a baseline for information noteworthy to the subject. Finally, there are “probes”: details of the crime under investigation that an innocent would have no knowledge of, such as a picture of a sofa on which a murder victim’s body was found.

In response to these cues, microvolt electrical signals that correspond to brain activity, known as event-related potentials (ERPs), can be measured on the scalp at times ranging from a few tenths of a second to about a full second after the cue. Distinctive ERPs occur when a subject reacts strongly to a meaningful event. For example, a murderer might respond to the probe photograph of the sofa.

Such a reaction would produce a characteristic ERP that includes the so-called P-300 wave. P-300 is a well-researched positive voltage deflection that peaks roughly 300 ms or more after the photo appears and is thought to reflect the brain’s recognition of a noteworthy piece of information. By using a computer algorithm to compare the ERPs evoked by probes, targets, and irrelevants, Farwell claims the system can determine whether the probes represent information known to the subject: guilty knowledge [see image].

Brain fingerprinting may seem similar to a polygraph (usually called a lie detector), but it differs in important ways. A polygraph measures physiologic responses such as heart rate, sweating, breathing, and other processes that are only indirectly related to brain function. Brain fingerprinting’s information comes directly from brain function. It and other related tests do not measure truthfulness, but seek to determine whether the subject has a particular memory.

Brain fingerprinting has already been used in two criminal investigations. One was an attempt to win a new trial for Terry Harrington, imprisoned in Iowa for 22 years for murder. Farwell claims that his test shows that Harrington did not commit the crime. The second case involved a man accused of rape and murder in Missouri. Shortly after a brain fingerprinting test indicated that he possessed guilty knowledge, the suspect (who had volunteered to take the test) confessed.

Despite having been financed in part by the U.S. Central Intelligence Agency, brain fingerprinting has struck out there and with other government agencies concerned with security, such as the U.S. Federal Bureau of Investigation and the U.S. Secret Service. Those agencies find brain fingerprinting to be of little use in screening for potential terrorists, spies, or other security risks, according to a 2001 U.S. General Accounting Office study. The reason is that the technology can’t work without specific information known only to the test designers and to guilty people but not to innocents. In the report, the agencies also express concerns about the reliability of the method.

Still, the technique is likely to find its way into criminal cases through expert witnesses testifying in court. Should judges allow such testimony? One already has. In March 2001, Iowa District Court Judge Timothy O’Grady ruled that brain fingerprinting was admissible in Harrington’s quest for a new trial. But O’Grady wasn’t swayed by brain fingerprinting evidence that seemed to exonerate the defendant, and the judge denied him a new trial. O’Grady’s decision, however, was reversed by the Iowa Supreme Court last February.

But before brain fingerprinting becomes established as a forensic tool, its accuracy surely needs to be assessed. Farwell claims the system has had a perfect record in tests. Such claims are simply premature; the method has received very limited testing, and much of that has been done under artificial laboratory conditions, a problem noted by the federal report. If its validity as a forensic tool is not independently established, we will soon face the kind of battles that now exist concerning the validity of genetic evidence or even polygraphs.

Imaging brain function

Brain fingerprinting is not the only way to probe the brain for forensic purposes. Functional magnetic resonance imaging, or fMRI, which shows what parts of the brain are active by tracking changes in blood oxygen levels, may be able to reveal when someone is being deceptive. Functional MRI is a variant of MRI, which images the distribution of protons and other nuclei in the body, mostly those in water, by picking up the RF signals they emit when they sit in a strong magnetic field and are perturbed by pulses of RF energy. Hemoglobin that has given up its oxygen alters that signal, and this effect lets fMRI sense blood oxygen levels.
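The RF signal mentioned above has a definite frequency: protons in a magnetic field resonate at the Larmor frequency, which is proportional to the field strength B₀, with γ/2π about 42.58 MHz per tesla for hydrogen. As a worked example, assuming a typical clinical field of 1.5 T (an illustrative value, not one given in this article):

```latex
f_0 = \frac{\gamma}{2\pi}\,B_0 \approx 42.58\ \text{MHz/T} \times 1.5\ \text{T} \approx 63.9\ \text{MHz}
```

Doubling the field strength doubles the resonance frequency, so a scanner’s RF electronics must be matched to its magnet.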

An fMRI experiment reported in 2002 by Daniel D. Langleben, an assistant professor of psychiatry at the University of Pennsylvania (Philadelphia), found highly significant correlations between lying or truth telling and metabolic activity in brain regions involved in attention and in monitoring and controlling errors. Langleben’s technique has not yet been applied to forensic examinations.

But fMRI and MRI have other useful, though ethically complex, applications. MRI has long served as a diagnostic tool for finding brain tumors, strokes, and other serious conditions by revealing gross damage to tissue. Recently, physicians have begun to hope that MRI and other imaging techniques will prove useful in diagnosing psychiatric disorders such as schizophrenia, perhaps before the symptoms of those disorders appear. Those hopes are beginning to bear fruit.

MRI and positron emission tomography (PET), which employs radioactive tracers to image brain activity, have become sensitive enough to map the presence of more than a dozen chemicals critical to brain function. These include glucose, an energy source for metabolism, and neurotransmitters, chemicals that relay nerve signals between brain cells. Using these new capabilities, researchers have recently discovered subtle changes in brain structure or function that correlate with disease. The result, as a number of investigators have pointed out in professional journals, is a fundamental change in how physicians view psychiatric disorders.

For example, Gin S. Malhi and colleagues at the University of New South Wales (Sydney, Australia) report that MRI and PET studies have identified specific changes in brain chemistry associated with a range of problems, including Alzheimer’s disease, schizophrenia, alcoholism, anxiety disorders, and post-traumatic stress disorder.

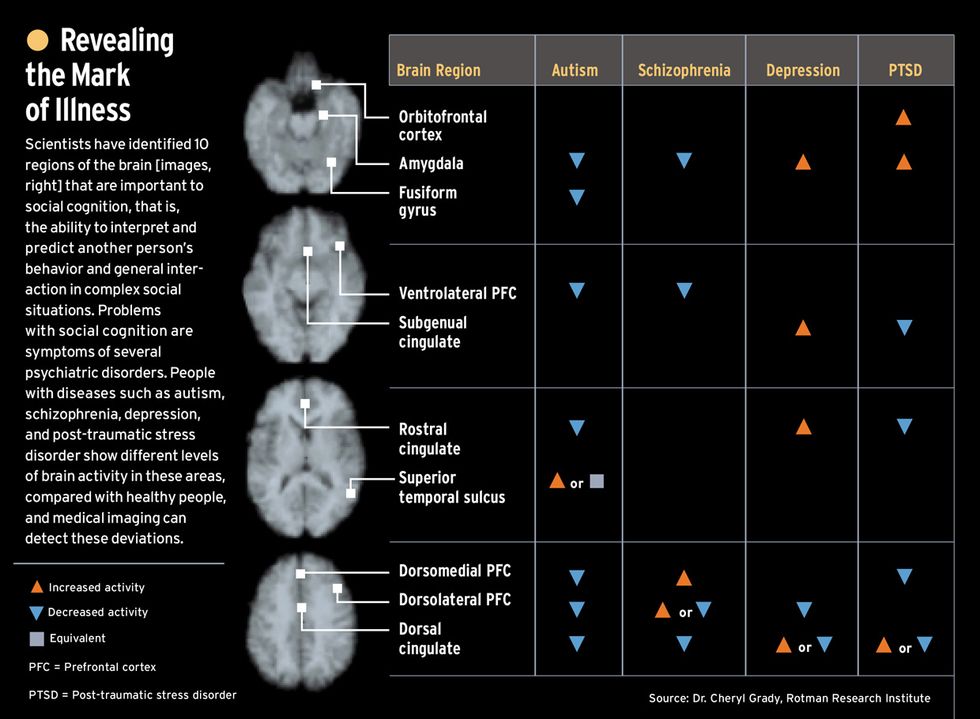

Similarly, the University of Toronto’s Cheryl L. Grady and colleagues report that MRI and PET imaging can identify the brain regions that mediate emotion and our perception of other people’s emotional responses in social situations [see chart]. This suggests that the technology has the potential, so far unexplored, to probe people’s emotional responses even when they try to hide them.

As this work moves from the research lab into clinical practice, urgent questions will arise. Who should receive pre-symptomatic testing for disease—persons at high risk of inheriting a disease; relatives of those with a particular mental illness; those with particular jobs like Nancy’s? What counseling should a patient receive when a test indicates a likelihood of developing Alzheimer’s or schizophrenia? And what treatment, if there is any, should such patients receive? A complicating factor is that the tests are not necessarily definitive, but may indicate only the probability of developing the disease. And is probability enough for making life-altering decisions for yourself, your employees, or anyone else?

Simply observing the brain is sufficient for some applications, but others require communicating with it. Physicians have long measured electrical potentials from the body and stimulated body processes by means of implanted electrodes—think of the pacemaker. New techniques allow sophisticated circuits to interact with the brain and neural tissue.

Some 30 000 people worldwide already have their hearing enabled by cochlear implants. Unlike a traditional hearing aid, which simply amplifies sound entering the ear canal, a cochlear implant transduces sound picked up by an external microphone and relays the signal to an electrode array that directly stimulates nerve fibers in the inner ear, bypassing much of the auditory system. This device has created unforeseen dilemmas.
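The transduce-and-relay step can be pictured as splitting the microphone signal into frequency bands, one per electrode, and setting each electrode’s stimulation level from the energy in its band. The sketch below is a simplified illustration in that spirit, not any manufacturer’s processing strategy; the sampling rate, the number of electrodes, and the logarithmically spaced band edges are assumptions, and a plain discrete Fourier transform stands in for the filter banks that real processors use.

```python
"""Splitting a sound frame into per-electrode levels (illustrative sketch only)."""
import cmath
import math

SAMPLE_RATE = 16_000              # Hz, assumed
N_ELECTRODES = 8                  # assumed; real electrode arrays differ
LOW_HZ, HIGH_HZ = 200.0, 8_000.0  # assumed analysis range


def band_edges() -> list[float]:
    """Logarithmically spaced band edges, one band per electrode, low to high pitch."""
    ratio = (HIGH_HZ / LOW_HZ) ** (1 / N_ELECTRODES)
    return [LOW_HZ * ratio ** k for k in range(N_ELECTRODES + 1)]


def electrode_levels(frame: list[float]) -> list[float]:
    """Spectral energy of one audio frame in each electrode's band,
    normalized so that the strongest band is 1.0."""
    n = len(frame)
    edges = band_edges()
    energy = [0.0] * N_ELECTRODES
    for k in range(1, n // 2):  # positive-frequency bins of a plain DFT
        freq = k * SAMPLE_RATE / n
        coeff = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for ch in range(N_ELECTRODES):
            if edges[ch] <= freq < edges[ch + 1]:
                energy[ch] += abs(coeff) ** 2
                break
    peak = max(energy) or 1.0  # avoid dividing by zero for a silent frame
    return [e / peak for e in energy]


# A 1 kHz tone lasting 256 samples (16 ms).
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(256)]
levels = electrode_levels(tone)
print(levels.index(max(levels)))  # 3: the band spanning roughly 800 to 1265 Hz
```

Low-pitched sound drives the electrodes assigned to low bands and high-pitched sound the ones assigned to high bands, loosely mirroring how the inner ear itself is organized by frequency.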

Conceived by their developers as a cure for deafness, cochlear implants were seen in a very different light by some of their intended recipients. Some deaf people reject the notion that deafness is a medical condition that needs to be treated, and so some saw the cochlear implant as antagonistic to the deaf community, although the controversy has abated in the last decade. They feared, for instance, that children fitted with the implants might not learn sign language, setting them apart from other deaf people.

Other devices that stimulate the brain through implanted electrodes are not far off. A visual prosthesis, which directly stimulates the visual cortex, promises to help people blinded by degenerative retinal disease. Groups at the University of Utah (Salt Lake City), the nonprofit Huntington Medical Research Institutes (Pasadena, Calif.), and elsewhere have pursued this approach for several years. These investigators hope to implant arrays of microelectrodes into the visual cortex, at the back of the head, and excite neurons in patterns that reproduce images captured by an external camera. Daunting technical problems remain, not least the wide variation in neural wiring from one individual to another, which makes it difficult to determine exactly where to place the electrodes.
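In the simplest terms, each camera frame must be reduced to a coarse grid of stimulation levels, one per electrode. The sketch below assumes a 10 by 10 electrode array (a size chosen for illustration) and a grayscale frame given as rows of brightness values from 0 to 255, and it averages the pixels over each electrode’s patch. It deliberately ignores the hard problem described above: because neural wiring differs from person to person, a real system would also need a per-patient map from grid position to electrode.

```python
"""Reducing a camera frame to per-electrode stimulation levels (illustrative sketch)."""

GRID = 10  # assumed: a 10 x 10 electrode array


def stimulation_levels(frame: list[list[int]]) -> list[list[float]]:
    """Average the 0-255 brightness over each electrode's patch of the frame,
    scaled to 0.0 (no stimulation) through 1.0 (maximum stimulation)."""
    rows, cols = len(frame), len(frame[0])
    if rows % GRID or cols % GRID:
        raise ValueError("frame dimensions must be multiples of GRID")
    ph, pw = rows // GRID, cols // GRID  # patch height and width in pixels
    levels = []
    for gr in range(GRID):
        row_levels = []
        for gc in range(GRID):
            patch = [frame[r][c]
                     for r in range(gr * ph, (gr + 1) * ph)
                     for c in range(gc * pw, (gc + 1) * pw)]
            row_levels.append(sum(patch) / (len(patch) * 255))
        levels.append(row_levels)
    return levels


# A 40 x 40 frame that is bright only in its top-left quadrant.
frame = [[255 if r < 20 and c < 20 else 0 for c in range(40)] for r in range(40)]
levels = stimulation_levels(frame)
print(levels[0][0], levels[9][9])  # 1.0 0.0
```

Even in this idealized form, a 40 by 40 image collapses to 100 values, which gives a sense of how coarse early prosthetic vision is likely to be.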

Computer-to-brain interfaces can work in the other direction as well, using electric signals from the brain to control a prosthesis, software program, or robot, for example. Such systems might assist patients with severe motor impairments, such as those in the late stages of amyotrophic lateral sclerosis. But despite sometimes hyperbolic claims about these experimental interfaces, they are far from ready to move beyond the laboratory.

One difficulty is the low rate of data transfer (tens of bits per minute) achievable with recordings from the scalp. Such rates are sufficient for improved communication with patients but not for controlling prostheses. Implanting electrodes directly into the brain may raise the bandwidth, but implantation here, as with artificial eyes, is a tricky business.
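Bit rates like these are commonly computed with an information-transfer formula used throughout the brain-computer interface literature (it appears, for example, in the Wolpaw review listed under To Probe Further). For N equally likely choices selected with accuracy P, each selection carries B = log2 N + P log2 P + (1 − P) log2[(1 − P)/(N − 1)] bits, which is then multiplied by the number of selections per minute. The example figures below (a four-choice interface, 90 percent accurate, 15 selections a minute) are illustrative assumptions, not measurements from any study.

```python
"""Information transfer rate of a selection-based interface (illustrative sketch)."""
from math import log2


def bits_per_selection(n_choices: int, accuracy: float) -> float:
    """Bits conveyed by one selection among n_choices made with the given accuracy.

    Assumes all choices are equally likely and errors are spread evenly over
    the n_choices - 1 wrong options.
    """
    if n_choices < 2 or not 0.0 <= accuracy <= 1.0:
        raise ValueError("need n_choices >= 2 and 0 <= accuracy <= 1")
    bits = log2(n_choices)
    if accuracy > 0:  # the P*log2(P) term vanishes at P = 0
        bits += accuracy * log2(accuracy)
    if accuracy < 1:  # the error term vanishes at P = 1
        bits += (1 - accuracy) * log2((1 - accuracy) / (n_choices - 1))
    return bits


def bits_per_minute(n_choices: int, accuracy: float, selections_per_minute: float) -> float:
    """Bits per selection multiplied by the selection rate."""
    return bits_per_selection(n_choices, accuracy) * selections_per_minute


# Hypothetical four-choice interface, 90 percent accurate, 15 selections a minute.
print(round(bits_per_selection(4, 0.9), 2))   # 1.37
print(round(bits_per_minute(4, 0.9, 15), 1))  # 20.6
```

Note how quickly errors cost information: at 90 percent accuracy, a four-choice selection carries about 1.37 bits instead of the 2 bits of an error-free one.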

While few would argue against using electrical stimulation to assist people with disabilities and impairments, researchers have shown that similar technology can be used to control behavior. A sensational, and very controversial, example occurred in the 1960s, when Spanish investigator José Delgado implanted electrodes into the brain of a bull and induced it to charge at him. Just before impact, an assistant activated the electrodes with a radio frequency transmitter, and the bull abruptly stopped its charge.

A far more sophisticated example is “Robo Rat,” described by Sanjiv Talwar and co-workers at the State University of New York’s Downstate Medical Center (Brooklyn) in Nature last year. The investigators implanted electrodes in rats’ brains and connected them to radio frequency receivers carried on the animals’ backs. By stimulating different parts of the brain, they could both give the rats cues and reward them by stimulating the brain’s pleasure center. The rats quickly learned to respond to the investigators’ commands, transmitted from as far as 500 meters away.

Such technology has nothing to do with the fantasies of mind control by electromagnetic fields, long a staple of science fiction and lately of conspiracy theory Web sites. Nevertheless, its existence raises ethical issues. When, if ever, is it acceptable to manipulate someone’s brain to make him perform actions that he would not ordinarily take voluntarily?

Even apparently benevolent brain-computer interfaces, such as cochlear implants, raise difficult questions that may not be immediately obvious. If the technical problems are solved and brain-computer interfaces and implants improve, they could come to be considered a cure for deafness or blindness. And if a cure exists for these conditions, do people have the right to remain deaf or blind if they so choose? Should they be compelled to use the technology, and must society continue to accommodate them with Braille instructions and closed-caption television if they opt to reject it?

Neuroethics: an emerging field

Ethicists are only now beginning to take note of these developments in neuroscience. Meetings on the subject have been held recently on both the East Coast (sponsored by the Center for Bioethics at the University of Pennsylvania) and West Coast (hosted jointly by Stanford University and the University of California at San Francisco). The European Commission stepped in earlier, financing a research project on “Ethical, Legal, and Social Aspects of Brain Research” that began in 1997.

One central problem of neuroethics is establishing the appropriate limits of human intervention in our cognitive (knowledge processing) and affective (emotional) functioning. Should the contents of our minds be sacrosanct, or should police, doctors, employers, school administrators, or parents have the right to probe a person’s honesty, motivations, phobias, memory, aptitudes, or state of health? How far should we go in using technology to enhance our abilities, and who should have access to and control over the technologies we use?

Some of the prospective ethical dilemmas raised by neuroscience resemble those that genetics has raised for many ethicists. One important question is: how do we minimize the harm to an individual caused by an incorrect test result? Professionals developing the technologies described in this article must take this issue far more seriously than they have so far.

All tests will sometimes produce incorrect results, either a false positive (finding disease when none exists) or a false negative (overlooking a real case of disease). A test that indicates the presence of (or risk of developing) severe psychiatric disorders will have obvious effects on insurance, job opportunities, marriage, childbearing, and other important aspects of a person’s life.

What will happen, for example, if neuroscience succeeds in determining that a child is susceptible to schizophrenia or a late-onset disease like Alzheimer’s? Is the appropriate response prophylactic medication, changes in lifestyle, or the avoidance of environments that could cause stress? The ability to diagnose early stages of Alzheimer’s, for example, has far outstripped the development of effective therapies for this devastating condition. Should people plan their lives around the probabilities indicated by a brain scan?

The problem of probability also haunts the use of brain imaging in criminal investigations and employment screening. A recent study by the U.S. National Research Council, an organization affiliated with the U.S. National Academies (Washington, D.C.), deemed polygraphs too unreliable for screening groups of people for potential security risks. Brain imaging, by providing information directly from the brain itself, may be more reliable than polygraphy, but just how reliable is it? We need to know, because if we come to rely on it, a false positive result could destroy a career, and a false negative could leave society at risk.
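A short calculation with Bayes’ theorem shows why the probability problem bites hardest when screening large groups. When the condition being screened for is rare, even an accurate test produces mostly false alarms, because the few true positives are swamped by errors among the many people who do not have the condition. The sensitivity, specificity, and prevalence figures below are hypothetical, chosen only to illustrate the arithmetic; they are not estimates for polygraphs or for any brain-imaging test.

```python
"""Why rare conditions make screening error-prone (Bayes' theorem sketch)."""


def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that someone who tests positive actually has the condition."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def screening_outcomes(population: int, sensitivity: float,
                       specificity: float, prevalence: float) -> dict[str, float]:
    """Expected number of people in each outcome category after screening everyone."""
    affected = population * prevalence
    unaffected = population - affected
    return {
        "true_positives": sensitivity * affected,
        "false_negatives": (1 - sensitivity) * affected,   # missed cases
        "false_positives": (1 - specificity) * unaffected,  # wrongly flagged
        "true_negatives": specificity * unaffected,
    }


# Hypothetical figures: 10 real risks among 10 000 people, screened by a test
# that catches 90 percent of them and clears 99 percent of everyone else.
ppv = positive_predictive_value(sensitivity=0.9, specificity=0.99, prevalence=0.001)
print(round(ppv, 3))  # 0.083
outcomes = screening_outcomes(10_000, 0.9, 0.99, 0.001)
print({k: round(v, 1) for k, v in outcomes.items()})
```

With these assumed numbers, roughly 109 people are flagged, about 100 of them innocent, while one genuine risk slips through: the career-destroying false positive and the dangerous false negative, in concrete terms.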

The consequences of new technologies derived from neuroscience, as with all new technologies, are hard to predict. Cochlear implants aroused great controversy in the deaf community for reasons unforeseen by the engineers, physiologists, and physicians who developed them. Retinal prostheses, brain-controlled robots, and similar devices have the potential to improve the lives of the people who receive them, but these technologies will certainly bring negative consequences that we cannot clearly foresee. Even if we can never fully anticipate the impact of employing these technologies, it is important to try.

To Probe Further

Two recent reviews of uses of MRI in psychiatry are “Magnetic Resonance Spectroscopy and Its Applications in Psychiatry,” by G.S. Malhi, et al., Australian and New Zealand Journal of Psychiatry, Vol. 36, 2002, pp. 31-43, and “Studies of Altered Social Cognition in Neuropsychiatric Disorders Using Functional Neuroimaging,” by Cheryl L. Grady and Michelle L. Keightley, Canadian Journal of Psychiatry, Vol. 47, May 2002, pp. 327-36.

Using brain wave signals to evaluate memory is explained in “The Role of Psychophysiology in Clinical Assessment: ERPs in the Evaluation of Memory,” by J.J.B. Allen, Psychophysiology, Vol. 39, May 2002, pp. 261-80.

Brain Fingerprinting Laboratories’ Web site is located at https://www.brainwavescience.com/.

Connections between the brain and computers are reviewed in “Brain-Computer Interfaces for Communication and Control,” by J.R. Wolpaw, et al., Clinical Neurophysiology, Vol. 113, no. 6, 2002, pp. 767-91.

Also see “Visual Prostheses,” by E.M. Maynard, Annual Review of Biomedical Engineering, 2001, pp. 145-68.

For copies of the National Research Council report on polygraphs, go to https://www.nap.edu.

The ethical issues surrounding pharmaceuticals for the brain are discussed in “Treatment, Enhancement, and the Ethics of Neurotherapeutics,” by P.R. Wolpe, Brain and Cognition, Vol. 50, 2002, pp. 387-95.