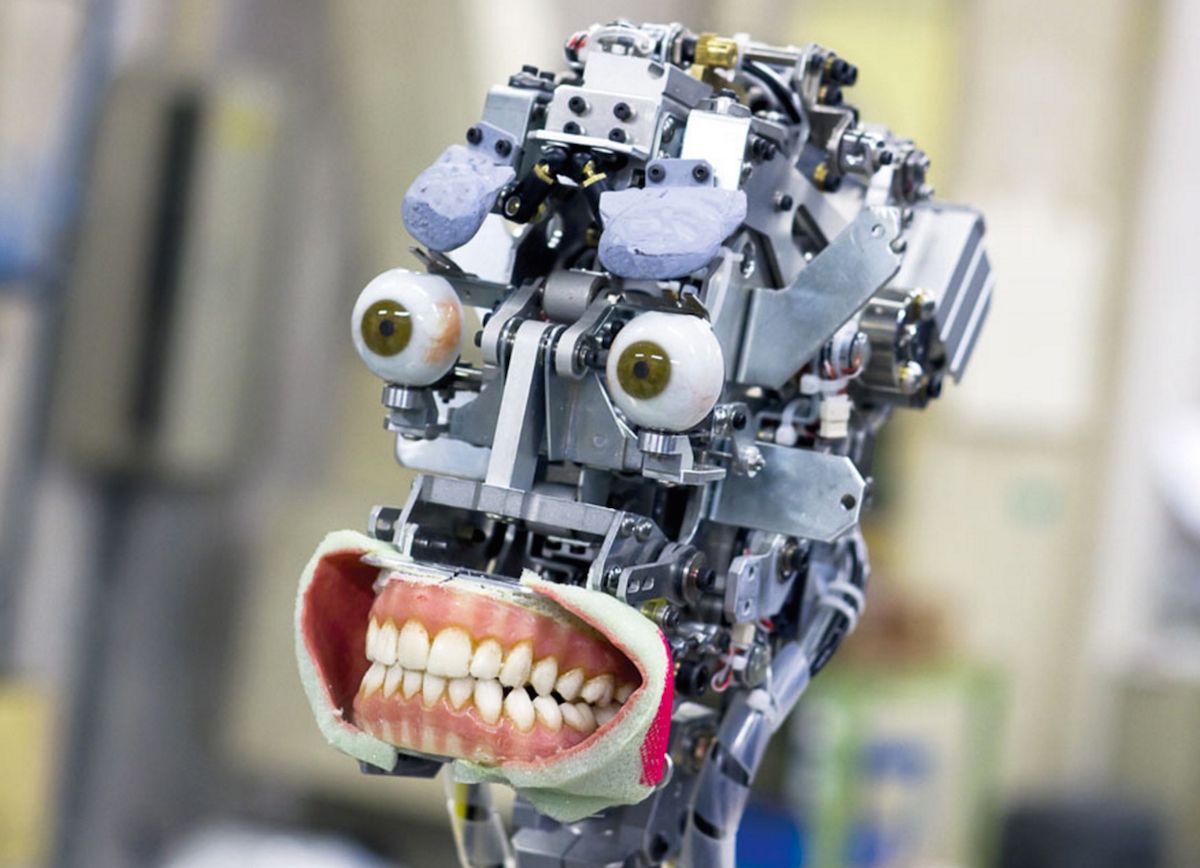

Everybody knows that anthropomorphic robots that try to look and act like people are creepy. The Uncanny Valley, and all that. There’s been a bunch of research into just what it is about such androids that we don’t like (watch the video below to get an idea of what we’re talking about), and many researchers think that we get uncomfortable when we begin to lose the ability to confidently distinguish between what’s human and what’s not. This is why zombies are often placed at the very bottom of the Uncanny Valley: in many respects, they directly straddle that line, which is why they freak us out so much.

Most of the time, robots (even the weird ones) don’t end up way down there with the zombies, because they’re usually a lot more obviously not human. The tricky part about robots, however, is that they can manifest “human-ness” in ways that are more than just physical. When robots start acting like humans, as opposed to just looking like them, things can get much more complicated. This is increasingly relevant with the push towards social robots designed to interact with humans in a very specifically “human-y” way.

In a recent paper in the International Journal of Social Robotics, “Blurring Human–Machine Distinctions: Anthropomorphic Appearance in Social Robots as a Threat to Human Distinctiveness,” Francesco Ferrari and Maria Paola Paladino from the University of Trento, in Italy, and Jolanda Jetten from the University of Queensland, in Australia, argue that what humans don’t like about anthropomorphic robots is fundamentally about a perceived incursion on human uniqueness. If true, it’s going to make the job of social robots much, much harder.

Over the last several years, surveys in Europe and Japan have asked people how they feel about robots in their lives. Along with a generally positive perception of robots, the surveys found significant resistance to the idea of anthropomorphic robots doing things like teaching children or taking care of the elderly. Since social robots are intended for roles like these, it’s important to understand what the root of these feelings is, as the researchers explain:

> Why do people fear that the introduction of social robots will have such a negative impact on humans and their identity? Answering this question would enable us to understand the reasons for resistance to this technological innovation. This would be important because the widespread use of social robots in society at large is only possible when psychological barriers to the introduction of robots in our lives have been removed.
>
> Specifically, social robots, because they are designed to resemble human beings, might threaten the distinctiveness of the human category. According to this threat to distinctiveness hypothesis, too much perceived similarity between social robots and humans triggers concerns because similarity blurs the boundaries between humans and machines and this is perceived as damaging humans, as a group, and as altering the human identity.
>
> We expect that for humans, the thought that androids would become part of our everyday life should be perceived as a threat to human identity because this should be perceived as undermining the distinction between humans and mechanical agents. Given the economic investment in the development of social robots and the likelihood that social robots will increasingly become part of everyday life, it is important to understand the reasons why people fear and resist this development.

To test their hypotheses, the researchers showed a group of people a series of pictures of non-human (“mechanical”) robots, humanoid robots, and androids (like Geminoid DK, pictured below), while asking them about perceived potential damage of the robot to human essence and identity, as well as how much agency they perceived in the robot. Predictably, nobody liked the androids much: the results suggested “a linear pattern for the increase of robots’ anthropomorphic appearance, undermining human–machine distinctiveness and perceived damage to humans and their identity. Robot human-likeness directly increases the perception of robot as a source of danger to humans and their identity: the more the robot’s appearance resembles that of a real person, the more the boundaries between humans and machines are perceived to be blurred. These findings are consistent with the idea that worries and concerns about the impact on human identity of highly human-like social robots are related to the fact that these robots look so similar to humans that they can be mistaken to be one of us.” In short, anthropomorphic robots undermine our sense of being human, which is why we don’t like them.

While the focus of this study is on social robots that look like humans, my concern is that what’s discussed here has implications that are more general. Whether or not a social robot looks like a human, it seems like it might run into the same kinds of issues simply by being better at things than humans are, which threatens our uniqueness. Chess, for example, and more recently Go, are arguably now games that computers have mastered, and therefore no longer skills that humans can feel are uniquely ours. As computers master more and more skills, and as social robots leverage those skills, our uniqueness will degrade even more. And because of the social component, a much larger population will be affected. To me, this research suggests that at some point, this gradual undermining of our identity will elicit some kind of reaction: When you have a robot in your home that can do everything you can do, except better, is that something that you’d even want to keep around?

A lot of this is speculation on my part: it’s going to be a long, long time before robots or artificial agents reach that point. However, that doesn’t mean we should just ignore the potential for trouble, and there are ways to go about mitigating the problem. The most obvious one is please, please, for heaven’s sake, don’t try to build ultra realistic humanlike androids. I’m sure there are people who may disagree about that. Still, in my opinion, there’s just no good reason to do it. All of the human-robot interaction stuff is great, but you can do it just fine with non-anthropomorphic robots that incorporate human features. (Masahiro Mori, the Japanese researcher who came up with the Uncanny Valley, said the same thing.) Also, it might be interesting to consider how to make sure that robots stay obviously robot-y. Commander Data from Star Trek, for example, didn’t use contractions when he spoke. A simple thing to “fix,” but not fixing it helped to reinforce the fact that the character wasn’t supposed to be a human. Also, green skin and yellow eyes, of course.

The researchers note that they showed study participants only pictures, and not videos (or real robots), which could have significantly changed how people reacted to the robots, although my guess is that it would be for the worse. The study was also relatively small and included only Italian students. The researchers hope that in the future, they can more directly address ways of preventing this whole threat to human distinctiveness from arising in the first place, which could include ways of explicitly differentiating robots from humans, or perhaps endowing robots with empathy to lull us into a false sense of security, or rather, to make us feel more secure.

[ IJSR ]

Thanks Angelica!

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.