Japanese roboticists recently demonstrated a female android singing and dancing along with a troupe of human performers. Video of the entertaining and surprisingly realistic routine went viral on the Net.

How did they do it?

To find out, I spoke with Dr. Kazuhito Yokoi, leader of the Humanoid Research Group at Japan’s National Institute of Advanced Industrial Science and Technology, known as AIST.

The secret behind the dance routine, Dr. Yokoi tells me, is not the hardware—it's software.

The hardware, of course, plays a key role. The AIST humanoids group is one of the world’s top places for robot design. Its HRP-2 humanoids are widely used in research. And the group's latest humanoids, the HRP-4 and its female variant, the HRP-4C (the robot in the dance demo), are even more impressive.

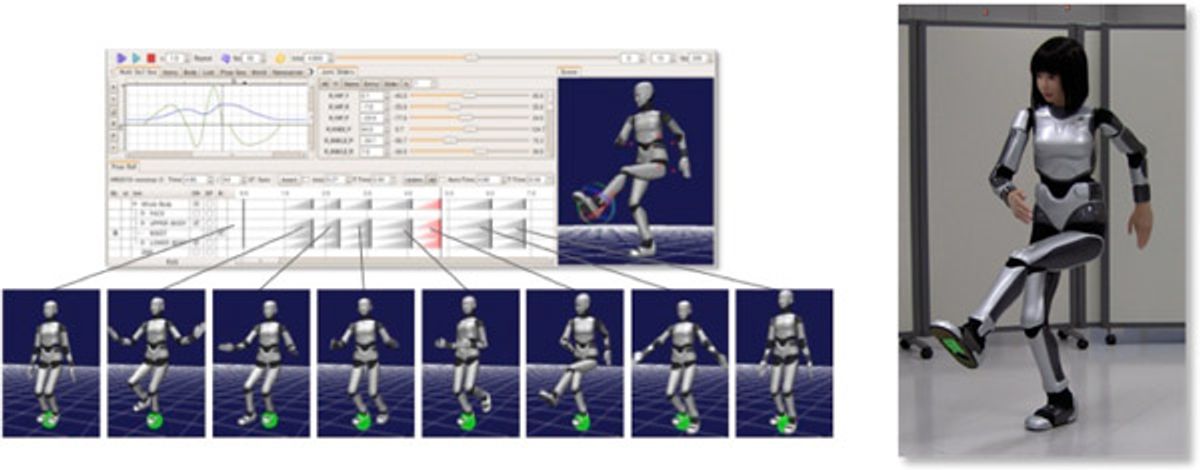

But the biggest innovation now is new software for programming the robot's movements. It works much like the tools commonly used in CG character animation: you click on the legs, arms, head, or torso and drag them to the position you want. You create a sequence of key poses, and the software generates the trajectories and low-level control needed to make the robot move.
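The article doesn't say how the AIST software fills in the motion between key poses, so the following is only a minimal sketch of the general keyframe idea, under stated assumptions. It blends each joint from one key pose to the next with a quintic "minimum-jerk" curve, a common choice because joint speed and acceleration are zero at every key pose. The `KeyPose` class, the joint name `r_elbow`, and the 200 Hz sampling rate are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KeyPose:
    time: float               # seconds from the start of the sequence
    joints: dict[str, float]  # joint name -> angle in radians (hypothetical names)


def min_jerk(s: float) -> float:
    """Quintic blend from 0 to 1 with zero velocity and acceleration at both ends."""
    return 10 * s**3 - 15 * s**4 + 6 * s**5


def interpolate(keys: list[KeyPose], t: float) -> dict[str, float]:
    """Joint angles at time t, blended between the two surrounding key poses.

    Assumes key poses are sorted by strictly increasing time and share joint names.
    """
    if t <= keys[0].time:
        return dict(keys[0].joints)
    if t >= keys[-1].time:
        return dict(keys[-1].joints)
    for a, b in zip(keys, keys[1:]):
        if a.time <= t <= b.time:
            s = min_jerk((t - a.time) / (b.time - a.time))
            return {j: a.joints[j] + s * (b.joints[j] - a.joints[j]) for j in a.joints}
    raise ValueError("key poses must be sorted by time")


def sample_trajectory(keys: list[KeyPose], dt: float = 0.005) -> list[dict[str, float]]:
    """Sample the whole sequence at a fixed period (200 Hz here, an assumed rate)."""
    steps = int(round((keys[-1].time - keys[0].time) / dt))
    return [interpolate(keys, keys[0].time + i * dt) for i in range(steps + 1)]


keys = [KeyPose(0.0, {"r_elbow": 0.0}), KeyPose(1.0, {"r_elbow": 1.2})]
print(interpolate(keys, 0.5))  # prints {'r_elbow': 0.6}
```

Two key poses one second apart already yield 201 densely spaced joint targets. That ratio is why a handful of poses can describe a long, smooth motion.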

So by editing a relatively small number of key poses, you can compose complex whole-body motion trajectories. Below is a screenshot of the software interface showing a 6.7-second sequence that uses only eight key poses:

The software developed at AIST to create sequences of movements.

The software verifies that the robot can actually perform each transition from one pose to the next. If a transition would push a joint beyond its maximum angle or angular velocity, the software adjusts the pose so that it is feasible to execute.
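A feasibility check of this kind might look roughly like the sketch below. It is an illustration, not AIST's code. It assumes each transition follows a minimum-jerk profile, whose peak speed is 15/8 of the average speed. Given that, it clamps the target angle into the joint's range and then, if the move would still be too fast, reduces the final angle, which is the kind of adjustment Dr. Yokoi describes later in the interview. `JointLimit`, `make_feasible`, and the numbers in the usage line are hypothetical.

```python
import math
from dataclasses import dataclass

# For a minimum-jerk (quintic) blend, peak speed is 15/8 of the average speed.
PEAK_FACTOR = 15 / 8


@dataclass
class JointLimit:
    lower: float    # minimum angle, rad
    upper: float    # maximum angle, rad
    max_vel: float  # maximum angular speed, rad/s


def make_feasible(prev: dict[str, float], target: dict[str, float],
                  duration: float, limits: dict[str, JointLimit]):
    """Adjust `target` so the move from `prev` over `duration` seconds respects
    each joint's range and speed limits. Returns (adjusted_pose, warnings).

    Assumes `prev` is itself within range, so the adjusted angle stays in range too.
    """
    adjusted, warnings = {}, []
    for name, goal in target.items():
        lim = limits[name]
        # Range limit: clamp the goal into the joint's mechanical range.
        angle = min(max(goal, lim.lower), lim.upper)
        if angle != goal:
            warnings.append(f"{name}: angle clamped to [{lim.lower}, {lim.upper}]")
        # Speed limit: shrink the final angle so the peak speed stays within max_vel.
        delta = angle - prev[name]
        max_delta = lim.max_vel * duration / PEAK_FACTOR
        if abs(delta) > max_delta:
            angle = prev[name] + math.copysign(max_delta, delta)
            warnings.append(f"{name}: final angle reduced to respect max speed")
        adjusted[name] = angle
    return adjusted, warnings


limits = {"knee": JointLimit(0.0, 2.5, 4.0)}
pose, warns = make_feasible({"knee": 0.2}, {"knee": 3.0}, 0.5, limits)
```

Here the knee goal of 3.0 rad is first clamped to 2.5 rad and then pulled back further so the half-second move stays under 4 rad/s. A real editor could instead offer to lengthen the transition time, which keeps the pose but changes the timing of the choreography.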

The software also monitors the robot’s stability. When it generates a trajectory between two key poses, it checks that the waist trajectory won't create instabilities and that foot trajectories will result in enough contact with the floor. If a pose is not safe, the software finds a similar pose that would keep the robot in balance.
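The interview confirms that the group relies on the Zero Moment Point (ZMP) concept. A standard stability condition is that the ZMP must stay inside the support area under the feet. The sketch below illustrates the idea under heavy simplifications of our own choosing, not necessarily AIST's. It uses the widely used "cart-table" model, which treats the robot as a point mass at constant height $z_c$ with ZMP $p = x - (z_c/g)\,\ddot{x}$. It uses the waist as a stand-in for the center of mass (COM), one horizontal axis, and a single support foot. The function names, foot dimensions, and margin are hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2


def zmp_cart_table(com_x: list[float], com_height: float, dt: float) -> list[float]:
    """ZMP along one horizontal axis from a COM trajectory sampled every dt seconds.

    Cart-table model: p = x - (z_c / g) * x_ddot, with constant COM height z_c.
    Returns one ZMP value per interior sample.
    """
    zmp = []
    for i in range(1, len(com_x) - 1):
        # Central finite difference approximates the horizontal COM acceleration.
        acc = (com_x[i + 1] - 2 * com_x[i] + com_x[i - 1]) / dt**2
        zmp.append(com_x[i] - (com_height / G) * acc)
    return zmp


def is_stable(zmp: list[float], heel: float, toe: float, margin: float = 0.01) -> bool:
    """True if every ZMP sample lies inside the foot's support interval [heel, toe],
    shrunk by a safety margin (meters)."""
    return all(heel + margin <= p <= toe - margin for p in zmp)


dt = 0.01
still = [0.02] * 50                                  # waist held over the foot
lunge = [2.5 * (i * dt) ** 2 for i in range(50)]     # waist accelerating at 5 m/s^2
```

Holding the waist still keeps the ZMP over the foot, while accelerating it forward at 5 m/s² from a 0.8 m height shifts the ZMP about 0.41 m backward, well behind a typical heel. This simplified model ignores the angular momentum of swinging arms and torso, which a full-body dance generates and which a production tool would have to account for.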

After creating a sequence, users can preview the resulting motion in the 3D simulator or, if they have an HRP-4C, upload the code to the robot and watch it dance.

Here's a video showing how the software works:

Dr. Yokoi and colleagues Shin’ichiro Nakaoka and Shuuji Kajita describe the software in a paper titled "Intuitive and Flexible User Interface for Creating Whole Body Motions of Biped Humanoid Robots," presented at last month's IEEE/RSJ International Conference on Intelligent Robots and Systems.

One of their goals in developing the software, Dr. Yokoi says, is simplifying the creation of robot motion routines, so that even non-roboticists can do it. "We want other people—like CG creators, choreographers, anyone—to be able to create robot motions," he adds.

Here’s my full interview with Dr. Yokoi, in which he describes how the new software works, what it took to create the dance routine, and why he thinks Apple's business model could help robotics.

Erico Guizzo: I’ve watched the video of the HRP-4C dancing with the human dancers several times, and it’s fascinating. How did you come up with the idea for this demonstration?

Kazuhito Yokoi: We wanted to prepare a demonstration for this year’s Digital Content Expo, in Tokyo, and one of our colleagues, Dr. [Masaru] Ishikawa from the University of Tokyo, suggested this kind of event. At last year’s Expo, we used the robot as an actress. We didn’t have the software to create complex motions, so we were limited to movements of the arms and face. It was a fun presentation. But this time we wanted to do something different, and one of the ideas we had was a dance performance. One of the key collaborators was SAM, a famous dancer and choreographer in Japan. He created our dance routine. The human dancers are members of his dance school.

EG: Did he choreograph the robot’s dance movements as well?

KY: We wanted to make the dance as realistic as possible. So we didn’t choreograph the robot first. Instead, SAM created a dance using one of his students. Then we used the software to “copy” the dance from the human to the robot.

HRP-4C performs with human dancers.

EG: How long did this process take?

KY: Programming the robot with the software is relatively fast. But because this was a complex performance, we did several rehearsals. After SAM created the dance and we transferred it to the robot, he watched the robot and wanted to make some adjustments to the choreography. We expected that, because of course there are differences between the abilities of a human and a humanoid. For example, the robot's joint angles and speeds have maximum values. So it’s difficult to copy the dance exactly, but we tried to copy it as closely as possible. Then we transferred SAM's changes to the robot and did another rehearsal. At some point we also brought in the human dancers. I think we spent about a month before we had the final performance.

EG: When you’re using the software, what if you program a movement that the robot can’t execute, either because of angle or speed limitations or because it would fall?

KY: What you give the software are key poses. If, for example, you create a new pose and the transition from the previous pose would require a joint angular velocity higher than the robot can achieve, the software informs you, and you can adjust the pose by reducing the final angle of the joint. The software also automatically keeps track of stability. Of course, users should have some basic understanding of their robot and how it balances, but the software does the rest: it alerts the user if a pose is unstable and corrects the pose.

EG: Does the software compute ZMP [Zero Moment Point] to detect poses that are unstable?

KY: Yes, we use the ZMP concept. Again, the user can freely design the key poses. If a pose is not stable, the software automatically detects the problem and modifies the pose. It does this in real time, as you design your sequence of movements. And if you don’t like the “corrected” pose, you can choose another pose and keep trying until you’re satisfied with the movements. And of course, you can try your whole choreography in the software before you test it on the real robot!

The software automatically adds a key pose needed to maintain stability.

EG: Was the software designed specifically for HRP-4C?

KY: No. The software is robot independent. You just need the robot model. For example, we have the model for HRP-2, so we can create HRP-2 movements. We also have the model for HRP-4, and we recently created movements for this robot as well.
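Dr. Yokoi says the software needs only a model of the robot. One way to structure a tool like that, sketched here purely as an assumption about design rather than a description of AIST's implementation, is to keep every robot-specific detail (joint names, angle limits) in a description file and write the editor logic against that data alone. The JSON layout, the `RobotModel` class, and the joint names below are hypothetical. A real model would also carry link lengths, masses, and inertias, which any balance check needs.

```python
import json
from dataclasses import dataclass


@dataclass
class RobotModel:
    name: str
    limits: dict[str, tuple[float, float]]  # joint name -> (lower, upper) in radians

    @classmethod
    def from_json(cls, text: str) -> "RobotModel":
        """Build a model from a hypothetical JSON description of the robot's joints."""
        data = json.loads(text)
        limits = {j["name"]: (j["lower"], j["upper"]) for j in data["joints"]}
        return cls(data["name"], limits)


def validate_pose(model: RobotModel, pose: dict[str, float]) -> list[str]:
    """List the problems with `pose` for this particular robot; empty means valid."""
    unknown = sorted(pose.keys() - model.limits.keys())
    problems = [f"{model.name} has no joint '{j}'" for j in unknown]
    for joint, angle in pose.items():
        if joint in model.limits:
            lower, upper = model.limits[joint]
            if not lower <= angle <= upper:
                problems.append(f"{joint} = {angle} rad is outside [{lower}, {upper}]")
    return problems


# Made-up example: the same editor code accepts any robot described this way.
DEMO_JSON = """{"name": "demo_biped",
  "joints": [{"name": "neck_yaw", "lower": -1.0, "upper": 1.0},
             {"name": "r_knee", "lower": 0.0, "upper": 2.5}]}"""
model = RobotModel.from_json(DEMO_JSON)
print(validate_pose(model, {"neck_yaw": 1.5, "tail": 0.0}))
```

Supporting another robot then means writing another description file, not changing the editor, which matches the "you just need the robot model" point above.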

[See below a recent video of HRP-4.]

EG: Speaking of HRP-4, are HRP-4 and HRP-4C the same robot with just different exteriors? And are they both made by Kawada Industries?

KY: They are not the same. HRP-4C has 8 actuators in its head, so it can make facial expressions; HRP-4 has no such actuators. HRP-4 is made by Kawada. HRP-4C is special; it’s a collaboration. At AIST we designed the robot, but we have no factory to make robot hardware, so we collaborated with [Japanese robotics firms] Kawada and Kokoro. Kawada makes the body and Kokoro the head. You may know the Geminoid created by Professor [Hiroshi] Ishiguro of Osaka University. He's made several androids, and they are made by Kokoro. So we also asked Kokoro to develop the head for HRP-4C. They have a great deal of know-how in making humanlike skin, which is an important factor.

EG: Can you use the software to design other kinds of movements, such as tasks to help a person in a house?

KY: Yes. That’s our dream. We need more capabilities to do that, like recognizing a person and objects in the house, for example. That’s not part of this software. But this software lets you program any kind of movement. And we want more people to try to program the robot. Now only researchers can do that. But in our opinion that’s not good enough. We want other people—like CG creators, choreographers, anyone—to be able to create robot motions. And maybe that will lead to robotics applications not only in entertainment, but in industry and home applications too. Think about the iPhone. Many people want an iPhone because it has hundreds of nice software applications. Apple didn’t create all of those; they were developed by others, including some small developers, and they were able to have great success. So the iPhone is a platform—video game consoles and computers are similar in that sense—and we want to follow this business model.

EG: When will researchers and others be able to use the software?

KY: We just finished developing the software, and we haven’t delivered it to anybody yet. We have not decided what kind of license we will adopt, but we plan to make it available, perhaps by the end of next March.

EG: What about the HRP-4 and HRP-4C robots—who will be able to use them?

KY: If you buy one, you can use it. [Laughs.]

EG: So what is the goal of your group at AIST? Do you want to create humanoid robots to help other researchers who study robotics or do you want to develop robots that one day will actually be used in people’s homes and factories?

KY: Humanoid robots in homes and factories, as you mentioned, are our final goal. That’s our long, long-term goal. But in the meantime, we think we can contribute to other application areas in humanoid robotics. One is hobby and toy humanoid robots, which is a big area. The second is research platforms, like HRP-2 or HRP-4, that people in academia can use to develop new software or theories on how to control robots and how to make them perform tasks naturally. The third area is entertainment. That’s why we created the dance performance. We have also shown HRP-4C wearing a wedding dress at a fashion show and used it as a master of ceremonies. But our final goal is not just entertainment. For example, this new software can make any kind of motion. Maybe we could use it to make the robot perform tasks that help elderly people, or activities involving education or communication. There are many possibilities.

EG: AIST’s humanoids are among the most impressive. Where do you get inspiration for creating them? And do you always want to make them look more human or is it sometimes a good idea to make them look robotic?

KY: Good questions. I think it depends on the application. HRP-2, HRP-3, and HRP-4 look robotic. If a robot is just walking or doing some dangerous, dirty, or dull task, it doesn’t need a human face. But if we want to bring our robots to the entertainment industry, for example, then a more humanlike appearance is more distinctive and maybe more attractive. That’s why we created a female humanoid: when we decided to bring our humanoids into the entertainment industry, we thought a female type would be better.

AIST's humanoids HRP-2, 3, and 4. Photo: Impress/PC Watch

EG: Going back to the beginning of our conversation, about the HRP-4C dance, a lot of people have seen the video—why do you think people are so fascinated with this demonstration?

KY: I don’t know. I guess this was an ambitious trial in humanoid robotics. Dancing is something very human. You don't expect to see robots dancing like that with other dancers. Maybe people have seen smaller robots dancing, like Sony's QRIO or the Nao humanoid robot from Aldebaran. But it’s difficult for such small robots to collaborate or interact with humans. In our demonstration we wanted to show a realistic dance performance. And of course, we wanted it to be fun!

Images and videos: AIST

This interview has been condensed and edited.

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.