Most of the time, most of us have absolutely no idea what robots are thinking. Someone who builds and programs a robot does have some idea how that robot is supposed to act based on certain inputs, but as sensors get more ubiquitous and the software that manages them and synthesizes their data to make decisions gets more complex, it becomes increasingly difficult to get a sense of what’s actually going on. MIT is trying to address that issue, and they’re using augmented reality to do it.

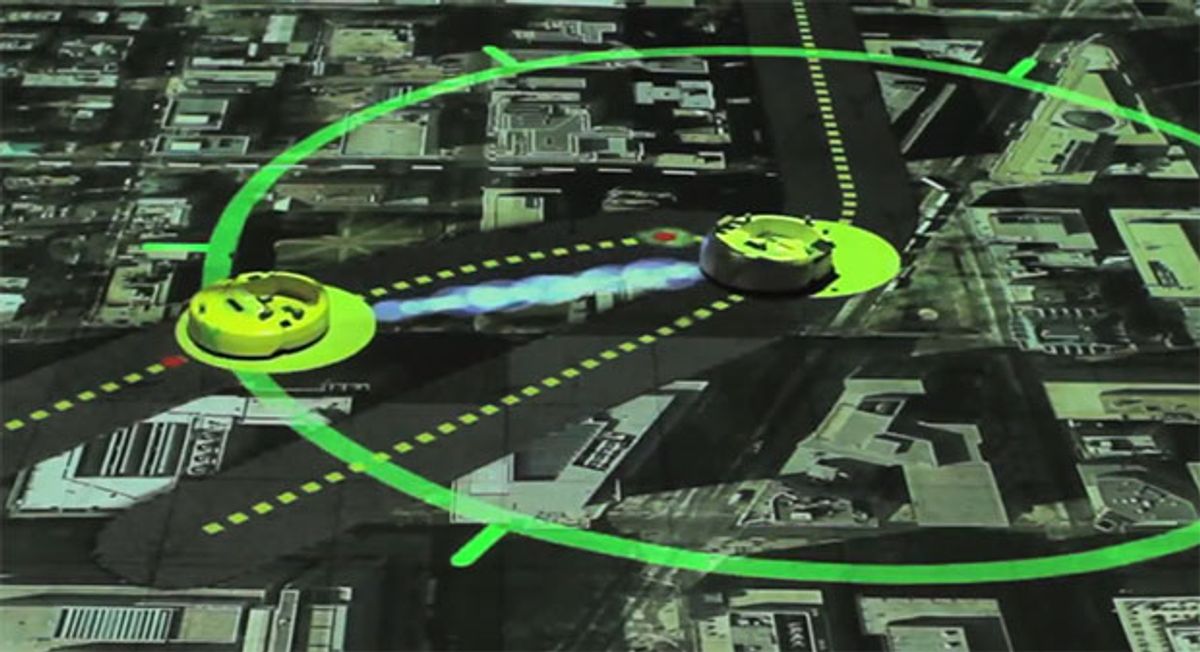

In an experiment, the researchers used their AR system to place obstacles—like human pedestrians—in the path of robots, which had to navigate through a virtual city. The robots had to detect the obstacles and then compute the optimal route to avoid running into them. As the robots did that, a projection system displayed their “thoughts” on the ground, so researchers could visualize them in real time. The “thoughts” consisted of colored lines and dots—representing obstacles, possible paths, and the optimal route—that were constantly changing as the robots and pedestrians moved.
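The post doesn't say which planner MIT's robots actually run. To make the "detect the obstacles, then compute the optimal route" loop concrete, here is a minimal sketch using A* search. It assumes a 4-connected occupancy grid with unit-cost moves and `#` marking an obstacle. The function name `plan_route` and the grid encoding are hypothetical. Besides the route, it returns every cell the search expanded. That set loosely resembles the "possible paths" layer in the projection, and a visualizer could draw it in a different color from the final route.

```python
import heapq
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]  # (row, col) on an occupancy grid


def plan_route(grid: List[str], start: Cell, goal: Cell) -> Tuple[Optional[List[Cell]], Set[Cell]]:
    """Hypothetical A* planner on a 4-connected grid where '#' is an obstacle.

    Returns (path, explored): the shortest start-to-goal path (None if the goal
    is unreachable) and every cell the search expanded, i.e. what the planner
    "considered" on the way to its answer.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible and consistent for unit-cost 4-way moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    best_g: Dict[Cell, int] = {start: 0}
    explored: Set[Cell] = set()

    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell in explored:
            continue  # stale entry; a cheaper route to this cell was already expanded
        explored.add(cell)
        if cell == goal:
            # Follow parent pointers back to the start to rebuild the route.
            path = [cell]
            while path[-1] != start:
                path.append(came_from[path[-1]])
            return path[::-1], explored
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (ng + h(nxt), ng, nxt))
    return None, explored


# A tiny "city" block: '#' cells stand in for detected obstacles.
city = [
    ".....",
    ".###.",
    ".#...",
    ".#.#.",
    "...#.",
]
path, explored = plan_route(city, (0, 0), (4, 4))
print(path)
# [(0, 0), (0, 1), (0, 2), (0, 3), (0, 4), (1, 4), (2, 4), (3, 4), (4, 4)]
```

When an obstacle moves, the simplest approach under these assumptions is to update the grid and call the planner again. That would make both the explored set and the route shift from frame to frame, much like the constantly changing lines and dots described above.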

“Robustify” and “cyber-physical systems” are both new to me. Impressive! Also impressive: iRobot Creates still powering along.

On some level, it should be possible to trace every decision a robot makes back to some line of code. This is part of what's so nice about robots: there's a sense that everything they do is both understandable and controllable. It's different in practice, of course, but the idea here is that by seeing in real time when and how a robot decides to take the actions that it does, researchers will find it a lot simpler to debug the robot and get it doing things that reliably make sense.

As for the other thing they talked about in the vid, it's a big floor projection that can be used to test vision systems. From the press release:

In addition to projecting a drone’s intentions, the researchers can also project landscapes to simulate an outdoor environment. In test scenarios, the group has flown physical quadrotors over projections of forests, shown from an aerial perspective to simulate a drone’s view, as if it were flying over treetops. The researchers projected fire on various parts of the landscape, and directed quadrotors to take images of the terrain — images that could eventually be used to “teach” the robots to recognize signs of a particularly dangerous fire.

This is marginally more useful, if somewhat less exciting, than bringing a bunch of plants into your lab and then setting them on fire, although I'm sure that's been done before, intentionally or not.

Via MIT

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.