Massimiliano “Max” Versace traces the birth date of his startup to when NASA came knocking in 2010. The U.S. space agency had learned from an IEEE Spectrum article about his military-funded Boston University research on software for a brain-inspired microprocessor. NASA wanted to see whether Versace and his colleagues could help develop a software controller that would let robotic rovers autonomously explore Mars.

NASA’s vision proved no easy challenge. Mars rovers have limited computing, communications, and power resources. NASA engineers wanted artificial intelligence that could navigate different environments using only images from a low-end camera. More important, the AI software would have to do the job while running on a single GPU chip. More than six years later, Versace’s startup, called Neurala, has been testing an updated prototype of the AI “brain” it developed for NASA, with the goal of rolling it out to select customers within a matter of months.

Versace likes to say that Neurala, which raised $14 million in a recent series A funding round, developed its AI technology for Mars but now wants to bring it down to Earth. Like NASA’s Mars rovers, fast-flying drones and self-driving cars could use onboard AI to quickly recognize objects in their surroundings so that they can make decisions accordingly. The startup has already commercialized certain slices of its AI software through licensing deals and contracts with drone companies and with a major automaker eyeing the future of autonomous vehicles.

Neurala’s vision for an AI brain relies on the latest advances in deep learning, in which artificial neural networks train themselves to perform tasks such as object recognition by filtering the relevant data through many layers of processing. Tech giants such as Google, Amazon, and Facebook, along with a host of AI startups, offer deep-learning tool kits and online services that run on powerful cloud computing servers. Neurala’s AI, by contrast, can operate on the low-power chips found in smartphones. Versace declined to go into specifics, but noted that Neurala’s approach focuses on edge computing, which relies on onboard hardware, rather than on centralized systems.

“You have to be really lean and innovative in the way you implement stuff at the edge as opposed to on the cloud,” Versace explains. “We want to provide the full, end-to-end solution to customers, including navigation, collision avoidance, and image processing, all small enough to run on a device versus doing the processing on a server.”

The startup’s focus on fast, efficient deep learning through edge computing may explain why Versace does not sound very worried about the competition so far. Neurala’s secret sauce is primarily its “hardware agnostic” software, which can run on a variety of industry-standard processors from the usual suspects, such as ARM, Nvidia, and Intel.

Some early Neurala customers have licensed the company’s technology and adapted it to their own needs through Neurala’s software development kit. One example is Teal Drones, which uses Neurala’s onboard software in its speedy racing drones. The drone industry has proven to be Neurala’s biggest revenue generator so far; the startup also has a multiyear deal with Parrot, one of the world’s largest drone companies.

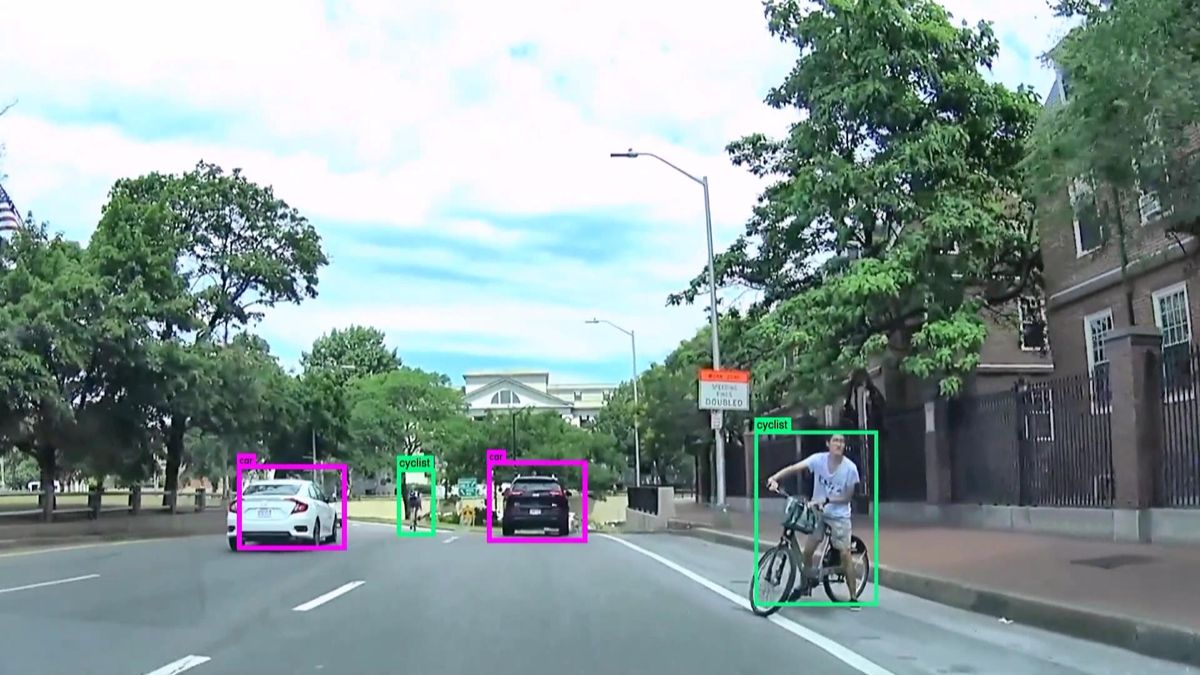

Another Neurala customer is the major automaker interested in developing self-driving cars. Roger Matus, vice president of products and markets at Neurala, could not yet reveal the automaker’s name, but said that Neurala’s solution would help cars identify objects such as pedestrians or street signs in real time. The technology is currently being tested in production-model vehicles. A third category of applications will involve Motorola Solutions, which plans to use Neurala’s AI in a service for emergency responders such as police and fire departments.

Matus said Neurala is also eager to apply its deep-learning AI to robot toys and perhaps household robots. “Imagine a home robot that can recognize who is giving it a command, and when you say, ‘Get me a beer,’ it will know what a beer is,” he said, adding that such applications require fast, smooth interaction with users, something that cloud systems can’t always guarantee.

Much has happened in the decade since Neurala’s earliest origins. In 2006, during his Ph.D. research, Versace was pondering how hardware limitations posed a major stumbling block for AI. That led him and his colleagues, Anatoly Gorshechnikov and Heather Ames, to identify GPUs as a major candidate for enabling future AI. They patented the use of GPUs to support neural networks and created Neurala as a home for the patent. “It’s been a hell of a ride going from writing down a patent on a paper napkin outside a restaurant to deploying the technology to customers,” Versace says.

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications, including Scientific American, Discover, and Popular Science. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.