The Truth About Terahertz

Anyone hoping to exploit this promising region of the electromagnetic spectrum must confront its very daunting physics

UPDATE 5 MARCH 2024: In terahertz technology, everything old is news again.

“Not that much has changed in the past 10 or 15 years,” says Peter H. Siegel. Siegel, an IEEE Life Fellow, was founding editor-in-chief of IEEE Transactions on Terahertz Science and Technology and of the IEEE Journal of Microwaves. “There have been some advances, but most of the terahertz regime is still in the ‘research science’ realm as opposed to actual commercial applications, and is still equally divided between optical techniques (laser downconverting) and microwave techniques (radio upconverting).”

According to Siegel, a Senior Research Scientist Emeritus at the California Institute of Technology and Principal Engineer Emeritus with the Jet Propulsion Laboratory, “The applications that were hyped in those days [when Carter Armstrong debunked the breathless proposals for what terahertz could do] were from people who really didn’t understand the field at all and were treating it as if it were some magic new device or frequency that could do things it really never could do. Everybody in the field knew it couldn’t do those things.”

The applications for which terahertz is actually used include longtime staples such as molecular line spectroscopy in astrophysics, Earth science, and planetary science, which researchers rely on to study quantum effects and almost anything they want to know about molecular motion and how molecules interact. “It will remain a key application, I think, forever, because it is paired to the energy levels and the scales at which the phenomena occur,” says Siegel. “Terahertz is the only frequency where you get the information about molecular interactions that tell you all about quantum states, temperature, pressure, and [spectral signature] fingerprinting, et cetera. But it all has to be done at low pressure.”

These days, terahertz is also being used to offer very-wide-bandwidth, high-data-rate point-to-point communications for computer backhaul systems and high-video-rate systems. Narrowly focused beams transmit signals over short distances—say, a few hundred meters at most. “And you can do it without having to get FCC approval for operation in a band which is already overcommitted,” Siegel notes. “It’s niche, but you’ll see a lot of papers on that these days in terahertz journals.”—IEEE Spectrum

Original article from 17 August 2012 follows:

Wirelessly transfer huge files in the blink of an eye! Detect bombs, poison gas clouds, and concealed weapons from afar! Peer through walls with T-ray vision! You can do it all with terahertz technology—or so you might believe after perusing popular accounts of the subject.

The truth is a bit more nuanced. The terahertz regime is that promising yet vexing slice of the electromagnetic spectrum that lies between the microwave and the optical, corresponding to frequencies of about 300 billion hertz to 10 trillion hertz (or if you prefer, wavelengths of 1 millimeter down to 30 micrometers). This radiation does have some uniquely attractive qualities: For example, it can yield extremely high-resolution images and move vast amounts of data quickly. And yet it is nonionizing, meaning its photons are not energetic enough to knock electrons off atoms and molecules in human tissue, which could trigger harmful chemical reactions. The waves also stimulate molecular and electronic motions in many materials—reflecting off some, propagating through others, and being absorbed by the rest. These features have been exploited in laboratory demonstrations to identify explosives, reveal hidden weapons, check for defects in tiles on the space shuttle, and screen for skin cancer and tooth decay.
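The band edges and the nonionizing claim both follow from two textbook relations, λ = c/f and E = hf. The sketch below is a minimal illustration, not part of the original analysis: it converts a few frequencies in the band to free-space wavelength and photon energy. The ~10 eV ionization figure is an assumed order-of-magnitude reference, not a number from the article.

```python
# Physical constants (exact SI values for c, h, and the elementary charge).
C = 299_792_458.0             # speed of light, m/s
H = 6.626_070_15e-34          # Planck constant, J*s
E_CHARGE = 1.602_176_634e-19  # joules per electron-volt

def wavelength_um(freq_hz: float) -> float:
    """Free-space wavelength in micrometers (lambda = c / f)."""
    return C / freq_hz * 1e6

def photon_energy_mev(freq_hz: float) -> float:
    """Photon energy E = h * f, in milli-electron-volts."""
    return H * freq_hz / E_CHARGE * 1e3

# Assumption: ~10 eV as a rough order of magnitude for molecular ionization energies.
TYPICAL_IONIZATION_EV = 10.0

for f in (300e9, 1e12, 10e12):
    e_mev = photon_energy_mev(f)
    print(f"{f / 1e12:5.1f} THz: {wavelength_um(f):7.1f} um, {e_mev:6.2f} meV, "
          f"~{TYPICAL_IONIZATION_EV * 1e3 / e_mev:,.0f}x below 10 eV")
```

Even at the 10-THz upper edge, a photon carries only about 41 meV, a couple of hundred times too little to ionize under this assumed threshold.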

But the goal of turning such laboratory phenomena into real-world applications has proved elusive. Legions of researchers have struggled with that challenge for decades.

The past 10 years have seen the most intense work to tame and harness the power of the terahertz regime. I first became aware of the extent of these efforts in 2007, when I cochaired a U.S. government panel that reviewed compact terahertz sources. The review’s chief goal was to determine the state of the technology. We heard from about 30 R&D teams, and by the end we had a good idea of where things stood. What the review failed to do, though, was give a clear picture of the many challenges of exploiting the terahertz regime. What I really wanted were answers to questions like, What exactly are terahertz frequencies best suited for? And how demanding are they to produce, control, apply, and otherwise manipulate?

So I launched my own investigation. I studied the key issues in developing three of the applications that have been widely discussed in defense, security, and law-enforcement circles: communication and radar, identification of harmful substances from a distance, and through-wall imaging. I also looked at the 20 or so compact terahertz sources covered in the 2007 review, to see if they shared any performance challenges, despite their different designs and features. I recently updated my findings, although much of what I concluded then still holds true now.

My efforts aren’t meant to discourage the pursuit of this potentially valuable technology—far from it. But there are some unavoidable truths that anyone working with this technology inevitably has to confront. Here’s what I found.

Although terahertz technology has been much in the news lately, the phenomenon isn’t really new. It just went by different names in the past—near millimeter, submillimeter, extreme far infrared. Since at least the 1950s, researchers have sought to tap its appealing characteristics. Use of this spectral band by early molecular spectroscopists, for example, laid the foundation for its application to ground-based radio telescopes, such as the Atacama Large Millimeter/submillimeter Array, in Chile. Over the years a few other niche uses have emerged, most notably space-based remote sensing. In the 1970s, space scientists began using far-infrared and submillimeter-wave spectrometers for investigating the chemical compositions of the interstellar medium and planetary atmospheres. One of my favorite statistics, which comes from astronomer David Leisawitz at NASA Goddard Space Flight Center, is that 98 percent of the photons released since the big bang reside in the submillimeter and far-infrared bands, a fact that observatories like the Herschel Space Observatory are designed to take advantage of. Indeed, it’s safe to say that the current state of terahertz technology rests in good measure on advances in radio astronomy and space science.

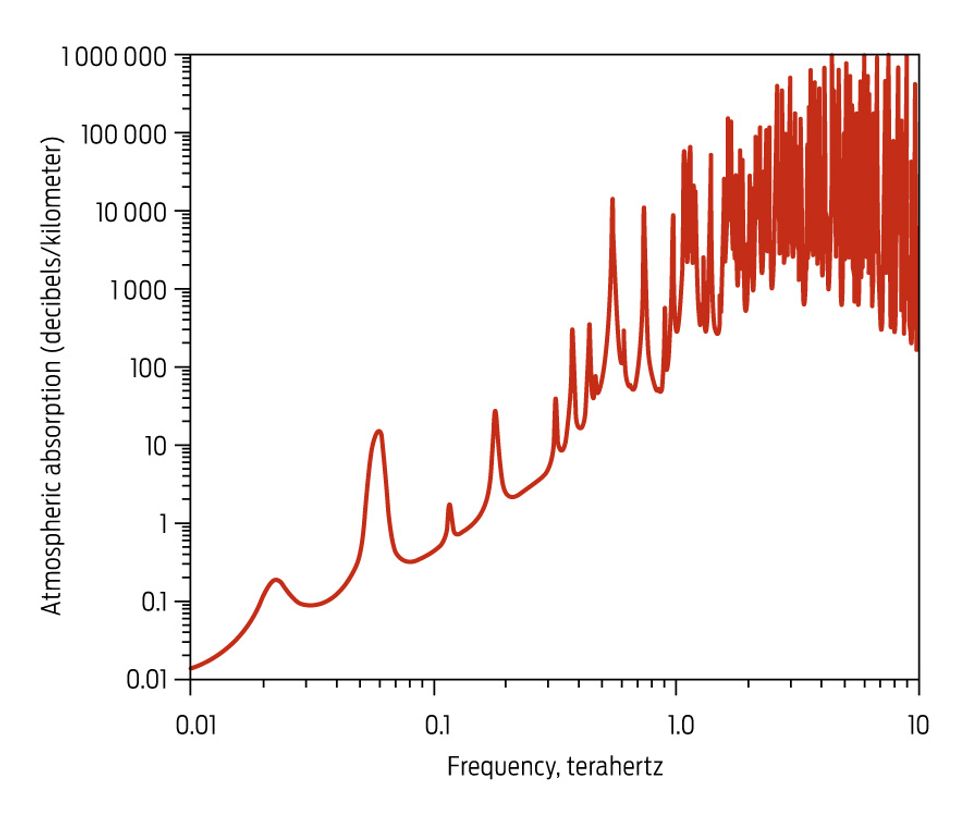

But orbiting terahertz instruments have a big advantage over their terrestrial counterparts: They’re in space! Specifically, they operate in a near-vacuum and don’t have to contend with a dense atmosphere, which absorbs, refracts, and scatters terahertz signals. Nor do they have to operate in inclement weather. There is no simple way to get around the basic physics of the situation. You can operate at higher altitudes, where the air is thinner and drier, but many of the envisioned terahertz applications are for use on the ground. You can boost the signal’s strength in hopes that enough radiation will get through at the receiving end, but at some point, that’s just not practical, as we’ll see.

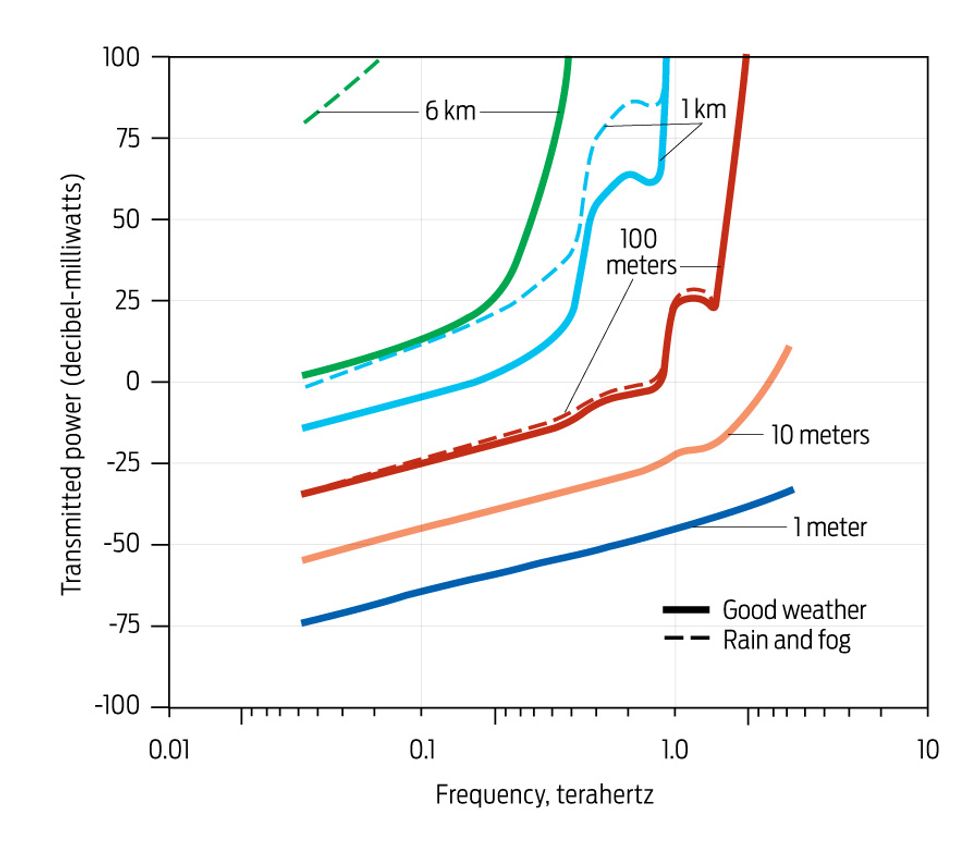

Obviously, atmospheric attenuation poses a problem for using terahertz frequencies for long-range communication and radar. But how big a problem? To answer that question, I compared different scenarios for horizontal transmission at sea level—good weather, bad weather, a range of distances (from 1 meter up to 6 kilometers), and specific frequencies between 35 gigahertz and 3 terahertz—to determine how much the signal strength degrades as conditions vary. For short-range operation—that is, for signals traveling 10 meters or less—the effects of the atmosphere and bad weather don’t really come into play.

Try to send anything farther than that and you hit what I call the “terahertz wall”: No matter how much you boost the signal, essentially nothing gets through. A 1-watt signal with a frequency of 1 THz, for instance, will dwindle to nothing after traveling just 1 km. Well, not quite nothing: It retains about 10⁻³⁰ of its original strength. So even if you were to increase the signal’s power to the ridiculously high level of, say, a petawatt, and then somehow manage to propagate it without ionizing the atmosphere in the process, it would be reduced to mere femtowatts by the time it reached its destination. Needless to say, there are no terahertz sources capable of producing anything approaching a petawatt; the closest is a free-electron laser, which has an output in the low tens of megawatts and isn’t exactly a field-deployable device. (For comparison, the output power of today’s compact sources spans the 1-microwatt to 1-W range—more on that later.) And that’s under ordinary atmospheric conditions. Rain and fog will deteriorate the signal even more. Attenuation that extreme all but rules out using the terahertz region for long-range ground-based communication and radar.
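The petawatt-to-femtowatts example implies that a 1-km path passes only about 10⁻³⁰ of the transmitted power, or roughly 300 dB of loss. A minimal sketch, assuming a uniform atmosphere in which absorption in decibels grows linearly with distance (Beer-Lambert behavior) and ignoring beam spreading, antenna gain, and weather, shows why raising the transmit power barely matters. The 300 dB/km rate is inferred from the article's example, not a measured value.

```python
import math

def fraction_to_db(fraction: float) -> float:
    """Loss in dB corresponding to a surviving power fraction."""
    return -10.0 * math.log10(fraction)

def received_power_w(p_tx_w: float, loss_db_per_km: float, distance_km: float) -> float:
    """Power left after a uniform absorbing path; dB loss scales linearly with distance.
    Ignores beam spreading, antenna gains, and weather (all assumptions)."""
    return p_tx_w * 10.0 ** (-loss_db_per_km * distance_km / 10.0)

# Inferred from the text (petawatt in, femtowatts out over 1 km), not measured data.
LOSS_DB_PER_KM = fraction_to_db(1e-30)   # 300 dB/km

print(f"assumed loss: {LOSS_DB_PER_KM:.0f} dB/km")
for p_tx in (1.0, 1e15):                 # 1 W and a (fictional) petawatt source
    for d_km in (0.01, 0.1, 1.0):
        p_rx = received_power_w(p_tx, LOSS_DB_PER_KM, d_km)
        print(f"P_tx = {p_tx:.0e} W, d = {d_km * 1e3:6.0f} m -> {p_rx:.1e} W")
```

Under this assumed rate a 10-m hop loses about 3 dB, a manageable penalty, while every additional 100 m costs another 30 dB; fifteen orders of magnitude of extra transmit power buy only a femtowatt at 1 km.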

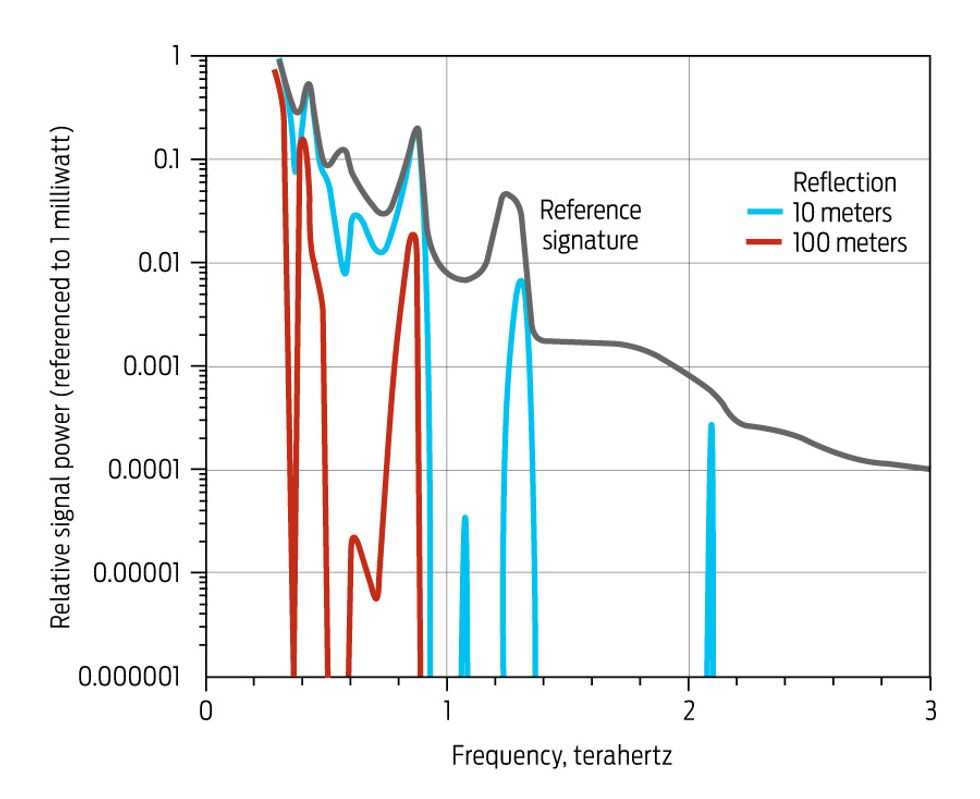

Another potentially invaluable and much-hyped use for terahertz waves is identifying hazardous materials from afar. In their gaseous phase, many natural and man-made molecules, including ammonia, carbon monoxide, hydrogen sulfide, and methanol, absorb photons when stimulated at terahertz frequencies, and those absorption bands can serve as chemical fingerprints. Even so, outside the carefully calibrated conditions of the laboratory or the sparse environment of space, complications arise.

Let’s say you’re a hazmat worker and you’ve received a report about a possible sarin gas attack. Obviously, you’ll want to keep your distance, so you pull out your trusty portable T-wave spectrometer, which works something like the tricorder in “Star Trek.” It sends a directed beam of terahertz radiation into the cloud; the gas absorbs the radiation with a characteristic spectral frequency signature. Unlike with communications or radar, which would probably use a narrowband signal, your spectrometer sends out a broadband signal, from about 300 GHz to 3 THz. Of course, to ensure that the signal returns to your spectrometer, it will need to reflect off something beyond the gas cloud, like a building, a container, or even some trees. But as in the case above, the atmosphere diminishes the signal’s strength as it travels to the cloud and then back to your detector. The atmosphere also washes out the spectral features of the cloud because of an effect known as pressure broadening. Even at a distance of just 10 meters, such effects would make it difficult, if not impossible, to get an accurate reading. Yet another wrinkle is that the chemical signatures of some materials—table sugar and some plastic explosives, for instance—are so remarkably nondescript as to make distinguishing one from another impossible.
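Pressure broadening can be illustrated with a toy model: treat each absorption line as a Lorentzian whose half-width grows in proportion to pressure, then check whether two nearby lines remain distinguishable. The broadening coefficient (3 MHz per torr), the 1-GHz line spacing, and the two pressures are assumed, representative values chosen for illustration; the sketch also ignores Doppler broadening, which dominates at very low pressure.

```python
import math

# Assumed, representative values; real coefficients vary by molecule, line, and temperature.
BROADENING_HZ_PER_TORR = 3e6   # half-width growth per torr of pressure
LINE_SPACING_HZ = 1e9          # two hypothetical lines 1 GHz apart

def lorentzian(f_hz: float, center_hz: float, hwhm_hz: float) -> float:
    """Area-normalized Lorentzian line shape with half-width hwhm_hz."""
    return (hwhm_hz / math.pi) / ((f_hz - center_hz) ** 2 + hwhm_hz ** 2)

def two_line_absorption(f_hz: float, hwhm_hz: float) -> float:
    """Summed absorption of two equal lines at offsets 0 and LINE_SPACING_HZ."""
    return lorentzian(f_hz, 0.0, hwhm_hz) + lorentzian(f_hz, LINE_SPACING_HZ, hwhm_hz)

def midpoint_to_peak_ratio(pressure_torr: float) -> float:
    """Absorption halfway between the lines divided by absorption at a line center.
    Well below 1: two distinct features. At or above 1: one blended hump."""
    hwhm = BROADENING_HZ_PER_TORR * pressure_torr
    return two_line_absorption(LINE_SPACING_HZ / 2, hwhm) / two_line_absorption(0.0, hwhm)

for p in (0.1, 760.0):   # low-pressure lab cell vs. sea-level air
    print(f"{p:6.1f} torr: half-width {BROADENING_HZ_PER_TORR * p / 1e6:8.1f} MHz, "
          f"midpoint/peak = {midpoint_to_peak_ratio(p):.3g}")
```

In this toy model the two lines are cleanly separated at 0.1 torr, but at sea-level pressure their half-widths exceed the spacing and the pair merges into a single hump, which is how a sharp laboratory fingerprint washes out in open air.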

By now, you won’t be surprised to hear that through-wall imaging, another much-discussed application of terahertz radiation, also faces major hurdles. The idea is simple enough: Aim terahertz radiation at a wall of some sort, with an object on the other side. Terahertz waves can penetrate some—but not all—materials that are opaque in visible light. So depending on what the wall is made of and how thick it is, some waves will get through, reflect off the object, and then make their way back through the wall to the source, where they can reveal an image of the hidden object.

Realizing that simple idea is another matter. First, let’s assume that the object itself doesn’t scatter, absorb, or otherwise degrade the signal. Even so, the quality of the image you get will depend largely on what your wall is made of. If the wall is made of metal or some other good conductive material, you won’t get any image at all. If the wall contains any of the common insulating or construction materials, you might still get serious attenuation, depending on the material and its thickness as well as the frequency you are using. For example, a 1-THz signal passing through a quarter-inch-thick piece of plywood would have 0.0015 percent of the power of a 94-GHz signal making the same journey. And if the material is damp, the loss is even higher. (Such factors affect not just imaging through barriers but also terahertz wireless networks, which would require at the least a direct line of sight between the source and the receiver.) So your childhood dream of owning a pair of “X-ray specs” probably isn’t going to happen any time soon.

It’s true that some researchers have successfully demonstrated through-wall imaging. In these demonstrations, the radiation sources emitted impulses of radiation across a wide range of frequencies, including terahertz. Given what we know about attenuation at the higher frequencies, though, some scientists who’ve studied the results believe it’s highly likely that the imaging occurred not in the terahertz region but rather at the lower frequencies. And if that’s the case, then why not just use millimeter-wave imagers to begin with?

Before leaving the subject of imaging, let me add one last thought on terahertz for medical imaging. Some of the more creative potential uses I’ve heard include brain imaging, tumor detection, and full-body scanning that would yield much more detailed pictures than any existing technology and yet be completely safe. But the reality once again falls short of the dream. Frank De Lucia, a physicist at Ohio State University, in Columbus, has pointed out that a terahertz signal will decrease in power to 0.0000002 percent of its original strength after traveling just 1 mm in saline solution, which is a good approximation for body tissue. (Interestingly, the dielectric properties of water, not its conductive ones, are what cause water to absorb terahertz frequencies; in fact, you exploit dielectric heating, albeit at lower frequencies, whenever you zap food in your microwave oven.) For now at least, terahertz medical devices will be useful only for surface imaging of conditions such as skin cancer and tooth decay, and for laboratory tests on thin tissue samples.
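De Lucia's saline figure can be converted into an absorption coefficient and a penetration depth with the Beer-Lambert law, T = exp(-αd). A minimal sketch, assuming a homogeneous medium and a single pass, and ignoring reflection at the tissue surface:

```python
import math

# From the text: 0.0000002 percent of the power survives 1 mm of saline.
SURVIVING_FRACTION = 0.0000002 / 100   # = 2e-9 as a fraction
PATH_MM = 1.0

# Beer-Lambert: T = exp(-alpha * d)  =>  alpha = -ln(T) / d
alpha_per_mm = -math.log(SURVIVING_FRACTION) / PATH_MM
penetration_depth_um = 1e3 / alpha_per_mm              # depth where power drops to 1/e
loss_db_per_mm = -10.0 * math.log10(SURVIVING_FRACTION) / PATH_MM

def depth_for_fraction_mm(fraction: float) -> float:
    """Depth (mm) at which the given fraction of power remains, under the same model."""
    return -math.log(fraction) / alpha_per_mm

print(f"alpha ~ {alpha_per_mm:.1f} per mm, 1/e depth ~ {penetration_depth_um:.0f} um, "
      f"loss ~ {loss_db_per_mm:.0f} dB/mm")
print(f"1% of the power remains after ~{depth_for_fraction_mm(0.01):.2f} mm")
```

Under these assumptions the power falls to 1/e within about 50 µm, and a reflection measurement of a feature only 0.1 mm deep would have to survive a round trip costing roughly 17 dB.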

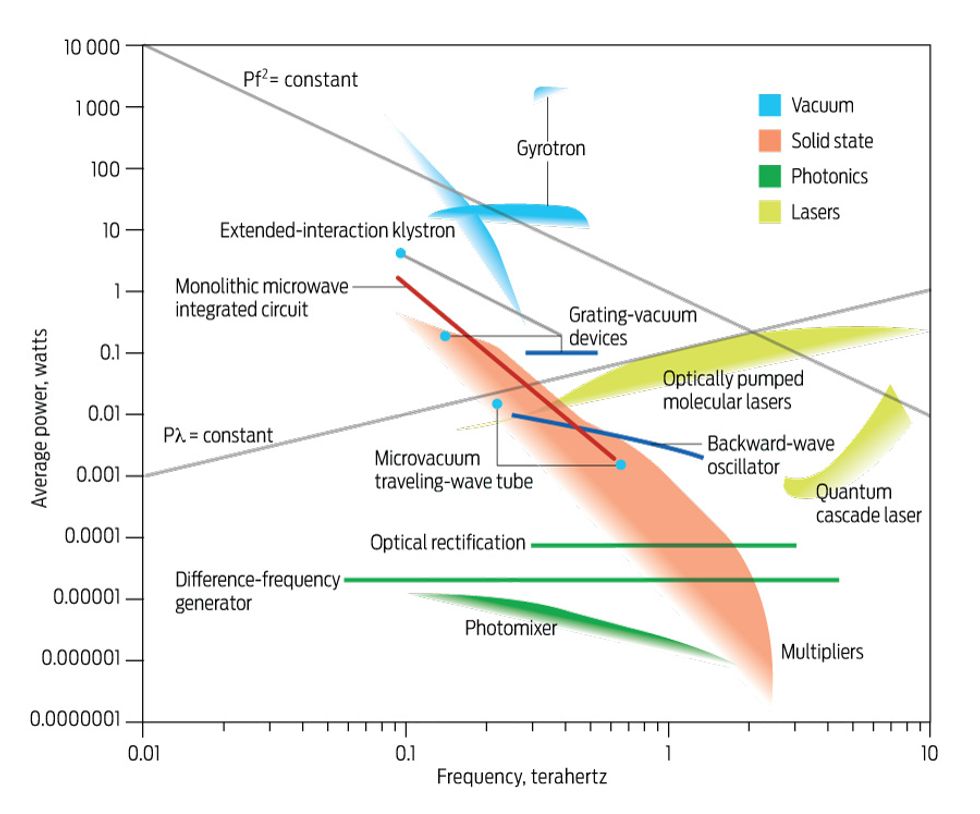

So those are some of the basic challenges of exploiting the terahertz regime. The physics is indeed daunting, but that hasn’t prevented developers from continuing to pursue lots of different terahertz devices for those and other applications. So the next thing I looked at was the performance of systems capable of generating radiation at terahertz frequencies. I decided to focus on these sources—and not detectors, receivers, control devices, and so on—because while those other components are certainly critical, people in the field pretty much agree that what’s held up progress is the lack of appropriate sources.

There’s a very good reason for the shortage of compact terahertz sources: They’re really hard to build! For many applications, the source has to be powerful enough to overcome extreme signal attenuation, efficient enough to avoid having to wheel around your own power generator, and small enough to be deployed in the field without having to be toted around on a flatbed truck. (For some applications, the source’s spectral purity, tunability, or bandwidth is more important, so a lower power is acceptable.) The successful space-based instruments mentioned earlier merely detect terahertz radiation that celestial bodies and events naturally emit; although some of those instruments use a low-power source for improved sensitivity, they don’t as yet attempt to transmit at terahertz frequencies.

The government review in 2007 loosely defined a compact terahertz source as having an average output power in the 1-mW to 1-W range, operating in the 300-GHz to 3-THz frequency band, and being more or less “portable.” (We chose average power rather than peak power because, ultimately, it’s the average power that counts in nearly all of the envisioned applications.) In addition, we asked that the sources have a conversion efficiency of at least 1 percent—for every 100 W of input power, the source would produce a signal of 1 W or more. Even that modest goal, it turns out, is quite challenging.

The 2007 review included about 20 terahertz sources. I don’t have room here to describe how each of these devices works, but in general they fall into three broad categories: vacuum (including backward-wave oscillators, klystrons, grating-vacuum devices, traveling-wave tubes, and gyrotrons), solid state (including harmonic frequency multipliers, transistors, and monolithic microwave integrated circuits), and laser and photonic (including quantum cascade lasers, optically pumped molecular lasers, and a variety of optoelectronic RF generators). Vacuum devices and lasers exhibited the highest average power at the lower and upper frequencies, respectively. Solid-state devices came next, followed by photonic devices. To be fair, calling a gyrotron a compact source is quite a stretch, and while photonic sources can produce high peak power, ranging from hundreds of watts to kilowatts, they also require high optical-drive power.

Despite their considerable design differences and some variations in performance, these three classes of terahertz technology have similar challenges. One significant issue is their uniformly low conversion efficiency, which is typically much less than 1 percent. So to get a 1-W signal, you might need to start with kilowatts of input power, or greater. Other everyday electronic and optical devices are, by comparison, far more efficient. The RF power amplifier in a typical 2-GHz smartphone, for example, operates at around 50 percent efficiency. A commercial red diode laser can convert electrical power to light with an efficiency of more than 30 percent.
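The efficiency gap is easiest to see as the input power and waste heat required for a fixed 1-W output. In this minimal sketch, the 1 percent figure is the 2007 review's target and the 30 and 50 percent figures are the red diode laser and smartphone amplifier quoted above; the 0.1 and 0.01 percent entries are assumed stand-ins for "much less than 1 percent."

```python
def input_power_w(output_w: float, efficiency: float) -> float:
    """Electrical (or optical drive) power needed for a given output power."""
    return output_w / efficiency

def waste_heat_w(output_w: float, efficiency: float) -> float:
    """Everything not converted to output must be removed as heat."""
    return input_power_w(output_w, efficiency) - output_w

cases = {
    "smartphone PA (50%)": 0.50,
    "red diode laser (30%)": 0.30,
    "2007 review target (1%)": 0.01,
    "assumed THz source (0.1%)": 0.001,    # illustrative value
    "assumed THz source (0.01%)": 0.0001,  # illustrative value
}
for label, eta in cases.items():
    print(f"{label:28s} in: {input_power_w(1.0, eta):9.1f} W   heat: {waste_heat_w(1.0, eta):9.1f} W")
```

At 0.1 percent efficiency, a 1-W terahertz signal means a kilowatt of input and nearly a kilowatt of heat to dump, versus about 1 W of heat for the smartphone amplifier.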

That low efficiency combined with the devices’ small size leads to another problem: extremely high power densities (the amount of power the devices must handle per unit area) and current densities (the amount of current they must handle per unit area). For the vacuum and solid-state devices, the power densities were in the range of several megawatts per square centimeter. Suppose you want to use a conventional vacuum traveling-wave tube, or TWT, that’s been scaled up to operate at 1 THz. Such an apparatus would require you to focus an electron beam with a power density of multiple megawatts per square centimeter through an evacuated circuit having an inner diameter of 40 µm—about half the diameter of a human hair. (The solar radiation at the surface of the sun, by contrast, has a power density of only about 6 kilowatts per square centimeter.) A terahertz transistor, with its nanometer features, operates at similarly high power density levels. And all of the electrical and photonic devices examined, even the quantum cascade laser, require high current densities, ranging from kiloamperes per square centimeter to multimega-amperes per square centimeter. Incidentally, the upper portion of that current density range is typical of what you’d see in the pulsed-power electrical generators used for nuclear effects testing, among other things.
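To put "megawatts per square centimeter" in perspective, one can ask how much beam power passes through the 40-µm tunnel at such densities. In this minimal sketch, the tunnel diameter and the ~6 kW/cm² solar-surface figure come from the text, while the 1, 3, and 5 MW/cm² densities are illustrative values for "multiple megawatts per square centimeter."

```python
import math

def circle_area_cm2(diameter_um: float) -> float:
    """Cross-sectional area of a circular aperture, in cm^2 (1 um = 1e-4 cm)."""
    radius_cm = diameter_um * 1e-4 / 2
    return math.pi * radius_cm ** 2

TUNNEL_DIAMETER_UM = 40.0        # from the text
SOLAR_SURFACE_W_PER_CM2 = 6e3    # ~6 kW/cm^2, from the text

area = circle_area_cm2(TUNNEL_DIAMETER_UM)
print(f"tunnel cross-section: {area:.3e} cm^2")
for density_mw in (1, 3, 5):     # illustrative densities, MW/cm^2
    density_w = density_mw * 1e6
    print(f"{density_mw} MW/cm^2 -> {density_w * area:5.1f} W through the tunnel, "
          f"{density_w / SOLAR_SURFACE_W_PER_CM2:,.0f}x the solar surface")
```

A beam of only a few tens of watts, modest by vacuum-tube standards, reaches hundreds of times the solar-surface power density once it is squeezed through an aperture this small.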

Compact electrical and optical devices can handle conditions like that, but you’re asking for trouble—if the device isn’t adequately cooled, the internal power dissipation minimized, and the correct materials used, it can quickly melt or vaporize or otherwise break down. And of course, eventually you reach an upper limit, beyond which you simply can’t push the power density and current density any higher.

As a device physicist, I was naturally interested in the relationship between the sources’ output power and their frequency, what’s known as power-frequency scaling. When you plot the device’s average power along the y-axis and the frequency along the x, you want to see the flattest possible curve. Such flatness means that as the frequency increases, the output power remains steady or at least does not plummet. In typical radio-frequency devices, such as transistors, solid-state diodes, and microwave vacuum tubes, the power tends to fall as the inverse of the frequency squared. In other words, if you double the frequency, the output power drops by a factor of four.

Most of the electrical terahertz sources we reviewed in 2007, however, had much steeper power-frequency curves that basically fell off into the abyss as they were pushed into the terahertz range. In general, the power scaled as the inverse of the frequency to the fourth, or worse, which meant that as the frequency doubled, the output power dropped by a factor of 16. So a device that could generate several watts at 100 GHz was capable of only a few hundred microwatts as it went to 1 THz. Lasers, too, fell off in power in the terahertz region faster than you would expect.
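The two scaling laws can be compared directly by anchoring each at a reference point, P(f) = P_ref (f_ref / f)^n. A minimal sketch, assuming 3 W at 100 GHz as a stand-in for "several watts"; n = 2 is the conventional RF trend and n = 4 the steeper one reported for the 2007 sources.

```python
def scaled_power_w(p_ref_w: float, f_ref_hz: float, f_hz: float, exponent: float) -> float:
    """Output power under P ∝ 1/f**exponent, anchored at (f_ref_hz, p_ref_w)."""
    return p_ref_w * (f_ref_hz / f_hz) ** exponent

P_REF_W, F_REF_HZ = 3.0, 100e9   # assumed anchor: "several watts" at 100 GHz

for n in (2, 4):
    doubling = scaled_power_w(1.0, 1.0, 2.0, n)   # fraction kept when frequency doubles
    at_1thz = scaled_power_w(P_REF_W, F_REF_HZ, 1e12, n)
    print(f"n = {n}: doubling f keeps {doubling:.4f} of the power; "
          f"3 W at 100 GHz becomes {at_1thz * 1e3:.2f} mW at 1 THz")
```

The n = 4 case lands at about 300 µW, in line with the "few hundred microwatts" above; under the conventional n = 2 trend the same device would still deliver tens of milliwatts.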

Given what I mentioned earlier about extreme signal attenuation in the terahertz region and the sources’ low conversion efficiencies, this precipitous drop-off in power represents yet another high hurdle to commercializing the technology.

Fine, you say, but can’t all of these problems be attributed to the fact that the sources are still technologically immature? Put another way, shouldn’t we expect device performance to improve? Certainly, the technology is getting better. In the several years between my initial analysis and this article, here are some of the highlights in the device technologies I reviewed:

- The average power of microfabricated vacuum devices rose two orders of magnitude, from about 10 µW to over a milliwatt at 650 GHz, and researchers are now working on multibeam and sheet-beam devices capable of higher power than comparable low-voltage single round-beam units.

- The average power of submillimeter monolithic microwave integrated circuits and transistors climbed by a factor of five to eight, to the 100-mW level at 200 GHz and 1 mW at 650 GHz.

- The operating frequency range for milliwatt-class cryogenically cooled quantum cascade lasers was extended down to 1.8 THz in 2012, compared to 2.89 THz in 2007.

With an eye toward use outside the laboratory, researchers have been enhancing their sources in other ways, too, including improved packaging for photonic devices and lasers and higher-temperature operation for quantum cascade lasers. Given the amount of effort and interest in the field, there will certainly be more advances and improvements to come. (For more on the current state of technology, I suggest consulting the IEEE Transactions on Terahertz Science and Technology and similar journals.)

That said, my main points still hold: While terahertz molecular spectroscopy has continuing scientific uses in radio astronomy and space remote sensing, some of the well-publicized mainstream proposals for terahertz technology continue to strain credulity. In addition, despite recent progress in cracking the terahertz nut, it is still exceedingly difficult to efficiently produce a useful level of power from a compact terahertz device. I strongly feel that any application touted as using terahertz radiation should be thoroughly validated and vetted against alternative approaches. Does it really use terahertz frequencies, or is some other portion of the electromagnetic spectrum involved? Is the application really practical, or does it require such rarefied conditions that it may never function reliably in the real world? Are there competing technologies that work just as well or better?

There is still a great deal that we don’t know about working at terahertz frequencies. I do think we should keep vigorously pursuing the basic science and technology. For starters, we need to develop accurate and robust computational models for analyzing device design and operation at terahertz frequencies. Such models will be key to future advances in the field. We also need a better understanding of material properties at terahertz frequencies, as well as general terahertz phenomenology.

Ultimately, we may need to apply out-of-the-box thinking to create designs and approaches that marry new device physics with unconventional techniques. In other areas of electronics, we’ve overcome enormous challenges and beaten improbable odds, and countless past predictions have been subsequently shattered by continued technological evolution. Of course, as with any emerging pursuit, Darwinian selection will have its say on the ultimate survivors.

NOTE: The views presented in this article are solely those of the author.

About the Author

Carter M. Armstrong is vice president of engineering in the electron devices division of L-3 Communications, in San Carlos, Calif. A vacuum-device scientist, he says one of his favorite IEEE Spectrum articles of all time is Robert S. Symons’s “Tubes: Still Vital After All These Years,” in the April 1998 issue. “It was true then, and it’s still true today,” Armstrong says.

To Probe Further

Many of the topics discussed in this article, including terahertz applications and the development of terahertz sources, are treated in greater technical detail in the inaugural issue of IEEE Transactions on Terahertz Science and Technology, Vol. 1, No. 1, September 2011. Among the papers dealing with applications, for example, are “Explosives Detection by Terahertz Spectroscopy—A Bridge Too Far?” by Michael C. Kemp and “THz Medical Imaging: In Vivo Hydration Sensing” by Zachary D. Taylor et al.

Mark J. Rosker and H. Bruce Wallace discuss some of the challenges of terahertz imaging in their paper “Imaging Through the Atmosphere at Terahertz Frequencies,” IEEE MTT-S International Symposium, June 2007. The paper, which is the source for the figure “Atmospheric Effects” in this article, details the important role that humidity plays in atmospheric attenuation at terahertz frequencies.

Many studies have looked at various aspects of operating in the terahertz regime. For instance, “A Study Into the Theoretical Appraisal of the Highest Usable Frequencies” [PDF], by J.R. Norbury, C.J. Gibbins, and D.N. Matheson, investigates the upper operational limits for several potential communications and radar systems.

Data on the transmission and reflectance of materials at terahertz frequencies is important for analyzing and developing applications. A helpful database of terahertz attenuation values for many common materials is included in the May 2006 report “Terahertz Behavior of Optical Components and Common Materials” [PDF], by Andrew J. Gatesman et al.