It used to be that even sophisticated mobile robots could be easily defeated by using (say) a table to block their way. The robot would sense the table, categorize it as an obstacle, try to plan a path around it, and then give up when its planner fails. This works because robots generally don’t know what most objects are, or how they work, or what you can do with them: They just get turned into obstacles to be avoided, because in most cases, that’s the easiest and safest thing to do.

You can’t normally use a table across a hallway to deter a human, because humans understand that tables are physical objects that can be moved, and the human will just pull the table out of the way and keep on going. Even if the table doesn’t behave exactly the way we’d expect it to (like, one of the wheels is stuck), we can adapt, and figure it out.

At IROS 2016 in South Korea, Jonathan Scholz from Google DeepMind and collaborators from Georgia Tech presented a paper on “Navigation Among Movable Obstacles with Learned Dynamic Constraints,” which gives mobile manipulators this same capability. They can recognize objects in their way, and get inventive with physics-based tricks to get where they need to go.

The problem of moving through an object-filled space is very common in domestic environments like homes and offices, where things can be cluttered in unpredictable ways. Unlike structured environments (factories and labs and such), you can’t always predict and model exactly how domestic environments will be cluttered, which makes it much harder for robots to figure out how to navigate them. So, even if your robot is clever enough to move objects out of the way, it also needs to be able to adapt when objects that it expects to move in one way either move in a different way, or don’t move at all.

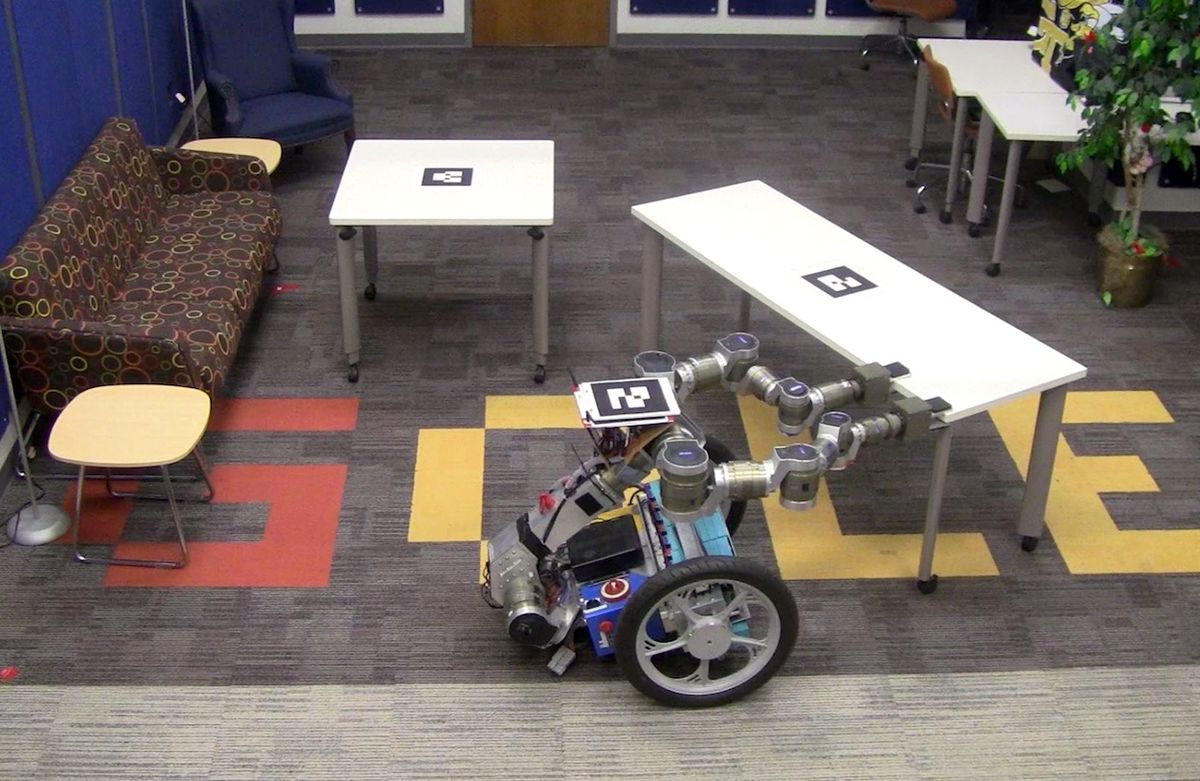

At Georgia Tech, a team of researchers led by Professors Charles L. Isbell and Henrik I. Christensen (who’s since moved to UC San Diego) has been teaching their Golem Krang robot how to move in an object-filled space by combining a Navigation Among Movable Obstacles (NAMO) path-planner with Physics-Based Reinforcement Learning (PBRL). Essentially, Krang is able to use a physics engine to understand and predict how objects will behave, and then use that knowledge to adaptively move those objects around to reach its goal. Here’s an example of how it works, using two tables (each with a mass of about 35 kilograms) that have individually lockable casters:

Success! Here’s one more example, where Krang first tries to brute-force its way through both tables, and then has to replan when it doesn’t work:

Krang has no idea what the constraints are on these tables when it starts trying to move them: Every time one of the tables doesn’t move as expected (whether it’s completely stuck or just has one locked caster), Krang updates its physical model of the table, and then comes up with the next best solution that incorporates these new constraints. It’s using the exact same codebase in both of the above videos, adapting as necessary to each situation. This kind of adaptive learning behavior is something that humans do all the time, and it’s going to be an essential skill as mobile robots enter the unstructured environments of our homes and offices.
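To make that plan, push, update, replan cycle a little more concrete, here is a toy sketch in Python. It is not the researchers’ code and doesn’t use a physics engine or reinforcement learning: the `Obstacle` class, the `plan` and `navigate` functions, and the cost numbers are all made up for illustration. The robot’s “model” of each table is reduced to a single believed push cost, and a failed push simply marks that table as stuck.

```python
from dataclasses import dataclass

DETOUR_COST = 10.0     # hypothetical cost of taking the long way around
STUCK = float("inf")   # believed cost of an obstacle known not to move


@dataclass
class Obstacle:
    name: str
    believed_push_cost: float  # planner's current estimate of the effort to move it
    actually_movable: bool     # ground truth, hidden from the planner


def plan(obstacles):
    """Choose the cheapest option under the current beliefs."""
    best = min(obstacles, key=lambda o: o.believed_push_cost, default=None)
    if best is not None and best.believed_push_cost < DETOUR_COST:
        return "push", best
    return "detour", None


def navigate(obstacles):
    """Plan, act, and replan whenever an obstacle doesn't move as predicted."""
    log = []
    while True:
        action, target = plan(obstacles)
        if action == "detour":
            log.append("took detour")
            return log
        if target.actually_movable:
            log.append(f"pushed {target.name}")
            return log
        # The push failed, so the model was wrong: update the belief and replan.
        target.believed_push_cost = STUCK
        log.append(f"{target.name} is stuck, replanning")


if __name__ == "__main__":
    tables = [
        Obstacle("table A", believed_push_cost=2.0, actually_movable=False),
        Obstacle("table B", believed_push_cost=3.0, actually_movable=True),
    ]
    for step in navigate(tables):
        print(step)
```

In this sketch the robot tries table A first because it looks cheapest, finds out that it won’t budge, and then falls back to table B. The same code handles every configuration; only the beliefs change as the robot learns. The real system updates a much richer physical model (a single locked caster changes how a table moves, not just whether it does), which a single cost value can’t capture.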

For Krang to actually do this kind of thing in a real unstructured environment, it’ll have to be weaned off of a six-camera overhead vision system and some pre-programmed manipulation policies, but that’s what the researchers are working on next. And as for the best way to keep a robot from going where you don’t want it to, I’d recommend a closed door with a round knob followed by a flight of stairs covered in black carpet. That should keep most of them out—for now.

“Navigation Among Movable Obstacles With Learned Dynamic Constraints,” by Jonathan Scholz, Nehchal Jindal, Martin Levihn, Charles L. Isbell, and Henrik I. Christensen from Google DeepMind and Georgia Tech was presented this month at IROS 2016 in South Korea.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.