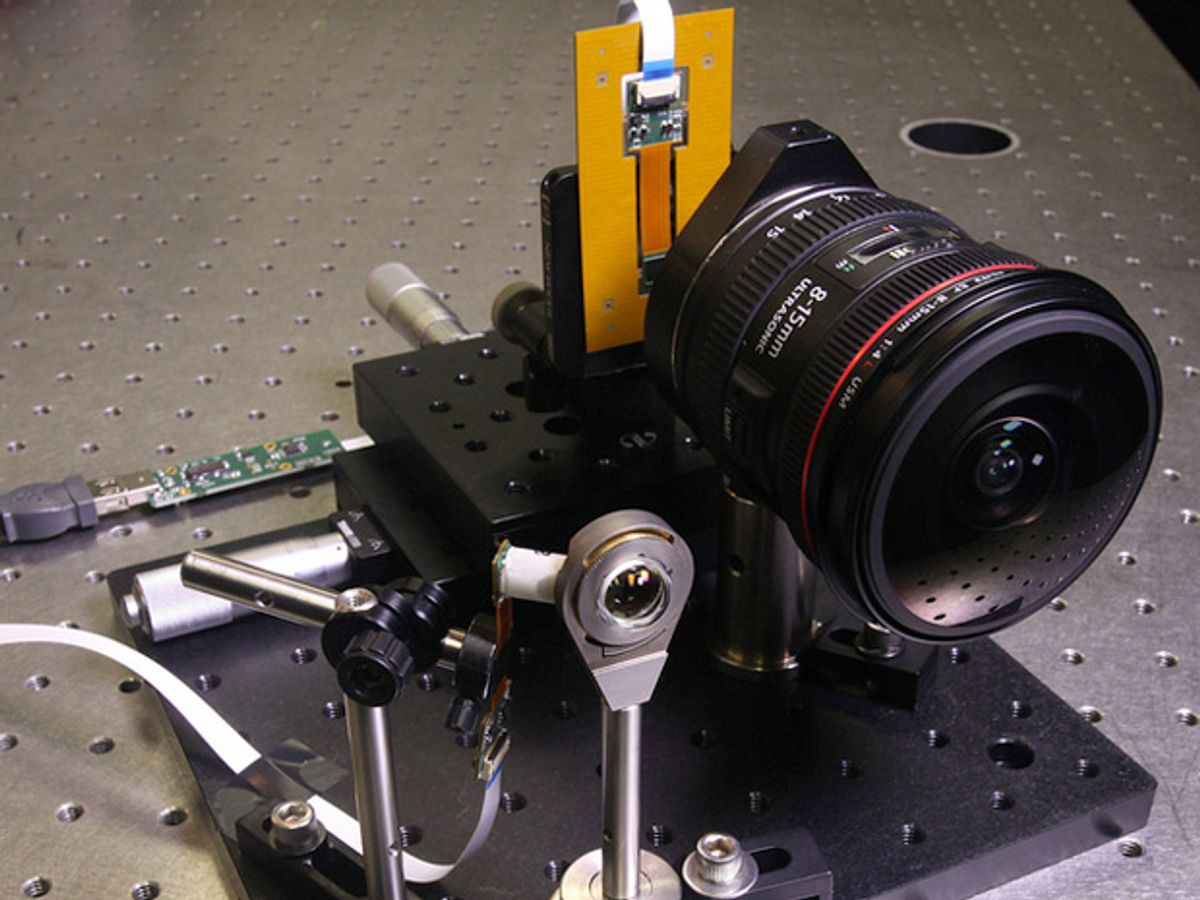

Compared to traditional camera lenses, the small, monocentric lens in the photo above doesn’t look very impressive. The lens itself is a glass sphere the size of a jumbo schoolyard marble, held in a teardrop-shaped collet perched at the end of a machined rod. It looks very much like a crustacean’s eyestalk, and most photographers would instantly dismiss claims that this 20-mm-diameter, 16-gram crystal ball could outperform the highly engineered, 370-gram stack of compound lenses in the conventional Canon 12-mm fisheye lens next to it.

But the proof is in the pictures [below]. The lens has a 12-mm focal length and a wide f/1.7 aperture, and it can clearly image objects anywhere from half a meter to 500 meters away with 0.2-milliradian resolution (equivalent, an Optical Society of America release points out, to 20/10 human vision). It can also cover a 120° field of view with negligible chromatic aberration. Joseph E. Ford, leader of the University of California, San Diego’s Photonic Systems Integration Laboratory, debuted the new lens this week at the Frontiers in Optics Conference in Orlando, Florida.
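To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above and makes two simplifying assumptions that are not from Ford's presentation: that the 0.2-milliradian resolution holds uniformly across the whole field and distance range, and that the small-angle approximation applies. The function names are invented for illustration.

```python
import math

# Figures quoted in the article
FOCAL_LENGTH_MM = 12.0
F_NUMBER = 1.7
RESOLUTION_RAD = 0.2e-3      # 0.2 milliradian
FIELD_OF_VIEW_DEG = 120.0


def aperture_diameter_mm(focal_length_mm, f_number):
    """Aperture diameter implied by the f-number (N = f / D)."""
    return focal_length_mm / f_number


def smallest_detail_m(distance_m, resolution_rad):
    """Smallest resolvable detail at a given distance (small-angle approximation)."""
    return distance_m * resolution_rad


def spots_across_field(fov_deg, resolution_rad):
    """Resolvable spots along one line across the field (assumes uniform resolution)."""
    return math.radians(fov_deg) / resolution_rad


if __name__ == "__main__":
    print(f"Aperture diameter: {aperture_diameter_mm(FOCAL_LENGTH_MM, F_NUMBER):.1f} mm")
    for distance_m in (0.5, 500.0):
        detail_mm = smallest_detail_m(distance_m, RESOLUTION_RAD) * 1000
        print(f"Detail resolved at {distance_m} m: {detail_mm:.1f} mm")
    spots = spots_across_field(FIELD_OF_VIEW_DEG, RESOLUTION_RAD)
    print(f"Spots across the {FIELD_OF_VIEW_DEG:.0f}-degree field: {spots:,.0f}")
```

Under those assumptions, the lens would have an aperture roughly 7 mm across, resolve details of about 0.1 mm at half a meter and about 10 cm at 500 meters, and distinguish on the order of 10,000 spots along a line spanning the full field of view.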

Monocentric lenses (simple spheres constructed of concentric hemispherical shells of different glasses) are not new. Researchers have pursued them for decades, only to be stymied by a series of technical obstacles. Image capture has been a big stumbling block: a monocentric lens has a focal hemisphere, not a focal plane. Back in the 1960s, researchers started trying to use bundles of optical fibers to carry the image from that curved surface to a flat one, but the available fibers were not up to the task. If they were packed closely enough to capture high-resolution images, light would bleed through the cladding, producing crosstalk between fibers and degrading the images.

Other alternatives, such as relay optics, were complex and expensive. Relay optics essentially use an array of as many as 221 tiny “eyepiece” lenses to image small, overlapping parts of the focal hemisphere and project them onto planes that can be fitted together and reassembled digitally.

The project is funded by the Defense Advanced Research Projects Agency’s SCENICC (Soldier Centric Imaging via Computational Cameras) program. The utility of ultralight optics that combine wide fields with zoom-lens detail is obvious, whether the application is cell-phone cameras or battlefield goggles or (as we will see in a moment) environmental research.

Imaging the Ecosystem

Ecosystems operate on a tremendous range of scales, from the individual microbe to the continent, and they vary over space and time.

A research team from the U.S. Department of Agriculture’s Agricultural Research Service (ARS), Sweet Briar College, and the Carnegie Mellon University Robotics Institute has developed a method for seeing both the forest and the trees…along with the calendar.

And although monocentric lenses might eventually add to the technique, ARS’s Mary H. Nichols and her co-workers put their system together using off-the-shelf parts: principally a Canon G10 camera, plus a robotic panoramic camera mount and photo-integration software from GigaPan.

Images: Joseph E. Ford/UCSD Jacobs School of Engineering; Mary H. Nichols/USDA-ARS

Douglas McCormick is a freelance science writer and recovering entrepreneur. He has been chief editor of Nature Biotechnology, Pharmaceutical Technology, and Biotechniques.