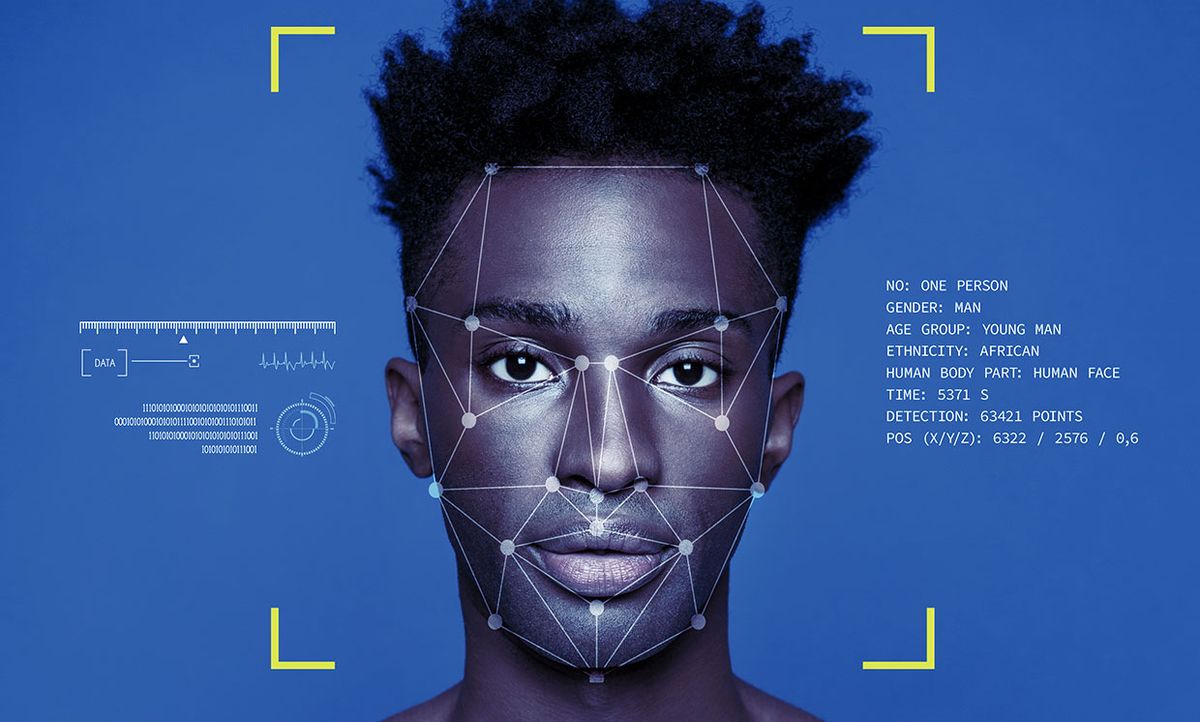

THE INSTITUTE: Several companies, including Amazon and Clarifai, are working to create reliable facial recognition technology that government agencies and law enforcement can use to catch criminals and find missing children.

Amazon’s Rekognition can identify, analyze, and track people in real time. Within seconds, the software can compare information it collects against databases housing millions of images. Law enforcement agencies have used the technology to help find missing people and to identify suspects in terrorist attacks.

While this technology may have benefits, it has recently faced a backlash. Many people are concerned about racial bias and about protecting citizens’ privacy.

This month, San Francisco became the first U.S. city to ban the use of facial recognition technology by its police force and city agencies. The ban was prompted by concerns that facial recognition surveillance unfairly targets and profiles certain members of society, especially people of color. According to CPO Magazine, when law enforcement agencies adopt this technology, they usually roll it out in high-crime, low-income neighborhoods, which disproportionately have a high percentage of people of color.

The legislation also gives the city’s Board of Supervisors oversight of all surveillance technology used by city agencies and law enforcement. Departments must now audit their existing surveillance technology, such as automatic license plate readers, and write an annual report on how the technology is being used and how its data is being shared. The board must also approve the purchase of any new surveillance technology. The ban does not cover use of the technology by individuals or businesses.

Some proponents of facial recognition technology say the city’s legislation goes too far, arguing that the technology should be suspended until it improves rather than banned outright.

In an interview with National Public Radio, Daniel Castro, vice president of the Information Technology and Innovation Foundation, a think tank, said, “[The Board of Supervisors] is saying, let’s ban the technology across the board, and that seems extreme, because there are many uses of the technology that are perfectly appropriate.” He explained that the government and the companies that produce the software want to use it to fight sex trafficking, for example. “To ban it wholesale is a very extreme reaction to a technology that many people are just now beginning to understand,” Castro said.

Legislation similar to San Francisco’s is under consideration in Oakland, Calif., and Massachusetts is considering a statewide moratorium on facial recognition software until the technology improves.

Concerns about the technology aren’t unfounded. In a study published by the MIT Media Lab earlier this year, researchers found that facial analysis software was more likely to misidentify the gender of women and dark-skinned individuals, according to the news website The Verge.

An investigation released earlier this month found that New York City police officers had edited suspects’ photos and uploaded photos of celebrity lookalikes in an effort to manipulate facial recognition results. When The Verge asked about the allegations, an NYPD representative emphasized the technology’s investigative value but did not dispute any of the claims.

“It doesn’t matter how accurate facial recognition algorithms are if police are putting very subjective, highly edited, or just wrong information into their systems,” Clare Garvie, a senior associate at the Center on Privacy and Technology who specializes in facial recognition, said in an interview with NBC News. “They’re not going to get good information out. They’re not going to get valuable leads. There’s a high risk of misidentification. And it violates due process if they’re using it and not sharing it with defense attorneys.”

Should more municipalities ban the use of this technology or impose a moratorium? Does the good this technology can do outweigh the concerns being raised?

Joanna Goodrich is the associate editor of The Institute, covering the work and accomplishments of IEEE members and IEEE and technology-related events. She has a master's degree in health communications from Rutgers University, in New Brunswick, N.J.