These other three companies were all tied to the energy markets (solar in the case of Konarka, and batteries for both A123 and Ener1), which are typically volatile, with a fair number of shuttered businesses dotting their landscapes. But NanoInk was a venerable old company in comparison to these other three, and it sat more on what could be characterized as the “picks-and-shovels” side of the nanotechnology business: microscopy tools. NanoInk had been around so long that it was becoming known for its charity work in bringing nanotechnology to the Third World.

So, what happened? The news tells us that NanoInk’s primary financial backer, Ann Lurie, pulled the plug on her 10-year, $150-million life support of the company. After a decade of seeing little return on her investment, Lurie decided that enough was enough. But that’s like explaining that a patient died because their heart stopped. What caused the heart to stop?

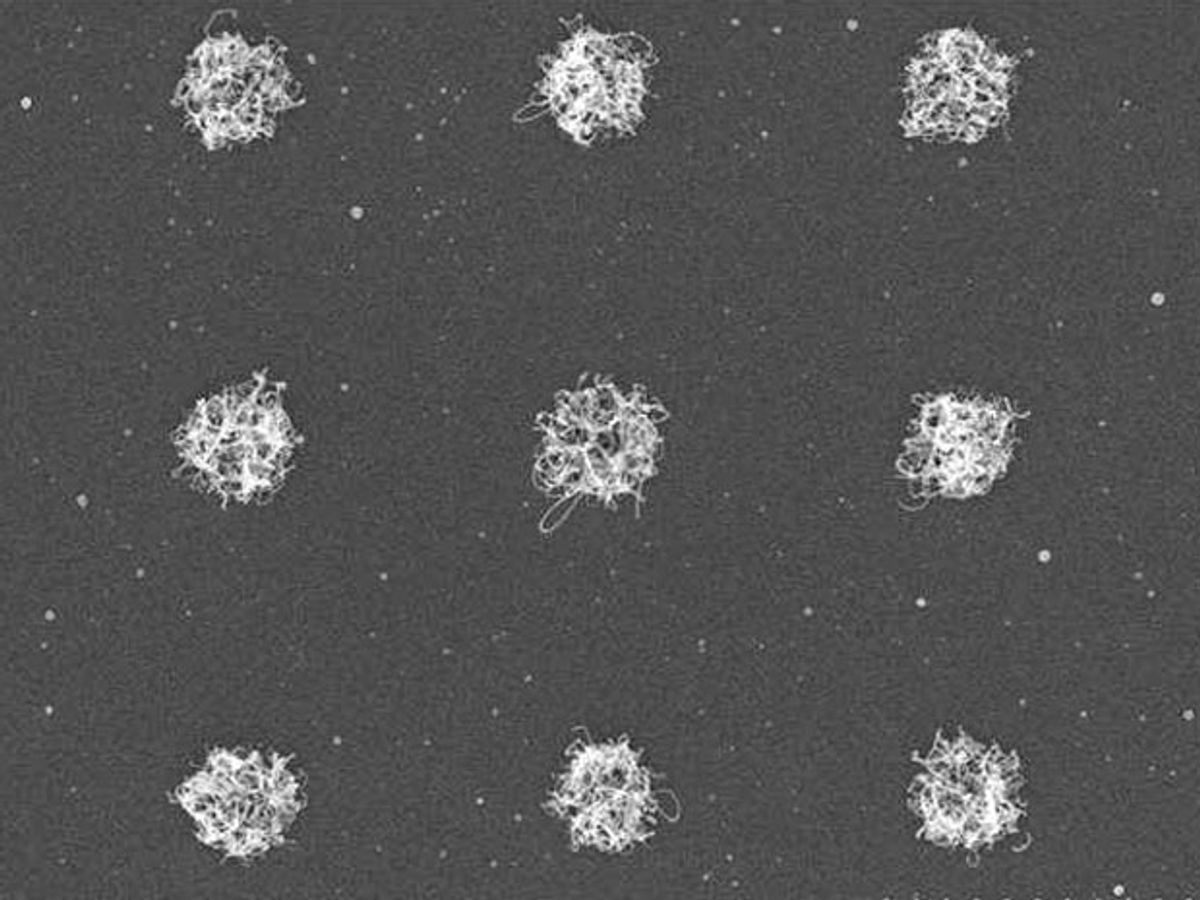

The technological foundation of NanoInk was a dip pen based on the atomic force microscope (AFM), used to perform lithography at the nanoscale. This so-called dip-pen nanolithography creates nanostructures by delivering “ink” from the AFM tip to a surface via capillary transport. One thing that always seemed problematic with this technology was that it wasn’t really scalable.

As Tim Harper, CEO of the UK-based consulting company Cientifica (full disclosure: I work for Cientifica), told me: “Nanolithography is interesting on the lab scale, but so is writing down your lab notes with a pen and ink. The rest of the semiconductor [industry] works in a mass-produced, highly automated way, so NanoInk’s offering was the equivalent of replacing the printing press with a bunch of monks. Illuminated manuscripts can look good, but if you can’t mass produce things there isn’t a business.”

The problem for NanoInk wasn’t just scalability. Companies can be very successful selling one-off products, but there has to be a market for them. According to Harper, NanoInk’s lack of a broad market explains how 10 years and $150 million of investment failed to create a successful business.

“Normally I advise tech companies to find the need, figure out whether their technology can address [it], and then look at whether or not there is a business model,” says Harper. “Most nanotech businesses started with the technology and tied themselves in knots from there on in.”

In addition to chasing “technology push” rather than “market pull,” NanoInk also fell victim to a common malady of nanotechnology companies: pursuing just about every application for their technology that anyone could imagine.

“A major issue is that you have so many markets to take a shot at that you wind up doing nothing properly,” explains Harper. “NanoInk had five increasingly desperate divisions. Perhaps a better model would have been to realize that dip pen nanolithography was never going to be a mainstream technology, fold the company, write down the investors, and then use the IP and experience to build a few niche companies that addressed a real need.”

Image: NanoInk

Dexter Johnson is a contributing editor at IEEE Spectrum, with a focus on nanotechnology.