We’re hearing about the problem daily: there aren’t enough test kits available to accurately track the new coronavirus. It’s a global problem, though some countries—including the United States—are doing a much worse job of testing than others.

More testing, epidemiologists say, would give us a better understanding of how the virus is moving through the population and whether it’s waxing or waning. More testing, they say, would also help identify new hot spots and allow public health officials to take action before early sparks evolved into blazes. And more testing is essential to allowing us to safely return to any semblance of normal society.

So researchers are scrambling to develop new test kits that deliver results faster and are easier to use and manufacture.

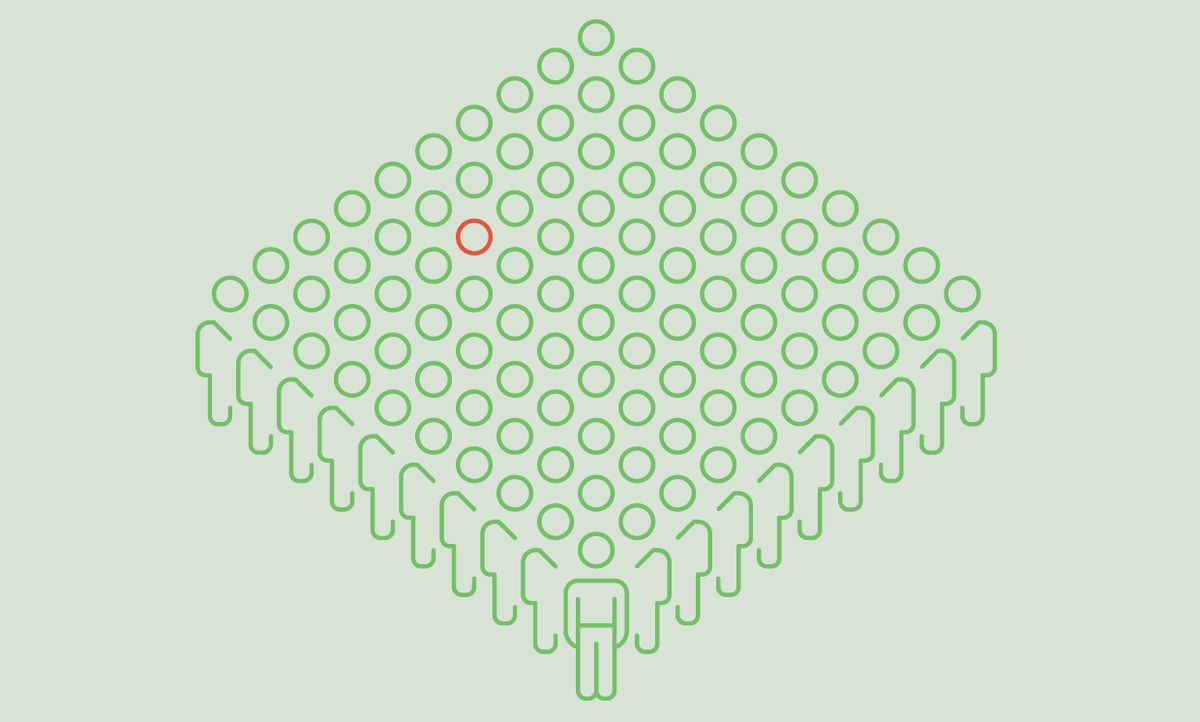

Part of the solution to the testing shortage, however, may come not from making more kits, but rather from algorithms. Computer scientists and engineers who study ways to compress data, decode video, and process medical images say that biological samples from groups of people could be pooled for testing, and the results decoded by cleverly designed algorithms. This pooling of samples could go a long way to addressing the test kit shortage, with one test kit being able to determine the health status of five, ten, or even more people.

I spoke with Dror Baron, associate professor of electrical and computer engineering at North Carolina State University and an IEEE senior member, about his work in developing algorithms for this kind of coronavirus test processing, as well as related efforts by the worldwide computer science and engineering community.

IEEE Spectrum: What exactly is pooled testing?

Dror Baron: In a pooled test, you are taking biological material from multiple people and testing it once. If what you are looking for doesn’t exist in the group, then you can rule out a large number of people with one measurement. This works best in situations in which the majority of people being tested don’t have the disease. It works well for screening HIV in blood samples, because most people who donate blood are HIV negative.

IEEE Spectrum: Who initially came up with the idea of applying this to COVID-19?

Baron: Multiple groups had this idea at the same time, basically, in the past few months. In particular, I’m aware of two groups in Israel, one at Stanford, one in Germany, and one in South Korea. Some have demonstrated that pooled samples can successfully be tested for the coronavirus using [polymerase chain reaction (PCR)]. Other groups, including mine, are working on algorithmic ideas. I’ve read about a dozen preprints of academic papers on this in the past couple of weeks.

IEEE Spectrum: Why so much interest?

Baron: We are going to want to test huge numbers of people for the coronavirus—like anybody who gets on a plane, maybe even anybody who gets into a major government building. If testing were available at a reasonable cost, Amazon, for example, would test its warehouse employees every day. To truly restart the economy, we need to test not tens or hundreds of thousands a day but millions a day.

If manufacturers of PCR test equipment, like Roche, Abbott, and Cepheid, can ramp up to this by brute force—making chemicals and equipment—that would be great. But if they can’t, and we engineers and computer scientists can give them a factor of 10 improvement in capacity, that would be great, too.

Indeed, if pooled testing can reduce the number of tests by a factor of 10 or even five, it seems like it is just a matter of time before this is widely used.

IEEE Spectrum: How do the algorithms work?

Baron: There are two different approaches: One is very simple; it has been around for decades. The second is more modern.

The old-fashioned approach proceeds as follows. Say you have a rare incidence in the population—1 percent—that has this disease. Then say you partition the population to be tested into groups of 20. If one in 100 have the disease, then most of the groups of 20 will test negative. Generally, in 100 people, one will be sick; we can take five measurements of groups of 20 and rule out four of them, narrowing it down to 20 people. You then test those 20 people to find the individual. We have now taken 25 measurements instead of 100 to identify the one in 100.

The drawback to this approach is that if it takes you time to run a single test, say an hour, then you need another hour to test the 20 individually, so the 20 people need to wait for 2 hours.

You can use tests even more efficiently if you can take that group of 20 with the positive result, and split it into four groups of five each. One group will come back positive, so you’ll need to test each of them. That gets you down to 14 measurements, but will take 3 hours. In this approach, which is called adaptive group testing because you use information from the earlier tests to determine who is selected for the next tests, you are trading off time and the amount of tests used.

The newer approach is nonadaptive. Again, we’ll use this hypothetical group of 100 in which there is one person who is sick. If we mix the 100 people into random pools of 30 different people in each pool so that each person appears in six pools, we can sample each person six times using 20 measurements (multiplying 100 people by six gives us 600 samples, dividing those into pools of 30 means we will test 20 times). That, in most cases, will give us enough information to identify the one person who is positive.

That can be more difficult when multiple people in a group are positive. In a recent paper that has not yet been peer-reviewed, a group of researchers tested an algorithm with 384 patients and 48 tests, using leftover samples from patients. That approach does well when the number of people who are sick is three or fewer, pretty well at four, but things start breaking down at five.

The advantage of the nonadaptive approach is that it can be almost as efficient in terms of use of tests as theadaptive approach, yet you get your answer as soon as the first batch of tests is done. You don’t have to wait for follow-up testing.

These algorithms work better with larger groups. If you can test 1,000 people for example, in a situation in which one percent of the population is sick, it is unlikely that 25 or more will be sick. Such large batch testing might be reasonable at large airports or at hospitals; in other situations, you might have to wait to collect samples, trading off time and the cost of testing.

IEEE Spectrum: How does your research relate to this?

Baron: Initially, I had an idea for improving the nonadaptive testing approach by applying an idea called message passing. This is something I have been using in my work on compressed sensing and image reconstruction. This is useful in situations in which you have a bunch of values, most close to zero, but a few very large.

Take an image of the night sky, for example. In that image, 0.1 percent of my pixels will see stars, 99.9 percent will see dark sky. So if I am looking at an image of the sky with a million pixels, only 1,000 will be positive. Therefore, I only need to note which locations out of a million are positive and the light intensity at each location, I don’t have to record information for all one million pixels.

Or, researchers have realized, we can pull together these million pixels into subgroups, and use an algorithm to figure out, by looking at subgroups, which very few pixels are positive. In my work, I had been thinking about very large groups, and how we can use this approach for image reconstruction, including in medical imaging, by looking at groups of pixels, rather than pixel by pixel.

Like so many of us, I have been thinking about the coronavirus. I realized that I could apply one of our algorithms, one that uses a graph data structure. In that example of a group of 100, with each patient appearing in six test groups, you would have 100 nodes that are patients and 20 nodes that are tests. The algorithm passes messages containing statistical information between the patient nodes and the test nodes—the probability that I, as a patient, am sick, based on the information gathered so far.

For example, if most of the six groups I was tested in are positive, then the probability of my being sick is very high. For those people that tested positive in two, three, or four groups, the algorithm would adjust the probabilities of those people being sick based on whether or not I, as very likely sick, was in their groups, that is, “I tested sick five times and you only tested sick twice, both times in tests with me, so you very likely are fine.” If you design the pools cleverly, every pair of patients will be in no more than 2 pools together. This approach allows the overall results to be accurate even if there is an erroneous measurement, say there is a false positive somewhere, or me, as the sick guy, only measures positive in five out of six groups.

I’ve been working on this for a couple of weeks, but, now that I see so many groups addressing group testing algorithms, I’m focusing more directly on bringing side information into the algorithms, including symptoms, household contacts, and social network information. This side information can change the assignment of probabilities and improve accuracy.

IEEE Spectrum: What’s next?

Baron: Like many researchers around the world, my group has something that could be helpful, but we don’t know who to reach out to. This is not just a problem in the U.S., it is worldwide; it’s a chaotic situation. Many of us working on this are in touch with each other, sharing draft papers, talking regularly. It’s likely that others are working on this less openly, owing to concerns about commercialization. We just don’t know.

In spite of this chaos, with multiple groups working on this, I hope it’s just a matter of time before some country begins applying it to actual patients. When that happens, I hope whoever does it will be open to working with others to incorporate future improvements.

A version of this post appears in the June 2020 print issue as “Researchers Are Using Algorithms to Tackle the Coronavirus Test Shortage.”

Tekla S. Perry is a senior editor at IEEE Spectrum. Based in Palo Alto, Calif., she's been covering the people, companies, and technology that make Silicon Valley a special place for more than 40 years. An IEEE member, she holds a bachelor's degree in journalism from Michigan State University.