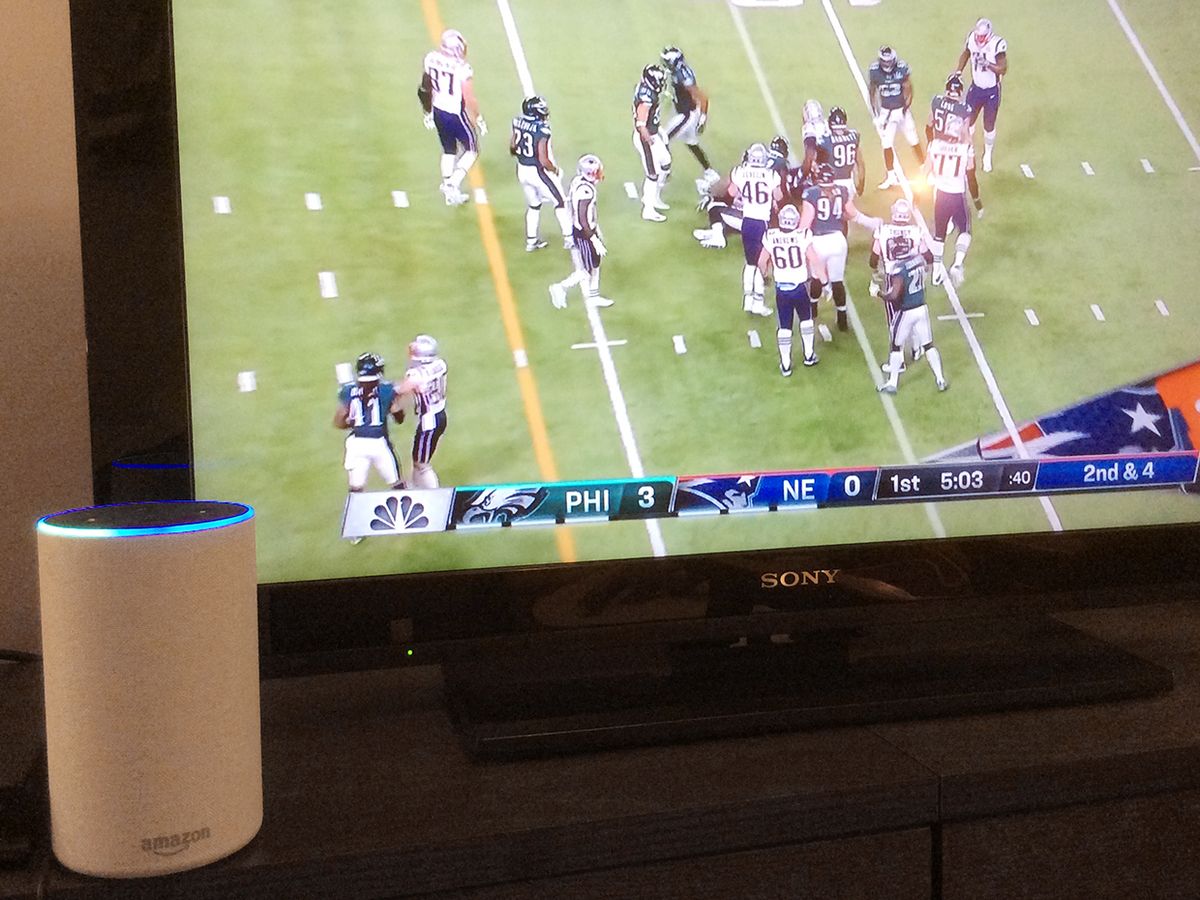

Sunday’s Super Bowl broadcast included a spot for Amazon’s Alexa, in which Alexa’s name was said some 10 times. But the Amazon Echos in people’s homes didn’t even blink, because they were programmed to look for a particular digital fingerprint in the wake-up word, and ignore it.

In a blog post, Amazon said the company’s acoustic fingerprinting technology allowed the devices to distinguish between the wake-up words uttered in the ad, which sounded perfectly natural, and commands given to Alexa by actual users.

Amazon, of course, had the audio from the ad to work with, and plenty of time to spare. The company indicated that it can also build acoustic fingerprints on the fly. “When multiple devices start waking up simultaneously from a broadcast event, similar audio is streaming to Alexa’s cloud services,” said the blog post. “An algorithm within Amazon’s cloud detects matching audio from distinct devices and prevents additional devices from responding.”

The dynamic fingerprinting isn’t perfect, but as many as 80 to 90 percent of devices won’t respond to these broadcasts, thanks to the fingerprints. The company also can send a signal ahead of the wake word to alert Alexa to ignore it, but didn’t use that technique for the Super Bowl commercial.
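The cloud-side matching described in the blog post can be pictured with a small sketch. This is not Amazon's implementation. It assumes each device uploads an opaque fingerprint value along with its wake-up, and that identical audio yields an identical value; a real system would need fuzzy matching. The class name `BroadcastDetector` and the parameters `window_seconds` and `max_devices` are hypothetical.

```python
from collections import defaultdict, deque


class BroadcastDetector:
    """Suppress wake-ups once too many distinct devices report the same audio.

    Illustrative assumption: a fingerprint is any hashable value, and
    identical audio always maps to the same fingerprint.
    """

    def __init__(self, window_seconds=2.0, max_devices=3):
        self.window_seconds = window_seconds
        self.max_devices = max_devices
        # fingerprint -> recent (timestamp, device_id) reports, oldest first
        self._recent = defaultdict(deque)

    def should_respond(self, fingerprint, device_id, timestamp):
        events = self._recent[fingerprint]
        # Forget reports that have fallen outside the time window.
        while events and timestamp - events[0][0] > self.window_seconds:
            events.popleft()
        events.append((timestamp, device_id))
        distinct_devices = {dev for _, dev in events}
        # The first few devices are answered; once the same audio shows up
        # from more devices than a household could explain, later ones are not.
        return len(distinct_devices) <= self.max_devices
```

In a scheme like this, the earliest devices to report still respond before the pattern becomes visible, which is at least consistent with the imperfect suppression rate the company describes.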

Turns out, this is the tip of the iceberg of what sleeping (or “un-woke”) devices can do in response to sounds, without dramatically increasing their power consumption or their communication with the cloud.

Startup company Audio Analytic is modeling sounds beyond voice and music. The company has already encoded the sound of a baby’s cry, a window being broken, a dog barking, and a smoke alarm, as well as the ability to spot a general anomaly in ambient noise, and is licensing its software to various consumer electronics manufacturers.

CEO and founder Chris Mitchell—who has a dual degree in electrical engineering and music technology from Anglia Ruskin University in Cambridge, England, and a Ph.D. involving the identification of music genres—took me through the company’s technology last month. It’s a bit different than programming a device to recognize a wake word—or even an audio fingerprint.

“With speech,” he says, “and in particular wake words, the sound you make is constrained by the words and the broader rules of language. There are a limited number of phonemes that humans can produce, and our collective knowledge here is considerable.”

The audio fingerprint is even simpler, he indicated, given that the playback of the content varies only slightly, and Amazon is in control of the device itself.

Considering noises beyond speech gets a lot tougher, Mitchell said. For the sound of a window breaking, say, fingerprinting would require indexing every way every type of glass window could break. Instead, it’s necessary to break the sound down into components, in the same way speech has phonemes. Mitchell uses the word “ideophone”—usually used to mean the representation of a sound in speech, like an onomatopoeic word—to refer to these components.

Using a deep-learning system, Audio Analytic is analyzing sounds and coding them into ideophones. At that point, it’s just as simple for a digital assistant to react to a sound as it is to react to a wake word.

“It’s an extremely efficient algorithm,” Mitchell says, and it is designed to run locally on any type of device with a digital assistant, including smart speakers, phones, and connected cars.

The company has demonstrated window-breaking recognition; detecting that event could trigger an alarm or turn on lights. And, using what the company calls scene recognition, the algorithm has developed a general understanding that when music or other audio is playing in an environment with changing noise levels (like clattering pots or running water), it should adjust the audio to compensate, not by drowning out the ambient sound but by “pushing through the gaps,” raising the volume in the frequencies between those the ambient sound occupies, to improve overall sound clarity.
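One way to picture “pushing through the gaps” is as a per-band gain decision. The sketch below is an illustration only, not Audio Analytic's algorithm. It assumes the ambient noise level has already been measured in decibels for a handful of frequency bands; the function name `playback_gap_gains`, the threshold, and the boost amount are all hypothetical.

```python
def playback_gap_gains(ambient_db, noise_threshold_db=50.0, boost_db=6.0):
    """Return a playback gain (in dB) for each frequency band.

    Bands where the ambient noise is below the threshold are treated as
    "gaps" and boosted; bands the noise already occupies are left alone
    rather than made louder to drown the noise out.
    """
    return [
        boost_db if level < noise_threshold_db else 0.0
        for level in ambient_db
    ]


# Hypothetical ambient levels for four bands, low to high frequency,
# e.g. running water occupying the lowest and highest bands.
ambient = [62.0, 41.0, 38.0, 57.0]
gains = playback_gap_gains(ambient)  # [0.0, 6.0, 6.0, 0.0]
```

Here the two quiet middle bands receive the boost, while the bands the water already fills are left untouched.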

These basic sounds are just the beginning of what Audio Analytic hopes will be a much larger sound library, Mitchell says: The company thinks about 50 sounds is the sweet spot. He’d like to add health-related sounds, like coughing and sneezing; sirens, for use in autonomous vehicles; and laughter, because, well, he said, Alexa needs to know when her jokes aren’t funny.

But it hasn’t been easy to build these models. “There are no public sources of our data,” he says. “You can’t play YouTube clips—you’d just get a model of what a sound coming out of your laptop via YouTube sounds like, not a sound from the real world.”

Mitchell started Audio Analytic in 2010, initially targeting the dedicated security market. “The problem was,” he said, “that very few devices had microphones on them. But Alexa and Google came in and solved that.”

The company says it has agreements with a few consumer electronics manufacturers, including Hive and Bragi, to build its technology into future products.

So it’s not unlikely that Alexa, and her digital assistant brethren like OK Google and Siri, will soon be responding to more than just their names.

Which brings us back to the broadcast question, and when digital assistants should stay asleep. Television shows often include breaking glass, dogs barking, or alarms sounding. These are typically sound effects, added to the actual audio to make it more dramatic, and, says Mitchell, they have different characteristics from real-world sounds.

Even if those sounds were recorded during filming instead of added later, they’ve gone through an editing process and then been broadcast or streamed and played through speakers. At every stage, he says, there is accidental or deliberate degradation or editing of sound.

“There are enough differences for this not to trigger an alert most of the time,” says Mitchell.

Tekla S. Perry is a senior editor at IEEE Spectrum. Based in Palo Alto, Calif., she's been covering the people, companies, and technology that make Silicon Valley a special place for more than 40 years. An IEEE member, she holds a bachelor's degree in journalism from Michigan State University.