Video Friday is your weekly selection of awesome robotics videos, collected by your Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here's what we have so far (send us your events!):

EU Robotics Week – November 16-25, 2018 – Europe

ICSR 2018 – November 28-30, 2018 – Qingdao, China

RoboDEX – January 16-18, 2019 – Tokyo, Japan

Let us know if you have suggestions for next week, and enjoy today's videos.

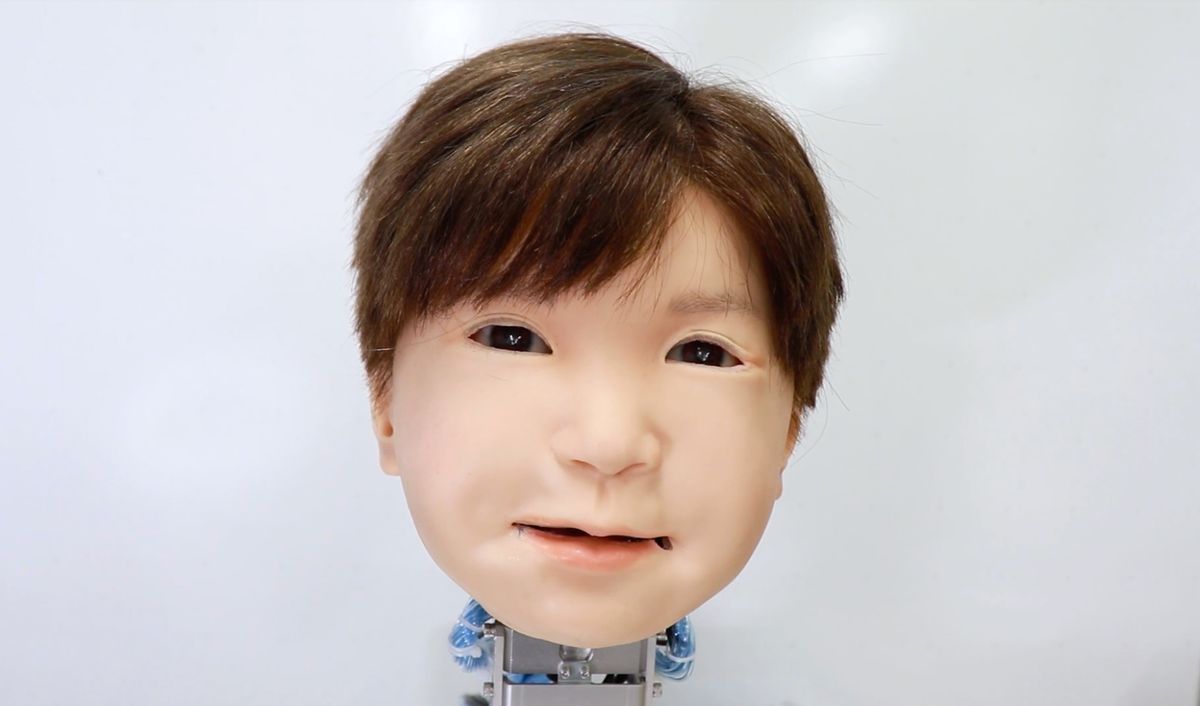

We’ve missed you, Affetto.

A trio of researchers at Osaka University has found a method for identifying and quantitatively evaluating the facial movements of their android robot child head. The first-generation model of the android, named Affetto, was reported in a 2011 publication, and the researchers have now developed a system to make the second-generation Affetto more expressive. Their findings offer a path for androids to express a greater range of emotions and, ultimately, to interact more deeply with humans.

[ Osaka University ]

Vector is getting a brain update and will be able to respond to Alexa voice commands.

Next month, Vector owners in the U.S. and Canada will be able to access the knowledge and utility of Amazon’s voice service. Simply talk to Vector like you’d talk to Alexa and schedule reminders, search for information, control smart home devices, and more. We’ll also launch this feature early next year in other countries where Vector is available. Check out some footage of our engineers testing the integration below.

[ Vector ]

A new jumping robot is in the works at the University of Cape Town.

[ UCT ]

Here’s a video from the MIT Media Lab in 2008 showing AUR, an intelligent robotic desk lamp developed by Cynthia Breazeal and Guy Hoffman.

[ AUR ]

A new twist on robot design.

[ Takanishi Lab ]

Warning: This video has footage of live medical procedures involving robots, needles, and scalps that some viewers may find hard to watch.

KUKA partner Restoration Robotics is on a mission to improve the lives of people suffering from hair loss. With the precision, flexibility, and safety provided by KUKA's LBR Med robot, Restoration Robotics has taken another large leap in robotic hair restoration procedures with the ARTAS iX system. And with the world's first certification of the LBR Med as a medical component, the robot offers a truly unique advantage.

A nicely choreographed (and programmed) robot and human dance video.

Props for using real robots.

[ m plus plus ]

Aurora Flight Sciences is almost ready to launch an enormous solar-powered drone called Odysseus.

HAPS: high-altitude pseudo-satellite. Hadn’t heard that acronym before, but bet we hear it again.

Solve all of your drone range and payload problems by just forgetting about batteries and going with a gas engine instead.

The Airborg 10K H8 Cargo Transport UAV flies hours, not minutes. No batteries to recharge. Hybrid power. Gasoline, available everywhere. Refuel and go. A patented gasoline engine/electric generator creates 10 kW of power for the electric lift motors, onboard electronics, auxiliary equipment, and datacoms. Flies 4 kg payloads for over 2 hours and 10 kg payloads for over 1 hour. Can sustain wind gusts of up to 35 mph. 100-mile range. Cruise speed 55 km/h (34 mph).

[ Top Flight ]

Deep reinforcement learning has been successfully applied to various computer games, but it is still rarely used in real-world applications, especially for continuous control in real mobile robot navigation. In this video, we present our first approach to learning robot navigation in an unknown environment.

The robot's only inputs are the fused data from a 2D laser scanner and an RGB-D camera, plus the orientation to the goal. The map is unknown. The output is an action for the robot (linear and angular velocities). The navigator (small GA3s) is pretrained in a fast, parallel, self-implemented simulation environment and then deployed to the real robot. To avoid overfitting, we use only a small network and add random Gaussian noise to the laser data. Unlike other approaches, fusing the sensor data with the RGB-D camera allows the robot to navigate real environments with real 3D obstacle avoidance and without environmental interventions. To further avoid overfitting, we use environments with different levels of difficulty and train on 32 of them simultaneously.

Thanks Hartmut!
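To make the navigator's input/output interface a little more concrete, here is a minimal Python sketch of how such an observation and action might be handled. It is not the authors' code: the hypothetical `build_observation` and `clip_action` functions, the per-beam minimum used to fuse the laser scan with a depth-camera pseudo-scan, the sin/cos goal encoding, the noise level, and the velocity limits are all assumptions chosen for illustration.

```python
import math
import random

def fuse_scans(laser, depth_ranges):
    """Fuse two beam-aligned range arrays by keeping the nearer obstacle per beam.

    Assumption: the RGB-D depth image has already been projected into a
    pseudo-laser scan with the same beam angles as the 2D laser.
    """
    return [min(l, d) for l, d in zip(laser, depth_ranges)]

def add_gaussian_noise(scan, sigma, rng, max_range):
    """Perturb each range with zero-mean Gaussian noise, clamped to [0, max_range]."""
    return [min(max(r + rng.gauss(0.0, sigma), 0.0), max_range) for r in scan]

def build_observation(laser, depth_ranges, goal_angle, sigma=0.02,
                      max_range=10.0, rng=None):
    """Hypothetical observation: noisy fused ranges plus goal orientation."""
    if rng is None:
        rng = random.Random()
    fused = fuse_scans(laser, depth_ranges)
    noisy = add_gaussian_noise(fused, sigma, rng, max_range)
    # Encode the goal heading (radians) as sin/cos so that headings near
    # +pi and -pi map to nearby values instead of opposite extremes.
    return noisy + [math.sin(goal_angle), math.cos(goal_angle)]

def clip_action(linear, angular, max_linear=0.5, max_angular=1.0):
    """Clamp the network's (linear, angular) velocity output to assumed limits."""
    linear = min(max(linear, 0.0), max_linear)  # assume forward-only motion
    angular = min(max(angular, -max_angular), max_angular)
    return linear, angular

obs = build_observation([1.0, 2.0, 3.0], [0.5, 5.0, 3.0], goal_angle=0.0,
                        rng=random.Random(42))
print(len(obs))                  # 5: three ranges plus sin and cos of the goal angle
print(clip_action(2.0, -3.0))    # (0.5, -1.0)
```

Taking the per-beam minimum is one simple way to let obstacles seen only by the camera (a table edge above the laser plane, say) show up in a 2D scan; the noise is applied only to the network input, so a policy trained this way cannot rely on perfectly clean simulated ranges.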

We met the awesome folks at Tanzania Flying Labs a few weeks back—if it’s seemed a little on the quiet side around here, it’s because we were in Africa. Anyway, they’re doing some incredible stuff in Tanzania, and this video doesn’t do them justice.

Pipes can’t hide from HEBI Robotics.

[ HEBI ]

Thanks Dave!

RoboCup 2018 in Montreal featured a small army of SoftBank NAOs.

[ SoftBank ]

The University of Michigan’s Cassie may not have a face, but it’s got laser eyeballs anyway.

[ U Michigan ]

This has to be one of the least intimidating police robots ever.

[ CNA ]

The Laboratory for Robotics and Intelligent Control Systems (LARICS) is a research laboratory within the Department of Control and Computer Engineering at the University of Zagreb Faculty of Electrical Engineering and Computing. LARICS research focuses on control, robotics, and intelligence in the areas of flying, walking, and driving robots, manipulation, and warehousing, as well as collective and automotive systems.

[ LARICS ]

The BBC went to Japan to take a look at some of the robots there. Nothing new for loyal Spectrum readers, but it’s the BBC, so it’s well produced with good video.

[ BBC Click ]

Christoph Bartneck gives an excellent and very engaging 30-minute (ish) talk on “Racism and Robot Ethics.” With a title like that, you know it’s going to be good.

The Bay Area Robotics Symposium was live last week, but if you missed it, the livestream is archived here. The nice thing about watching the recording is that you can skip the boring talks, but since there are no boring talks, it doesn't really help that much.

[ BARS ]

This week’s CMU RI Seminar comes from Oliver Kroemer at CMU on “Learning Robot Manipulation Skills Through Experience and Generalization.”

In the future, robots could be used to take care of the elderly, perform household chores, and assist in hazardous situations. However, such applications require robots to manipulate objects in unstructured, everyday environments. Hence, in order to perform a wide range of tasks, robots will need to learn manipulation skills that generalize between different scenarios and objects. In this talk, Oliver Kroemer will present methods that he has developed for robots to learn versatile manipulation skills. The first part of the presentation will focus on learning manipulation skills through trial and error using task-specific reward functions. The presented methods are used to learn the desired trajectories and goal states of individual skills, as well as high-level policies for sequencing skills. The resulting skill sequences reflect the multi-modal structure of the manipulation tasks. The second part of the talk will focus on representations for generalizing skills between different scenarios. He will explain how robots can learn to adapt skills to the geometric features of the objects being manipulated, and will also discuss how the relevance of these features can be predicted from previously learned skills. The talk will conclude with a discussion of open challenges and future research directions for learning manipulation skills.

[ CMU RI ]
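For readers new to the idea, "learning through trial and error using task-specific reward functions" can be illustrated with a generic episodic policy search. The Python sketch below uses the cross-entropy method, a standard black-box optimizer, to tune a single skill parameter (a goal position) against a reward. It is not the method presented in the talk; the `reward` function, its 0.30 m target, and every parameter of the hypothetical `cross_entropy_search` function are invented for illustration.

```python
import random
import statistics

def reward(goal_x, target=0.30):
    """Hypothetical task-specific reward: goals closer to the target score higher."""
    return -(goal_x - target) ** 2

def cross_entropy_search(reward_fn, mean=0.0, std=0.5, samples=50,
                         elites=10, iterations=30, seed=0):
    """Generic episodic trial-and-error search over one skill parameter."""
    rng = random.Random(seed)
    for _ in range(iterations):
        # Trials: sample candidate parameters; scoring one stands in for
        # executing the skill once and observing its reward.
        candidates = [rng.gauss(mean, std) for _ in range(samples)]
        best = sorted(candidates, key=reward_fn, reverse=True)[:elites]
        # Refit the sampling distribution to the highest-reward trials.
        mean = statistics.fmean(best)
        std = max(statistics.pstdev(best), 1e-3)  # floor keeps some exploration
    return mean

learned_goal = cross_entropy_search(reward)
print(round(learned_goal, 2))
```

The cross-entropy method only needs reward values for each attempt, not gradients, which is why it is a convenient stand-in for trial-and-error learning; real manipulation skills would have many more parameters and far noisier, more expensive trials.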

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.