ICRA is almost over, and we hope you’ve been enjoying our coverage, which so far has featured robot moths, zipper actuators, machine learning, and duckies. We’ll have lots more from the conference over the next few weeks, but for you impatient types, we’re cramming Video Friday this week with a painstakingly curated selection of ICRA videos—emphasis on pain: there were over 400 videos!

We tried to include videos from many different areas of robotics: control, sensing, humanoids, actuators, exoskeletons, manipulators, prosthetics, aerial vehicles, grasping, AI, VR, haptics, vision, and microrobots. There’s even a cybernetic living tree that drives around on a mobile robotic base! We’re posting the abstracts along with the videos, but if you have any questions about these projects, let us know and we’ll get more details from the authors.

We’ll return to normal Video Friday next week. Have a great weekend everyone!

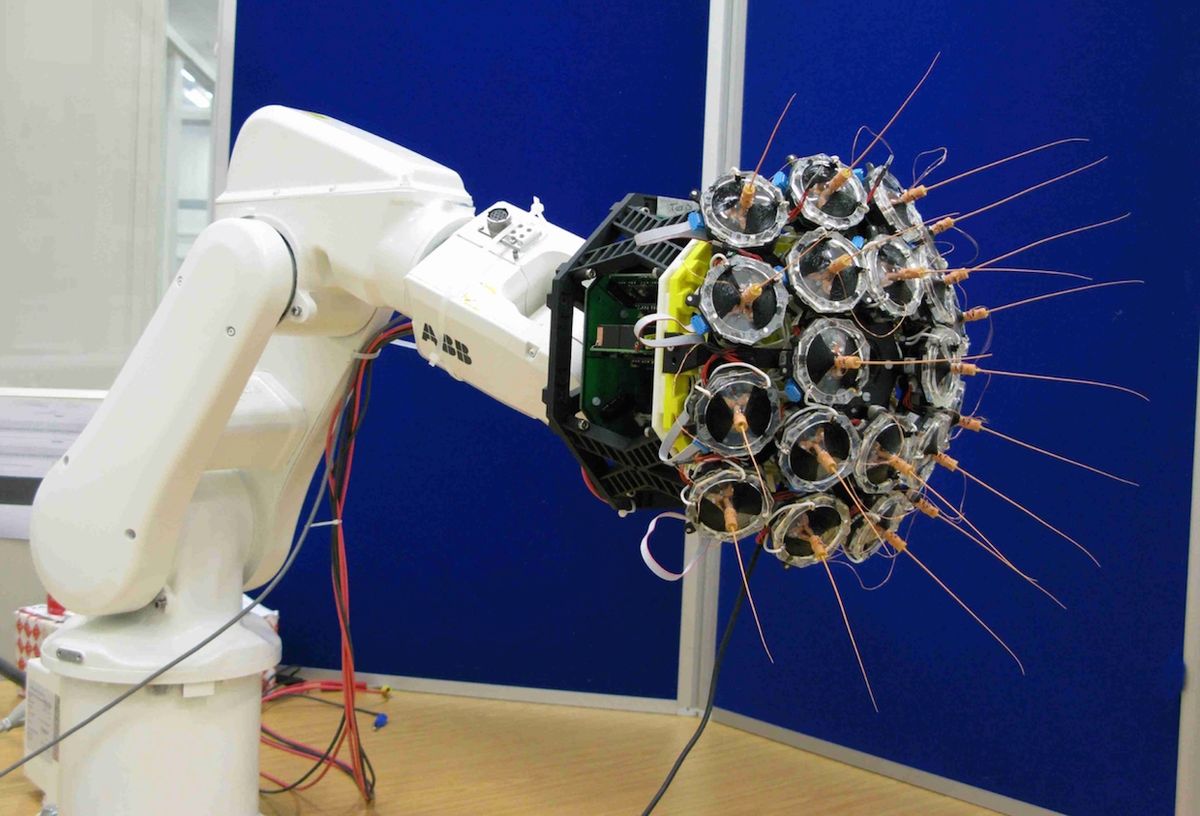

“Visual-Tactile Sensory Map Calibration of a Biomimetic Whiskered Robot,” by Tareq Assaf, Emma D. Wilson, Sean Anderson, Paul Dean, John Porrill, and Martin J. Pearson from the University of Bristol, University of the West of England, and University of Sheffield, U.K.

We present an adaptive filter model of cerebellar function applied to the calibration of a tactile sensory map to improve the accuracy of directed movements of a robotic manipulator. This is demonstrated using a platform called Bellabot that incorporates an array of biomimetic tactile whiskers, actuated using electro-active polymer artificial muscles, a camera to provide visual error feedback, and a standard industrial robotic manipulator. The algorithm learns to accommodate imperfections in the sensory map that may result from poor manufacturing tolerances or damage to the sensory array. Such an ability is an important prerequisite for robust tactile robotic systems operating in the real world for extended periods of time. In this work the sensory maps have been purposely distorted in order to evaluate the performance of the algorithm.
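To give a feel for how an error-driven adaptive filter can soak up map distortions, here's a minimal Python sketch. It is not the Bristol/Sheffield cerebellar model: it assumes a hypothetical 1-D map where each whisker has one stored position, a camera that reports the reaching error, and a plain least-mean-squares (LMS) update. The function `calibrate_map` and its parameters are illustrative names only.

```python
import random


def calibrate_map(raw_map, true_map, beta=0.2, trials=500, seed=0):
    """Learn per-whisker corrections to a distorted 1-D tactile map (hypothetical model).

    raw_map[i]  -- position the controller currently associates with whisker i
    true_map[i] -- where whisker i really points; observed only via camera error
    beta        -- LMS learning rate
    """
    rng = random.Random(seed)
    weights = [0.0] * len(raw_map)
    for _ in range(trials):
        i = rng.randrange(len(raw_map))      # one whisker is deflected per trial
        estimate = raw_map[i] + weights[i]   # corrected map drives the reach
        error = true_map[i] - estimate       # visual error measured by the camera
        weights[i] += beta * error           # LMS update; only the active input learns
    return weights


raw = [0.0, 1.0, 2.0, 3.0, 4.0]
true = [0.3, 0.8, 2.5, 2.9, 4.4]   # purposely distorted map, as in the experiments
corrections = calibrate_map(raw, true)
```

Because each whisker acts as a one-hot input here, the LMS rule reduces to a per-whisker update whose error shrinks by a factor of (1 - beta) every time that whisker is touched.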

“A Structurally Flexible Humanoid Spine based on a Tendon-Driven Elastic Continuum,” by Jens Reinecke, Bastian Deutschmann, and David Fehrenbach from the German Aerospace Center (DLR).

When working in unknown environments, it is beneficial for robots to be mechanically robust to impacts. To obtain such robustness while maintaining human-like performance, strength, workspace, and size, especially in the upper body, an elastic backbone approach is presented and discussed. Whereas the human spine is stabilized by ligaments, intervertebral discs, and muscles, we use a continuum mechanism based on silicone and actuated by tendons. The work presents the development of such a mechanism, which could be used as either a neck or a torso, but concentrates on the cervical part (neck). To prove the functionality of the proposed concept, a planar setup was designed, and experimental data regarding motion capabilities, robustness, and dynamics are presented. With that knowledge, a modular multi-DOF prototype was built that can easily be equipped with different tendon routings and elastic continuum shapes. The results of that final setup will help mechanical designers choose a suitable solution for the robotic spine and provide a test bed for developing control strategies for such mechanisms.

“Let’s All Pull Together: Principles for Sharing Large Loads in Microrobot Teams,” by David L. Christensen, Srinivasan A. Suresh, Katie Hahm, and Mark R. Cutkosky from Stanford University.

We present a simple statistical model to predict the maximum pulling force available from robot teams. The expected performance is a function of interactions between each robot and the ground (e.g., whether running or walking). We confirm the model with experiments involving impulsive bristlebots, small walking and running hexapods, and 17-gram μTugs that employ adhesion instead of friction. With attention to load sharing, each μTug can operate at its individual limit, so that a team of six pulls with forces exceeding 200 N.
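Why does load sharing matter so much? A toy Python comparison makes the point. This is not the Stanford team's statistical model; it simply assumes that each robot has a random individual force limit and contrasts two bounding cases: an even load split, where the whole team slips when its weakest member does, and ideal sharing, where every robot is loaded to its own limit. All names and numbers are hypothetical.

```python
import random
import statistics


def team_force_equal_split(limits):
    # Load split evenly: the team slips as soon as its weakest member does.
    return len(limits) * min(limits)


def team_force_ideal_sharing(limits):
    # Each robot is loaded to its own limit (the goal of careful load sharing).
    return sum(limits)


def expected_team_force(n, mean, sd, trials=10_000, seed=1):
    """Monte Carlo estimate of both bounds for n robots with Gaussian force limits."""
    rng = random.Random(seed)
    equal, ideal = [], []
    for _ in range(trials):
        limits = [max(0.0, rng.gauss(mean, sd)) for _ in range(n)]
        equal.append(team_force_equal_split(limits))
        ideal.append(team_force_ideal_sharing(limits))
    return statistics.mean(equal), statistics.mean(ideal)


even, shared = expected_team_force(6, 36.0, 5.0)  # hypothetical per-robot limits in N
```

The gap between the two estimates grows with the spread of individual limits, which is why letting each robot work at its own limit pays off.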

“Development of a Polymer-Based Tendon-Driven Wearable Robotic Hand,” by Brian Byunghyun Kang, Haemin Lee, Hyunki In, Useok Jeong, Jinwon Chung, and Kyu-Jin Cho from Seoul National University, South Korea.

This paper presents the development of a polymer-based tendon-driven wearable robotic hand, Exo-Glove Poly. Unlike the previously developed Exo-Glove, a fabric-based tendon-driven wearable robotic hand, Exo-Glove Poly was developed using silicone to allow for sanitization between users in multiple-user environments such as hospitals. Exo-Glove Poly uses two motors, one for the thumb and the other for the index and middle fingers, along with an under-actuation mechanism to grasp various objects. In order to realize Exo-Glove Poly, design features and fabrication processes were developed to permit adjustment to different hand sizes, to protect users from injury, to enable ventilation, and to embed Teflon tubes for the wire paths. The mechanical properties of Exo-Glove Poly were verified with a healthy subject through a wrap grasp experiment using a mat-type pressure sensor and an under-actuation performance experiment with a specialized test set-up. Finally, the performance of Exo-Glove Poly in grasping objects of various shapes was verified, including objects that require under-actuation.

“An Approach to Combine Balancing with Hierarchical Whole-Body Control for Legged Humanoid Robots,” by Bernd Henze, Alexander Dietrich, and Christian Ott from the German Aerospace Center (DLR).

Legged humanoid robots need to be able to perform a variety of tasks, including interaction with the environment, while maintaining balance. The external wrenches that arise from physical interaction must be taken into account to achieve robust and compliant balancing. This work presents a new control approach for combining multi-objective hierarchical control based on null space projections with passivity-based multi-contact balancing for legged humanoid robots. To achieve proper balancing, all task forces/torques are first distributed to the end effectors and then mapped into joint space, taking the task hierarchy into account. The control approach is evaluated both in simulation and in experiments with the humanoid robot TORO.
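The core trick of null space projection fits in a few lines. The pure-Python sketch below is deliberately simplified: it assumes two scalar tasks on a 3-joint system, uses the unweighted pseudo-inverse, and ignores dynamics and the balancing part entirely. DLR's controller on TORO is more involved, and the function names here are hypothetical. The idea is that the secondary task's torque is filtered through N = I - J1⁺J1 so that it produces no force along the primary task.

```python
def pinv_row(j):
    """Moore-Penrose pseudo-inverse of a 1 x n row Jacobian (returned as a column list)."""
    s = sum(x * x for x in j)
    return [x / s for x in j]


def null_space_projector(j):
    """N = I - J^+ J for a single-row Jacobian J; the result is n x n and symmetric."""
    jp = pinv_row(j)
    n = len(j)
    return [[(1.0 if r == c else 0.0) - jp[r] * j[c] for c in range(n)] for r in range(n)]


def hierarchical_torque(j1, f1, j2, f2):
    """tau = J1^T f1 + N1^T J2^T f2 for a primary task (j1, f1) and a secondary task (j2, f2)."""
    n = len(j1)
    n1 = null_space_projector(j1)
    tau2 = [x * f2 for x in j2]  # J2^T f2, the unfiltered secondary torque
    projected = [sum(n1[c][r] * tau2[c] for c in range(n)) for r in range(n)]  # N1^T tau2
    tau = [a * f1 + b for a, b in zip(j1, projected)]
    return tau, projected


tau, secondary = hierarchical_torque([1.0, 2.0, 3.0], 5.0, [0.0, 1.0, -1.0], 2.0)
```

Because J1 · N1 = 0, the projected secondary torque leaves the primary task untouched, which is what makes the hierarchy strict.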

“Versatile Aerial Grasping Using Self-Sealing Suction,” by Chad C. Kessens, Justin Thomas, Jaydev P. Desai, and Vijay Kumar from the University of Maryland, U.S. Army Research Laboratory, and University of Pennsylvania.

This paper addresses the challenge of versatile aerial grasping utilizing suction while considering the limitations of an on-board vacuum pump. It builds upon our patented self-sealing suction cup technology, which allows the exertion of local pulling contact forces for grasping a wide range of objects. The novel self-sealing nature of the cups enables the gripper to be versatile, employing just one, several, or all of the cups for the grasp in a passively actuated manner. We begin by describing the design of the system and its components. Because aerial applications are typically sensitive to weight constraints, we used a micro-pump vacuum generator, which introduced new challenges for our system. To investigate and overcome those challenges, we tested the relationship between the cup’s design and its leakage, activation force, and maximum holding force. In addition, we tested the performance of the individual gripper components, the aerial vehicle’s ability to transfer force to the cups, the system’s ability to grip inclined surfaces, and finally the vehicle’s ability to grasp a multitude of objects using various numbers of cups. This included the grasping of one object, followed by the grasping of a second object while still holding the first object.

“TransHumUs: A Poetic Experience in Mobile Robotics,” by Guilhem Saurel, Michel Taïx, and Jean-Paul Laumond from LAAS-CNRS.

TransHumUs is an artistic work recently exhibited at the 56th Venice Biennale. The work aims at freeing trees from their roots. How can this poetic ambition be translated into technological terms? This paper reports on the setup and implementation of the project. It shows how state-of-the-art mobile robotics technology can contribute to the development of contemporary art. The challenge was to design original mobile platforms carrying loads of three tonnes while moving noiselessly, at a pace set by the trees' metabolism, in operational spaces populated by visitors.

“What Lies Behind: Recovering Hidden Shape in Dense Mapping,” by Michael Tanner, Pedro Pinies, Lina Maria Paz, and Paul Newman from the University of Oxford, U.K.

In mobile robotics applications, generation of accurate static maps is encumbered by the presence of ephemeral objects such as vehicles, pedestrians, or bicycles. We propose a method to process a sequence of laser point clouds and back-fill dense surfaces into gaps caused by removing objects from the scene, a valuable tool in scenarios where resource constraints permit only one mapping pass in a particular region. Our method processes laser scans in a three-dimensional voxel grid using the Truncated Signed Distance Function (TSDF) and then uses a Total Variation (TV) regulariser with a Kernel Conditional Density Estimation (KCDE) “soft” data term to interpolate missing surfaces. Using four scenarios captured with a push-broom 2D laser, our technique infills approximately 20 m² of missing surface area for each removed object. Our reconstruction’s median error ranges from 5.64 cm to 9.24 cm, with standard deviations between 4.57 cm and 6.08 cm.
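For readers new to TSDFs, here's what the fusion step looks like along a single sensor ray, as a minimal Python sketch. It covers only the standard running-average TSDF update and zero-crossing surface extraction in 1-D. The Oxford method works on a 3-D voxel grid and adds the TV/KCDE infilling, which is not shown. Voxel size, truncation distance, and the function names are hypothetical.

```python
def integrate_ray(tsdf, weights, voxel_size, depth, trunc):
    """Fuse one range measurement into a 1-D column of voxels along the sensor ray."""
    for i in range(len(tsdf)):
        x = (i + 0.5) * voxel_size        # voxel-centre distance from the sensor
        sdf = depth - x                   # positive in front of the surface, negative behind
        if sdf < -trunc:
            continue                      # too far behind the surface: unobserved
        d = min(1.0, sdf / trunc)         # normalised, truncated signed distance
        w = weights[i]
        tsdf[i] = (tsdf[i] * w + d) / (w + 1)  # running weighted average
        weights[i] = w + 1


def zero_crossing(tsdf, weights, voxel_size):
    """Linearly interpolate the first +/- sign change to locate the surface."""
    for i in range(len(tsdf) - 1):
        if weights[i] and weights[i + 1] and tsdf[i] > 0 >= tsdf[i + 1]:
            t = tsdf[i] / (tsdf[i] - tsdf[i + 1])
            return (i + 0.5 + t) * voxel_size
    return None


tsdf, weights = [0.0] * 20, [0] * 20
integrate_ray(tsdf, weights, 0.1, 1.00, 0.3)   # two noisy scans of a wall at about 1 m
integrate_ray(tsdf, weights, 0.1, 1.04, 0.3)
surface = zero_crossing(tsdf, weights, 0.1)
```

Averaging the two scans places the surface at about 1.02 m; voxels that no scan ever reached keep zero weight, and those are exactly the gaps an infilling step would have to fill.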

“Integrated Control of a Multi-fingered Hand and Arm Using Proximity Sensors on the Fingertips,” by Keisuke Koyama, Yosuke Suzuki, Aiguo Ming, and Makoto Shimojo from the University of Electro-Communications, Japan.

In this study, we propose integrated control of a robotic hand and arm using only proximity sensing from the fingertips. An integrated control scheme for the fingers and the arm enables quick control of the arm's position and posture by placing the fingertips adjacent to the surface of the object to be grasped. The arm control scheme corrects errors in hand position and posture that could not be corrected by finger motions alone, allowing the fingers to grasp an object with a laterally symmetric grasp. This can prevent grasp failures such as a finger pushing the object out of the hand or knocking it over. The proposed control of the arm and hand corrected position errors on the order of several centimeters. For example, when an object on a workbench is in an uncertain position relative to the robot, an inexpensive optical sensor such as a Kinect, which provides only coarse image data, would be sufficient for grasping it.
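The idea of centering the hand using fingertip proximity readings can be illustrated with simple geometry. This Python sketch is not the authors' controller: it assumes an idealized 1-D setup with two opposing fingertips, perfect distance readings within range, and a proportional arm correction. `lateral_offset` and `center_hand` are hypothetical names.

```python
def lateral_offset(d_left, d_right):
    # With fingertips at h - s and h + s and an object of half-width r centred at o:
    # d_left = (o - r) - (h - s), d_right = (h + s) - (o + r)  =>  (d_left - d_right) / 2 = o - h
    return (d_left - d_right) / 2.0


def center_hand(hand_x, obj_x, half_span, obj_half_width, gain=0.5, steps=20):
    """Repeatedly move the arm by a fraction of the sensed offset until the grasp is symmetric."""
    for _ in range(steps):
        d_left = (obj_x - obj_half_width) - (hand_x - half_span)    # simulated left reading
        d_right = (hand_x + half_span) - (obj_x + obj_half_width)   # simulated right reading
        hand_x += gain * lateral_offset(d_left, d_right)
    return hand_x


final_x = center_hand(0.0, 0.03, 0.05, 0.02)  # hand starts 3 cm off the object's centre
```

Once the two readings are equal the fingers can close symmetrically, which is the condition the abstract says prevents a finger from pushing the object away.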

“Inverse Kinematics and Design of a Novel 6-DoF Handheld Robot Arm,” by Austin Gregg-Smith and Walterio W. Mayol-Cuevas from the University of Bristol, U.K.

We present a novel 6-DoF cable-driven manipulator for handheld robotic tasks. Based on a coupled tendon approach, the arm is optimized to maximize movement speed and configuration space while reducing its total mass. We propose a space carving approach to design optimal link geometry, maximizing structural strength and joint limits while minimizing link mass. The design improves on similar non-handheld tendon-driven manipulators and reduces the required number of actuators to one per DoF. As the manipulator has one redundant joint, we present a 5-DoF inverse kinematics solution for the end effector pose. The inverse kinematics is solved by splitting the 6-DoF problem into two coupled 3-DoF problems and merging their results. A method for gracefully degrading the output of the inverse kinematics is described for cases where the desired end effector pose is outside the configuration space. This is useful in settings where the user is in the control loop and can help the robot get closer to the desired location. The design of the handheld robot is offered as open source. While our results and tools are aimed at handheld robotics, the design and approach are also useful for non-handheld applications.

“A Lightweight, Low-power Electroadhesive Clutch and Spring for Exoskeleton Actuation,” by Stuart Diller, Carmel Majidi, and Steven H. Collins from Carnegie Mellon University.

Clutches can be used to enhance the functionality of springs or actuators in robotic devices. Here we describe a lightweight, low-power clutch used to control spring engagement in an ankle exoskeleton. The clutch is based on electrostatic adhesion between thin electrode sheets coated with a dielectric material. Each electrode pair weighs 1.5 g, bears up to 100 N, and changes states in less than 30 ms. We placed clutches in series with elastomer springs to allow control of spring engagement, and placed several clutched springs in parallel to discretely adjust stiffness. By engaging different numbers of springs, the system produced six different levels of stiffness. Force at peak displacement ranged from 14 to 501 N, and the device returned 95% of stored mechanical energy. Each clutched spring element weighed 26 g. We attached one clutched spring to an ankle exoskeleton and used it to engage the spring only while the foot was on the ground during 150 consecutive walking steps. Peak torque was 7.3 N·m on an average step, and the device consumed 0.6 mW of electricity. Compared to other electrically-controllable clutches, this approach results in three times higher torque density and two orders of magnitude lower power consumption per unit torque. We anticipate this technology will be incorporated into exoskeletons that tune stiffness online and into new actuator designs that utilize many lightweight, low-power clutches acting in concert.
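Springs in parallel add their stiffnesses, so a bank of clutched springs acts like a discrete stiffness selector. The short Python sketch below enumerates the settings reachable by clutching springs in or out. The stiffness values are hypothetical (the abstract doesn't give them): with n identical springs you get n + 1 levels, counting "none engaged", while binary-weighted springs would give 2^n.

```python
from itertools import combinations


def parallel_stiffness(springs, engaged):
    """Springs in parallel add stiffness; only clutched-in (engaged) springs count."""
    return sum(k for k, on in zip(springs, engaged) if on)


def stiffness_levels(springs):
    """All distinct total stiffnesses reachable by engaging subsets of the springs."""
    levels = set()
    for r in range(len(springs) + 1):
        for subset in combinations(springs, r):
            levels.add(sum(subset))
    return sorted(levels)


identical = stiffness_levels([1, 1, 1, 1, 1])  # five identical springs, unit stiffness
binary = stiffness_levels([1, 2, 4])           # three binary-weighted springs
```

The trade-off is between fewer, cleverly sized springs (more levels per gram) and many identical ones (simpler control and graceful degradation if one clutch fails).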

“Differential Feed Control Applied to Corner Matching in Automated Sewing,” by Johannes Schrimpf and Geir Mathisen from the Norwegian University of Science and Technology, SINTEF Raufoss Manufacturing, and Applied Cybernetics, Norway.

This paper presents a new method for independent feed control in an automated sewing cell. This is important for matching the corners of the parts, as well as for compensating for uncertain material characteristics and variations in part length. In this method, the feed speed for the two parts is controlled independently, based on measurements of the endpoints of the parts, while keeping an equal sewing force in both parts. Different strategies for correcting errors are presented, and experiments are shown to evaluate them. Possibilities for using the methods to match reference points during sewing are discussed.
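Here's the gist of differential feed for corner matching, reduced to a toy Python simulation. It is not one of the paper's strategies: it assumes we can measure the remaining length of each ply, applies a simple proportional correction to the two feed speeds, and ignores the sewing-force constraint. All names and numbers are hypothetical.

```python
def feed_speeds(remaining_top, remaining_bottom, v_nominal, kp):
    """Proportional differential feed: speed up the ply with more length left to sew."""
    correction = kp * (remaining_top - remaining_bottom)
    return v_nominal + correction, v_nominal - correction


def simulate(top, bottom, v_nominal=10.0, kp=0.5, dt=0.1):
    """Feed both plies until the first one runs out; return the leftover length mismatch."""
    while top > 0 and bottom > 0:
        vt, vb = feed_speeds(top, bottom, v_nominal, kp)
        top -= vt * dt
        bottom -= vb * dt
    return top - bottom


with_control = simulate(105.0, 100.0)          # plies differ by 5 mm
without_control = simulate(105.0, 100.0, kp=0.0)
```

With kp = 0 the 5 mm length difference survives to the corner. With proportional correction the mismatch shrinks by a factor of (1 - 2·kp·dt) every step, so the corners arrive together.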

“Investigating Spatial Guidance for a Cooperative Handheld Robot,” by Austin Gregg-Smith and Walterio W. Mayol-Cuevas from the University of Bristol, U.K.

In this paper we address the question of how to provide feedback to guide users of a handheld robotic device when performing a spatial exploration task. We consider various feedback methods for communicating a five-degree-of-freedom target pose to a user, including a stereoscopic VR display, a monocular see-through AR display, and a 2D screen, as well as robot arm gesturing. The spatial exploration task with the handheld robot arm was compared against a baseline of a passive handheld wand. We compared the performance of each method with 21 volunteers using a repeated-measures ANOVA experimental design, and recorded users’ opinions via a NASA Task Load Index survey. The robot-assisted reaching feedback methods significantly outperform manual reaching with the wand for all three visual feedback methods. However, there is little difference among the three visual feedback methods when using the robot. The completion time of the task varies with changing difficulty when using the wand but remains stable when assisted by the robot. These results convey useful information for the design of cooperative handheld robots.

“Supersizing Self-supervision: Learning to Grasp from 50K Tries and 700 Robot Hours,” by Lerrel Pinto and Abhinav Gupta from Carnegie Mellon University.

Current model-free learning-based robot grasping approaches exploit human-labeled datasets for training the models. However, there are two problems with such a methodology: (a) since each object can be grasped in multiple ways, manually labeling grasp locations is not a trivial task; (b) human labeling is biased by semantics. While there have been attempts to train robots using trial-and-error experiments, the amount of data used in such experiments remains substantially low, which makes the learner prone to overfitting. In this paper, we increase the available training data to 40 times more than prior work, leading to a dataset of 50K data points collected over 700 hours of robot grasping attempts. This allows us to train a Convolutional Neural Network (CNN) for the task of predicting grasp locations without severe overfitting. In our formulation, we recast the regression problem as an 18-way binary classification over image patches. We also present a multi-stage learning approach where a CNN trained in one stage is used to collect hard negatives in subsequent stages. Our experiments clearly show the benefit of using large-scale datasets (and multi-stage training) for the task of grasping. We also compare to several baselines and show state-of-the-art performance on generalization to unseen objects for grasping.
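What does an "18-way binary classification" look like in practice? A plausible reading, sketched in Python under stated assumptions: the gripper angle (symmetric modulo 180 degrees) is split into 18 bins of 10 degrees each, each bin gets its own success/failure output, and a single grasp attempt supervises only the bin it actually tried. The bin width and the masking scheme are our assumptions, and the helper names are hypothetical; the CNN itself is omitted.

```python
import math

NUM_BINS = 18
BIN_WIDTH = 180.0 / NUM_BINS  # assumed 10 degrees per bin; grasp angles repeat every 180 deg


def angle_to_bin(theta_deg):
    """Map a gripper angle in degrees to one of the 18 orientation bins."""
    return int((theta_deg % 180.0) // BIN_WIDTH)


def make_target(theta_deg, success):
    """One trial labels only its own bin; the other 17 outputs are masked out of the loss."""
    labels = [0.0] * NUM_BINS
    mask = [0.0] * NUM_BINS
    b = angle_to_bin(theta_deg)
    labels[b] = 1.0 if success else 0.0
    mask[b] = 1.0
    return labels, mask


def masked_bce(probs, labels, mask, eps=1e-7):
    """Binary cross-entropy summed over the labelled bins only."""
    total = 0.0
    for p, y, m in zip(probs, labels, mask):
        if m:
            p = min(max(p, eps), 1.0 - eps)  # guard against log(0)
            total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total


labels, mask = make_target(95.0, success=True)   # a successful grasp at 95 degrees
loss = masked_bce([0.5] * NUM_BINS, labels, mask)
```

Masking the untried bins matters: a failed grasp at one angle says nothing about whether another angle at the same location would have worked.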

“Closed-Loop Shape Control of a Haptic Jamming Deformable Surface,” by Andrew A. Stanley, Kenji Hata, and Allison M. Okamura from Stanford University.

A Haptic Jamming tactile display, consisting of an array of thin particle jamming cells, can change its shape and mechanical properties simultaneously through a combination of vacuuming individual cells, pressurizing the air chamber beneath the surface, and pinning the nodes between the cells at various heights. In previous particle jamming devices for haptics and soft robotics, shape has typically been commanded open-loop or manipulated directly by a human user. A new algorithm was designed for the three types of actuation inputs for a Haptic Jamming surface, using the depth map provided by an RGB-D sensor as shape feedback for closed-loop control to match a desired surface input. To test the closed-loop control accuracy of the system, a mass-spring model of the Haptic Jamming device generated three unique surface shapes as desired inputs into the controller with a mean height range of 25.1 mm. The average correlation coefficient between the desired input shape and the experimental output generated by the controller on the actual device across four trials for each shape was 0.88, with an average height error of 2.7 mm. When the desired input surface is generated from a 3D model of an object that a Haptic Jamming surface cannot necessarily recreate, the difference between the input and the output increases substantially. However, simulation of a larger array suggests that a Haptic Jamming surface can provide a compelling match for these more complicated shapes.
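The two accuracy numbers in the abstract, a correlation coefficient and an average height error, are easy to compute from a pair of height maps. This Python sketch assumes both maps are flattened to lists of heights in millimeters at matching sample points, and it takes "average height error" to mean mean absolute error; the paper's exact definitions may differ, and the function names are ours.

```python
import math


def pearson(a, b):
    """Pearson correlation between desired and measured heights (flattened height maps)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def mean_abs_error(a, b):
    """Average absolute height difference, in the same units as the inputs."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)
```

Correlation rewards getting the shape right even if it is offset or scaled, while the height error penalizes any absolute mismatch, which is why reporting both is informative.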

“SoRo-Track: A Two-Axis Soft Robotic Platform for Solar Tracking and Building-Integrated Photovoltaic Applications,” by Bratislav Svetozarevic, Zoltan Nagy, Johannes Hofer, Dominic Jacob, Moritz Begle, Eleni Chatzi, and Arno Schlueter from ETH Zurich.

We present SoRo-Track, a two-axis soft robotic actuator (SRA) for solar tracking and building-integrated photovoltaic applications. SRAs are gaining increasing popularity compared to traditional actuators, such as DC motors and hydraulic or pneumatic pistons, due to their inherent compliance, low morphological complexity, high power-to-weight ratio, resilience to external shocks and adverse environmental conditions, design flexibility, ease of fabrication, and low costs. We present the design, modelling and experimental characterisation of SoRo-Track. Finally, we demonstrate the suitability of SoRo-Track for solar tracking applications, which makes it a viable component for dynamic building facades.

“Saccade Mirror 3: High-speed Gaze Controller With Ultra Wide Gaze Control Range Using Triple Rotational Mirrors,” by Kazuhisa Iida and Hiromasa Oku from Gunma University, Japan.

This paper reports the prototype of a new optical high-speed gaze controller, named Saccade Mirror 3, that overcomes the limited gaze control range (∼60 deg) of our previously proposed high-speed gaze controller, called Saccade Mirror. Saccade Mirror 3 is based on three automated rotational mirrors, and this gaze control mechanism can achieve an ultrawide gaze control range of 360 deg in theory. A prototype was developed based on this mechanism. A gaze control range of over 260 degrees in the pan direction and a high-speed response of about 10 ms were confirmed. Furthermore, the prototype was applied to high-speed visual tracking coupled with a 1000 fps high-speed vision system. A visual tracking algorithm was developed for this mechanism, and high-speed tracking of a table tennis ball in play and a flying drone was successfully demonstrated.

“Design, Modeling and Control of an Omni-Directional Aerial Vehicle,” by Dario Brescianini and Raffaello D’Andrea from ETH Zurich.

In this paper we present the design and control of a novel six degrees-of-freedom aerial vehicle. Based on a static force and torque analysis for generic actuator configurations, we derive an eight-rotor configuration that maximizes the vehicle’s agility in any direction. The proposed vehicle design possesses full force and torque authority in all three dimensions. A control strategy that allows for exploiting the vehicle’s decoupled translational and rotational dynamics is introduced. A prototype of the proposed vehicle design was built using reversible motor-propeller actuators and is capable of flying in any orientation. Preliminary experimental results demonstrate the feasibility of the novel design and the capabilities of the vehicle.

“Five-Degree-of-Freedom Magnetic Control of Micro-Robots Using Rotating Permanent Magnets,” by Patrick Ryan and Eric Diller from the University of Toronto.

Recent work in magnetically-actuated micro-scale robots for biomedical or microfluidic applications has resulted in electromagnetic actuation systems which can command precise five-degree-of-freedom control of simple magnetic devices at the sub-millimeter scale in remote environments. This paper presents a new type of actuation system which uses an array of large, rotatable, permanent magnets to generate the same level of control over untethered micro-robotic systems with the potential for increased field and gradient strength and minimal heat generation. We show that the system can produce any field or field gradient at the workspace (including a value of zero). In contrast with previous permanent magnet actuation systems, the system proposed here accomplishes this without any hazardous translational motion of the control magnets, resulting in a simple, safe, and inexpensive system. The proof-of-concept prototype system presented, with eight permanent magnets, can create fields and gradients in any direction with strength of 30 mT and 0.83 T·m⁻¹, respectively. The effectiveness of the system is shown through characterization and feedback control of a 250 μm micro-magnet in a path-following task with average accuracy of 39 μm.

“Development of a Humorous Humanoid Robot Capable of Quick-and-Wide Arm Motion,” by T. Kishi, S. Shimomura, H. Futaki, H. Yanagino, M. Yahara, S. Cosentino, T. Nozawa, K. Hashimoto, and A. Takanishi from Waseda University and Mejiro University, Japan.

This paper describes the development of a humanoid arm with quick-and-wide motion capability for making humans laugh. Laughter is attracting research attention because it enhances health by treating or preventing mental illness. However, laughter has not been used effectively in healthcare because its mechanism is complicated and not yet fully understood. Developing a robot capable of making humans laugh will help clarify the mechanism by which humans experience humor from stimuli. Nonverbal funny expressions have the potential to make humans laugh across cultural and linguistic differences. In particular, we focused on the exaggerated arm motion widely used in slapstick and silent comedy films. To develop a humanoid robot that can perform this type of movement, the required specifications were calculated from slapstick skits performed by human comedians. To meet these specifications, new arms for the humanoid robot were developed with a novel mechanism that includes lightweight joints driven by a flexible shaft and high-output joints driven by a twin-motor mechanism. The results of experimental evaluation show that the quick-and-wide motion performed by the developed hardware is effective at making humans laugh.

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.