Creating your virtual clone isn’t as difficult as you’d think.

Epic Games recently introduced Mesh to MetaHuman, a framework for creating photorealistic human characters. It lets creators sculpt an imported mesh to create a convincing character in less than an hour.

“It’s incredibly simple compared to a lot of other tools,” says Stu Richards (a.k.a. Meta Mike), partner success lead at GigLabs and cofounder of Versed. “I’d compare it to a character creator in a game.”

Creating your clone

Photorealistic characters remain the holy grail of 3D graphics. The task is difficult because of the “uncanny valley,” the hypothesis that humanlike characters become less appealing as they approach, but fall short of, a realistic representation. Characters in big-budget games or animated films take months (if not years) of effort and often rely on detailed scans of real human models captured with expensive, specialized equipment.

Mesh to MetaHuman lowers this barrier to entry. Richards used his iPhone to scan his face and create his own MetaHuman avatar. “After, I think, about 40 photos, it put together all the different angles, and creates a 3D model of my face,” he says. “You take that mesh, bring it into Unreal Engine…and overlay a MetaHuman head onto that mesh.”

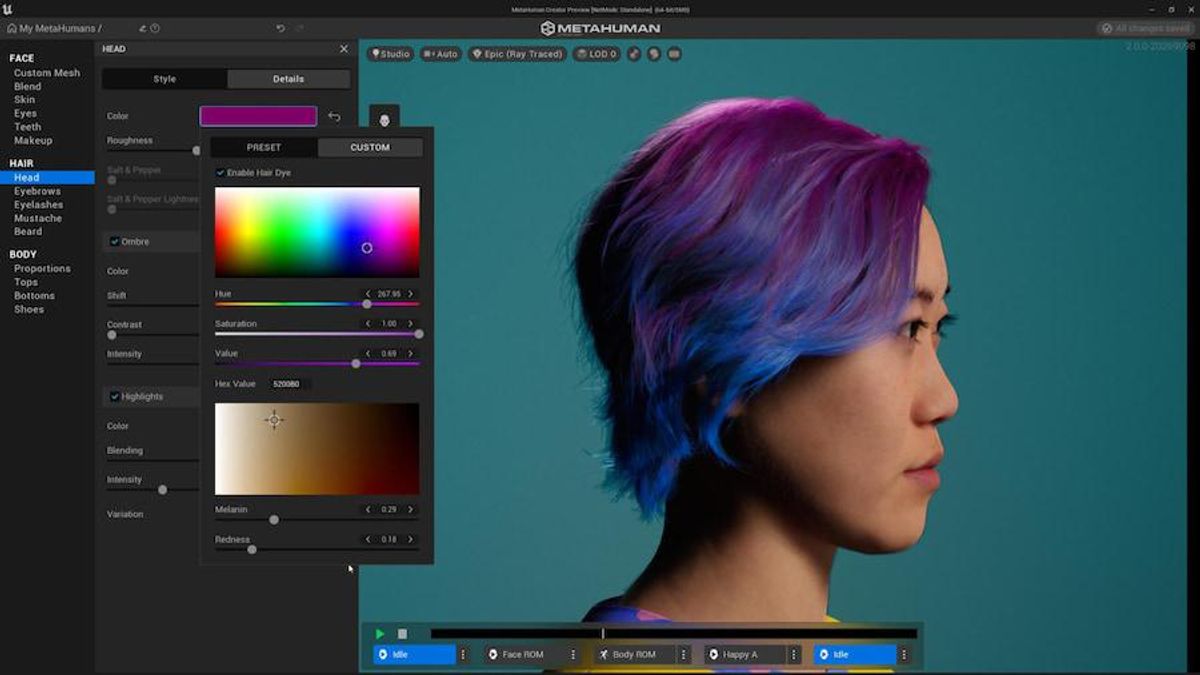

The mesh begins as an untextured, gray surface, but MetaHuman’s interface provides numerous options that can be changed with a click. Creators can tweak physique, head and facial hair, eyebrows, skin tone, and even the look of pores and opacity of skin to create a convincing virtual clone—or model an idealized avatar.

MetaHuman also rigs the mesh for animation. “You can select different poses and different animations,” says Richards. “But when you bring that back into Unreal, you have a lot of flexibility. You can create your own animation sets, create full-on controller mapping.”

Creators can even use Live Link, an Unreal Engine plug-in, to capture facial expressions in real time on a smartphone or tablet and stream them to a MetaHuman character.

Video: Live Link Face Tutorial with New Metahumans in Unreal Engine 4 (www.youtube.com)

While Mesh to MetaHuman is more approachable than previous workflows, Richards cautions that some familiarity with 3D modeling and animation is required for the best results. Creators can expect minor errors in the mesh captured by a smartphone or tablet that must be fixed in 3D-modeling software. And while MetaHuman’s interface is simple, Unreal Engine 5 remains complex.

“From a user perspective, I think that at this point in time, having something a bit more lightweight, with lower fidelity, and more interoperable, is what’s going to resonate with people,” says Richards. “Especially in the NFT and web3 world.”

MetaHuman beyond the metaverse

Contrary to its name, MetaHuman is not a tool exclusively for metaverse avatars. In fact, that’s not even its primary use case (for now). Rather, MetaHuman is, according to Epic Games, ideal for creators working on smaller projects with a modest budget, such as independent games and short films.

Justin Vu, an animator and 3D generalist specializing in filmmaking with Maya and Unreal Editor, is one such creator. He put MetaHuman to work in a series of promotional shorts from Allstate Insurance Company called The Future of Protection. Vu was able to use MetaHuman despite having little prior experience with Unreal Engine.

“The barrier of entry to getting into the tool itself is almost zero,” says Vu. “So long as you have a computer that runs a modern video game, you can get started.”

The tool was especially useful during the peak of COVID. The difficulty of shooting live action amid lockdowns and travel restrictions made animation appealing. MetaHuman helped Vu create convincing animated characters in a fraction of the time that might otherwise be required.

“What’s great about MetaHuman is that it generates high-fidelity models, which can be used with facial recording capture,” says Vu. “It’s very convincing to the average person and does a lot to cross the uncanny valley.”

Animators can conduct a virtual casting by quickly iterating on characters that differ in age, hairstyle, height, facial structure, and physique. The tool’s automatic animation rigging is especially useful, as Vu says manual rigging of a high-fidelity 3D character can take “a few weeks, if not a few months.” Quick rigging helps animators try poses and expressions before deciding which character to use for a project.

Despite these strengths, Vu shared Richards’s caution that MetaHuman’s approachability has limits. While creating a character is relatively simple, character models and animations require tweaks for best results. He also notes MetaHuman has a limited selection of wardrobe options. This can be changed once a character is imported to Unreal Engine but, again, requires expertise in traditional 3D modeling and animation.

“While the tools are accessible and easy to start up, it really requires the hand of a good animator, a good actor, or good director, to drag it out of uncanny valley and make it convincing,” says Vu.

This sets the stage for competition in the space as alternative tools become more capable. Unity purchased Ziva Dynamics, a VFX studio known for its work creating lifelike characters, in January 2022. Other alternatives, like Animaze and Ready Player Me, lack realism but don’t require experience with 3D modeling or animation for usable results. These tools are popular with VTubers and fans of metaverse social platforms like VRChat.

MetaHuman leads the effort to cross the uncanny valley—but it’s still anyone’s race.

Correction (18 July 2022): This story was updated to remove reference to a short film Justin Vu worked on, in which he used Unreal Engine but did not use MetaHuman as was originally reported.

Matthew S. Smith is a freelance consumer-tech journalist. An avid gamer, he is a former staff editor at Digital Trends and is particularly fond of wearables, e-bikes, all things smartphone, and CES, which he has attended every year since 2009.